I worked with dozens of companies migrating their legacy data warehouses or analytical databases to the cloud. I saw the difficulty to let go of the monolithic thinking and design and to benefit from the modern cloud architecture fully. In this article, I’ll share my pattern for a scalable, flexible, and cost-effective data analytics platform in the AWS cloud, which was successfully implemented in these companies.

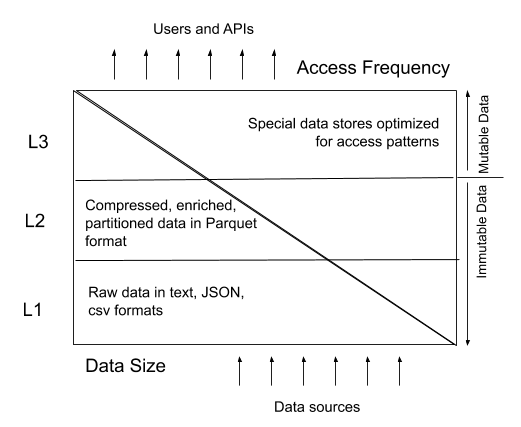

TL;DR, design the data platform with three layers, L1 with raw files data, L2 with optimized files data, and L3 with cache in mind. Ingest the data as it comes into L1, and transform each use-case independently into L2, and when a specific access pattern demands it, cache some of the data into a dedicated data store.

Mistake 1: “One Data Store To Rule Them All”

The main difference that companies are facing when modernizing their existing data analytics platform is giving up on a single database that was used in their legacy system. It is hard to give on it after the massive investment of building it and operating it. I met companies that spent millions of dollars and hundreds of years of development to built their data warehouse and the many ETL processes, stored procedures, and reporting tools that are part of it. It is also hard to give up on the benefits that a single tool is giving in terms of “a single neck to choke,” or answers to “where is the (analytical) data that I need?”.

A few days ago, Amazon.com announced that they finally shut down the last Oracle database in their retail business. It was a long process that was running for more than four years. My first role as a solutions architect for Amazon.com was to help with the design of the migration away from relational databases in general and Oracle specifically. I worked with dozens of teams across the business to re-design their systems from the classical relational to the more scalable and flexible newer data stores. The goal was to shift to NoSQL (DynamoDB mainly) or analytical (Amazon Redshift was the main target then) databases. It was hard for the teams to give up on the easy life of being able to query (or search, as they called it) on every column, to use standard query language as SQL for all their data needs, and mainly to use the tools they were familiar with. However, Amazon.com took the long term perspective and decided to invest in building infrastructure that is (almost infinitely) scalable. They wanted to be able to grow their business without technical limitations.

During these years, Amazon.com, which is famous for its “simplify and invent” principle, built many tools to make this migration easier. They also built, using AWS, a set of new databases that can be used as targets, mainly Amazon Aurora (almost drop-in replacement for Oracle with its PostgreSQL flavor), and Amazon Athena, which we will discuss shortly.

The limitations of a single tool are also apparent to many companies, in terms of flexibility, scale, cost, agility, and others that are part of modern architecture in the cloud. However, the break down or curve out of a monolithic system is painful for most companies. Therefore, many companies desire to replace a non-scalable and expensive on-premises database, such as Oracle or MS-SQL, with a cloud service, such as Amazon Redshift (data warehouse service) or Amazon Athena (managed Presto service), Azure Databricks (managed Spark service) or Google BigQuery. They are hoping that the single cloud service will replace the single monolithic on-prem database. Sadly, this is often a disappointment, as the limitation is on using a single tool and not only on where they are operating.

Mistake 2: “Hadoop is dead, long live the new king — Spark.”

Every about five years, new technology is coming along and changing the way to build a modern architecture. Ten years ago, it was Hadoop that opened up scalable opportunities to handle a large amount of data with tools such as Hive, Pig, HBase, and others. Five years ago, it was Spark that changed the game with much faster big data processing, better SQL than Hive, newer functional programming languages with Scala and Python than Hadoop’s Java, new streaming capabilities, and many others.

Spark is also enjoying the maturity of the tools and the popularity among many big data developers. The combination of running Spark SQL, Spark Streaming, and even machine learning with Spark MLlib is very appealing, and many companies have standardized their big data on Spark. However, the growth of the popularity and need for data analytics and machine learning exposed the limitations of Spark. As a Spark expert, I’m often asked to come to review and fix the Spark code that is too complex or too slow as it grows. I also see many companies trying to build their machine learning using the Spark library, which Databricks is developing and pushing a lot.

My recommendation now is to write the data transformation logic using SQL based on PrestoDB. SQL has many benefits compared to the Scala or Python of Spark, mainly in its concise form, fewer bugs that can sneak into the code, and many more people who can write the logic using it. The main objection I get is based on the resistance of the current developers who are less comfortable with SQL than with Scala or Python they are using today.

Mistake 3: “So, Presto is the new king.”

The term modern cloud architecture is referring to an architecture that is based on microservices, serverless, and pay-for-what-you-use (and not pay-for-what-you-provision). The poster boy of this modern architecture is AWS Lambda (or Azure Functions/Google Cloud Functions). You write the business logic, and the cloud is managing the rest for you. No more Application servers, no more starting, and terminating servers or virtual machines, no more waiting for the yearly product release, and no more “only Java in production.” The future is thousands of functions developed as needed and executed when needed calling one another in a perfect mesh of business logic and scaled up and down just-in-time.

Amazon Athena is the serverless option when it comes to data. The service is currently running a managed PrestoDB engine. The reason for the “currently” modifier in the previous sentence is to allow Amazon to upgrade the engine based on the best SQL engine on files in S3 at the time. The evolution from Hive, Impala, SparkSQL, and now Presto only proves that we will see in the future an even better engine. Amazon wants to avoid the mistake they did with naming the EMR (used to be Elastic-Map-Reduce) service, which is running today more complex distributed computing than Map-Reduce.

In Amazon Athena, you write your business logic using SQL, and the query is sent to a fleet of workers that are optimized to meet the complexity of the data and the query. In most cases, in a few seconds, you have the query result in a CSV file in S3. No servers to manage, no time to wait for the servers to spin up, and no payment for idle machines. Real serverless..

However, I hear often that Amazon Athena is too expensive, especially when you are running a lot of heavy analytical queries. I listened to the same comments on AWS Lambda. The move from paying once for the resource (cluster of Presto or application server for business logic) to pay-for-what-you-use can be scary and risky. The secret is to know how to optimize your usage, which is usually much harder and less appealing when you are managing your resources.

As the cost of Amazon Athena is based on the amount of data scanned by the query, every reduction in the size of the data reduces the cost of the query. The main mistake in using Athena is using the fact that it can query raw data in huge volume and raw formats like JSON or CSV, and relying on it for too many queries.

Then, what do you recommend?

Let’s summarize what did we learn so far. We learned that we shouldn’t use only a single datastore as in time, it will limit our ability to grow the data usage. We learned that we should be curious and learn, test, and adopt new tools and technology, once they mature from the “nice idea” stage. We should take the long term perspective when designing our technical systems, to allow unlimited business growth for our company.

With this background and vision, we can better explain why do we spend so much effort on the following data tiers, instead of merely dropping everything into a super database _____ (fill in your current favorite database).

I see that you are now ready to see my recommended recipe.

Tier I (L1) — Raw Data in low-cost storage (such as S3)

All data should land in its raw form from every source with little modification or filtering. The data can come from IoT devices, streaming sources such as Kafka or Kinesis, textual log files, JSON payload from NoSQL or web services interactions, images, videos, textual comments, Excel or CSV files from partners, or anything that you would like one day to analyze and learn from.

The data should NOT be organized nicely with foreign keys between tables, or harmonized to have the same format of the address or product ID. Harmony is not part of tier I, and this is critical to make the system flexible enough to grow. Too many data projects are failing because they take too long to organize all the data without knowing which of the analysis on the data can give significant business values. Thus, Failing to award more investment into the data analytics platform.

Tier II (L2) — Multiple optimized data derivatives still in low-cost storage

The second tier is built gradually from the data coming into the first tier above. The second tier is starting as soon as the first file lands in the first tier, and it will evolve as more and more data is coming in. The evolution will be directed based on the data availability, and mainly based on the business usage of the analysis output, such as machine learning predictions or analytical reports. Let’s briefly discuss each part of this tier description:

Multiple — every analytical use case should have its own dedicated and independent flow of data. Even if it means that data will be replicated dozens of times and be calculated differently. Remember that every analysis is looking at the data from a different angle and for a different business need, and eventually, it is developed by a different team. For example, sales analytics is different than marketing analytics or logistics analytics.

Optimized — the transformations of the data from its raw form toward an analytical insight allow tremendous optimization opportunities. An obvious one is taking JSON data and storing it in Parquet format that is both columnar (query on a single column only scans the data of that column) and compressed. In most such transformation, using Create Table As Select (CTAS) in Athena, you can get 1,000–10,000 times cost improvements. The same goes for transcribing audio and video to textual captions or analyzing images to classes, faces sentiments, or face recognition. Running analytical queries on the face sentiments of your customers on different days or stores should be simple and low cost to be used by the business people.

Data Derivatives — The data in the second tier is mostly aggregated, filtered, or transformed from its original raw form to fit a specific business question. If I need to predict the daily sales of a brand, I don’t need to analyze every individual purchase for every unique product. I can look at daily and brand aggregation. We should not be afraid to make the derivative “too specific,” as we still have the raw data in Tier I. We will have many other specific derivatives to the other business use cases. Having the “same” data in different forms is not a problem, as this is not the same data, but a derivative of it.

Still in low-cost storage — if you want to be able to keep dozens of “copies” of the already big data that you have in your company, each “copy” of that data must be very low cost. I saw too many companies trying to work only with raw data (“because we can”) or write too quickly into a database with expensive compute and memory capabilities, and miss on this critical tier II.

Tier III (L3) — Optional Cache Datastores

To allow users’ interactions with the results of the data analytics, we often need to cache these results to make them usable for humans, in terms of speed and query capabilities.

The most recommended cache options (and obviously, there are more than one as each is better for different use cases) are:

- DynamoDB for GraphQL access from a client application,

- ElasticSearch for textual queries,

- Redis for fast operations on in-memory data sets (such as Sorted-Sets), or

- Neptune for graph queries (not to be confused with GraphQL).

It is also common to cache into a relational database (such as MySQL or Aurora PostgreSQL), which can be OK for relatively small data sets and visualization or BI tools that know how to work only with such databases.

As long as you treat it as cache, understanding that it much more expensive and therefore is used only for the actual use cases of the users, and you can always recreate it or delete as needed, you will have the required flexibility and cost-effectiveness that you need to build your analytical use cases within your organization. It takes time to transform companies to be “smarter” and use data more efficiently, and this time must be planned for agility, cost, scale, and simplicity, which the above architecture provides.

Operation: Orchestration and Monitoring

Running such a multi-tier, multi-use-cases, multi-line-of-business, multi-data-store, and other multipliers are not something that you can do manually with a single DBA or even a team of DBAs. You have to have automation in mind from the very beginning of the project.

In many companies, the practices of DevOps already started to evolve, and capabilities around micro-services, containers, continuous integration, and deployment (CI/CD) are already emerging. The migration to the cloud is also a growing interest, plan, and sometimes even execution and contributes to the IT power to support this modern architecture. Nevertheless, the ability to do DataOps efficiently is hard and new to most organizations. The agile and evolving building of the new architecture must include an essential aspect of people skills and choosing the right tools to automate the process.

The main options for orchestration I see today being used most often, are AWS native (Step Functions), Open-Source tools (mainly Apache AirFlow), or managed services (such as Upsolver). I have excellent experience with all these options, and the decision on which way to go is based on your specific use case, data sources, budget, technical capabilities, etc.

Let’s put it all together

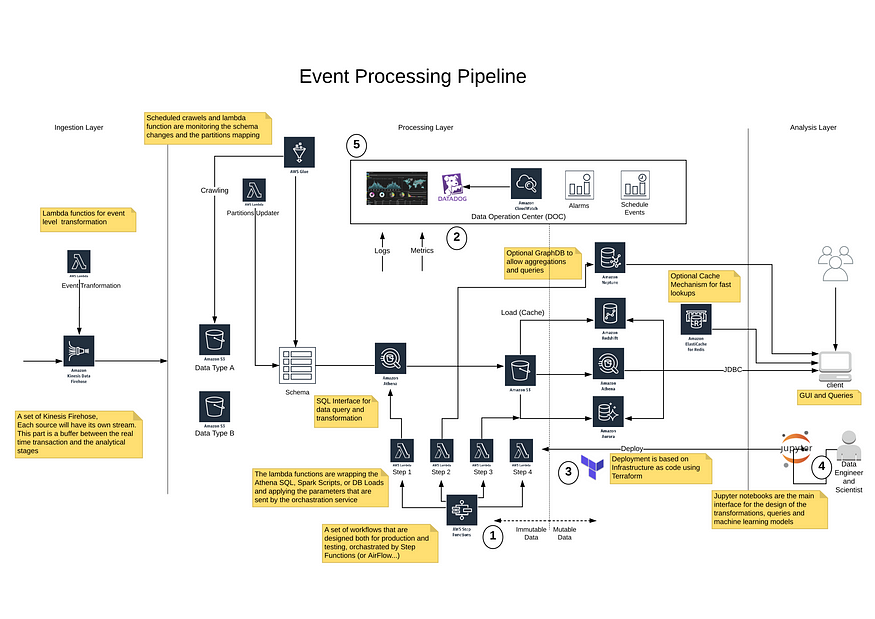

The diagram below is often too overwhelming when I show it for the first time, and this is why I kept it only to the end. I hope that after reading the explanations and the reasons for it, you will find it more useful and straightforward to understand.

The diagram shows a specific project I’ve implemented in one of my customers, and the usage of Step Functions for orchestration, DataDog for monitoring or Terraform for deployment, can be replaced with any of your favorite tools (AirFlow, Grafana, and Jenkins, for example). The central concept of the cloud is the modularity of the architectures and the ability to add, replace, scale, and remove any part of it when needed by the business. As long as you are curious and able to learn new and better technologies, in the rapid pace of technological advancements we live in, you can build and operate a powerful and modern data platform. This data platform is an essential part of the digital and AI transformation of every company that wants to stay relevant and competitive today.

This article has been published from the source link without modifications to the text. Only the headline has been changed.