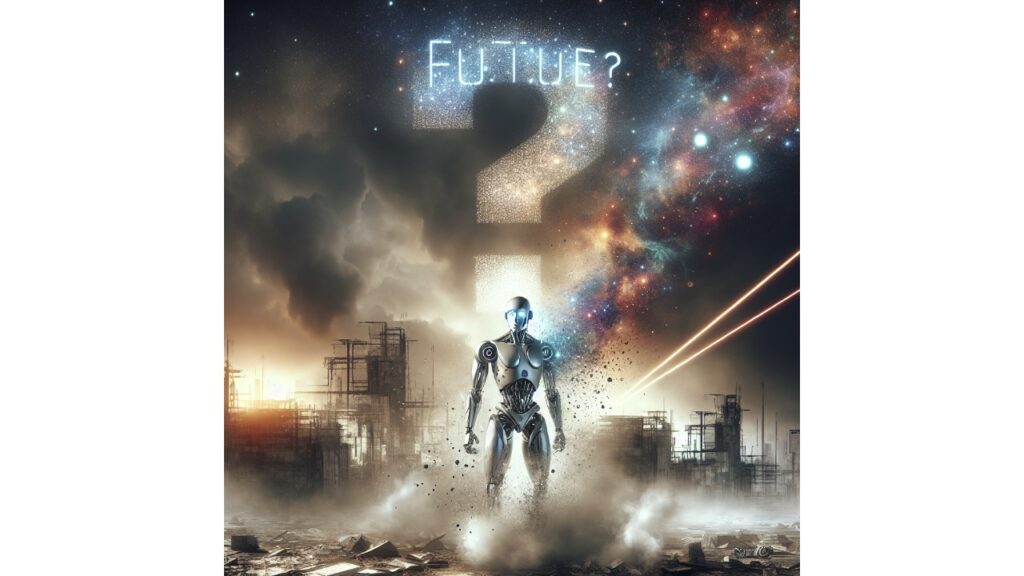

“From the ashes of the nuclear fire, the machines rose.” Skulls crushed beneath the treads of a robot tank. Laser flashes from a dark, hovering craft. Tiny human figures running for their lives.

The Terminator, which opens with these famous shots, may have done more than any other film to combine the sci-fi anxieties of the 1980s into a single, decent movie, set in Los Angeles in 2029 after a nuclear war.

As we charge recklessly into the AI future, with fresh arms races and insufficient attention to safety, are we heading for something like Hollywood’s hellscape, in which machines have exterminated or subjugated nearly the entire human race?

In some respects, it is already happening. After two years of drone attacks back and forth, Russia and Ukraine are fielding growing numbers of autonomous drones. Israel is reportedly using AI targeting programs to select thousands of infrastructure and human targets in Gaza, frequently with little to no human oversight.

Nearer to home, the U.S. Department of Defense has made no secret of its intention to integrate AI into numerous facets of its weaponry. The Pentagon unveiled a new AI strategy in late 2023, and Deputy Defense Secretary Kathleen Hicks stated that the main reason for the department’s focus on integrating AI into its operations quickly and responsibly is clear: it improves its decision advantage.

AI is already hundreds of times faster than humans at many tasks and may eventually be millions or perhaps billions of times faster. This speed is the main reason behind the trend toward integrating AI into military systems. “Accelerating decision advantage,” the subtitle of the new AI strategy document, captures this reasoning, and it applies to all types of weapons systems, including nuclear weapons.

Experts have raised concerns about the integration of sophisticated AI systems into weapons launch and surveillance systems. James Johnson’s book AI and the Bomb: Nuclear Strategy and Risk in the Digital Age, published in December, makes the case that AI-powered nuclear weapons systems will soon be the most dangerous weapons ever created. Sam Nunn, a former senator from Georgia and a well-known expert on defense strategy, has joined numerous others in warning of the disasters that could arise from the improper deployment of AI.

Nunn and others connected to the Nuclear Threat Initiative, which he co-founded, signed a paper outlining seven principles “for global nuclear order,” one of which is: Developing strong and widely recognized techniques to lengthen leaders’ decision-making times, particularly in high-stress and dire circumstances where leaders fear their countries are being attacked, could be a shared conceptual objective that connects short- and long-term measures for stabilizing the situation and promoting mutual security.

It is immediately evident that this principle runs counter to the present trend of integrating AI into everything. In fact, Nunn and his co-authors acknowledge this inherent conflict in their next principle: Artificial intelligence has the potential to drastically shorten decision-making times and make it more difficult to safely handle extremely deadly weaponry.

When I spoke with military and AI experts about these topics, they disagreed about the precise nature of the threat and the best course of action, but they agreed that AI poses an extremely serious potential harm in the use of nuclear weapons.

Gladstone AI, a startup created by national security experts Edouard and Jeremie Harris, produced a significant report on AI safety risks that the State Department issued in February. I corresponded with them about certain gaps in their report. Edouard did not consider the integration of AI into nuclear weapons surveillance and launch decisions to be a short-term threat, though their report concentrated more on conventional weapons systems. However, he did state at the conclusion of our conversation that the fear of being discovered or tricked by adversaries using AI is a “meaningful risk factor” in possible military escalation. Governments “are less likely to give AI control over nuclear weapons… than they are to give AI control over conventional weapons,” he suggested.

However, during the conversation, he acknowledged that states might be tempted to give AI systems control over their nuclear arsenals on the grounds that such systems would be more adept than humans at making decisions in the face of hostile AI-generated misinformation. Johnson sketched out a similar scenario in AI and the Bomb.

The eminent Hungarian-American mathematician John von Neumann, who helped establish game theory as a field of study, supported nuclear first strikes as a form of “preventive war.” According to him, common sense and game theory strongly urged that the United States hit the Soviet Union hard before it accumulated enough nuclear weapons to make any such attack impossible. In 1950, he said, “If you say why not bomb them tomorrow, I say why not today? If you say today at five o’clock, I say why not one o’clock?”

Despite this luminary’s bellicose advice, the United States never actually attacked the Soviet Union with nuclear or conventional weapons. Will artificial intelligence integrated into conventional and nuclear weapons systems adopt a hawkish game theory approach similar to von Neumann’s? Or will the programs use more compassionate and understanding standards when making decisions?

We can’t know for sure today, and neither can defense policy makers or their AI programmers, because today’s large language model (LLM) AIs are infamously a “black box.” Essentially, this means that humans have no idea how these systems decide what to do. Even though military-focused AI will undoubtedly receive training and instructions, it is impossible to predict how it will react in a specific situation until after the fact.

And that is the very frightening risk associated with the mad dash to integrate AI into our weaponry.

Some argue that nuclear weapons systems are different from conventional weapons systems, and that military decision-makers will resist the temptation to integrate AI into them because the stakes are so much higher and there might not be any real benefit to drastically accelerating launch decisions.

But the rise of tactical nuclear weapons, which are much smaller and intended for use on the battlefield, significantly blurs these distinctions. Russia’s threats to use tactical nuclear weapons in Ukraine make this blurring all the more apparent.

Strong legislation is now required to ensure that humans are never removed from nuclear decision-making. The ultimate goal is no “nuclear ashes” and no Skynet. Ever.