Preparing your data for a neural network requires converting all features into numerical features, generally normalizing them, and more. In particular, if your data contains categorical features or text features, they need to be converted to numbers. This can be done ahead of time when preparing your data files, using any tool you like (e.g., NumPy, pandas, or Scikit-Learn). Alternatively, you can preprocess your data on the fly when loading it with the Data API (e.g., using the dataset’s map() method, as we saw earlier), or you can include a preprocessing layer directly in your model. Let’s look at this last option now.

For example, here is how you can implement a standardization layer using a Lambda layer. For each feature, it subtracts the mean and divides by its standard deviation (plus a tiny smoothing term to avoid division by zero):

    means = np.mean(X_train, axis=0, keepdims=True)
    stds = np.std(X_train, axis=0, keepdims=True)
    eps = keras.backend.epsilon()
    model = keras.models.Sequential([
        keras.layers.Lambda(lambda inputs: (inputs - means) / (stds + eps)),
        [...] # other layers
    ])

That’s not too hard! However, you may prefer to use a nice self-contained custom layer (much like Scikit-Learn’s StandardScaler), rather than having global variables like means and stds dangling around:

    class Standardization(keras.layers.Layer):
        def adapt(self, data_sample):
            self.means_ = np.mean(data_sample, axis=0, keepdims=True)
            self.stds_ = np.std(data_sample, axis=0, keepdims=True)

        def call(self, inputs):
            return (inputs - self.means_) / (self.stds_ + keras.backend.epsilon())

Before you can use this standardization layer, you will need to adapt it to your dataset by calling the adapt() method and passing it a data sample. This will allow it to use the appropriate mean and standard deviation for each feature:

    std_layer = Standardization()
    std_layer.adapt(data_sample)

This sample must be large enough to be representative of your dataset, but it does not have to be the full training set: in general, a few hundred randomly selected instances will suffice (however, this depends on your task). Next, you can use this preprocessing layer like a normal layer:

    model = keras.Sequential()
    model.add(std_layer)
    [...] # create the rest of the model
    model.compile([...])
    model.fit([...])

If you are thinking that Keras should contain a standardization layer like this one, here’s some good news for you: by the time you read this, the keras.layers.Normalization layer will probably be available. It will work very much like our custom Standardization layer: first, create the layer, then adapt it to your dataset by passing a data sample to the adapt() method, and finally use the layer normally.
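To make the adapt-then-call pattern concrete without depending on any particular Keras version, here is a minimal plain-Python sketch of the same idea. The `TinyStandardizer` class is a hypothetical stand-in written for this illustration, not a Keras API; the default `eps` of 1e-7 is assumed to match the default value of `keras.backend.epsilon()`.

```python
import math

class TinyStandardizer:
    """Plain-Python stand-in for the Standardization layer (illustration only)."""

    def adapt(self, data_sample):
        n = len(data_sample)
        columns = list(zip(*data_sample))  # one tuple per feature
        self.means_ = [sum(col) / n for col in columns]
        # Population standard deviation, matching np.std's default (ddof=0).
        self.stds_ = [
            math.sqrt(sum((x - m) ** 2 for x in col) / n)
            for col, m in zip(columns, self.means_)
        ]

    def __call__(self, inputs, eps=1e-7):
        # Subtract each feature's mean and divide by its standard deviation.
        return [
            [(x - m) / (s + eps) for x, m, s in zip(row, self.means_, self.stds_)]
            for row in inputs
        ]

scaler = TinyStandardizer()
scaler.adapt([[1.0, 10.0], [3.0, 30.0]])
scaled = scaler([[1.0, 10.0], [3.0, 30.0]])  # approximately [[-1, -1], [1, 1]]
```

Both features end up on the same scale even though the second one was originally ten times larger than the first.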

Now let’s look at categorical features. We will start by encoding them as one-hot vectors.

Encoding Categorical Features Using One-Hot Vectors

Consider the ocean_proximity feature in the California housing dataset we explored earlier in this book. It is a categorical feature with five possible values: “<1H OCEAN”, “INLAND”, “NEAR OCEAN”, “NEAR BAY”, and “ISLAND”. We need to encode this feature before we feed it to a neural network. Since there are very few categories, we can use one-hot encoding. For this, we first need to map each category to its index (0 to 4), which can be done using a lookup table:

    vocab = ["<1H OCEAN", "INLAND", "NEAR OCEAN", "NEAR BAY", "ISLAND"]
    indices = tf.range(len(vocab), dtype=tf.int64)
    table_init = tf.lookup.KeyValueTensorInitializer(vocab, indices)
    num_oov_buckets = 2
    table = tf.lookup.StaticVocabularyTable(table_init, num_oov_buckets)

Let’s go through this code:

• We first define the vocabulary: this is the list of all possible categories.

• Then we create a tensor with the corresponding indices (0 to 4).

• Next, we create an initializer for the lookup table, passing it the list of categories and their corresponding indices. In this example, we already have this data, so we use a KeyValueTensorInitializer; but if the categories were listed in a text file (with one category per line), we would use a TextFileInitializer instead.

• In the last two lines we create the lookup table, giving it the initializer and specifying the number of out-of-vocabulary (oov) buckets. If we look up a category that does not exist in the vocabulary, the lookup table will compute a hash of this category and use it to assign the unknown category to one of the oov buckets. Their indices start after the known categories, so in this example the indices of the two oov buckets are 5 and 6.

Why use oov buckets? Well, if the number of categories is large (e.g., zip codes, cities, words, products, or users) and the dataset is large as well, or it keeps changing, then getting the full list of categories may not be convenient. One solution is to define the vocabulary based on a data sample (rather than the whole training set) and add some oov buckets for the other categories that were not in the data sample. The more unknown categories you expect to find during training, the more oov buckets you should use. Indeed, if there are not enough oov buckets, there will be collisions: different categories will end up in the same bucket, so the neural network will not be able to distinguish them (at least not based on this feature).
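The bucket assignment and the collision problem can be sketched in a few lines of plain Python. This is only an illustration: it uses CRC32 as the hash, which is an assumption of this sketch (TensorFlow uses its own hash function, so the bucket chosen for a given string will generally differ), and the `lookup()` helper and the extra category names are hypothetical.

```python
import zlib

vocab = ["<1H OCEAN", "INLAND", "NEAR OCEAN", "NEAR BAY", "ISLAND"]
num_oov_buckets = 2
index_of = {category: i for i, category in enumerate(vocab)}

def lookup(category):
    """Return the vocabulary index, or a hashed oov bucket index."""
    if category in index_of:
        return index_of[category]
    # CRC32 is an assumption made for this sketch, not TensorFlow's hash.
    bucket = zlib.crc32(category.encode("utf-8")) % num_oov_buckets
    return len(vocab) + bucket  # oov indices start after the known categories

unknown = ["DESERT", "MOUNTAIN", "LAKESIDE"]
unknown_ids = [lookup(c) for c in unknown]
```

With three unknown categories and only two buckets, at least two of them must share an index, whatever the hash function: this is exactly the kind of collision that more oov buckets would make less likely.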

Now let’s use the lookup table to encode a small batch of categorical features to one-hot vectors:

    >>> categories = tf.constant(["NEAR BAY", "DESERT", "INLAND", "INLAND"])
    >>> cat_indices = table.lookup(categories)
    >>> cat_indices
    <tf.Tensor: id=514, shape=(4,), dtype=int64, numpy=array([3, 5, 1, 1])>
    >>> cat_one_hot = tf.one_hot(cat_indices, depth=len(vocab) + num_oov_buckets)
    >>> cat_one_hot
    <tf.Tensor: id=524, shape=(4, 7), dtype=float32, numpy=
    array([[0., 0., 0., 1., 0., 0., 0.],
           [0., 0., 0., 0., 0., 1., 0.],
           [0., 1., 0., 0., 0., 0., 0.],
           [0., 1., 0., 0., 0., 0., 0.]], dtype=float32)>

As you can see, “NEAR BAY” was mapped to index 3, the unknown category “DESERT” was mapped to one of the two oov buckets (at index 5), and “INLAND” was mapped to index 1, twice. Then we used tf.one_hot() to one-hot encode these indices. Notice that we have to tell this function the total number of indices, which is equal to the vocabulary size plus the number of oov buckets. Now you know how to encode categorical features to one-hot vectors using TensorFlow!
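If it helps to see what the one-hot step computes, here is a rough plain-Python equivalent. The `one_hot()` helper below is a hypothetical illustration, not the TensorFlow function, and it only handles a flat list of indices.

```python
def one_hot(indices, depth):
    """Return one row per index, with a single 1.0 at that index."""
    return [[1.0 if j == i else 0.0 for j in range(depth)] for i in indices]

# depth = vocabulary size (5) + number of oov buckets (2)
cat_one_hot = one_hot([3, 5, 1, 1], depth=5 + 2)
```

Each row has exactly one nonzero entry, and the row length grows with the vocabulary, which is why this encoding becomes wasteful for large vocabularies.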

Just like earlier, it wouldn’t be too difficult to bundle all of this logic into a nice self-contained class. Its adapt() method would take a data sample and extract all the distinct categories it contains. It would create a lookup table to map each category to its index (including unknown categories using oov buckets). Then its call() method would use the lookup table to map the input categories to their indices. Well, here’s more good news: by the time you read this, Keras will probably include a layer called keras.layers.TextVectorization, which will be capable of doing exactly that: its adapt() method will extract the vocabulary from a data sample, and its call() method will convert each category to its index in the vocabulary. You could add this layer at the beginning of your model, followed by a Lambda layer that would apply the tf.one_hot() function, if you want to convert these indices to one-hot vectors.

This may not be the best solution, though. The size of each one-hot vector is the vocabulary length plus the number of oov buckets. This is fine when there are just a few possible categories, but if the vocabulary is large, it is much more efficient to encode them using embeddings instead.

As a rule of thumb, if the number of categories is lower than 10, then one-hot encoding is generally the way to go (but your mileage may vary!). If the number of categories is greater than 50 (which is often the case when you use hash buckets), then embeddings are usually preferable. In between 10 and 50 categories, you may want to experiment with both options and see which one works best for your use case.

Encoding Categorical Features Using Embeddings

An embedding is a trainable dense vector that represents a category. By default, embeddings are initialized randomly, so for example the “NEAR BAY” category could be represented initially by a random vector such as [0.131, 0.890], while the “NEAR OCEAN” category might be represented by another random vector such as [0.631, 0.791]. In this example, we use 2D embeddings, but the number of dimensions is a hyperparameter you can tweak. Since these embeddings are trainable, they will gradually improve during training; and as they represent fairly similar categories, Gradient Descent will certainly end up pushing them closer together, while it will tend to move them away from the “INLAND” category’s embedding (see Figure 1-4). Indeed, the better the representation, the easier it will be for the neural network to make accurate predictions, so training tends to make embeddings useful representations of the categories. This is called representation learning (we will see other types of representation learning in a later chapter).

Word Embeddings

Not only will embeddings generally be useful representations for the task at hand, but quite often these same embeddings can be reused successfully for other tasks. The most common example of this is word embeddings (i.e., embeddings of individual words): when you are working on a natural language processing task, you are often better off reusing pretrained word embeddings than training your own.

The idea of using vectors to represent words dates back to the 1960s, and many sophisticated techniques have been used to generate useful vectors, including using neural networks. But things really took off in 2013, when Tomáš Mikolov and other Google researchers published a paper [9] describing an efficient technique to learn word embeddings using neural networks, significantly outperforming previous attempts. This allowed them to learn embeddings on a very large corpus of text: they trained a neural network to predict the words near any given word, and obtained astounding word embeddings. For example, synonyms had very close embeddings, and semantically related words such as France, Spain, and Italy ended up clustered together.

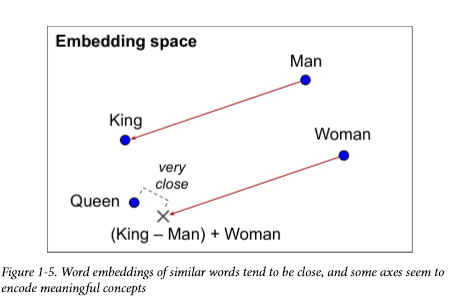

It’s not just about proximity, though: word embeddings were also organized along meaningful axes in the embedding space. Here is a famous example: if you compute King – Man + Woman (adding and subtracting the embedding vectors of these words), then the result will be very close to the embedding of the word Queen (see Figure 1-5). In other words, the word embeddings encode the concept of gender! Similarly, you can compute Madrid – Spain + France, and the result is close to Paris, which seems to show that the notion of capital city was also encoded in the embeddings.
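The arithmetic behind such analogies can be illustrated with a toy example. The 2D vectors below are entirely made up for this sketch (real word embeddings are learned and have many more dimensions), and the `analogy()` helper is hypothetical: it simply returns the remaining word whose embedding has the highest cosine similarity with the computed vector.

```python
import math

# Hypothetical 2D embeddings: axis 0 ~ "royalty", axis 1 ~ "gender".
toy_embeddings = {
    "king":   [1.0, 1.0],
    "queen":  [1.0, -1.0],
    "man":    [0.0, 1.0],
    "woman":  [0.0, -1.0],
    "prince": [0.8, 1.0],
}

def cosine(u, v):
    """Cosine similarity between two 2D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a, b, c):
    """Return the word closest to embedding(a) - embedding(b) + embedding(c)."""
    target = [x - y + z for x, y, z in
              zip(toy_embeddings[a], toy_embeddings[b], toy_embeddings[c])]
    candidates = (w for w in toy_embeddings if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(toy_embeddings[w], target))

result = analogy("king", "man", "woman")
```

Here subtracting "man" and adding "woman" flips the second coordinate while leaving the first untouched, so the result lands on the toy "queen" vector.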

Unfortunately, word embeddings sometimes capture our worst biases. For example, although they correctly learn that Man is to King as Woman is to Queen, they also seem to learn that Man is to Doctor as Woman is to Nurse: quite a sexist bias! To be fair, this particular example is probably exaggerated, as was pointed out in a 2019 paper [10] by Malvina Nissim et al. Nevertheless, ensuring fairness in Deep Learning algorithms is an important and active research topic.

Let’s look at how we could implement embeddings manually, to understand how they work (then we will use a simple Keras layer instead). First, we need to create an embedding matrix containing each category’s embedding, initialized randomly; it will have one row per category and per oov bucket, and one column per embedding dimension:

    embedding_dim = 2
    embed_init = tf.random.uniform([len(vocab) + num_oov_buckets, embedding_dim])
    embedding_matrix = tf.Variable(embed_init)

In this example we are using 2D embeddings, but as a rule of thumb embeddings typically have 10 to 300 dimensions, depending on the task and the vocabulary size (you will have to tune this hyperparameter).

This embedding matrix is a random 6 × 2 matrix, stored in a variable (so it can be tweaked by Gradient Descent during training):

    >>> embedding_matrix
    <tf.Variable 'Variable:0' shape=(6, 2) dtype=float32, numpy=
    array([[0.6645621 , 0.44100678],
           [0.3528825 , 0.46448255],
           [0.03366041, 0.68467236],
           [0.74011743, 0.8724445 ],
           [0.22632635, 0.22319686],
           [0.3103881 , 0.7223358 ]], dtype=float32)>

Now let’s encode the same batch of categorical features as earlier, but this time using these embeddings:

    >>> categories = tf.constant(["NEAR BAY", "DESERT", "INLAND", "INLAND"])
    >>> cat_indices = table.lookup(categories)
    >>> cat_indices
    <tf.Tensor: id=741, shape=(4,), dtype=int64, numpy=array([3, 5, 1, 1])>
    >>> tf.nn.embedding_lookup(embedding_matrix, cat_indices)
    <tf.Tensor: id=864, shape=(4, 2), dtype=float32, numpy=
    array([[0.74011743, 0.8724445 ],
           [0.3103881 , 0.7223358 ],
           [0.3528825 , 0.46448255],
           [0.3528825 , 0.46448255]], dtype=float32)>

The tf.nn.embedding_lookup() function looks up the rows in the embedding matrix at the given indices; that’s all it does. For example, the lookup table says that the “INLAND” category is at index 1, so the tf.nn.embedding_lookup() function returns the embedding at row 1 in the embedding matrix (twice): [0.3528825, 0.46448255].
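Since the lookup is nothing more than row indexing, it can be mimicked with an ordinary list of lists. The sketch below reuses the matrix values printed above; the `embedding_lookup()` helper is a hypothetical plain-Python stand-in, not the TensorFlow function.

```python
# Same values as the embedding matrix shown in the text.
embedding_matrix = [
    [0.6645621, 0.44100678],
    [0.3528825, 0.46448255],
    [0.03366041, 0.68467236],
    [0.74011743, 0.8724445],
    [0.22632635, 0.22319686],
    [0.3103881, 0.7223358],
]

def embedding_lookup(matrix, indices):
    """Return the row of `matrix` at each index, in order."""
    return [matrix[i] for i in indices]

rows = embedding_lookup(embedding_matrix, [3, 5, 1, 1])
```

The index 1 appears twice in the input, so row 1 appears twice in the output, just as in the TensorFlow result.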

Keras provides a keras.layers.Embedding layer that handles the embedding matrix (trainable, by default); when the layer is created it initializes the embedding matrix randomly, and then when it is called with some category indices it returns the rows at those indices in the embedding matrix:

    >>> embedding = keras.layers.Embedding(input_dim=len(vocab) + num_oov_buckets,
    ...                                    output_dim=embedding_dim)
    ...
    >>> embedding(cat_indices)
    <tf.Tensor: id=814, shape=(4, 2), dtype=float32, numpy=
    array([[ 0.02401174, 0.03724445],
           [-0.01896119, 0.02223358],
           [-0.01471175, -0.00355174],
           [-0.01471175, -0.00355174]], dtype=float32)>

Putting everything together, we can now create a Keras model that can process categorical features (along with regular numerical features) and learn an embedding for each category (as well as for each oov bucket):

    regular_inputs = keras.layers.Input(shape=[8])
    categories = keras.layers.Input(shape=[], dtype=tf.string)
    cat_indices = keras.layers.Lambda(lambda cats: table.lookup(cats))(categories)
    # One embedding per category and per oov bucket
    cat_embed = keras.layers.Embedding(input_dim=len(vocab) + num_oov_buckets,
                                       output_dim=2)(cat_indices)
    encoded_inputs = keras.layers.concatenate([regular_inputs, cat_embed])
    outputs = keras.layers.Dense(1)(encoded_inputs)
    model = keras.models.Model(inputs=[regular_inputs, categories],
                               outputs=[outputs])

This model takes two inputs: a regular input containing eight numerical features per instance, plus a categorical input (containing one categorical feature per instance). It uses a Lambda layer to look up each category’s index, then it looks up the embeddings for these indices. Next, it concatenates the embeddings and the regular inputs in order to give the encoded inputs, which are ready to be fed to a neural network. We could add any kind of neural network at this point, but we just add a dense output layer, and we create the Keras model.

When the keras.layers.TextVectorization layer is available, you can call its adapt() method to make it extract the vocabulary from a data sample (it will take care of creating the lookup table for you). Then you can add it to your model, and it will perform the index lookup (replacing the Lambda layer in the previous code example).

One-hot encoding followed by a Dense layer (with no activation function and no biases) is equivalent to an Embedding layer. However, the Embedding layer uses way fewer computations (the performance difference becomes clear when the size of the embedding matrix grows). The Dense layer’s weight matrix plays the role of the embedding matrix. For example, using one-hot vectors of size 20 and a Dense layer with 10 units is equivalent to using an Embedding layer with input_dim=20 and output_dim=10. As a result, it would be wasteful to use more embedding dimensions than the number of units in the layer that follows the Embedding layer.
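The equivalence is easy to check numerically. The following plain-Python sketch uses the sizes from the example (20 categories, 10 units) with random weights chosen only for illustration; `dense_on_one_hot()` and `embedding()` are hypothetical helper names.

```python
import math
import random

random.seed(42)
num_categories, num_units = 20, 10
# Weight matrix of a Dense layer with no bias and no activation (random here).
weights = [[random.uniform(-1, 1) for _ in range(num_units)]
           for _ in range(num_categories)]

def dense_on_one_hot(index):
    one_hot = [1.0 if j == index else 0.0 for j in range(num_categories)]
    # Full matrix product: num_categories * num_units multiplications.
    return [sum(one_hot[i] * weights[i][k] for i in range(num_categories))
            for k in range(num_units)]

def embedding(index):
    # Row lookup: no multiplications at all.
    return weights[index]

same = all(
    math.isclose(a, b, abs_tol=1e-12)
    for i in range(num_categories)
    for a, b in zip(dense_on_one_hot(i), embedding(i))
)
```

Both functions return the same vector for every category, but the first performs 200 multiplications per lookup while the second performs none, which is where the performance difference comes from.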

Now let’s look a bit more closely at the Keras preprocessing layers.

Keras Preprocessing Layers

The TensorFlow team is working on providing a set of standard Keras preprocessing layers. They will probably be available by the time you read this; however, the API may change slightly by then, so please refer to the notebook for this chapter if anything behaves unexpectedly. This new API will likely supersede the existing Feature Columns API, which is harder to use and less intuitive (if you want to learn more about the Feature Columns API anyway, please check out the notebook for this chapter).

We already discussed two of these layers: the keras.layers.Normalization layer that will perform feature standardization (it will be equivalent to the Standardization layer we defined earlier), and the TextVectorization layer that will be capable of encoding each word in the inputs into its index in the vocabulary. In both cases, you create the layer, you call its adapt() method with a data sample, and then you use the layer normally in your model. The other preprocessing layers will follow the same pattern.

The API will also include a keras.layers.Discretization layer that will chop continuous data into different bins and encode each bin as a one-hot vector. For example, you could use it to discretize prices into three categories, (low, medium, high), which would be encoded as [1, 0, 0], [0, 1, 0], and [0, 0, 1], respectively. Of course this loses a lot of information, but in some cases it can help the model detect patterns that would otherwise not be obvious when just looking at the continuous values.
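As a rough illustration of what such a layer computes, here is a plain-Python sketch of binning followed by one-hot encoding. The `discretize()` helper and the price thresholds are hypothetical, chosen only for this example; the real layer's arguments may differ.

```python
from bisect import bisect_right

def discretize(value, boundaries):
    """One-hot encode the bin that `value` falls into.

    `boundaries` must be sorted; n boundaries define n + 1 bins.
    """
    bin_index = bisect_right(boundaries, value)
    return [1 if i == bin_index else 0 for i in range(len(boundaries) + 1)]

# Hypothetical thresholds: low < 100 <= medium < 500 <= high
price_boundaries = [100.0, 500.0]
encoded = [discretize(p, price_boundaries) for p in (50.0, 250.0, 900.0)]
```

Note that prices of 250 and 499 produce the same vector: this is the information loss mentioned above.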

The Discretization layer will not be differentiable, and it should only be used at the start of your model. Indeed, the model’s preprocessing layers will be frozen during training, so their parameters will not be affected by Gradient Descent, and thus they do not need to be differentiable. This also means that you should not use an Embedding layer directly in a custom preprocessing layer, if you want it to be trainable: instead, it should be added separately to your model, as in the previous code example.

It will also be possible to chain multiple preprocessing layers using the PreprocessingStage class. For example, the following code will create a preprocessing pipeline that will first normalize the inputs, then discretize them (this may remind you of Scikit-Learn pipelines). After you adapt this pipeline to a data sample, you can use it like a regular layer in your models (but again, only at the start of the model, since it contains a nondifferentiable preprocessing layer):

    normalization = keras.layers.Normalization()
    discretization = keras.layers.Discretization([...])
    pipeline = keras.layers.PreprocessingStage([normalization, discretization])
    pipeline.adapt(data_sample)

The TextVectorization layer will also have an option to output word-count vectors instead of word indices. For example, if the vocabulary contains three words, say [“and”, “basketball”, “more”], then the text “more and more” will be mapped to the vector [1, 0, 2]: the word “and” appears once, the word “basketball” does not appear at all, and the word “more” appears twice. This text representation is called a bag of words, since it completely loses the order of the words. Common words like “and” will have a large value in most texts, even though they are usually the least interesting (e.g., in the text “more and more basketball” the word “basketball” is clearly the most important, precisely because it is not a very frequent word). So, the word counts should be normalized in a way that reduces the importance of frequent words. A common way to do this is to divide each word count by the log of the total number of training instances in which the word appears. This technique is called Term-Frequency × Inverse-Document-Frequency (TF-IDF). For example, let’s imagine that the words “and”, “basketball”, and “more” appear respectively in 200, 10, and 100 text instances in the training set: in this case, the final vector will be [1/log(200), 0/log(10), 2/log(100)], which is approximately equal to [0.19, 0., 0.43]. The TextVectorization layer will (likely) have an option to perform TF-IDF.
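The numbers in this example can be reproduced with a few lines of plain Python. This sketch follows the simplified weighting described above (word count divided by the natural log of the number of instances containing the word), which is not necessarily the exact formula a given library uses; `bag_of_words()` and `tf_idf()` are hypothetical helper names.

```python
import math

vocabulary = ["and", "basketball", "more"]
# Number of training instances containing each word (figures from the text).
document_frequency = {"and": 200, "basketball": 10, "more": 100}

def bag_of_words(text):
    """Count how many times each vocabulary word appears in the text."""
    words = text.lower().split()
    return [words.count(term) for term in vocabulary]

def tf_idf(text):
    # Simplified weighting from the text: count / log(document frequency).
    return [count / math.log(document_frequency[term])
            for count, term in zip(bag_of_words(text), vocabulary)]

counts = bag_of_words("more and more")                      # [1, 0, 2]
weights = [round(w, 2) for w in tf_idf("more and more")]    # [0.19, 0.0, 0.43]
```

A word that appears in fewer training instances is divided by a smaller logarithm, so each of its occurrences counts for more.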

If the standard preprocessing layers are insufficient for your task, you will still have the option to create your own custom preprocessing layer, much like we did earlier with the Standardization class. Create a subclass of the keras.layers.PreprocessingLayer class with an adapt() method, which should take a data_sample argument and optionally an extra reset_state argument: if True, then the adapt() method should reset any existing state before computing the new state; if False, it should try to update the existing state.
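The role of the reset_state argument can be sketched with a plain-Python class that keeps a running count and sum. This is only a stand-in to show the intended semantics: `RunningMeanCentering` is a hypothetical name, it does not subclass any Keras class, and it handles a single feature.

```python
class RunningMeanCentering:
    """Plain-Python stand-in for a preprocessing layer with incremental state."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def adapt(self, data_sample, reset_state=True):
        if reset_state:
            # Forget the statistics accumulated by earlier calls.
            self.count, self.total = 0, 0.0
        self.count += len(data_sample)
        self.total += sum(data_sample)

    def __call__(self, inputs):
        mean = self.total / self.count
        return [x - mean for x in inputs]

layer = RunningMeanCentering()
layer.adapt([1.0, 2.0, 3.0])
layer.adapt([4.0, 5.0], reset_state=False)  # merges with the earlier state
merged_mean = layer.total / layer.count     # mean of all five values
layer.adapt([4.0, 5.0], reset_state=True)   # starts over
fresh_mean = layer.total / layer.count      # mean of the last two values only
```

Updating rather than resetting is useful when the data sample arrives in several chunks that do not fit in memory together.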

As you can see, these Keras preprocessing layers will make preprocessing much easier! Now, whether you choose to write your own preprocessing layers or use Keras’s (or even use the Feature Columns API), all the preprocessing will be done on the fly. During training, however, it may be preferable to perform preprocessing ahead of time. Let’s see why we’d want to do that and how we’d go about it.

TF Transform

If preprocessing is computationally expensive, then handling it before training rather than on the fly may give you a significant speedup: the data will be preprocessed just once per instance before training, rather than once per instance and per epoch during training. As mentioned earlier, if the dataset is small enough to fit in RAM, you can use its cache() method. But if it is too large, then tools like Apache Beam or Spark will help. They let you run efficient data processing pipelines over large amounts of data, even distributed across multiple servers, so you can use them to preprocess all the training data before training.

This works great and indeed can speed up training, but there is one problem: once your model is trained, suppose you want to deploy it to a mobile app. In that case you will need to write some code in your app to take care of preprocessing the data before it is fed to the model. And suppose you also want to deploy the model to TensorFlow.js so that it runs in a web browser? Once again, you will need to write some preprocessing code. This can become a maintenance nightmare: whenever you want to change the preprocessing logic, you will need to update your Apache Beam code, your mobile app code, and your JavaScript code. This is not only time-consuming, but also error-prone: you may end up with subtle differences between the preprocessing operations performed before training and the ones performed in your app or in the browser. This training/serving skew will lead to bugs or degraded performance.

One improvement would be to take the trained model (trained on data that was preprocessed by your Apache Beam or Spark code) and, before deploying it to your app or the browser, add extra preprocessing layers to take care of preprocessing on the fly. That’s definitely better, since now you just have two versions of your preprocessing code: the Apache Beam or Spark code, and the preprocessing layers’ code.

But what if you could define your preprocessing operations just once? This is what TF Transform was designed for. It is part of TensorFlow Extended (TFX), an end-to-end platform for productionizing TensorFlow models. First, to use a TFX component such as TF Transform, you must install it; it does not come bundled with TensorFlow. You then define your preprocessing function just once (in Python), by using TF Transform functions for scaling, bucketizing, and more. You can also use any TensorFlow operation you need. Here is what this preprocessing function might look like if we just had two features:

    import tensorflow_transform as tft

    def preprocess(inputs):  # inputs = a batch of input features
        median_age = inputs["housing_median_age"]
        ocean_proximity = inputs["ocean_proximity"]
        standardized_age = tft.scale_to_z_score(median_age)
        ocean_proximity_id = tft.compute_and_apply_vocabulary(ocean_proximity)
        return {
            "standardized_median_age": standardized_age,
            "ocean_proximity_id": ocean_proximity_id
        }

Next, TF Transform lets you apply this preprocess() function to the whole training set using Apache Beam (it provides an AnalyzeAndTransformDataset class that you can use for this purpose in your Apache Beam pipeline). In the process, it will also compute all the necessary statistics over the whole training set: in this example, the mean and standard deviation of the housing_median_age feature, and the vocabulary for the ocean_proximity feature. The components that compute these statistics are called analyzers.

Importantly, TF Transform will also generate an equivalent TensorFlow Function that you can plug into the model you deploy. This TF Function includes some constants that correspond to all the necessary statistics computed by Apache Beam (the mean, standard deviation, and vocabulary).
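The split between an analysis pass and a transformation with baked-in constants can be sketched in plain Python. This is a conceptual illustration only, not how TF Transform is implemented: `analyze()` and `make_transform()` are hypothetical names, the three-row training set is made up, and ordering the vocabulary by descending frequency is an assumption of this sketch.

```python
import math
from collections import Counter

def analyze(training_set):
    """Full pass over the data: compute the statistics preprocessing needs."""
    ages = [row["housing_median_age"] for row in training_set]
    mean = sum(ages) / len(ages)
    std = math.sqrt(sum((a - mean) ** 2 for a in ages) / len(ages))
    counts = Counter(row["ocean_proximity"] for row in training_set)
    # Assumed ordering: most frequent first, ties broken alphabetically.
    vocab = sorted(counts, key=lambda c: (-counts[c], c))
    return {"mean": mean, "std": std,
            "vocab": {c: i for i, c in enumerate(vocab)}}

def make_transform(stats):
    """Return a function with the statistics baked in as constants."""
    def transform(row):
        return {
            "standardized_median_age":
                (row["housing_median_age"] - stats["mean"]) / stats["std"],
            "ocean_proximity_id": stats["vocab"][row["ocean_proximity"]],
        }
    return transform

training_set = [
    {"housing_median_age": 10.0, "ocean_proximity": "INLAND"},
    {"housing_median_age": 20.0, "ocean_proximity": "INLAND"},
    {"housing_median_age": 30.0, "ocean_proximity": "NEAR BAY"},
]
transform = make_transform(analyze(training_set))
served = transform({"housing_median_age": 30.0, "ocean_proximity": "NEAR BAY"})
```

The `transform()` function no longer needs the training set: it carries the mean, standard deviation, and vocabulary with it, so the same logic can run on a single instance at serving time.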

With the Data API, TFRecords, the Keras preprocessing layers, and TF Transform, you can build highly scalable input pipelines for training and benefit from fast and portable data preprocessing in production.

But what if you just wanted to use a standard dataset? Well in that case, things are much simpler: just use TFDS!

The TensorFlow Datasets (TFDS) Project

The TensorFlow Datasets project makes it very easy to download common datasets, from small ones like MNIST or Fashion MNIST to huge datasets like ImageNet (you will need quite a bit of disk space!). The list includes image datasets, text datasets (including translation datasets), and audio and video datasets. You can visit https://homl.info/tfds to view the full list, along with a description of each dataset.

TFDS is not bundled with TensorFlow, so you need to install the tensorflow-datasets library (e.g., using pip). Then call the tfds.load() function, and it will download the data you want (unless it was already downloaded earlier) and return the data as a dictionary of datasets (typically one for training and one for testing, but this depends on the dataset you choose). For example, let’s download MNIST:

    import tensorflow_datasets as tfds

    dataset = tfds.load(name="mnist")
    mnist_train, mnist_test = dataset["train"], dataset["test"]

You can then apply any transformation you want (typically shuffling, batching, and prefetching), and you’re ready to train your model. Here is a simple example:

    mnist_train = mnist_train.shuffle(10000).batch(32).prefetch(1)
    for item in mnist_train:
        images = item["image"]
        labels = item["label"]
        [...]

The load() function shuffles each data shard it downloads (only for the training set). This may not be sufficient, so it’s best to shuffle the training data some more.

Note that each item in the dataset is a dictionary containing both the features and the labels. But Keras expects each item to be a tuple containing two elements (again, the features and the labels). You could transform the dataset using the map() method, like this:

    mnist_train = mnist_train.shuffle(10000).batch(32)
    mnist_train = mnist_train.map(lambda items: (items["image"], items["label"]))
    mnist_train = mnist_train.prefetch(1)

But it’s simpler to ask the load() function to do this for you by setting as_supervised=True (obviously this works only for labeled datasets). You can also specify the batch size if you want. Then you can pass the dataset directly to your tf.keras model:

    dataset = tfds.load(name="mnist", batch_size=32, as_supervised=True)
    mnist_train = dataset["train"].prefetch(1)
    model = keras.models.Sequential([...])
    model.compile(loss="sparse_categorical_crossentropy", optimizer="sgd")
    model.fit(mnist_train, epochs=5)

This was quite a technical chapter, and you may feel that it is a bit far from the abstract beauty of neural networks, but the fact is Deep Learning often involves large amounts of data, and knowing how to load, parse, and preprocess it efficiently is a crucial skill to have. In the next chapter, we will look at convolutional neural networks, which are among the most successful neural net architectures for image processing and many other applications.

