A Visual Guide For Humans

Transformers are a familiar name in NLP-related tasks. If you search the internet for NLP, nearly every neural network architecture you find is Transformer-based, or at least that has been my experience.

It’s finally a proud moment for Optimus Prime.

Yet Transformers had been showing off their powers only in NLP, and their impact on computer vision problems was next to none, at least until May 2020.

That was when researchers at Facebook AI flexed their brain muscles and combined the power of Transformers with a regular CNN-based architecture to tackle object detection.

This story is a visual summary of the research.

Object Detection In Modern Detectors

Modern detectors tackle object detection indirectly: they define surrogate regression and classification problems on a large set of proposals, anchors, or window centers.

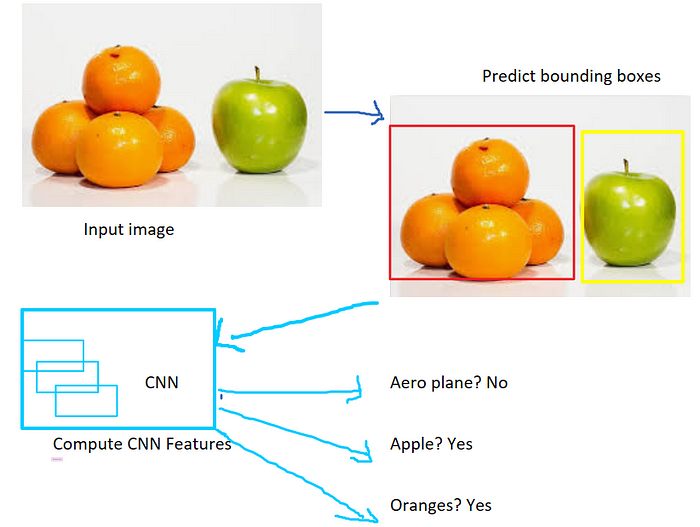

For example, an overly simplified object detector works like this:

- Given an input image, predict the bounding boxes around the different objects.

- Compute the features of different objects bounded by the boxes.

- Classify the object.

This works, but the performance of such systems depends heavily on post-processing steps that collapse near-duplicate predictions, on the design of the anchor sets, and on the heuristics that assign target boxes to anchors.
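Non-maximum suppression (NMS) is the classic example of such a post-processing step, and it is exactly the kind of hand-tuned stage DETR aims to remove. Below is a minimal greedy NMS sketch in plain Python; the corner box format (x1, y1, x2, y2), the helper names, and the 0.5 IoU threshold are illustrative assumptions, not values from the DETR paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format (assumption)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop every remaining box
    that overlaps it more than iou_threshold, then repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two near-duplicate detections of one object, plus one separate object.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

The threshold is a heuristic: set it too low and nearby distinct objects get suppressed, too high and duplicates survive.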

To simplify the detection pipeline and remove these surrogate tasks, the authors of the DETR (DEtection TRansformer) paper propose a system that uses a transformer to predict the set of bounding boxes directly.

The DETR

The DETR recipe has two main ingredients →

- A set prediction loss that forces a unique one-to-one matching between predicted and ground-truth objects.

- An architecture that predicts (in a single pass) a set of objects and models their relation.

The Loss

The key component of the DETR system is its loss function, which again has two parts.

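- The matching cost. This is the pairwise cost between each prediction and each ground-truth object. DETR uses the Hungarian algorithm to find the one-to-one assignment between predictions and ground truth with the lowest total cost.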

- The second part is the bounding box loss. DETR predicts box coordinates directly, so this loss compares the predicted coordinates of each matched prediction with those of its ground-truth box. (The full loss on matched pairs also includes a classification term.)
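The matching step can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the pairwise cost is just the negative predicted probability of the ground-truth class plus the L1 distance between boxes (the paper's box cost also includes a GIoU term), and the assignment is found by brute force over all one-to-one pairings. Brute force returns the same optimum as the Hungarian algorithm but is only practical for toy sizes; real code would use a Hungarian solver such as `scipy.optimize.linear_sum_assignment`. The function name `match` and the box format are hypothetical.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), normalized (assumption)


def l1(a: Box, b: Box) -> float:
    """L1 distance between two boxes' coordinates."""
    return sum(abs(x - y) for x, y in zip(a, b))


def match(pred_probs: Sequence[Sequence[float]], pred_boxes: Sequence[Box],
          gt_labels: Sequence[int], gt_boxes: Sequence[Box]) -> List[Tuple[int, int]]:
    """Return (prediction_index, gt_index) pairs for the one-to-one assignment
    with the lowest total cost.

    Simplified cost of pairing prediction i with ground truth j:
        -p_i(class_j) + L1(box_i, box_j)
    """
    n_pred, n_gt = len(pred_boxes), len(gt_boxes)
    assert n_gt <= n_pred, "need at least as many predictions as objects"
    cost = [[-pred_probs[i][gt_labels[j]] + l1(pred_boxes[i], gt_boxes[j])
             for j in range(n_gt)] for i in range(n_pred)]
    best, best_cost = (), float("inf")
    # Each permutation assigns a distinct prediction to every ground-truth object.
    for preds in permutations(range(n_pred), n_gt):
        total = sum(cost[p][j] for j, p in enumerate(preds))
        if total < best_cost:
            best, best_cost = preds, total
    return [(p, j) for j, p in enumerate(best)]


# Three predictions over classes {0, 1, 2}; two ground-truth objects.
pred_probs = [[0.1, 0.8, 0.1], [0.7, 0.2, 0.1], [0.05, 0.05, 0.9]]
pred_boxes = [(0.7, 0.7, 0.2, 0.2), (0.3, 0.3, 0.2, 0.2), (0.5, 0.5, 0.1, 0.1)]
gt_labels = [0, 1]
gt_boxes = [(0.3, 0.3, 0.2, 0.2), (0.7, 0.7, 0.2, 0.2)]
print(match(pred_probs, pred_boxes, gt_labels, gt_boxes))  # [(1, 0), (0, 1)]
```

Because each ground-truth object gets exactly one prediction, duplicates are discouraged by the loss itself. Predictions left unmatched (prediction 2 in the example) are trained to output the "no object" class.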

The bounding box loss itself combines two terms, each weighted by a hyperparameter:

- The generalized IoU (GIoU) loss, which is scale-invariant.

- The L1 loss on the box coordinates.
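A minimal sketch of this weighted box loss for one matched pair, in plain Python. Assumptions: boxes are corner coordinates (x1, y1, x2, y2) with positive area; the L1 term is computed on those corners for simplicity (DETR applies it to normalized center/size coordinates); and the default weights 2 and 5 follow the values reported in the DETR paper but should be treated as illustrative. `giou` and `box_loss` are hypothetical helper names.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format (assumption)


def giou(a: Box, b: Box) -> float:
    """Generalized IoU: IoU minus the fraction of the smallest enclosing
    box that is not covered by the union of the two boxes."""
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area_a + area_b - inter
    # Area of the smallest axis-aligned box that encloses both a and b.
    enclose = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (enclose - union) / enclose


def box_loss(pred: Box, target: Box, w_giou: float = 2.0, w_l1: float = 5.0) -> float:
    """Weighted sum of the GIoU loss (1 - GIoU) and the L1 coordinate distance."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target))
    return w_giou * (1.0 - giou(pred, target)) + w_l1 * l1


print(box_loss((0.1, 0.1, 0.5, 0.5), (0.12, 0.1, 0.5, 0.55)))
```

The GIoU term stays informative even when boxes do not overlap (two disjoint boxes get a negative GIoU), and because it is a ratio it does not grow with box size the way the L1 term does.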

The Architecture🏗️

Here we are, finally: the part you have been waiting for, the architecture of the network.

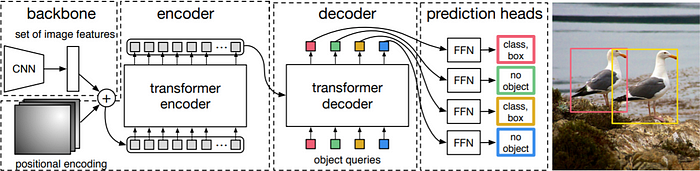

Looking at the figure above, you can quickly see how simple the model is.

The architecture has the following sections.

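- A CNN backbone. The CNN does what it does best: it turns the input image into a lower-resolution feature map. A 1×1 convolution then reduces the channel dimension, and the spatial dimensions are flattened, so the 3D feature map becomes a 2D sequence of feature vectors that the transformer can consume.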

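- A positional encoding component. A transformer is permutation-invariant, so on its own it has no notion of where each feature came from in the image. The positional encoding component supplies that spatial information by adding positional encodings to the features.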

- Then comes the transformer encoder. Each encoder layer has a standard architecture, consisting of a multi-head self-attention module and a feed-forward network (FFN). Through self-attention, every location in the feature map can attend to every other location, so the encoder builds a global representation of the image.

- Next is the transformer decoder. It takes as input a small, fixed number of learned positional embeddings, which the authors call object queries, and additionally attends to the encoder output.

- Finally, each output embedding of the decoder is passed to a shared feed-forward network, which predicts either a detection (class and bounding box) or a special “no object” class.
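To make the data flow concrete, here is a sketch that traces tensor shapes through a DETR-style model without implementing any layers. The defaults (a 2048-channel backbone output downsampled 32×, a model width of 256, and 100 object queries) are assumptions based on the paper's baseline configuration; rounding the downsampled size up is also an assumption. `detr_shapes` is a hypothetical helper, and the batch dimension is omitted.

```python
from typing import Dict, Tuple


def detr_shapes(img_h: int, img_w: int, num_classes: int,
                backbone_channels: int = 2048, d_model: int = 256,
                num_queries: int = 100) -> Dict[str, Tuple[int, ...]]:
    """Trace tensor shapes (batch dimension omitted) through a DETR-style model."""
    # CNN backbone: downsamples the image 32x (rounded up here, an assumption)
    # and outputs a C x H x W feature map.
    h, w = -(-img_h // 32), -(-img_w // 32)
    shapes = {"image": (3, img_h, img_w),
              "backbone_features": (backbone_channels, h, w)}
    # A 1x1 convolution reduces the channel dimension from C to d_model.
    shapes["projected_features"] = (d_model, h, w)
    # Flatten the spatial grid into a sequence of h*w tokens of size d_model.
    shapes["encoder_input"] = (h * w, d_model)
    # Positional encodings are added element-wise, so they share this shape.
    shapes["positional_encoding"] = (h * w, d_model)
    # Self-attention and FFN layers keep the sequence shape unchanged.
    shapes["encoder_output"] = (h * w, d_model)
    # The decoder turns N learned object queries into N output embeddings,
    # attending to the encoder output along the way.
    shapes["object_queries"] = (num_queries, d_model)
    shapes["decoder_output"] = (num_queries, d_model)
    # The shared prediction FFN runs on each of the N embeddings:
    # class logits include one extra "no object" class; a box is 4 numbers.
    shapes["class_logits"] = (num_queries, num_classes + 1)
    shapes["boxes"] = (num_queries, 4)
    return shapes


for name, shape in detr_shapes(800, 1216, num_classes=20).items():
    print(f"{name:>20}: {shape}")
```

Note that the number of predictions is fixed by the number of object queries, not by the image, which is why the matching step and the “no object” class are needed.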

Conclusion

The DETR paper is a very interesting read. What I like about research papers from Facebook AI is that they are detailed yet to the point, so they read more like stories than research papers.

This article has been published from the source link without modifications to the text. Only the headline has been changed.
