On the second day of the Intel Innovation 2023 conference, Intel CTO Greg Lavender explained how the company’s developer-first, open-ecosystem approach is broadening access to opportunities in artificial intelligence (AI).

Lavender outlined Intel’s strategy, which is built on openness, choice, trust, and security, and said the company intends to address the difficulties developers encounter when adopting AI solutions.

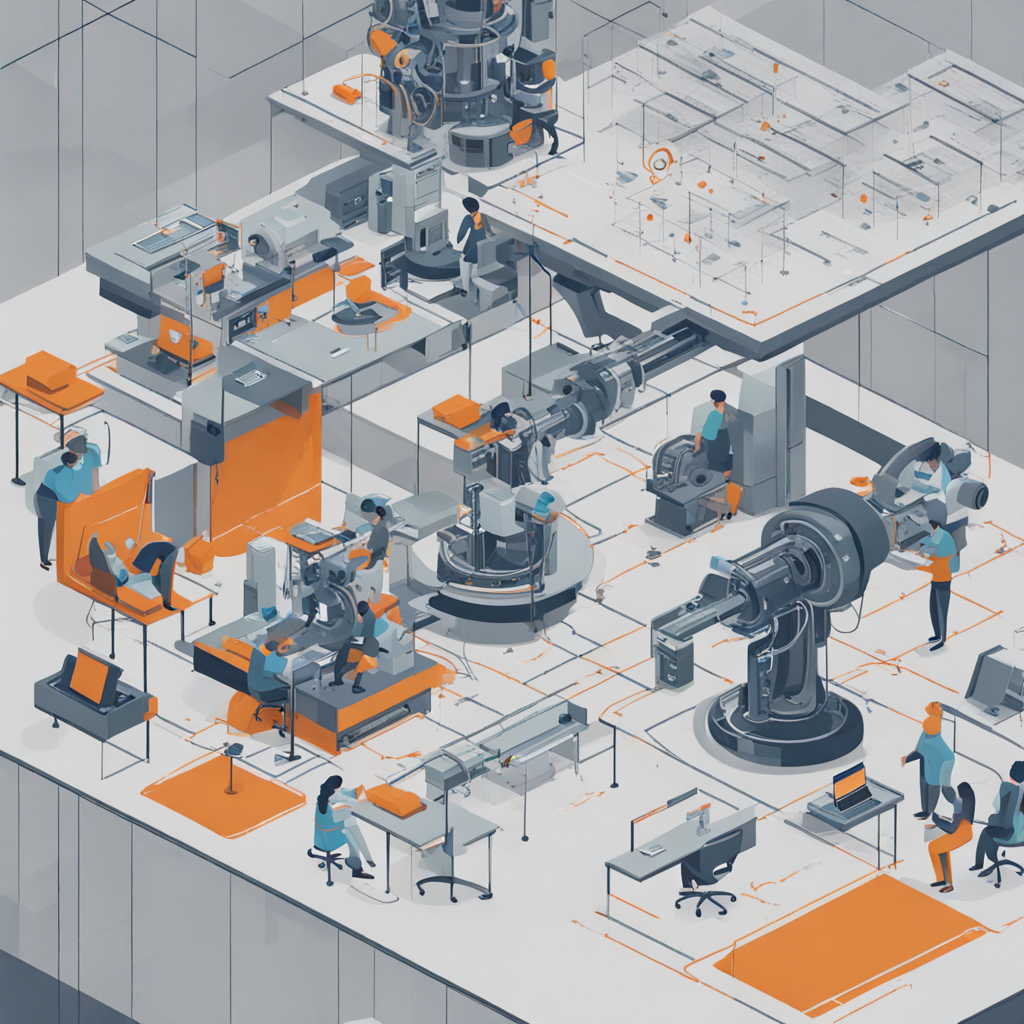

He noted that developers play a critical role in using AI to meet industry needs, both now and in the future. Intel believes developers should be free to choose their software and hardware so that AI is usable by everyone.

According to Lavender, the developer community is the catalyst that helps industries use AI to meet their diverse needs, both now and in the future. Everyone should have access to AI and be able to deploy it responsibly. If developers are restricted in their choice of hardware and software, the range of use cases for global-scale AI adoption will be constrained, and so will the societal value those use cases can deliver.

Intel wants to enable developers to bring AI everywhere by offering tools that simplify the creation of secure AI applications and lower the investment needed to maintain and scale those solutions.

On the GPU front, Lavender stated that the Aurora supercomputer at Argonne National Laboratory will feature 63,744 Intel Max Series GPUs, making it the largest GPU cluster in the world.

In his keynote speech, Lavender emphasized the importance of end-to-end security to Intel. This includes Intel’s Transparent Supply Chain, which verifies the integrity of hardware and firmware, and confidential computing, which protects sensitive data in memory.

On a panel yesterday, Lavender cautioned that phishing scammers will soon be able to set up a Zoom call in which someone pretending to be you, and speaking in your voice, asks your parents to send money right away to a bank account. Your parents wouldn’t be able to tell the difference, he said. That is the obvious downside.

On the plus side, according to Pat Gelsinger, CEO of Intel, PCs will have enough edge computing power for AI to be processed on a single device or small group of devices. Additionally, they may enable an AI assistant to instantly access and look through all of your calls and recordings to assist you whenever you need it.

Intel has announced the general availability of a new attestation service, the first offering in its Intel Trust Authority portfolio of security software and services. To improve security in multi-cloud, hybrid, on-premises, and edge settings, the service provides an independent assessment of trusted execution environment integrity and policy enforcement.

Addressing challenges

According to Lavender, Intel is aware of the challenges organizations encounter when implementing AI solutions, such as a lack of expertise, resource constraints, and expensive proprietary platforms. He said Intel is committed to promoting an open ecosystem that enables simple deployment across multiple architectures in order to address these difficulties.

As a founding member of the Unified Acceleration (UXL) Foundation, which is hosted by the Linux Foundation, Intel seeks to simplify the development of applications for cross-platform deployment. Intel is contributing its oneAPI programming model, which lets code be written once and run on a variety of computing architectures, to the UXL Foundation.

Additionally, Intel is working with Red Hat, Canonical, and SUSE to create enterprise software distributions that are optimized for Intel hardware. This partnership is meant to deliver optimized performance on the newest Intel architectures. Intel also continues to contribute to AI and machine learning frameworks and tools such as PyTorch and TensorFlow.

To help developers scale performance efficiently, Intel Granulate is releasing Auto Pilot for Kubernetes pod resource rightsizing. This capacity-optimization tool gives Kubernetes users automatic, continuous capacity management recommendations. Intel Granulate is also adding autonomous orchestration capabilities for Databricks workloads, which the company says yield considerable cost savings and faster processing.
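To make the idea of resource rightsizing concrete, here is a minimal sketch of one common heuristic: recommend a CPU request from a high percentile of observed usage plus some headroom. This is purely illustrative and is not Intel Granulate's algorithm, which the announcement does not describe. The function name, the 95th-percentile default, and the 15% headroom are all assumptions.

```python
def recommend_cpu_request(samples_millicores, percentile=95, headroom_pct=15):
    """Suggest a CPU request (in millicores) from observed usage samples.

    Hypothetical heuristic for illustration only: take the nearest-rank
    percentile of the samples, then add a fixed percentage of headroom.
    """
    if not samples_millicores:
        raise ValueError("at least one usage sample is required")
    ordered = sorted(samples_millicores)
    # Nearest-rank percentile, computed with integer ceiling division.
    rank = max(1, -(-percentile * len(ordered) // 100))
    baseline = ordered[rank - 1]
    # Add headroom and round up to a whole millicore.
    return -(-baseline * (100 + headroom_pct) // 100)


# Twenty usage samples from 100m to 290m in steps of 10m.
usage = list(range(100, 300, 10))
print(recommend_cpu_request(usage))
```

A production tool would typically also consider memory, usage trends over time, and limits as well as requests; this sketch covers only a single static recommendation.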

Because AI models, data, and platforms must be protected against tampering and theft, Intel intends to develop an application-specific integrated circuit (ASIC) accelerator to reduce the performance overhead associated with fully homomorphic encryption (FHE). Intel also announced the beta release of an encrypted computing software toolkit, which will allow researchers, developers, and user communities to experiment with FHE coding.
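For readers unfamiliar with the idea behind homomorphic encryption, the sketch below shows computation on encrypted data using the Paillier cryptosystem. Note the substitution: Paillier is only additively homomorphic, not fully homomorphic, and it has no connection to Intel's toolkit or planned accelerator. It is used here because it fits in a few lines. The function names are illustrative, and the tiny primes are an assumption made for readability; they offer no real security.

```python
import math
import secrets


def keygen(p, q):
    """Build a toy Paillier key pair from two primes (insecurely small here)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; requires Python 3.8+
    return n, (lam, mu)


def encrypt(n, message):
    """Encrypt an integer 0 <= message < n under public key n (with g = n + 1)."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, ciphertext):
    """Recover the plaintext integer from a ciphertext."""
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Combine two ciphertexts so the result decrypts to the sum of the plaintexts."""
    return (c1 * c2) % (n * n)


n, private_key = keygen(1009, 1013)
encrypted_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, private_key, encrypted_sum))  # 42, computed without decrypting the inputs
```

Fully homomorphic schemes extend this property to both addition and multiplication on ciphertexts, which is far more computationally expensive and is the overhead a hardware accelerator would aim to reduce.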