Machine-learning systems could help flag hateful, threatening or offensive language

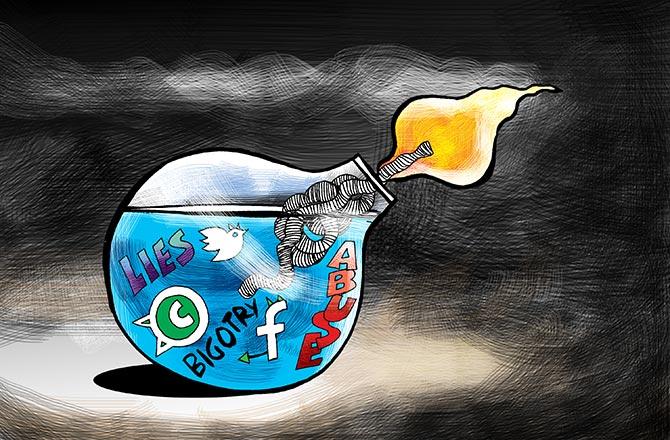

Social platforms large and small are struggling to keep their communities safe from hate speech, extremist content, harassment and misinformation. Most recently, far-right agitators posted openly about plans to storm the U.S. Capitol before doing just that on January 6. One solution might be AI: developing algorithms to detect and alert us to toxic and inflammatory comments and flag them for removal. But such systems face big challenges.

The prevalence of hateful or offensive language online has been growing rapidly in recent years, and the problem is now rampant. In some cases, toxic comments online have even resulted in real-life violence, from religious nationalism in Myanmar to neo-Nazi propaganda in the U.S. Social media platforms, relying on thousands of human reviewers, are struggling to moderate the ever-increasing volume of harmful content. In 2019, it was reported that Facebook moderators were at risk of suffering from PTSD as a result of repeated exposure to such distressing content. Outsourcing this work to machine learning can help manage the rising volumes of harmful content, while limiting human exposure to it. Indeed, many tech giants have been incorporating algorithms into their content moderation for years.

One such example is Google’s Jigsaw, a company focusing on making the internet safer. In 2017, it helped create Conversation AI, a collaborative research project aiming to detect toxic comments online. However, a tool produced by that project, called Perspective, faced substantial criticism. One common complaint was that it created a general “toxicity score” that wasn’t flexible enough to serve the varying needs of different platforms. Some Web sites, for instance, might require detection of threats but not profanity, while others might have the opposite requirements.

Following these concerns, the Conversation AI team invited developers to train their own toxicity-detection algorithms and enter them into three competitions (one per year) hosted on Kaggle, a Google subsidiary known for its community of machine-learning practitioners, public data sets and challenges. To help train the AI models, Conversation AI released two public data sets containing more than one million toxic and non-toxic comments from Wikipedia and a service called Civil Comments. The comments were rated on toxicity by annotators, with a “Very Toxic” label indicating “a very hateful, aggressive, or disrespectful comment that is very likely to make you leave a discussion or give up on sharing your perspective,” and a “Toxic” label meaning “a rude, disrespectful, or unreasonable comment that is somewhat likely to make you leave a discussion or give up on sharing your perspective.” Some comments were seen by many more than 10 annotators (up to thousands), due to sampling and strategies used to enforce rater accuracy.
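To illustrate how several annotators' ratings can become a single training target, here is a minimal Python sketch. It assumes one simple aggregation rule, the fraction of annotators who chose “Toxic” or “Very Toxic”; the data sets' actual aggregation procedure is not described here. The “Not Toxic” rating name and the function `toxicity_fraction` are hypothetical, introduced only for illustration.

```python
from collections import Counter

# Rating names follow the annotation labels quoted above.
# "Not Toxic" is an assumed name for the remaining option.
TOXIC_RATINGS = {"Very Toxic", "Toxic"}


def toxicity_fraction(ratings):
    """Return the share of annotators who rated a comment Toxic or Very Toxic.

    This is one simple aggregation rule (an assumption), not necessarily
    the one used to build the public data sets.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    counts = Counter(ratings)
    toxic_votes = sum(counts[name] for name in TOXIC_RATINGS)
    return toxic_votes / len(ratings)


# Ten made-up ratings for a single comment.
ratings = [
    "Toxic", "Not Toxic", "Very Toxic", "Not Toxic", "Not Toxic",
    "Toxic", "Not Toxic", "Not Toxic", "Not Toxic", "Not Toxic",
]
print(toxicity_fraction(ratings))
```

A fractional target of this kind keeps some of the disagreement among raters, rather than forcing every comment into a hard yes-or-no label.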

The goal of the first Jigsaw challenge was to build a multilabel toxic comment classification model with labels such as “toxic,” “severe toxic,” “threat,” “insult,” “obscene” and “identity hate.” The second and third challenges focused on more specific limitations of the Perspective API: minimizing unintended bias toward predefined identity groups and training multilingual models on English-only data.
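In a multilabel setup, each label gets its own independent yes-or-no decision, so a single comment can be both an “insult” and “obscene,” or carry no label at all. The Python sketch below shows the idea under stated assumptions: the raw scores are made up, the function `predict_labels` and the 0.5 default threshold are hypothetical, and none of this is the code used by any competition entry.

```python
import math

# Label names follow the first Jigsaw challenge as listed above.
LABELS = ["toxic", "severe_toxic", "threat", "insult", "obscene", "identity_hate"]


def sigmoid(x):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_labels(logits, thresholds=None, default_threshold=0.5):
    """Turn one raw score per label into probabilities and a list of active labels.

    Each label is scored independently, so the probabilities need not sum to 1.
    `thresholds` optionally overrides the cut-off for individual labels.
    """
    if len(logits) != len(LABELS):
        raise ValueError("expected one score per label")
    thresholds = thresholds or {}
    probs = {label: sigmoid(z) for label, z in zip(LABELS, logits)}
    active = [
        label
        for label, p in probs.items()
        if p >= thresholds.get(label, default_threshold)
    ]
    return probs, active


# Made-up raw model scores for one comment.
example_logits = [2.0, -3.0, -4.0, 1.0, 0.5, -2.0]

probs, active = predict_labels(example_logits)
print(active)

# A platform that tolerates profanity could raise the bar for "obscene" only.
_, lenient = predict_labels(example_logits, thresholds={"obscene": 0.9})
print(lenient)
```

Per-label thresholds are one way to address the complaint about a single toxicity score: each platform can decide which categories matter to it and how strict to be about each.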

Although the challenges led to some clever ways of improving toxic language models, our team at Unitary, a content-moderation AI company, found that none of the trained models had been released publicly.

For that reason, we decided to take inspiration from the best Kaggle solutions and train our own algorithms with the specific intent of releasing them publicly. To do so, we relied on existing “transformer” models for natural language processing, such as Google’s BERT. Many such models are accessible in an open-source transformers library.
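As a rough illustration of what building on the transformers library can look like, the Python sketch below creates a very small, randomly initialized BERT classifier with six output labels. It is not Unitary's training code: the tiny model sizes, the toy vocabulary and the helper names `build_tiny_classifier` and `score_batch` are assumptions chosen so the example needs no downloaded weights. It does require the `torch` and `transformers` packages.

```python
# Label names follow the first Jigsaw challenge.
LABELS = ["toxic", "severe_toxic", "threat", "insult", "obscene", "identity_hate"]


def build_tiny_classifier(num_labels=len(LABELS)):
    """Build a small, untrained BERT classifier (toy sizes, no download)."""
    # Imported here so the heavy dependency is only needed when a model is built.
    from transformers import BertConfig, BertForSequenceClassification

    config = BertConfig(
        vocab_size=1000,           # toy vocabulary size (assumption)
        hidden_size=32,            # far smaller than a real BERT
        num_hidden_layers=2,
        num_attention_heads=2,
        intermediate_size=64,
        num_labels=num_labels,     # one output score per label
        # Tells the model to use a per-label binary loss when trained with labels.
        problem_type="multi_label_classification",
    )
    return BertForSequenceClassification(config)


def score_batch(model, input_ids):
    """Return per-label probabilities for a batch of token-id sequences."""
    import torch

    model.eval()
    with torch.no_grad():
        logits = model(input_ids=input_ids).logits
    # Independent sigmoid per label, since a comment can carry several labels.
    return torch.sigmoid(logits)
```

Calling `score_batch(build_tiny_classifier(), torch.randint(0, 1000, (2, 16)))` returns a tensor with one row per input sequence and one column per label. Because the weights are random, the scores are meaningless until the model is trained; a real system would more likely start from pretrained weights, for example with `AutoModelForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=6, problem_type="multi_label_classification")` and a matching tokenizer.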