
To get started, install the `wikipedia` library:

```bash
pip3 install wikipedia
```

Open up a Python interactive shell or an empty file and follow along.

Let’s get a summary of the Python programming language:

```python
import wikipedia

# print the summary of what Python is
print(wikipedia.summary("Python Programming Language"))
```

You can also limit the summary to a given number of sentences:

```
In [2]: wikipedia.summary("Python programming languag", sentences=2)
Out[2]: "Python is an interpreted, high-level, general-purpose programming language. Created by Guido van Rossum and first released in 1991, Python's design philosophy emphasizes code readability with its notable use of significant whitespace."
```

Notice that I misspelled the query intentionally; it still gives me an accurate result.
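The typo is not fixed on your machine: with its default `auto_suggest=True`, `summary()` first runs a Wikipedia search for your query and then uses the suggested title (or the top search result). If you want to play with the idea offline, the sketch below is a rough stand-in that uses a different technique, plain string similarity from the standard library's `difflib`. The `KNOWN_TITLES` list and the `closest_title` helper are hypothetical and are not part of the `wikipedia` package.

```python
import difflib

# Hypothetical candidate titles. The wikipedia package does NOT do this:
# it asks Wikipedia's search API for a suggestion instead of comparing
# against a local list.
KNOWN_TITLES = [
    "Python (programming language)",
    "Monty Python",
    "Pythonidae",
    "Java (programming language)",
]


def closest_title(query, titles=KNOWN_TITLES, cutoff=0.6):
    """Return the title most similar to query, or None if none reaches cutoff."""
    matches = difflib.get_close_matches(query, titles, n=1, cutoff=cutoff)
    return matches[0] if matches else None


print(closest_title("Python programming languag"))  # Python (programming language)
```

Passing `auto_suggest=False` to `summary()` skips the search step, which can help when the suggested title points to a different page than the one you meant.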

To search Wikipedia for a term, use `wikipedia.search()`:

```
In [3]: result = wikipedia.search("Neural networks")

In [4]: print(result)
['Neural network', 'Artificial neural network', 'Convolutional neural network', 'Recurrent neural network', 'Rectifier (neural networks)', 'Feedforward neural network', 'Neural circuit', 'Quantum neural network', 'Dropout (neural networks)', 'Types of artificial neural networks']
```

This returned a list of related page titles. Let’s get the whole page for “Neural network”, which is `result[0]`:

# get the page: Neural network

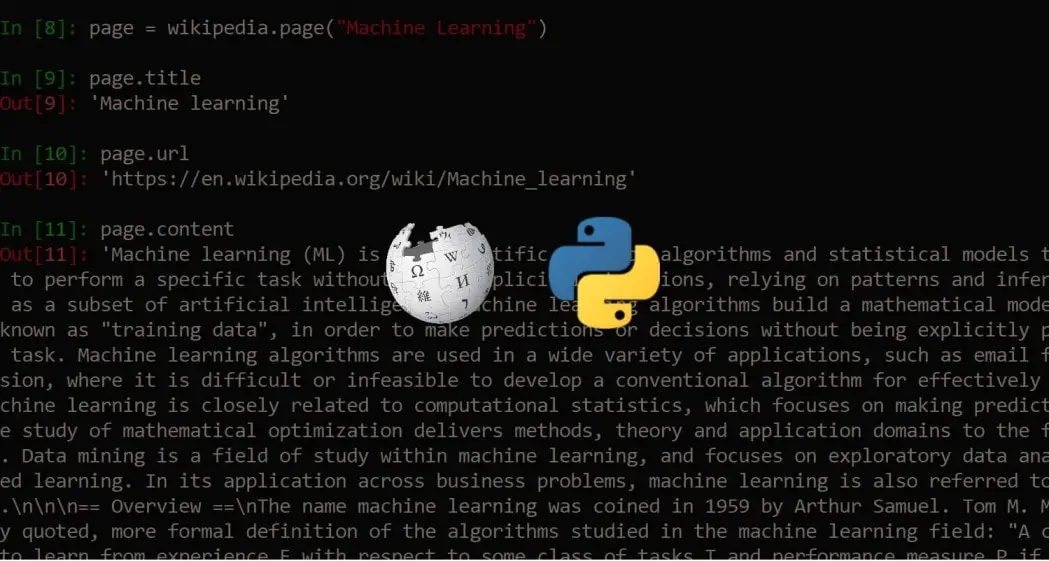

page = wikipedia.page(result[0])Extracting the title:

```python
# get the title of the page
title = page.title
```

Getting all the categories of that Wikipedia page:

```python
# get the categories of the page
categories = page.categories
```

Extracting the page text with all HTML tags removed (the library does this automatically):

```python
# get the whole Wikipedia page text (content)
content = page.content
```

All the links (titles of the Wikipedia pages this page links to):

```python
# get all the links in the page
links = page.links
```

The references (URLs of the page’s external links):

```python
# get the page references
references = page.references
```

Finally, the summary:

```python
# summary
summary = page.summary
```

Let’s print them out:

```python
# print info
print("Page content:\n", content, "\n")
print("Page title:", title, "\n")
print("Categories:", categories, "\n")
print("Links:", links, "\n")
print("References:", references, "\n")
print("Summary:", summary, "\n")
```

Try it out!
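The `content` string is plain text, and section headings show up in it as lines such as `== History ==` (subsections use more `=` signs). The library’s `page.section("History")` relies on these markers to return one section by title. If you want every section at once, here is a minimal sketch that splits the text on those heading lines, assuming that heading format. `split_sections` is a hypothetical helper, not part of the `wikipedia` package, and the sample text is made up.

```python
import re

# Assumed heading format: a line like "== History ==" or "=== Subsection ===".
HEADING_RE = re.compile(r"^(={2,})[ \t]*(.+?)[ \t]*\1[ \t]*$", re.MULTILINE)


def split_sections(content):
    """Split plain-text page content into a list of (heading, text) pairs.

    Text before the first heading is labeled "Introduction". Headings whose
    body is empty (for example, a heading followed directly by a subheading)
    are dropped.
    """
    sections = []
    heading = "Introduction"
    start = 0
    for match in HEADING_RE.finditer(content):
        # Close off the previous section where this heading line begins.
        sections.append((heading, content[start:match.start()].strip()))
        heading = match.group(2)
        start = match.end()
    # Whatever follows the last heading belongs to the last section.
    sections.append((heading, content[start:].strip()))
    return [(h, text) for h, text in sections if text]


# Made-up sample in the same shape as page.content.
sample = (
    "Intro paragraph.\n\n\n"
    "== Overview ==\n"
    "Overview text.\n\n\n"
    "== History ==\n"
    "History text.\n"
)

for heading, text in split_sections(sample):
    print(heading, "->", text)
# Introduction -> Intro paragraph.
# Overview -> Overview text.
# History -> History text.
```

With a real page you would pass `page.content` instead of the sample string.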

Alright, we are done! This was a brief introduction to extracting information from Wikipedia in Python. It can be helpful if you want to automatically collect data for language models, build a question-answering chatbot, make a wrapper application around this library, and much more. The possibilities are endless.

This article has been published from the source link without modifications to the text. Only the headline has been changed.
