Robotics is a classic application of artificial intelligence, and it has recently received a boost from the fast-growing and fashionable field of generative AI. Examples of generative programs include OpenAI's large language models, which can interact with natural-language statements.

For instance, this year Google's DeepMind division unveiled RT-2, a large language model that can be given an image and a command and then spit out both a plan of action and the coordinates needed to carry out the command.

However, generative programs are limited in what they can do. While they can handle "high-level" tasks such as routing a robot to a destination, they cannot perform "low-level" fine-motor tasks such as manipulating a robot's joints.

New Nvidia research released this month suggests that language models may be getting closer to bridging that gap. A program called Eureka uses language models to set goals that can then be used to direct robots at a low level, including getting them to perform fine-motor tasks such as manipulating objects with robotic hands.

For now, Eureka controls only simulated robots; it cannot yet control a physical robot in the real world. It is therefore likely to be just the first of many attempts to bridge the gap.

In the paper "Eureka: Human-Level Reward Design via Coding Large Language Models," published this month on the arXiv pre-print server, lead author Yecheng Jason Ma and colleagues at Nvidia, the University of Pennsylvania, Caltech, and the University of Texas at Austin state that harnessing [large language models] "to learn complex low-level manipulation tasks, such as dexterous pen spinning, remains an open problem."

Ma and colleagues' observation matches the view of seasoned robotics researchers. According to Sergey Levine, an associate professor in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, language models are not a good fit for the "last inch," the part of the task where the robot actually touches objects in the real world, because that part "is mostly bereft of semantics."

It might be possible to fine-tune a language model to predict grasps as well, Levine said, although it is unclear whether that would truly help. "After all, what does language tell you about where to put your fingers on an object?" he asked. Language may provide some information, he added, "but maybe not enough to really change anything."

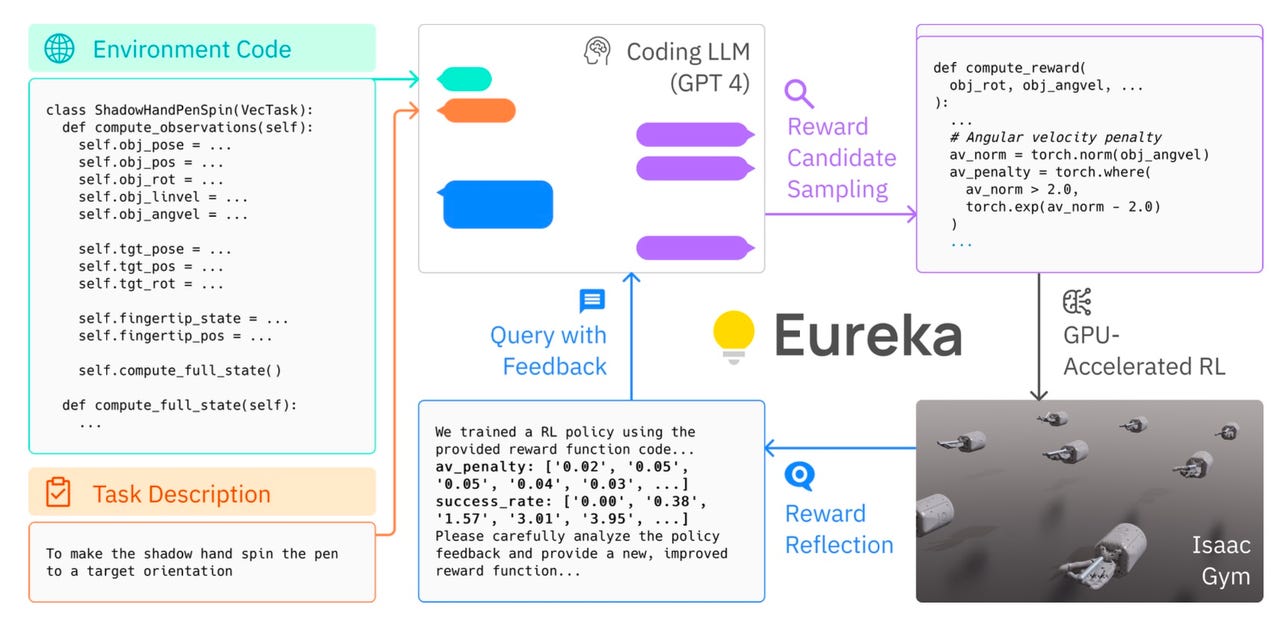

The Eureka paper tackles the problem indirectly. Rather than giving instructions to the simulated robot, it uses the language model to write "rewards," scoring functions that describe the goal states the robot should aim for. Rewards are a well-established mechanism in reinforcement learning, the branch of machine learning that Berkeley's Levine and other roboticists use to train robots.
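To make the idea of a reward concrete: a reward function maps the simulator's state to a number that the learning algorithm tries to maximize. The sketch below is a hypothetical, hand-written reward for a drawer-opening task. The state variables (`hand_pos`, `handle_pos`, `drawer_opening`), the target distance, and the weights are all invented for illustration and are not taken from the Eureka paper; Eureka's aim is to have the language model write functions of this general kind as code.

```python
import math


def drawer_reward(hand_pos, handle_pos, drawer_opening, target_opening=0.3):
    """Hypothetical dense reward for a simulated drawer-opening task.

    hand_pos, handle_pos: (x, y, z) positions in metres (assumed inputs).
    drawer_opening: how far the drawer is currently pulled out, in metres.
    target_opening: opening distance treated as success (an arbitrary choice).
    Returns the total reward plus a dict of its individual terms.
    """
    # Term 1: encourage the gripper to approach the handle (1.0 when touching).
    dist = math.dist(hand_pos, handle_pos)
    reach = 1.0 / (1.0 + 10.0 * dist)
    # Term 2: reward progress towards the target opening, capped at 1.0.
    progress = min(drawer_opening / target_opening, 1.0)
    # Term 3: one-off bonus once the drawer is open far enough.
    success = 1.0 if drawer_opening >= target_opening else 0.0
    # Arbitrary illustrative weights; choosing these well is the hard part.
    total = 0.5 * reach + 1.0 * progress + 2.0 * success
    return total, {"reach": reach, "progress": progress, "success": success}
```

Returning the individual terms alongside the total makes it easier to see which part of a reward is driving the robot's behaviour, which is exactly the kind of information a designer, human or machine, needs when refining it.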

Ma and colleagues hypothesize that a large language model can be better than a human AI programmer at designing those reinforcement learning rewards.

In an approach called reward evolution, the programmer describes the problem in detail, gives GPT-4 information about the robotic simulation (for example, environmental constraints on what the robot can do), lists all of the rewards tried so far, and asks GPT-4 to refine the solution. GPT-4 then writes new rewards, which are tested in simulation, and the cycle repeats.
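The loop described above can be sketched in Python. This is a toy under heavy assumptions: `propose_rewards` stands in for GPT-4 and merely perturbs numeric weights instead of writing new reward code, and `train_and_evaluate` stands in for a full reinforcement learning run using a made-up scoring formula. Both names are hypothetical. Only the overall shape follows the description: propose candidates, test each one, keep a record of every reward tried, and refine from the best so far.

```python
import random


def propose_rewards(best, history, n, rng):
    """Hypothetical stand-in for GPT-4: return n new candidate rewards.

    In Eureka the language model reads the task description, the simulation
    details and the record of earlier rewards (`history`), then writes new
    reward code. This toy ignores `history`, treats a 'reward' as a dict of
    term weights, and randomly perturbs the best one found so far.
    """
    return [
        {k: max(0.0, w + rng.gauss(0.0, 0.5)) for k, w in best.items()}
        for _ in range(n)
    ]


def train_and_evaluate(weights):
    """Hypothetical stand-in for training a policy with this reward.

    Returns an invented task score in (0, 1] that peaks at an arbitrary
    'ideal' weighting; a real system would train and test in simulation.
    """
    ideal = {"reach": 0.5, "progress": 1.0, "success": 2.0}
    error = sum((weights[k] - ideal[k]) ** 2 for k in ideal)
    return 1.0 / (1.0 + error)


def reward_evolution(initial, iterations=5, samples=8, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    best, best_score = initial, train_and_evaluate(initial)
    history = [(initial, best_score)]  # every reward tried, with its score
    for _ in range(iterations):
        for candidate in propose_rewards(best, history, samples, rng):
            score = train_and_evaluate(candidate)
            history.append((candidate, score))
            if score > best_score:  # keep the best reward found so far
                best, best_score = candidate, score
    return best, best_score, history
```

For example, `reward_evolution({"reach": 1.0, "progress": 1.0, "success": 1.0})` runs five rounds of eight candidates each and returns the best weighting, its score, and the full list of rewards tried.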

The program’s name, Eureka, stands for Evolution-driven Universal REward Kit for Agents.

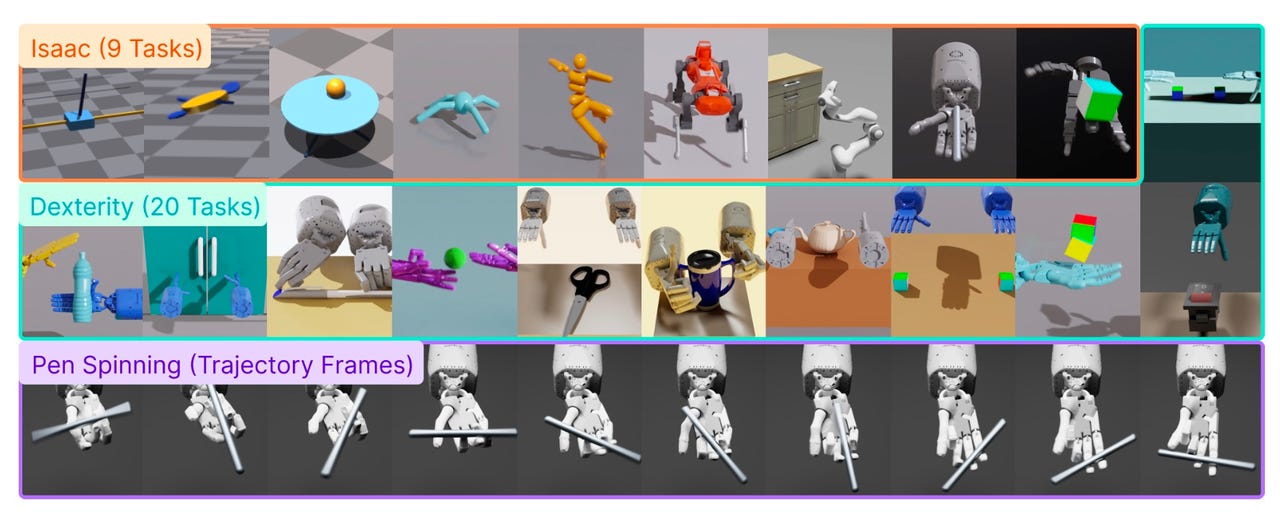

Ma and colleagues tested their invention on numerous simulated tasks, including having a robot arm open a drawer. According to them, Eureka achieves human-level reward design across a diverse suite of 29 open-source reinforcement learning environments. These environments cover 10 different robot morphologies, including a quadruped, a quadcopter, a biped, a manipulator, and several dexterous hands.

Without any task-specific prompting or reward templates, Eureka autonomously generates rewards that outperform expert human rewards on 83% of the tasks, with an average normalized improvement of 52%, they report.
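Results like these are reported on a normalized scale so that tasks with very different raw scores can be averaged. The sketch below assumes a normalization of the form (method − sparse) / |human − sparse|, where "sparse" is a baseline trained only with a bare success signal, so 0 means no better than that baseline and 1 means human level; it also assumes "improvement" means the normalized score minus 1. Both are assumptions about the metric, and the per-task numbers are invented, not taken from the paper.

```python
def human_normalized_score(method, human, sparse):
    # Assumed normalization: 0.0 = sparse-reward baseline, 1.0 = human level.
    return (method - sparse) / abs(human - sparse)


# Hypothetical per-task scores as (eureka, human, sparse); NOT from the paper.
tasks = [(0.75, 0.5, 0.0), (0.25, 0.5, 0.0), (1.0, 0.5, 0.0)]

scores = [human_normalized_score(*t) for t in tasks]
# Fraction of tasks where the generated reward beats the human one.
beats_human = sum(s > 1.0 for s in scores) / len(scores)
# Average improvement over human level (assumed definition: score - 1).
avg_improvement = sum(s - 1.0 for s in scores) / len(scores)
```

With these made-up numbers the generated reward wins on two of three tasks and the average improvement is about 33%, even though it loses badly on the second task; an average can hide individual failures.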

One of their more impressive results is getting a simulated robot hand to spin a pen like a bored student in class. The authors study pen spinning, in which a five-fingered hand must rapidly spin a pen in predefined configurations for as many cycles as possible. To achieve this, they combine Eureka with curriculum learning, a machine learning technique dating back more than a decade that trains a model on simpler versions of a task before moving on to harder ones.
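The core idea of curriculum learning can be shown with a toy model. The `success_rate` formula, the stage difficulties, and the learning rule below are all invented for illustration and have nothing to do with the actual pen-spinning setup. The point is that an agent that learns only from occasional successes can get stuck on a task that is too hard from the start, yet can reach it by mastering easier stages first.

```python
def success_rate(skill, difficulty):
    # Hypothetical: success rises with skill and falls with difficulty,
    # clipped to the range [0, 1].
    return max(0.0, min(1.0, skill - difficulty + 0.5))


def curriculum_train(stages, threshold=0.8, max_steps=1000, lr=0.05):
    """Train on each stage in order until success reaches `threshold`."""
    skill, log = 0.0, []
    for difficulty in stages:  # stages are ordered from easiest to hardest
        steps = 0
        while success_rate(skill, difficulty) < threshold and steps < max_steps:
            # The agent only improves in proportion to how often it succeeds,
            # so on a stage that is far too hard it learns nothing.
            skill += lr * success_rate(skill, difficulty)
            steps += 1
        log.append((difficulty, steps))
    return skill, log


skill, log = curriculum_train([0.0, 0.25, 0.5, 0.75, 1.0])
```

In this toy, the staged run ends with a success rate of at least 0.8 on the hardest stage, whereas `curriculum_train([1.0])`, which starts directly on the hardest stage, leaves `skill` at 0.0 because the agent never succeeds and so never learns.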

They report demonstrating, for the first time, rapid pen-spinning skills on a simulated anthropomorphic Shadow Hand.

Surprisingly, the authors also find that when they combine Eureka rewards with human rewards, the combination outperforms both human and Eureka rewards on their own. They speculate that this is because humans hold one piece of the puzzle, knowledge of which state variables are relevant, that Eureka lacks.

Human designers, they write, generally know which state variables are relevant but are less skilled at designing rewards that use them. This makes intuitive sense: selecting the relevant state variables for a reward function mainly requires common-sense reasoning, whereas designing the reward itself requires specialized training and experience in reinforcement learning.

This suggests a potential collaboration between humans and AI, similar to GitHub Copilot and other assistant apps. Taken as a whole, the authors write, these findings highlight Eureka's potential as a reward assistant, complementing human designers' knowledge of useful state variables and making up for their limited expertise in reward design.