When diagnosing brain tumors, biopsies are often the first port of call. Surgeons remove a thin sample of tissue from the tumor and examine it under a microscope for signs of disease. However, not only are biopsies highly invasive, but the samples obtained represent only a fraction of the overall tumor site. MRI offers a less intrusive approach, but radiologists must manually delineate the tumor before it can be classified, which is time-consuming. Now, a team of researchers in the United States has developed a convolutional neural network (CNN) that can classify numerous types of intracranial tumors without the need for a scalpel. The model classifies tumors on MRI scans based on hierarchical features such as location and morphology, and it did so accurately without any manual interaction.

“This network is the first step toward developing an artificial intelligence-augmented radiology workflow that can support image interpretation by providing quantitative information and statistics,” says first author Satrajit Chakrabarty.

Predicting tumor type

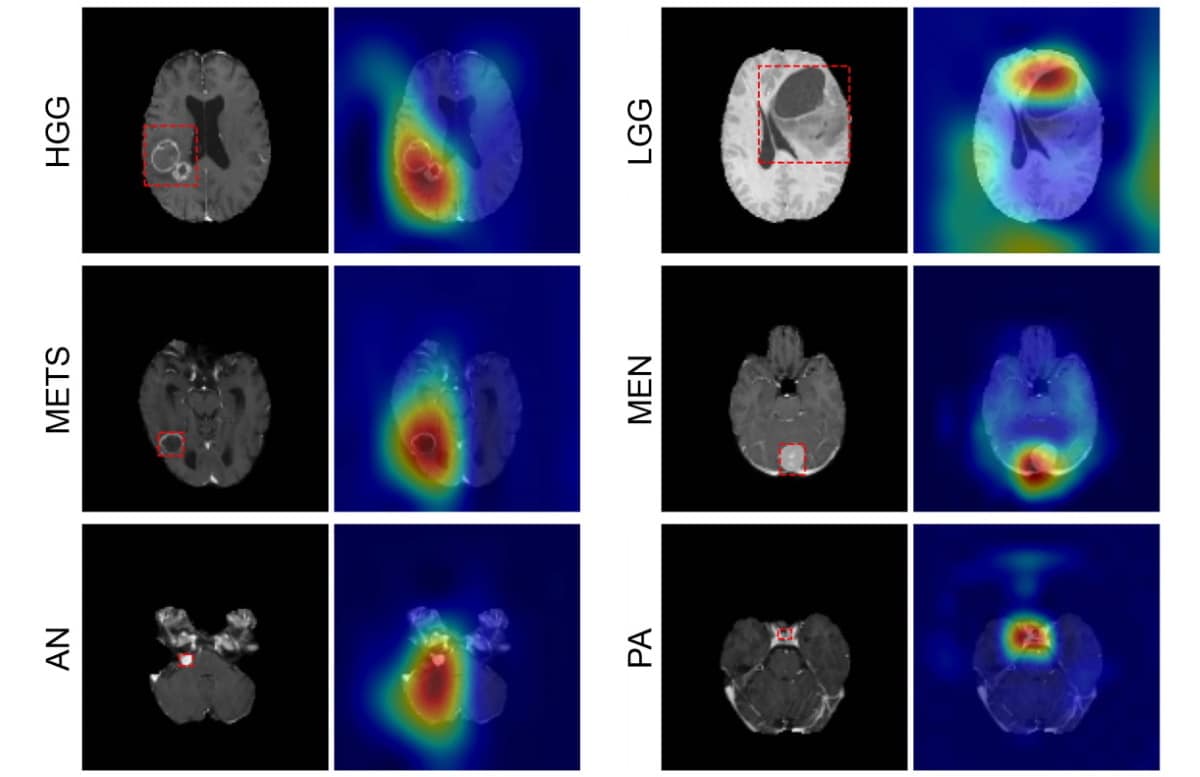

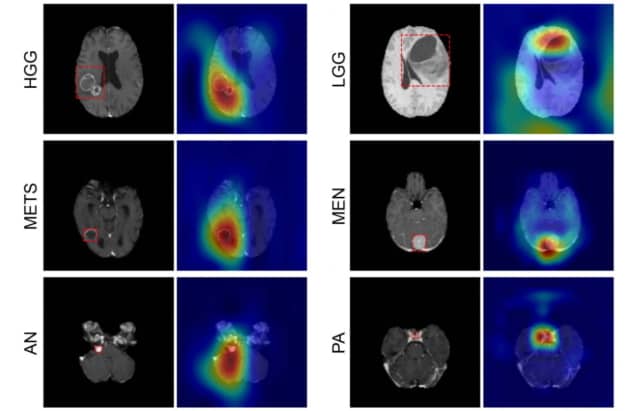

The CNN can detect six common types of intracranial tumor: high- and low-grade gliomas, meningiomas, pituitary adenomas, acoustic neuromas and brain metastases. Writing in Radiology: Artificial Intelligence, the team of Aristeidis Sotiras and Daniel Marcus at Washington University School of Medicine (WUSM) in St. Louis says that theirs is the first neural network to determine tumor class directly from a 3D MRI volume and to detect the absence of a tumor. To evaluate their CNN, the researchers created two multi-institutional datasets of pre-operative, post-contrast MRI scans, drawing on four publicly available databases as well as data obtained at WUSM.
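Detecting the absence of a tumor can be framed as one more output class alongside the six tumor types. The sketch below is a minimal illustration that assumes a standard softmax classification head (the article does not describe the network's output layer): it turns seven raw scores for a single 3D volume into a predicted label, with "healthy" as one of the options. The class names, their order and the example scores are hypothetical.

```python
import math

# Hypothetical label set: the six tumor classes plus a "healthy" class.
# Names and order are illustrative, not taken from the study's code.
CLASSES = [
    "high-grade glioma",
    "low-grade glioma",
    "meningioma",
    "pituitary adenoma",
    "acoustic neuroma",
    "metastasis",
    "healthy",
]


def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(logits):
    """Return the most probable class and its probability.

    `logits` stands in for the final-layer output of a hypothetical CNN
    for one 3D MRI volume: one score per class in CLASSES.
    """
    if len(logits) != len(CLASSES):
        raise ValueError("expected one score per class")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


# Made-up scores for one scan, where the "healthy" score is the largest.
label, p = predict_class([0.1, -0.3, 0.2, -1.0, -0.5, 0.0, 2.4])
print(label, round(p, 3))
```

Treating "healthy" as an ordinary class means the same argmax decision covers both "which tumor?" and "is there a tumor at all?".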

The first, the internal dataset, contained 1,757 scans in seven image classes: the six tumor classes and one healthy class. Of those scans, the team used 1,396 as training data to teach the CNN to differentiate between the classes, and the remaining 361 to test the model's performance (the internal test data). The CNN classified these test scans with an accuracy of 93.35%, as confirmed against the radiology report for each scan. The probability that a patient actually had the specific tumor type the CNN detected (rather than being healthy or having another type of tumor) ranged from 85% to 100% across the classes.
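These figures are standard confusion-matrix summaries. Overall accuracy is the fraction of test scans on the matrix diagonal, and the 85–100% range, as described, reads as the per-class positive predictive value (precision): of the scans assigned to a class, the share that truly belong to it. The split also adds up: 1,396 training scans plus 361 test scans gives the 1,757 internal scans, roughly 79% and 21%. A minimal sketch of both metrics follows; the three-class matrix is invented for illustration and is not the study's data.

```python
def accuracy(cm):
    """Fraction of scans on the diagonal (true class == predicted class).

    cm[i][j] counts scans whose true class is i and predicted class is j.
    """
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def ppv(cm, k):
    """Positive predictive value for class k: of all scans the model
    assigned to class k, the fraction that truly belong to class k."""
    predicted_k = sum(cm[i][k] for i in range(len(cm)))
    return cm[k][k] / predicted_k if predicted_k else float("nan")


# Toy 3-class confusion matrix (rows = truth, columns = prediction).
# These counts are invented for illustration, not the study's results.
toy_cm = [
    [18, 1, 1],   # true class 0
    [2, 15, 0],   # true class 1
    [0, 1, 12],   # true class 2
]
print(round(accuracy(toy_cm), 3))
print([round(ppv(toy_cm, k), 3) for k in range(3)])
```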

Few false negatives were observed across all image classes: the probability that patients who tested negative for a given class did not have that disease (or, for the healthy class, were not healthy) was 98% to 100%. The researchers then tested their model on a second, external dataset that contained only high- and low-grade gliomas. These scans were sourced separately from those in the internal dataset.
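The 98–100% figure, as described, reads as a per-class negative predictive value computed one-vs-rest: among scans the model did not assign to a given class, the share whose true class is also something else. A sketch under that reading, using an invented three-class confusion matrix rather than the study's results:

```python
def npv(cm, k):
    """Negative predictive value for class k (one-vs-rest).

    cm[i][j] counts scans whose true class is i and predicted class is j.
    Among scans NOT predicted as class k, return the fraction whose true
    class is also not k.
    """
    n = len(cm)
    # Every scan predicted as something other than k.
    predicted_not_k = sum(cm[i][j] for i in range(n) for j in range(n) if j != k)
    # Scans that truly are class k but were predicted as another class.
    false_negatives = sum(cm[k][j] for j in range(n) if j != k)
    true_negatives = predicted_not_k - false_negatives
    return true_negatives / predicted_not_k if predicted_not_k else float("nan")


# Toy 3-class confusion matrix (rows = truth, columns = prediction).
# These counts are invented for illustration, not the study's results.
toy_cm = [
    [18, 1, 1],
    [2, 15, 0],
    [0, 1, 12],
]
print([round(npv(toy_cm, k), 3) for k in range(3)])
```

A high NPV with few false negatives means a "not this class" verdict from the model is rarely wrong, which is the property the study highlights.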

“As deep-learning models are very sensitive to data, it has become standard to validate their performance on an independent dataset, obtained from a completely different source, to see how well they generalize [react to unseen data],” explains Chakrabarty.
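One common way to approximate this kind of independent validation when data are pooled is to hold out entire sources, so that no site contributes scans to both training and testing. The sketch below assumes a hypothetical record schema with a `source` field and invented site names; the study itself used a separately sourced external glioma dataset rather than a split like this.

```python
def split_by_source(records, held_out_sources):
    """Split scans so every held-out source appears only in the test set.

    records: list of dicts with a "source" key (hypothetical schema).
    held_out_sources: iterable of source names reserved for testing.
    """
    held = set(held_out_sources)
    train = [r for r in records if r["source"] not in held]
    test = [r for r in records if r["source"] in held]
    return train, test


# Invented records; site names and labels are illustrative only.
records = [
    {"id": 1, "source": "site_A", "label": "high-grade glioma"},
    {"id": 2, "source": "site_A", "label": "meningioma"},
    {"id": 3, "source": "site_B", "label": "healthy"},
    {"id": 4, "source": "site_C", "label": "low-grade glioma"},
    {"id": 5, "source": "site_C", "label": "high-grade glioma"},
]
train, test = split_by_source(records, ["site_C"])

# No source should appear on both sides of the split.
assert not ({r["source"] for r in train} & {r["source"] for r in test})
print(len(train), len(test))
```

Splitting by source rather than by individual scan prevents site-specific artifacts (scanner type, acquisition protocol) from leaking between training and test data, which would otherwise inflate the measured performance.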