“I’m a great believer that any tool that enhances communication has profound effects in terms of how people can learn from each other, and how they can achieve the kind of freedoms that they’re interested in.”

— Bill Gates

This chapter is about language. In particular, it is about the power of language in communication and the role of language in intelligent systems. The focus is on the two ways in which machines deal with language: understanding and generation. I also look at why machines need to use natural language to successfully partner with us in the workplace.

Understanding Language

The main aspect of intelligence separating humans from other animals is our use of language. We are unique in our ability to articulate complex ideas, explanations, and series of events as well as understand them when told to us by others. Thousands of other abilities also contribute to our intelligence, but the use of language as a means of communicating complicated ideas trumps them all.

For intelligent systems to partner with us in the workplace or elsewhere, they must genuinely understand us and then communicate what they are doing back to us in a way that we can comprehend.

Unsurprisingly, the one method proposed to test for intelligence in machines — the Turing Test — uses language at its core. The Turing Test is designed around an intelligent system’s ability to have a convincing conversation with us. Although passing the test does not require all that much more than we would expect of a dinner companion, it has become the gold standard for evaluating AI.

Our willingness to accept Siri and other personal assistants as intelligent is based almost entirely on their ability to interact with us using spoken language. The fact that their ability to do things for us is somewhat limited is far less important to us (or even noticeable to us) than the fact that they use language.

Processing Language

The goal of natural language understanding (NLU) systems is to figure out the meaning of language inputs: the words, sentences, stories, and so on. Systems aimed at this problem use a combination of three different kinds of information:

✓ Pragmatics: Contextual information such as lists or descriptions.

✓ Semantics: The meaning of words and how those meanings can be combined.

✓ Syntax: The structural relationships among types of language elements such as nouns, verbs, adjectives, prepositions, and phrases.

Navigating deep and shallow waters

Establishing the syntax rules that make up the grammar of a particular language is fairly straightforward. Semantics and pragmatics, however, are what make language understanding tricky.

“Tricky” is perhaps understating the challenge faced, in that the complexity related to these two issues forces a trade‐off when building language understanding systems. You can do either of the following:

✓ Build a system that is extremely narrow but fairly deep with regard to what it can understand

✓ Build a system that is broad but very shallow in what it can determine from the words it is given

A deep system understands beyond the information explicitly in the text. For example, being able to read “Bob hit a car because he was drinking coffee on the way to work,” and determining that the accident happened in the morning is an example of deep understanding. The time of day wasn’t stated, but people tend to drink coffee and start the workday in the morning. Here, syntax, semantics, and pragmatics are all working together.

However, most language processing systems encountered in both consumer and enterprise systems tend to be broad and shallow. For example, Siri doesn’t understand what you really mean when you talk with her but is able to identify some basic needs that you might be expressing.

Getting started with extraction, tagging, and sentiment analysis

Natural language processing (NLP) systems pull out specific pieces of information rather than figuring out how those pieces of information are connected. They focus on extracting the names of people, companies, and other organizations, tagging text as to the topic (for example, politics, finance, or sports), and evaluating sentiment. These systems tend to be used for analysis of news and for social media tracking of attitude and opinion. Combining entity extraction with sentiment assessment, these systems provide companies, politicians, and brands with a sense of what people are saying about them.

Extraction is usually the result of combining syntactic rules (proper names tend to be capitalized, names follow titles, and so on) with actual lists of the people, places, and things that can be recognized, including terms culled from Wikipedia and similar websites. Both topic tagging and sentiment analysis are accomplished by calculating the probability of documents falling into particular topic and sentiment categories based on the probabilities associated with the words within the documents.
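To make the two approaches above concrete, here is a minimal sketch in Python. It assumes a tiny hand-made entity list, a single title rule, and a four-document training set, all of which are hypothetical. The classifier uses naive Bayes with add-one smoothing, one common way of combining word probabilities, and not necessarily what any particular product does.

```python
import math
import re
from collections import Counter

# Hypothetical entity list; a real system would load far larger lists.
KNOWN_ENTITIES = {"Apple": "COMPANY", "Chicago": "PLACE"}
TITLES = ("Mr.", "Ms.", "Dr.")


def extract_entities(text):
    """Find entities by list lookup plus one syntactic rule about titles."""
    found = []
    tokens = text.split()
    for i, token in enumerate(tokens):
        word = token.strip(".,!?")
        if word in KNOWN_ENTITIES:
            found.append((word, KNOWN_ENTITIES[word]))
        # Rule: a capitalized word right after a title is treated as a person.
        elif i > 0 and tokens[i - 1] in TITLES and word[:1].isupper():
            found.append((word, "PERSON"))
    return found


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train(labeled_docs):
    """Count words per category from (text, category) pairs."""
    word_counts, doc_counts = {}, Counter()
    for text, category in labeled_docs:
        doc_counts[category] += 1
        word_counts.setdefault(category, Counter()).update(tokenize(text))
    return word_counts, doc_counts


def classify(text, word_counts, doc_counts):
    """Pick the category whose word probabilities best explain the text."""
    vocab = {w for counts in word_counts.values() for w in counts}
    total_docs = sum(doc_counts.values())
    scores = {}
    for category, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[category] / total_docs)
        for word in tokenize(text):
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        scores[category] = score
    return max(scores, key=scores.get)


# Illustrative training data, invented for this sketch.
model = train([
    ("great product, love it", "positive"),
    ("excellent service and great support", "positive"),
    ("terrible product, hate it", "negative"),
    ("awful service and poor support", "negative"),
])

print(extract_entities("Dr. Smith praised Apple in Chicago."))
print(classify("great support, love the service", *model))
```

The same `classify` function handles topic tagging if the training labels are topics such as politics, finance, or sports rather than sentiments.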

The language understanding systems associated with applications, while narrow in scope, provide us with the ability to articulate our needs directly to these systems. Because the systems themselves are narrow in scope, they provide the level of functionality we need. Siri and Watson don’t need to understand us when we ask them to do things that they simply cannot do.

Generating Language

While systems that understand language are limited at the time of writing, the level of understanding they provide is aimed directly at the systems they support. So the systems that we see entering the workplace can understand language well enough to work with us. The question now is whether they can explain themselves to us.

The goal of natural language generation (NLG) systems is to figure out how to best communicate what a system knows. The trick is figuring out exactly what the system is to say and how it should say it.

Unlike NLU, NLG systems start with a well‐controlled and unambiguous picture of the world rather than arbitrary pieces of text. Simple NLG systems can take the ideas they are given and transform them into language. This is what Siri and her sisters use to produce limited responses. The simple mapping of ideas to sentences is adequate for these environments.
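A simple mapping of ideas to sentences can be sketched with fill-in-the-blank templates. The idea types, slot names, and wording below are hypothetical and are not taken from any actual assistant.

```python
# Hypothetical templates: one sentence pattern per kind of idea.
TEMPLATES = {
    "weather": "It is {temperature} degrees and {condition} in {city}.",
    "timer_set": "Your timer is set for {minutes} minutes.",
}


def generate(idea):
    """Render a structured idea (a dict with a 'type' key) as a sentence."""
    template = TEMPLATES[idea["type"]]
    slots = {key: value for key, value in idea.items() if key != "type"}
    return template.format(**slots)


print(generate({"type": "weather", "temperature": 72,
                "condition": "sunny", "city": "Chicago"}))
# prints: It is 72 degrees and sunny in Chicago.
```

Because the input is already unambiguous, the system never has to decide what to say, only how to fill in the blanks.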

Explaining how advanced NLG systems explain

In the workplace, where we are now surrounded by petabytes of raw data as well as the additional data sets created by business intelligence tools, machine learning, and predictive analytics systems (as well as other AI systems), we need more than the simple generation of words for every fact that is now at the machine’s disposal. For this world, we need advanced NLG systems, such as Narrative Science Quill.

The job of advanced NLG systems is to examine all the facts that they have access to and establish what they are going to include in the report or message they are producing. They then have to decide on the order and organization of the communication. At the end of this filtering and organization process, they have to map the ideas that they want to communicate into language that is easy to understand and tailored to a specific audience.

Advanced NLG systems have to determine what is true, what it means, what is important, and then how to say it.

For example, in writing a report for a fund manager focused on the performance of his fund against a benchmark, you would want to first figure out how the fund performed in absolute terms (3 percent return this quarter), how this compares to the benchmark (beat the S&P 500 by 1.3 percent), and then which decisions had the most impact on this performance (heavily weighted toward technology, and Apple stock in particular). By focusing on large changes, the greatest impact, and the most significant differences, you end up with the most meaningful set of ideas. Other facts that have less impact (for example, the fund and the benchmark had exactly the same levels of investment and balance of stocks in the retail category) are mentioned only if the document being produced is designed to be exhaustive.

After this kind of analysis is complete, the language part of advanced NLG kicks in and the idea and its organization are mapped onto language.
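The fund example can be sketched as a small pipeline: compute the facts, keep the ones that matter, order them by impact, and only then turn them into sentences. This is a rough illustration and not how Quill works. The function name, the `sector_gaps` input (fund weight minus benchmark weight, in points), the `min_gap` threshold, and the 8-point technology figure are all assumptions made for the example.

```python
def fund_report(fund_return, benchmark_return, sector_gaps,
                min_gap=1.0, exhaustive=False):
    """Build a short report; minor facts appear only when exhaustive=True."""
    # What is true: absolute performance.
    sentences = [f"The fund returned {fund_return:.1f} percent this quarter."]

    # What it means: performance relative to the benchmark.
    diff = fund_return - benchmark_return
    if diff == 0:
        sentences.append("It matched the benchmark.")
    else:
        verb = "beat" if diff > 0 else "trailed"
        sentences.append(
            f"It {verb} the benchmark by {abs(diff):.1f} percentage points.")

    # What is important: largest weighting differences first.
    ranked = sorted(sector_gaps.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    for sector, gap in ranked:
        if abs(gap) >= min_gap:
            direction = "overweight" if gap > 0 else "underweight"
            sentences.append(
                f"It was {direction} in {sector} by {abs(gap):.1f} points.")
        elif exhaustive:
            sentences.append(
                f"Its {sector} weighting was close to the benchmark's.")
    return " ".join(sentences)


# Illustrative inputs only; 1.7 is the benchmark return implied by 3 and 1.3.
gaps = {"retail": 0.0, "technology": 8.0}
print(fund_report(3.0, 1.7, gaps))
print(fund_report(3.0, 1.7, gaps, exhaustive=True))
```

The default report leaves out retail, where the fund and the benchmark match, while the exhaustive version mentions it last.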

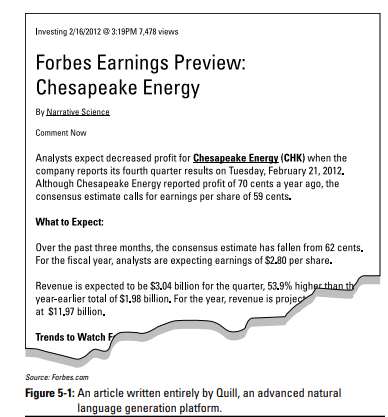

The output of these systems is pretty remarkable. For example, Quill wrote the article in Figure 5-1!

Visualizing data is not enough

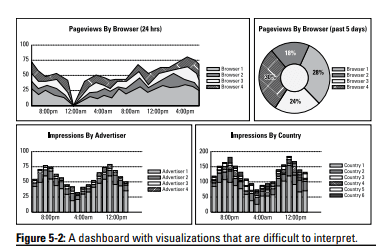

Although other methods of communication, such as dashboards and visualizations, have made great strides, these approaches are simply other ways to expose data and the results of analytics. But graphs showing costs, sales, performance of competitors, and macro‐economic factors cannot file a report telling you that you have beaten the pack by reducing prices at the beginning of an economic upswing. If all you have is the graphs, you have to figure that out by yourself.

By their nature, visualizations of data do not explain what is happening but primarily give you a different take on the data. They often require someone who is data savvy to interpret them for you. As Figure 5-2 attests, visualizations still require interpretation.

Advanced NLG is aimed at reducing, or even eliminating, the time needed to analyze data and communicate the results. The results can be very useful to people who either don’t know how to analyze the data or simply don’t have time.

These systems combine the power of data analysis with the focus it provides for communication. For example, if you want to know if a company is doing better or worse over time, you need to do a time series analysis of the metrics you care about, probably revenue, costs, and profits. The analysis is exactly the same, although with different metrics, for the performance of an athlete, a student, a Fitbit user, or a power plant. When a system knows what to say, how to say it is the easy part. This is what gives advanced NLG the ability to scale.
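The point about reuse can be illustrated with one function that describes any series of measurements, whatever the subject. This sketch compares only the first and last values, a deliberate simplification of real time series analysis. The function name, the `flat_threshold` parameter, and the sample figures are hypothetical.

```python
def describe_trend(subject, metric, values, flat_threshold=1.0):
    """Summarize the overall direction of a series in one sentence."""
    if len(values) < 2 or values[0] == 0:
        raise ValueError("need at least two values and a nonzero first value")
    # Percent change from the first observation to the last.
    change = (values[-1] - values[0]) / abs(values[0]) * 100
    if abs(change) < flat_threshold:
        return f"{subject}'s {metric} held steady over the period."
    direction = "rose" if change > 0 else "fell"
    return (f"{subject}'s {metric} {direction} "
            f"{abs(change):.0f} percent over the period.")


# The same analysis applied to a company and to a power plant.
print(describe_trend("Acme", "revenue", [100, 110, 125]))
print(describe_trend("The plant", "output", [200, 190, 150]))
```

Only the subject and metric names change between domains; the analysis and the sentence pattern stay the same.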

Question “black boxes.” As new technologies move into the workplace, we will be confronted with an interesting problem. We will have systems in place, including AI systems that can provide us with answers. But if we are to partner with these systems, we need more than answers. We need explanations.

The role of language in intelligent systems is crucial if we are to work with them as partners in the workplace. Systems that demand blind faith in their answers or require that we all develop skills in data analytics and the interpretation of dashboards are not enough. They force us to come to the machine and deal with it on its terms. We need intelligent systems that come to us on our terms by understanding what we need and then explaining themselves and their thinking to us. These systems are possible through language understanding and advanced NLG.

This article has been published from the source link without modifications to the text. Only the headline has been changed.
