
Plug and play design of orchestration layer and conversation design in Watson Assistant for end-to-end automation scenarios

Purpose

This story presents a plug-and-play mechanism for dialog topics in end-to-end automation, where the external (enterprise) services cannot, and should not, be reachable from the conversation engine. The orchestration layer takes on that responsibility, and predefined contracts between the layers allow new dialog topics to be added through configuration alone, with zero code changes.

Audience

Technical community members such as IT Architects, IT Specialists and, especially, cognitive and automation practitioners. Familiarity with Watson services is helpful, but the concepts apply equally to non-Watson technologies.

Introduction

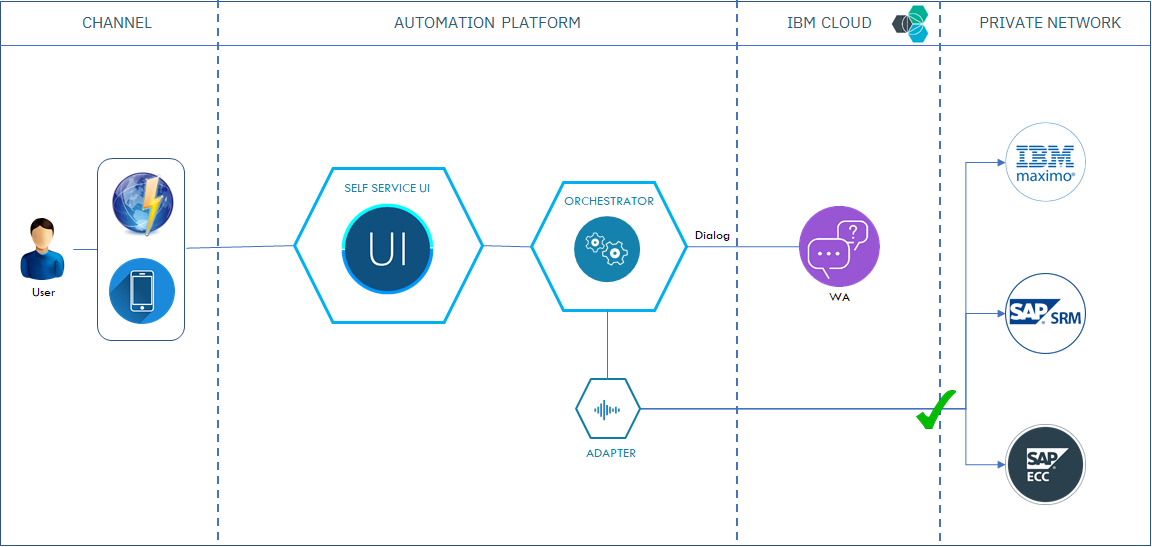

This story focuses on end-to-end automation with Watson conversation and on making new dialogs plug-and-play in the framework. In most engagements the automation framework or solution may be on the cloud, but the end systems are on client premises. Clients typically enforce a control that public services (Watson falls into this category) must not be allowed to reach into their network. The story shows a mechanism that obeys this principle by using the path that is already open between the automation solution (of which our orchestration layer is a part) and the on-premise end systems.

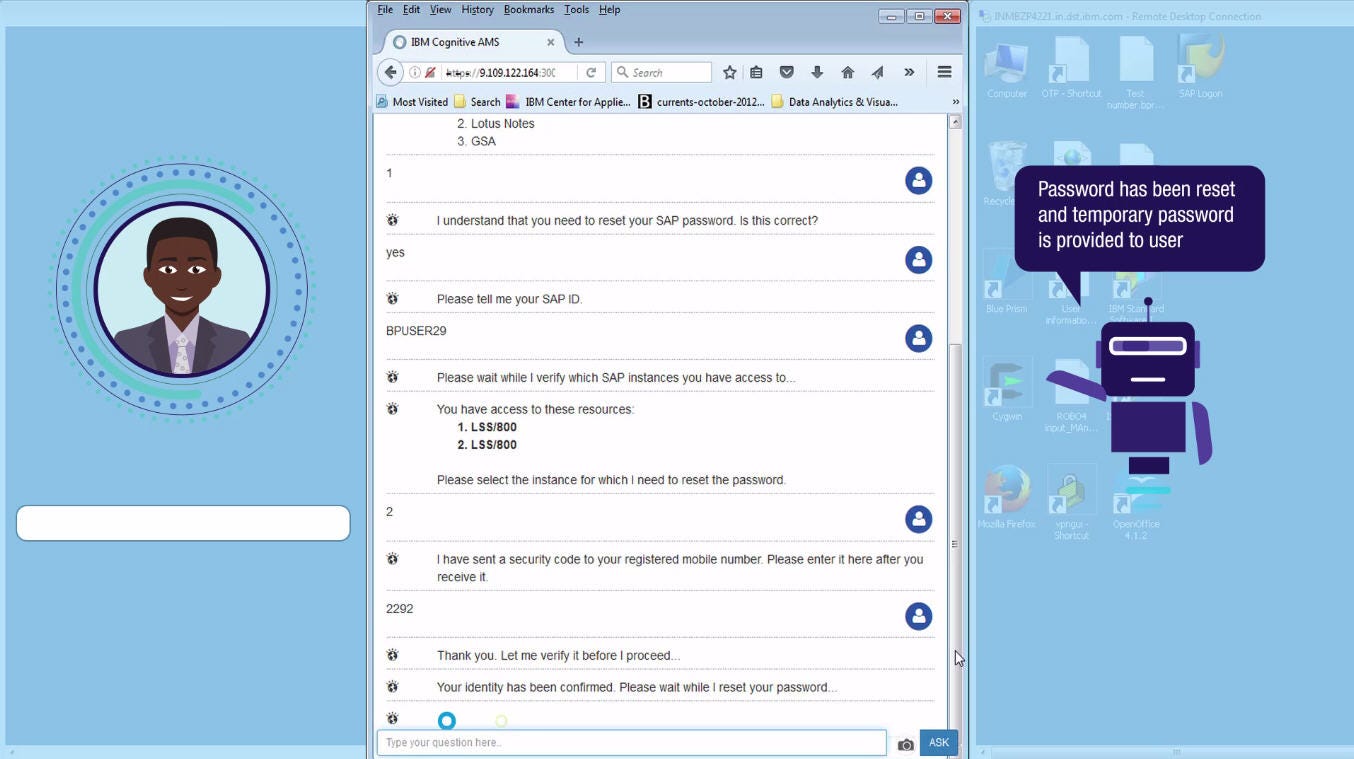

End-to-end Conversational Automation

So, what is end-to-end automation? A user at one end and the end system at the other. Today, call centers carry out requests such as "please upgrade my plan", "please add a subscription" or "please reset my password". When end users are enabled to carry out these jobs themselves, the process is automated: no agent or helpdesk needs to intervene. And when the system gathers the required information in a dialog and then carries out the task, it is conversational automation.

A Sample Use Case

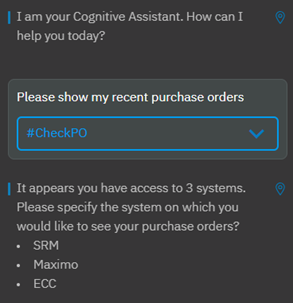

Let us take a sample use case: interacting with end systems to cancel or change a purchase order I created. I may need to update the quantity of one or two line items, or cancel the whole order.

- The system checks for the end systems I have access to

- Asks me the time frame to search for

- Searches the purchase orders I created in that system

- Offers me the action to take: Change or Cancel?

- Asks what to change or cancel

- And carries out the job... phew! DONE!

Possible integrations

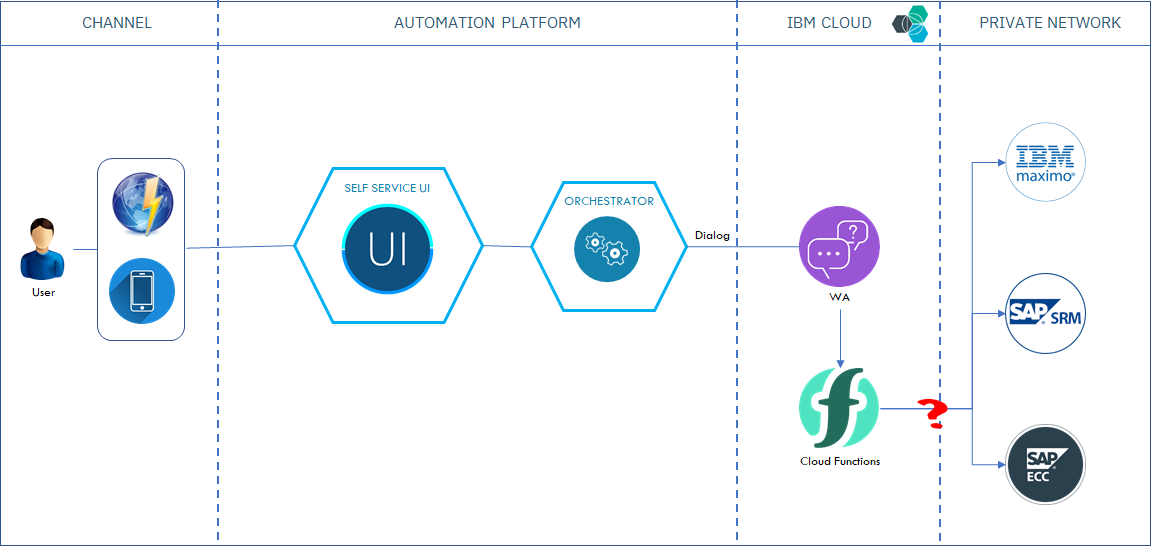

Watson Assistant can make programmatic calls to external APIs through IBM Cloud Functions. In most automation cases (if not all), however, the client end systems reside on client premises. For a cloud function to access the APIs of those systems, either a secure VPN must be established to the end systems or the APIs must be exposed on the public network.

The Challenge & The Solution

The challenge with this integration mechanism is that most customers do not wish to expose their enterprise systems to the public network or to let anyone in from the public domain, and rightly so.

The solution is to call the enterprise system APIs over the connectivity already established between the automation platform and the client premises.

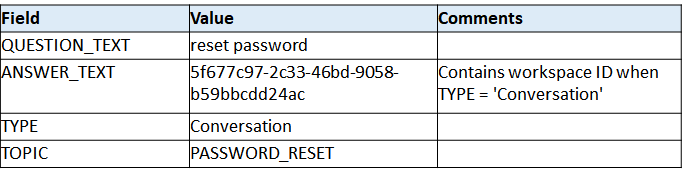

Routing to intended Dialog Topic (Conversation workspace)

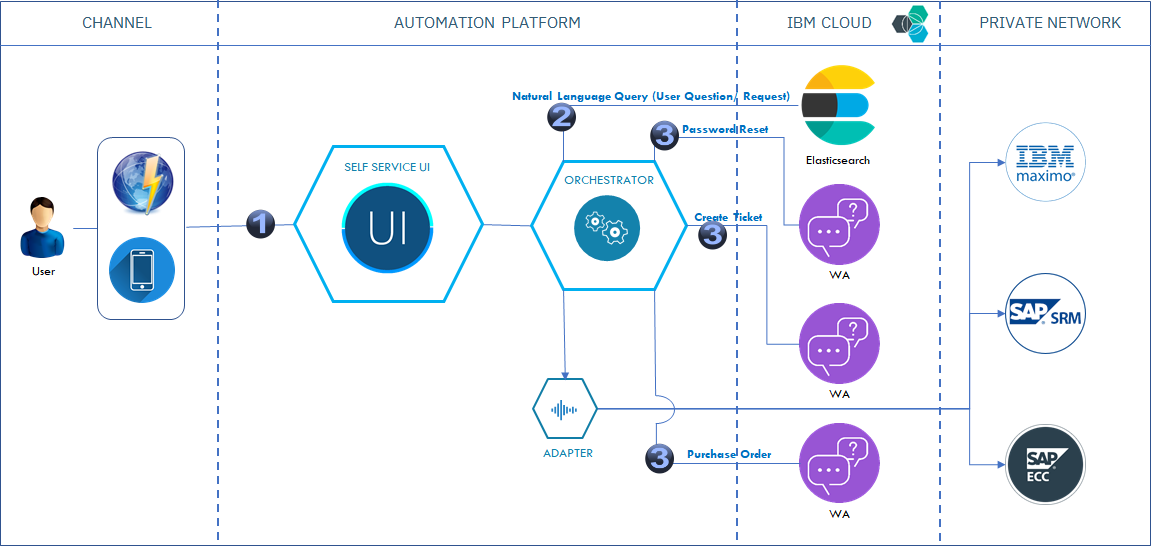

Before describing how new conversation topics are added to the framework, let us see how requests are routed among the multiple conversation workspaces (topics). In the MVP solution, Elasticsearch is the main component, and we use the same store to hold the workspace IDs corresponding to the conversation topics.

The schematic below shows how a request is routed to the intended workspace.

- The user requests an end-to-end conversation topic, e.g., “How can I reset my password?”

- The orchestrator sends a natural language query to Elasticsearch and takes the row with the top relevance score. In this row, TYPE = “Conversation” and ANSWER_TEXT contains the Watson Assistant workspace ID.

- The orchestrator instantiates the intended conversation with that workspace ID and continues the session with it.
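The routing steps above can be sketched in Python as follows. This is a minimal sketch, assuming the orchestrator receives a raw Elasticsearch search response (hits under hits.hits, each with _score and _source). The row fields TYPE, TOPIC and ANSWER_TEXT come from this story; the function name, the "return None for non-conversation rows" behavior, and the sample values are hypothetical.

```python
from __future__ import annotations

from typing import Any


def route_to_workspace(search_response: dict[str, Any]) -> tuple[str, str] | None:
    """Return (topic, workspace_id) for the top-scoring row, or None if it is not a conversation."""
    hits = search_response.get("hits", {}).get("hits", [])
    if not hits:
        return None
    # Pick the row with the highest relevance score.
    top = max(hits, key=lambda hit: hit.get("_score") or 0.0)
    row = top.get("_source", {})
    if row.get("TYPE") != "Conversation":
        return None  # hypothetical: not a dialog topic, so the caller answers it some other way
    # For conversation rows, ANSWER_TEXT carries the Watson Assistant workspace ID.
    return row.get("TOPIC", ""), row["ANSWER_TEXT"]


if __name__ == "__main__":
    # Hypothetical response; "ws-password-reset" and the "FAQ" row are placeholders.
    response = {"hits": {"hits": [
        {"_score": 7.2, "_source": {"TYPE": "Conversation", "TOPIC": "PASSWORD_RESET",
                                     "ANSWER_TEXT": "ws-password-reset"}},
        {"_score": 3.1, "_source": {"TYPE": "FAQ", "ANSWER_TEXT": "See the self-service portal."}},
    ]}}
    print(route_to_workspace(response))  # ('PASSWORD_RESET', 'ws-password-reset')
```

Because the router only needs "query in, (topic, workspace ID) out", the same function signature could sit in front of a parent conversation workspace or another search module instead of Elasticsearch.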

NOTE: A parent conversation workspace, or any other natural language search module, can be used as the router in place of Elasticsearch.

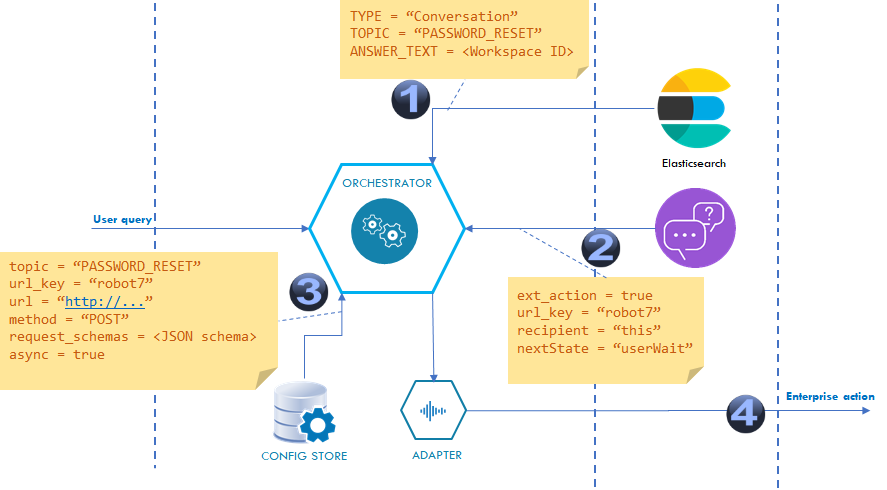

Plug and play conversations with enterprise systems interactions

Now that we have avoided exposing enterprise systems to the public internet, we want zero changes to the orchestrator code when a new dialog topic is added to the system; only configuration should be needed. The following sections describe the mechanism and the handshaking configurations it requires.

The schematic below shows how the different configurations handshake to complete the flow.

- Elasticsearch returns TOPIC = “PASSWORD_RESET” and the workspace ID of the intended dialog topic.

- The conversation (Watson Assistant) returns ext_action = true, the required URL key (url_key = “robot7”) and other parameters.

- The config store returns the actual URL and related information for calling the API for topic = “PASSWORD_RESET” and url_key = “robot7”.

- The orchestrator calls the (adapter) URL of the enterprise API.
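The handshake above can be sketched as a single lookup step. This is a minimal sketch, assuming the config store is a list of flat entries keyed by topic and url_key (as described under "Configuration at orchestration") and that Watson Assistant's context arrives as a plain dict. ApiCall and resolve_external_call are hypothetical names, and raising LookupError for a missing entry is an assumed policy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ApiCall:
    """What the orchestrator needs to call the (adapter) URL. Hypothetical type."""
    url: str
    method: str


def resolve_external_call(topic: str, wa_context: dict, config_store: list[dict]) -> ApiCall | None:
    """Combine the router's topic, the Watson Assistant context and the config store."""
    # Step 2: Watson Assistant signals an external action through the context.
    if not wa_context.get("ext_action"):
        return None  # nothing to call; just relay the assistant's reply
    url_key = wa_context["url_key"]
    # Step 3: find the configuration by exact (topic, url_key) match.
    for entry in config_store:
        if entry.get("topic") == topic and entry.get("url_key") == url_key:
            # Step 4: the orchestrator will call this URL with this HTTP method.
            return ApiCall(url=entry["url"], method=entry["method"])
    raise LookupError(f"no configuration for topic={topic!r}, url_key={url_key!r}")


if __name__ == "__main__":
    config = [{"topic": "PASSWORD_RESET", "url_key": "robot7",
               "url": "http://localhost:3000/api/v1/robotservice/robot7", "method": "POST"}]
    context = {"ext_action": True, "url_key": "robot7"}
    print(resolve_external_call("PASSWORD_RESET", context, config))
```

Adding a new dialog topic then means adding a config entry and a workspace, not touching this function, which is the zero-code-change goal.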

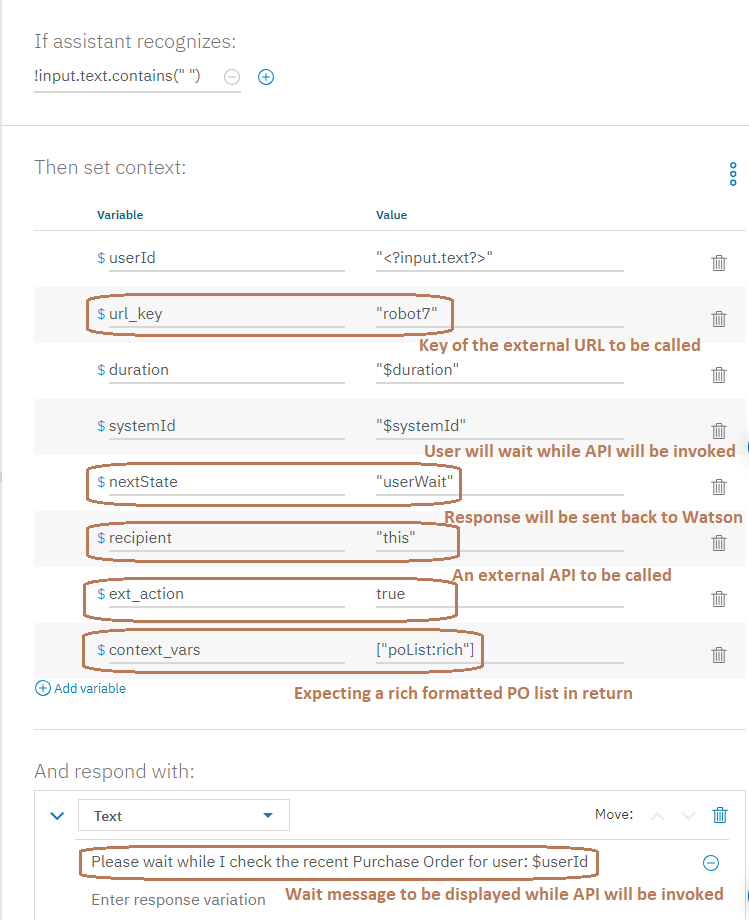

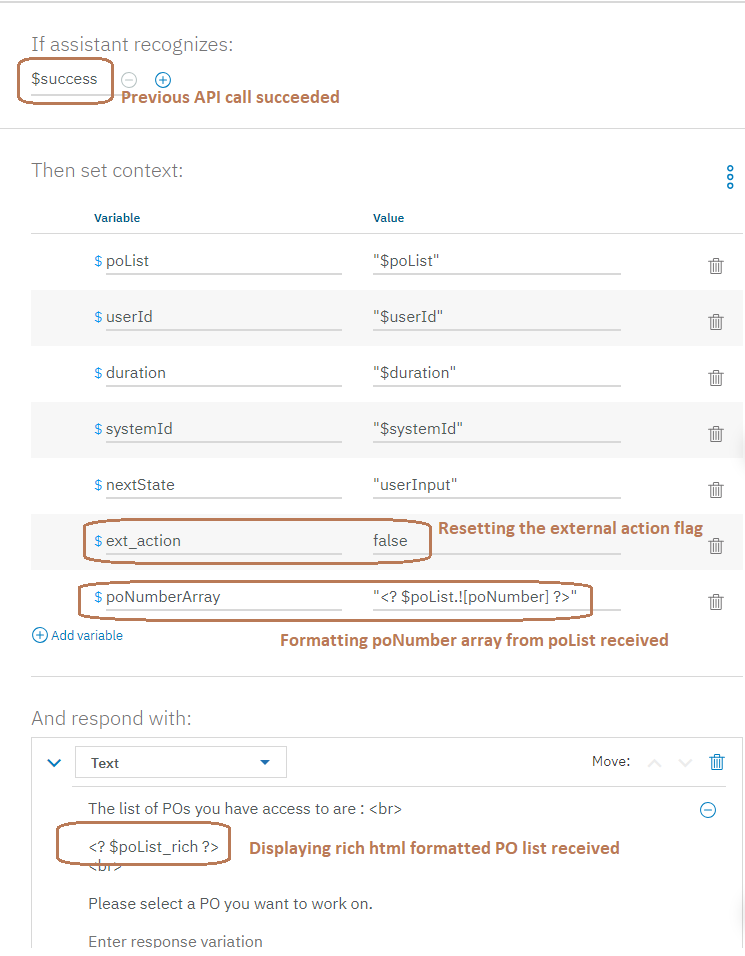

Design at Conversation (Watson Assistant)

Watson Assistant populates certain key values in the context, and the orchestrator determines its actions based on those values.

ext_action: *Required.

Values:

- true, if an external action API must be called

- false, if no external action call is needed

e.g.,

ext_action = true

url_key: *Required when ext_action is true. The orchestrator looks up the external API configuration based on the value of this field.

Values: A unique alphanumeric value.

e.g.,

url_key = "robot7"

recipient: *Required when ext_action is true. The orchestrator sends the response from the external API to the recipient named in this field.

Values:

- "client", if the response must be directed to the UI.

- "this", if the response must be directed to WA (Watson Assistant).

e.g.,

recipient = "this"

context_vars: Optional. An array of the variables to be populated from the external API call's response. Each name must exactly match a field name in the external action response.

Sometimes the result needs to be formatted as rich HTML, such as a table for a multi-field data set or a list for columnar data. Such a field is marked by appending ":rich" to its name, and the orchestrator populates two context variables for each rich variable.

e.g.,

context_vars = ["poLineItems:rich"]; the orchestrator populates poLineItems_rich, containing the HTML-formatted response, and poLineItems, containing the raw list for further use in the conversation (parsing, etc.).
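The ":rich" convention can be sketched as follows. This is a minimal sketch, assuming a rich field's value is a list of flat dicts rendered as an HTML table; the list rendering for columnar data mentioned above is not covered. The helper names to_html_table and apply_context_vars are hypothetical, and silently skipping a field missing from the response is an assumed policy.

```python
from __future__ import annotations

import html


def to_html_table(rows: list[dict]) -> str:
    """Render a list of flat dicts as an HTML table, with headers from the first row's keys."""
    if not rows:
        return "<table></table>"
    headers = list(rows[0].keys())
    head = "".join(f"<th>{html.escape(str(h))}</th>" for h in headers)
    body = "".join(
        "<tr>" + "".join(f"<td>{html.escape(str(row.get(h, '')))}</td>" for h in headers) + "</tr>"
        for row in rows
    )
    return f"<table><tr>{head}</tr>{body}</table>"


def apply_context_vars(context: dict, context_vars: list[str], api_response: dict) -> None:
    """Copy listed response fields into the context; ':rich' fields also get a <name>_rich HTML copy."""
    for spec in context_vars:
        name, _, flag = spec.partition(":")
        if name not in api_response:
            continue  # assumption: fields absent from the response are skipped
        value = api_response[name]
        context[name] = value  # raw value, kept for further use in the dialog
        if flag == "rich":
            context[f"{name}_rich"] = to_html_table(value)


if __name__ == "__main__":
    ctx: dict = {}
    apply_context_vars(ctx, ["poLineItems:rich"], {"poLineItems": [{"item": "10", "qty": 5}]})
    print(ctx["poLineItems_rich"])
    # <table><tr><th>item</th><th>qty</th></tr><tr><td>10</td><td>5</td></tr></table>
```

Escaping every cell with html.escape keeps values coming back from enterprise systems from injecting markup into the chat UI.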

success: Indicates whether the external call succeeded.

Values:

- true, if the external API call succeeded

- false, otherwise

e.g.,

success = true

nextState: Indicates whether the user must wait for a long-running invocation.

Values:

- userWait: the external API is called asynchronously, and a wait message is sent to the UI. The UI polls for the response at regular intervals. Details are explained in the section "User wait when enterprise API takes longer time" below.

- userInput: the API is called synchronously, and the response is sent to the recipient.

e.g.,

nextState = "userWait"
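Because these context keys form a contract between Watson Assistant and the orchestrator, the orchestrator can check them before acting. This is a minimal sketch of such a check, assuming the context is a plain dict. The function name and error messages are hypothetical, and the uniqueness of url_key cannot be verified from a single context, so only the alphanumeric rule is checked.

```python
from __future__ import annotations

VALID_RECIPIENTS = {"client", "this"}
VALID_NEXT_STATES = {"userWait", "userInput"}


def validate_context(ctx: dict) -> list[str]:
    """Return the contract violations found in a Watson Assistant context (empty list if valid)."""
    errors: list[str] = []
    ext_action = ctx.get("ext_action")
    if not isinstance(ext_action, bool):
        errors.append("ext_action is required and must be true or false")
    elif ext_action:
        url_key = ctx.get("url_key")
        if not (isinstance(url_key, str) and url_key.isalnum()):
            errors.append("url_key must be an alphanumeric value when ext_action is true")
        if ctx.get("recipient") not in VALID_RECIPIENTS:
            errors.append("recipient must be 'client' or 'this' when ext_action is true")
    if "context_vars" in ctx and not isinstance(ctx["context_vars"], list):
        errors.append("context_vars must be an array")
    if "success" in ctx and not isinstance(ctx["success"], bool):
        errors.append("success must be true or false")
    if "nextState" in ctx and ctx["nextState"] not in VALID_NEXT_STATES:
        errors.append("nextState must be 'userWait' or 'userInput'")
    return errors


if __name__ == "__main__":
    good = {"ext_action": True, "url_key": "robot7", "recipient": "this", "nextState": "userWait"}
    print(validate_context(good))  # []
    print(validate_context({"ext_action": True}))  # reports missing url_key and recipient
```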

Sample context variables before and after the API call

Configuration at orchestration

When Watson Assistant triggers an external call, the orchestrator makes the actual API call. This call requires details such as the URL to call and the HTTP method (GET, POST, etc.) to use.

topic: *Required. This field is used to look up configuration details for the current conversation. There is master data of all dialog topics, such as "Password_Reset" or "Purchase_Order", and the value of this field must exactly match the master data.

e.g.,

topic = "Purchase_Order"

url_key: *Required. Must be the same as the url_key value passed by Watson Assistant.

e.g.,

url_key = "robot7"

url: *Required. URL of the external API.

e.g.,

url = "http://localhost:3000/api/v1/robotservice/robot7"

method: *Required. HTTP method to use when consuming the external API.

e.g.,

method = "POST"

request_schemas: Optional. Required only when a request body must be passed to the API.

Values: JSON string for the request schema. The field names must match the context variable names; if a field is not found in the context, it is left blank.

e.g.,

"{\"short_description\": \"test ticket\", \"u_description\": \"test ticket\", \"state\": \"string\", \"comments\": \"string\", \"severity\": \"string\", \"assignment_group\": \"string\", \"category\": \"string\", \"u_root_cause\": \"string\", \"u_application\": \"string\"}"
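The "blank if not found" rule for request_schemas can be sketched as follows. This is a minimal sketch, assuming only the schema's field names drive the body and its placeholder values (such as "test ticket" or "string") are not copied. build_request_body is a hypothetical helper name.

```python
from __future__ import annotations

import json


def build_request_body(request_schema: str, context: dict) -> dict:
    """Fill each schema field from the same-named context variable, or "" when it is absent."""
    schema = json.loads(request_schema)  # request_schemas is stored as a JSON string
    # Assumption: only the field names matter; the schema's placeholder values are ignored.
    return {field: context.get(field, "") for field in schema}


if __name__ == "__main__":
    schema = '{"short_description": "test ticket", "severity": "string"}'
    print(build_request_body(schema, {"short_description": "Cannot log in"}))
    # {'short_description': 'Cannot log in', 'severity': ''}
```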

query_params: Optional. An array of field names to be passed as query parameters in the request. Each field name matches the field in the Watson context that holds the value to pass.

e.g.,

query_params = ["userId"], which results in a call such as:

http://localhost:3000/api/v1/robotservice/robot8?userId=SODA
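Building the query string from query_params can be sketched with the standard library's urllib.parse.urlencode. This is a minimal sketch. build_url is a hypothetical name, and skipping names that are missing from the context is an assumption the story does not state.

```python
from urllib.parse import urlencode


def build_url(url: str, query_params: list, context: dict) -> str:
    """Append each configured query parameter, taking its value from the Watson context."""
    # Assumption: parameters with no matching context field are omitted.
    params = {name: context[name] for name in query_params if name in context}
    if not params:
        return url
    separator = "&" if "?" in url else "?"
    return f"{url}{separator}{urlencode(params)}"


if __name__ == "__main__":
    print(build_url("http://localhost:3000/api/v1/robotservice/robot8", ["userId"], {"userId": "SODA"}))
    # http://localhost:3000/api/v1/robotservice/robot8?userId=SODA
```

urlencode also percent-encodes values (a space becomes "+"), so free-text answers collected in the dialog can be passed safely.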

async: Whether the external URL is called asynchronously.

Values:

- true means asynchronous.

- false means synchronous.

e.g.,

async = true

<any>: Any additional parameter values that must be passed to the URL service being called and are not provided in the Watson Assistant context, e.g., adapter_id. See the configuration for the Password_Reset topic in the orchestration_config.json file.

e.g.,

adapter_id = 9
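Supporting both named settings and arbitrary <any> parameters requires the orchestrator to separate the two when it loads a configuration entry. This is a minimal sketch, assuming each entry is a flat JSON object with the keys described above. The actual layout of orchestration_config.json is not shown here, so split_config_entry and its error handling are hypothetical. How the extras are then sent (body or query string) is left to the caller.

```python
from __future__ import annotations

REQUIRED_KEYS = {"topic", "url_key", "url", "method"}
OPTIONAL_KEYS = {"request_schemas", "query_params", "async"}


def split_config_entry(entry: dict) -> tuple[dict, dict]:
    """Split one orchestration config entry into (known settings, extra <any> parameters)."""
    missing = REQUIRED_KEYS - set(entry)
    if missing:
        raise ValueError(f"missing required keys: {sorted(missing)}")
    known_keys = REQUIRED_KEYS | OPTIONAL_KEYS
    known = {k: v for k, v in entry.items() if k in known_keys}
    extras = {k: v for k, v in entry.items() if k not in known_keys}
    return known, extras


if __name__ == "__main__":
    entry = {"topic": "Password_Reset", "url_key": "robot7",
             "url": "http://localhost:3000/api/v1/robotservice/robot7",
             "method": "POST", "async": True, "adapter_id": 9}
    known, extras = split_config_entry(entry)
    print(extras)  # {'adapter_id': 9}
```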

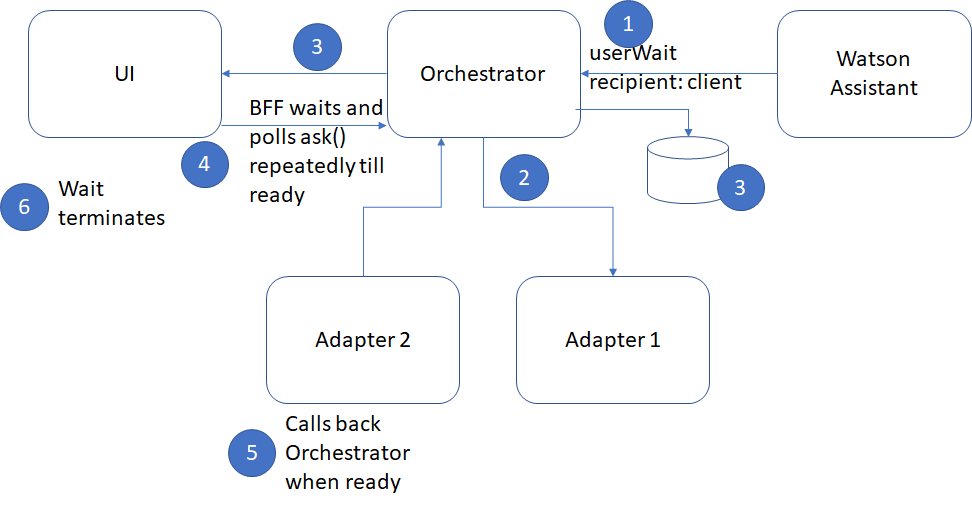

User wait when enterprise API takes longer time

User wait and async relation

We have seen that async specifies, at the orchestration layer, whether the API should be called asynchronously. Likewise, nextState = userWait instructs the orchestrator to let the UI (user) wait while the API is called asynchronously. Let us see how the two relate in the form of a truth table.

1. async = FALSE, userWait = FALSE: Synchronous call; the user does not wait.

2. async = FALSE, userWait = TRUE: Synchronous call, but the user has to wait. This is an INVALID combination.

3. async = TRUE, userWait = FALSE: Asynchronous call, but the user does NOT wait. The orchestrator polls for response readiness, so the user experiences synchronous behavior.

4. async = TRUE, userWait = TRUE: Asynchronous call, and the user MUST wait. The UI polls for response readiness, and the user sees a waiting message until the call completes.
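The truth table can be encoded directly, together with the orchestrator-side polling needed for row 3. This is a minimal sketch. The config flag async is renamed is_async because async is a reserved word in Python, and user_wait stands for nextState == "userWait". CallMode, plan_call, poll_until_ready, the is_ready callback, and the interval and timeout defaults are all hypothetical.

```python
from __future__ import annotations

import time
from enum import Enum
from typing import Callable


class CallMode(Enum):
    SYNC = "synchronous call; user does not wait"                        # row 1
    ORCHESTRATOR_POLLS = "async call; orchestrator polls, user sees sync"  # row 3
    UI_POLLS = "async call; UI polls and shows a waiting message"          # row 4


def plan_call(is_async: bool, user_wait: bool) -> CallMode:
    """Map the config flag async and nextState == "userWait" onto the truth-table rows."""
    if not is_async:
        if user_wait:  # row 2
            raise ValueError("async = FALSE with userWait = TRUE is an invalid combination")
        return CallMode.SYNC
    return CallMode.UI_POLLS if user_wait else CallMode.ORCHESTRATOR_POLLS


def poll_until_ready(is_ready: Callable[[], bool], interval_s: float = 1.0,
                     timeout_s: float = 30.0) -> bool:
    """Row 3: the orchestrator polls the async job itself; returns False on timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if is_ready():
            return True
        time.sleep(interval_s)
    return False


if __name__ == "__main__":
    print(plan_call(True, False).name)  # ORCHESTRATOR_POLLS
```

Rejecting row 2 at planning time surfaces a misconfigured topic immediately instead of leaving the user waiting on a call that already returned.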

Code References

The sample code files below are uploaded to this git repository.

- skill-purchase_order-pnp.json: export of the Watson Assistant sample skill.

- orchestration_config.json: the corresponding sample configuration at the orchestration layer for the skill above.
