Introduction

For the past year or two, we have talked a lot about how we can understand the properties of Deep Neural Networks by examining the spectral properties of the layer weight matrices . Specifically, we can form the correlation matrix

and compute the eigenvalues

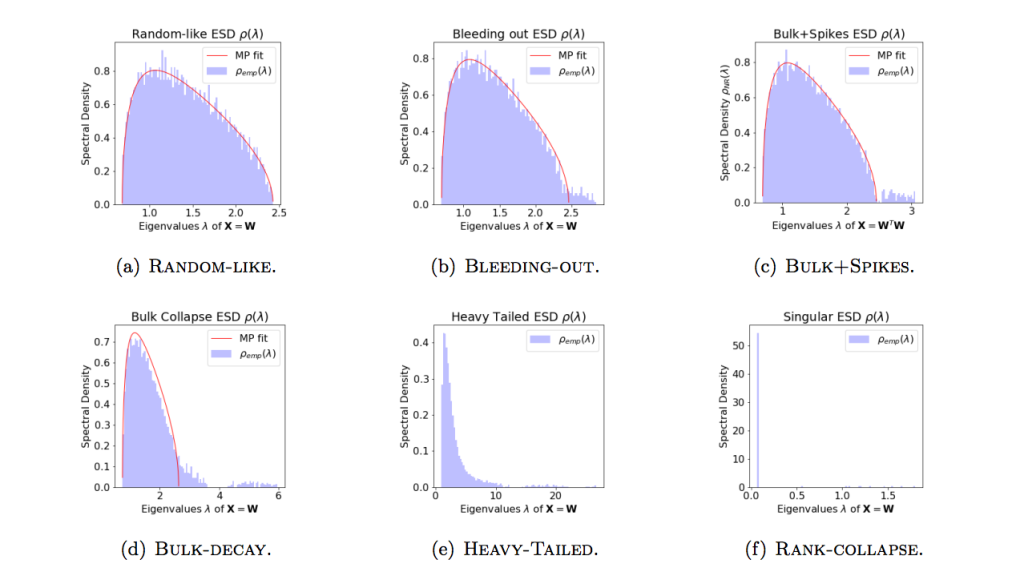

By plotting the histogram of the eigenvalues (i.e the spectral density ), we can monitor the training process and gain insight into the implicit regularization and convergence properties of DNN. Indeed, we have identified

5+1 Phases of Training

Each of these phases roughly corresponds to a Universality class from Random matrix Theory (RMT). And as we shall see below, we can use RMT to develop a new theory of learning.

First, however, we note that for nearly every pretrained DNNs we have examined (over 450 in all) , the phase appears to be in somewhere between Bulk-Decay and/or Heavy-Tailed .

Heavy Tailed Implicit Regularization

Moreover, for nearly all DNNs, the spectral density can be fit to a truncated power law, with exponents frequently lying in the Fat Tailed range [2-4], and the maximum eigenvalue no larger than say 100

Most importantly, in 80-90% of the DNN architectures studied, on average, smaller exponents correspond to smaller test errors.

DNN Capacity Metrics in Practice

Our empirical results suggest that the power law exponent can be used as (part of) a practical capacity metric. This led us to propose the metric for DNNs:

where we compute the exponent and maximum eigenvalue

for each layer weight matrix (and Conv2D feature maps), and then form the total DNN capacity as a simple weighted average of the exponents. Amazingly, this metric correlates very well with the reported test accuracy of pretrained DNNs (such as the VGG models, the ResNet models, etc)

WeightWatcher

We have even built a open source, python command line tool–weightwatcher–so that other researchers can both reproduce and leverage our results

pip install weightwatcher

And we have a Slack Channel for those who want to ask questions, dig deeper, and/or contribute to the work. Email me, or ping me on LinkedIn, to join our vibrant group.

All of this leads to a very basic question:

Why does this even work ?

To answer this, we will go back to the foundations of the theory of learning, from the physics perspective, and rebuild the theory using in both our experimental observations, some older results from Theoretical Physics, and (fairly) recent results in Random Matrix Theory.

Statistical Mechanics of Learning

Here, I am going to sketch out the ideas we are currently researching to develop a new theory of generalization for Deep Neural Networks. We have a lot of work to do, but I think we have made enough progress to present these ideas, informally, to flush out the basics.

What do we seek ? A practical theory that can be used to predict the generalization accuracy of a DNN solely by looking at the trained weight matrices, without looking at the test data.

Why ? Do you test a bridge by driving cars over it until it collapses ? Of course not! So why do we build DNNs and only rely on brute force testing ? Surely we can do better.

What is the approach ? We start with the classic Perceptron Student-Teacher model from Statistical Mechanics of the 1990s. The setup is similar, but the motivations are a bit different. We have discussed this model earlier here Remembering Generalization in DNNs. from our paper Understanding Deep Learning Requires Rethinking Generalization.

Here, let us review the mathematical setup in some detail:

the Student-Teacher model

We start with the simple model presented in chapter 2, Engel and Van der Brock, interpreted in a modern context.

Here, we want to do something a little different, and use the formalism of Statistical Mechanics to both compute the average generalization error, and to interpret the global convergence properties of DNNs in light of this , giving us more insight into and to provide a new theory of Why Deep Learning Works (as proposed in 2015).

Suppose we have some trained or pretrained DNN (i.e. like VGG19). We want to compute the average / typical error that our Teacher DNN could make, just by examining the layer weight matrices. Without peeking at the data.

Conjecture 1: We assume all layers are statistically independent, so that the average generalization capacity (i.e. 1.0-error) is just the product of the contributions of from each layer weight matrix

.

Example: The Product Norm is a Capacity measure for DNNs from traditional ML theory.

The Norm may be Frobenius Norm, the Spectral Norm, or even their ratio, the Stable Rank.

This independence assumption is probably not a great approximation but it gets us closer to a realistic theory. Indeed, even traditional ML theory recognizes this, and may use Path Norm to correct for this. For now, this will suffice.

Caveat 1: If we take the logarithm of each side, we can write the log Capacity as the sum of the layer contributions. More generally, we will express the log Capacity as a weighted average of some (as yet unspecified) log norm of the weight matrix.

Capacity for a Single Layer: Perceptron model

We now set up the classic Student-Teacher model for a Perceptron–with a slight twist. That is, from now on, we assume our models have 1 layer, like a Perceptron.

Let’s call our trained or pretrained DNN the Teacher T. The Teacher maps data to labels. Of course, there could be many Teachers which map the same data to the same labels. For our specific purposes here, we just fix the Teacher T. We imagine that the learning process is for us to learn all possible Student Perceptrons J that also map the data to the labels, in the same way as the Teacher.

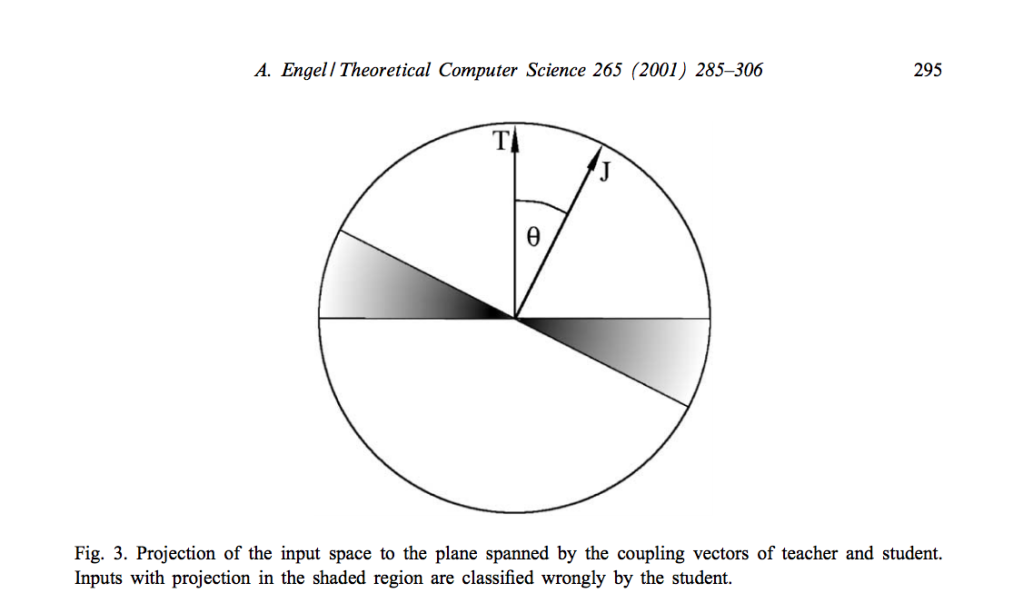

But for a pretrained model, we have no data, and we have no labels. And that’s ok. Following Engle and Van der Brock (and also Engle’s 2001 paper ), consider the following Figure, which depicts the vector space representations of T and J.

To compute the average generalization error, we write total error is the sum of all the errors over all possible Students J for a given Teacher T. And we model this error with the inverse (arc cosine) of the vector dot product between J and T:

For our purposes, if instead of N-dim vectors, if we let T and J be NxM weight matrices, then the dot product becomes the Solid Angle . (Note: the error is no longer

since J and T are matrices, not vectors, but hopefully this detail won’t matter here since we are going to integrate this term out below. This remains to be worked out)

This formalism lets us use the machinery of Statistical Mechanics to write the total error as an integral over all possible Student vectors J, namely, the phase space volume of our model:

where the first delta function enforces the normalization condition, or spherical constraints, on the Student vectors J, and the second delta function

is a kind of Energy potential..

The normalization can be subsumed into a general measure, as

which actually provides us a more general expression for the generalization error (where we recall is not quite correct for matrices)

Now we will deviate from the classic Stat Mech approach of the 90s. In the original analysis, one wants to compute the phase space volume as a function of the macroscopic thermodynamic variables, such as the size of the training set, and study the learning behavior. We have reviewed this classic results in our 2017 paper.

We note that, for the simple Perception, the Student and Teachers,: , are represented as N-dimensional vectors, and the interesting physics arises in the Ising Perception, when the elements are discrete:

Continuous Perception: (unintersting behavior)

Ising Perception: (phase transitions, requires Replica theory, …)

And in our early work, we propose how to interpret the expected phase behavior in light of experimental results (at Google) that seem to require Rethinking Generalization. Here, we want to reformulate the Student-Teacher model in light of our own recent experimental studies of the spectral properties of real-world DNN weight matrices from production quality, pretrained models.

Our Proposal: We let be strongly correlated (NxM) real matrices, with truncated, Heavy Tailed ESDs. Specifically, we assume that we know the Teacher T weight matrices exactly, and seek all Student matrices J that have the same spectral properties as the Teacher.

We can think of the class of Student matrices J as all matrices that are close to T. What we really want is the best method for doing this, that hasd been tested experimentally. Fortunately, Hinton and coworkers have recently revisited Similarity of Neural Network Representations, and found the best matrix similarity method is

Canonical Correlation Analysis (CCA):

Using this, we generalize the Student-Teacher vector-vector overlap., or dot-product, to be the Solid-Angle between the J and T matrices:and plug this directly into our expression for the phase space volume . (and WLOG, we absorb the normalization N into the matrices, and now have

We now take the Laplace Transform of which allows us to integrate over all possible errors

that all possible Students might make:

Note: This is different than the general approach to Gibbs Learning at non-zero Temperature (see ENgle and Van den Broeck, chapter 4). The Laplace Transform converts the delta function to a exponential, giving

Conjecture 2: We can write the layer matrix contribution to the total average generalization error as an integral over all possible (random) matrices J that resemble the actual (pre-)trained weight matrices T (as given above).

Notice this expression resembles a classical partition function from statistical field theory: ,. Except instead of integrating over the vector-valued p and q variables, we have to integrate over a class of random matrices J. The new expression for the generalization error is a like weighted average over all possible errors, (where the effective inverse Temperature

is set by the scale of the empirical weight matrices |W|). This is the key observation, and requires some modern techniques to perform

RMT and HCIZ Integrals

These kinds of integrals traditionally appeared in Quantum Field Theory and String Theory, but also in the context of Random Matrix applied to Levy Spin Glasses, And it is this early work on Heavy Tailed Random Matrices that has motivated our empirical work. Here, to complement and extend our studies, we lay out an (incomplete) overview of the Theory.

These integrals are called Harish Chandra–Itzykson–Zuber (HCIZ) integrals. A good introductory reference on both RMT and HCIZ integrals the recent book “A First Course in Random Matrix Theory”, although we will base our analysis here on the results of the 2008 paper by Tanaka,

First, we need to re-arrange a little of the algebra. We will call A the Student correlation matrix:

and let W, X be the original weight and correlation matrices for our pretrained DNN, as above:

,

and then expand the CCA Similarity metric as

We can now express the log HCIZ integral, in using Tanaka’s result, as an expectation value of all random Student correlations matrices A that resemble X.

And this can be expressed as a sum over Generating functions that depends only the statistical properties of the random Student weight matrices A. Specifically

where is the R-Transform from RMT.

The R Transform is like an inverse Green’s function (i.e a Contour Integral), and is also a cumulant generating function. As such, we can write as a series expansion

where are Generalized Cumulants from RMT.

Now, since we expect the best Students matrices resemble the Teacher matrices, we expect the Student correlation matrix A to have similar spectral properties as our actual correlation matrices X. And this where we can use our classification of the 5+1 Phases of Training. Whatever phase X is in, we expect all the A to be in as well, and we therefore expect the R-Transform of A to have the same functional form as X.

That is, if our DNN weight matrix has a Heavy Tailed ESD

then we expect all of the students to likewise have a Heavy Tailed ESD, and with the same exponent (at least for now).

Quenched vs Annealed Averages

Formally, we just say we are averaging over all Students A. More technically, what really want to do is fix some Student matrix (i.e. say A = diagonal X), and then integrate over all possible Orthogonal transformations O of A (see 6.2.3 of Potters and Bouchaud)

Then, we integrate over all possible A~diag(X) , which would account for fluctuations in the eigenvalues. We conceptually assume this is the same as integrating over all possible Students A, and then taking the log.

The LHS is called the Quenched Average, and the RHS is the Annealed. Technically, they are not the same, and in traditional Stat Mech theory, this makes a big difference. In fact, in the original Student-Teacher model, we would also average over all Teachers, chosen uniformly (to satisfy the spherical constraints)

Here, we are doing RMT a little differently, which may not be obvious until the end of the calculation. We do not assume a priori a model for the Student matrices. That is, instead of fixing A=diag(X), we will fit the ESD of X to a continuous (power law) distribution , and then effectively sample over all A as if we had drawn the eigenvalues of A from

. (In fact, I suppose we could actually do this numerically instead of doing all this fancy math–but what fun is that?).

The point is, we want to find an expression for the HCIZ integral (i.e the layer / matrix contribution to the Generalization Error) that only depends on observations of W, the weight matrix of the pretrained DNN (our Teacher network). The result only depends on the eigenvalues of X, and the R-transform of A , which is parameterized by statistical information from X.

In principle, I supposed we could measure the generalized cumulants of X,. and assume we can plug these in for

. We will do something a little easier.

Let us consider 2 classes of matrices as models for X.

Gaussian (Wigner) Random Matrix: Random-Like Phase

The R-Transform for Gaussian Random matrix is well known:

Taking the integral and plugging this into the Generating function, we get

So when X is Random-Like , the layer / matrix contribution is like the Frobenius Norm (but squared), and thus average Generalization Error is given by a Frobenius Product Norm (squared).

Levy Random Matrix: Very Heavy Tailed Phase–but with

We don’t have results (yet) for the Very Heavy Tailed Phase with , but, as we have argued previously, due to finite size effects, we expect that the Very Heavy Tailed matrices appearing in DNNs will more resemble Levy Random matrices that the Random-Like Phase. So for now, we will close one eye and extend the results for

to

.

The R-Transform for a Levy Random Matricx has been given by Burda

Taking the integral and plugging this into the Generating function, we get

Towards our Heavy Tailed Capacity Metric

1. Let us pull the power law exponent out of the Trace, effectively ignoring cross terms in the sum over

2. We also assume we can replace the Trace of with its largest eigenvalue

, which is actually a good approximation for very heavy tailed Levy matrices, when

This gives an simple expression for the HCIZ integral expression for the layer contribution to the generalization error

Taking the logarithm of both sides, gives our expression

We have now derived the our Heavy Tailed Capacity metric using a matrix generalization of the classic Student Teacher model, with the help of some modern Random Matrix Theory.

QED

I hope this has convince you that there is still a lot of very interesting theory to develop for AI / Deep Neural Networks. And that you will stay tuned for the published form of this work. And remember…

pip install weightwatcher

A big thanks to Michael Mahoney at UC Berkeley for collaborating with me on this work , and to Mirco Milletari’ (Microsoft), who has been extremely helpful. And to my good friend Matt Lee (formerly managing director at BGI/Blackrock) for long discussions about theoretical physics, RMT, quant finance, etc., for encouraging us to publish.

This article has been published from the source link without modifications to the text. Only the headline has been changed.