Stable Diffusion, a text-to-image generator, is now available to the public and can be put to the test.

Stability AI developed Stable Diffusion and initially released it to researchers earlier this month. The company claims the image generator delivers a leap in speed and quality while running on consumer GPUs.

The model builds on the latent diffusion model developed by CompVis and Runway, enhanced with insights from conditional diffusion models developed by Katherine Crowson, Stability AI's lead generative AI developer, as well as by OpenAI, Google Brain, and others.

“This model is based on the work of many excellent researchers, and we anticipate that this and similar models will have a positive impact on society and science in the coming years as they are used by billions globally,” said Emad Mostaque, CEO of Stability AI.

The model was trained primarily on LAION-Aesthetics, a subset of the 5.85-billion-image LAION-5B dataset filtered by how “beautiful” each image is, using ratings from Stable Diffusion alpha testers.

Stable Diffusion runs on GPUs with less than 10 GB of VRAM and generates 512×512-pixel images in a matter of seconds.

“We are delighted that cutting-edge text-to-image models are being developed openly, and we look forward to working with CompVis and Stability.AI towards securely and ethically releasing the models to the public and assisting in democratizing the potential of ML with the entire community,” explained Apolinário, ML Art Engineer at the AI community Hugging Face.

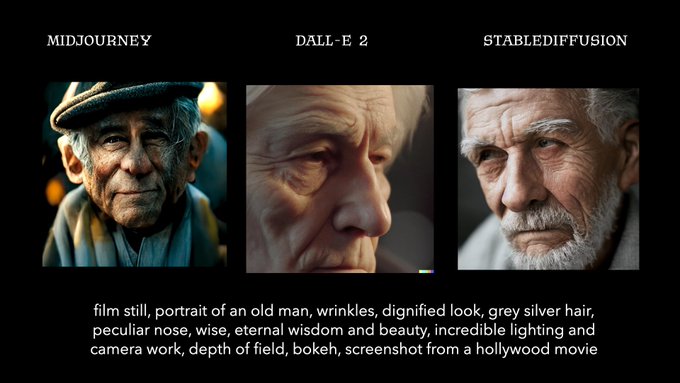

Stable Diffusion competes with other text-to-image models such as Midjourney, DALL-E 2, and Imagen.