With no well-specified rewards and state transitions that take place in a myriad of ways, training a robot via reinforcement learning represents perhaps the most complex arena for machine learning.

The seemingly simple task of grasping an object from a large cluster of different kinds of objects is “one of the most significant open problems in robotics,” according to Sergey Levine and collaborators. Grasping is a good example of problems that bedevil real-world machine learning, including latency that throws off the expected order of events, and goals that may be difficult to specify.The vast majority of artificial intelligence has been developed in an idealized environment: a computer simulation that dodges the bumps of the real world. Be it DeepMind’s MuZero program for Go and chess and Atari or OpenAI’s GPT-3 for language generation, the most sophisticated deep learning programs have all benefitted from a pruned set of constraints by which software is improved.

For that reason, the hardest and perhaps the most promising work of deep learning may lie in the realm of robotics, where the real world introduces constraints that cannot be fully anticipated.

That is one takeaway from a recent report by researchers at the University of California at Berkeley and Google who have summarized several years of experiments with robots using what’s known as reinforcement learning.

“I think real-world tasks in general present the greatest challenge — but also the greatest opportunities — for reinforcement learning,” Sergey Levine, assistant professor with Berkeley’s department of electrical engineering and computer science, told ZDNet in an email exchange.

Levine, who also holds an appointment with the Robotics at Google program, this month published, along with fellow researchers Julian Ibarz, Jie Tan, Chelsea Finn, Mrinal Kalakrishnan, and Peter Pastor, a review titled How to Train Your Robot with Deep Reinforcement Learning — Lessons We’ve Learned, which is posted on the arXiv pre-print server.

The paper describes several experiments Levine and the others have carried out over the years using reinforcement learning and summarizes where those experiments ran into obstacles.

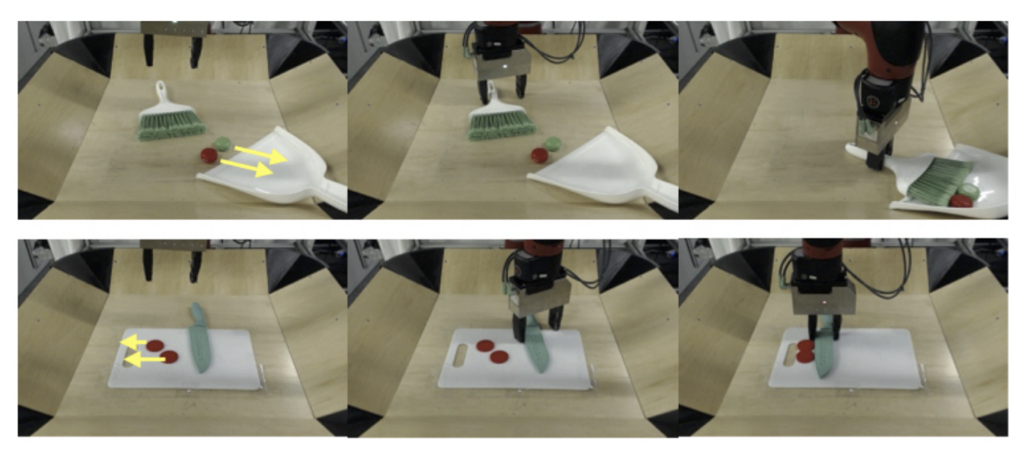

The experiments involve the most basic tasks of robotics, things such as having a robot arm grasp an object and move it from one location on a table to another. Even this very simple task reveals fascinating challenges.

Reinforcement learning is an approach to machine learning that has been around for decades. It is most famous for being used by Google’s DeepMind unit to develop AlphaZero, the program that was able to beat the world’s top Go players, and top chess and Shogi players, without any information on human play, just repeated games played against itself. DeepMind has extended the program to MuZero, now able to master Atari games with the same approach.

The basic idea of reinforcement learning is that a search of possible actions and consequences is carried out and then stored in memory, and two algorithms called a value function and a policy function combine to select the next move at any point in a task based on what was most fruitful in that search history. All the calculations are based on the notion of an ultimate reward, such as winning the game of chess.

Levine and colleagues point out that robotics breaks some of the most basic assumptions in that reinforcement learning paradigm.

For one, states of affairs in robotics don’t progress cleanly the way they do in a strategy game such as Go or chess. The traditional model of reinforcement learning is what’s called a Markov Decision Process, where one state is followed in an orderly fashion by another state depending on an action taken. All reinforcement learning assumes you can measure how action leads from one discrete state to another.

In the world of robotics, however, there is latency, the lag time between one state of affairs and another. As Levine and co-authors describe it, “Latency means that the next state of the system does not directly depend on the measured state, but instead on the state after a delay of latency after the measurement, which is not observable.”

As a result, “Latency violates the most fundamental assumption of MDP [the Markov Decision Process], and thus can cause failure to some RL [reinforcement learning] algorithms,” the authors point out. They give examples where successful reinforcement learning programs break when latency throws off the expected Markov state transitions.

There’s another perhaps greater problem that crops up in robotics, and that is the notion of goals and rewards.

Traditionally, reinforcement learning assumes the goal is clearly defined, and every available action can be assessed by the program’s value function as clearly taking the program closer to or farther from the goal. In chess or Go or Atari games, the goal of victory in the game is clear, and moves advance the player in measurable ways toward that goal.

“In simulation or video game environments, the reward function is typically easy to specify, because one has full access to the simulator or game state, and can determine whether the task was completed or access the score of the game,” as Levine and collaborators write.

“In the real world, however, assigning a score to quantify how well a task was completed can be a challenging perceptual problem of its own.”

Think about a robotic arm reaching to open a door, the authors relate. It’s possible that a learning robot will try to optimize by moving closer to the doorknob. But getting too close to the doorknob, so that the knob is at a poor angle to be grasped, will actually frustrate the end goal. That’s an example of how optimizing a sub-task like approaching the object can actually set back the larger goal.

Various unsupervised learning approaches can lead to strategies by which a robot grasps objects, where specifying a goal and a policy is hard to do.And it’s an example of how reinforcement learning suffers when rewards are “sparse,” meaning, very few clues are provided for a robot.

“This is definitely a major challenge,” Levine told ZDNet in email, “and it’s one of the places where the standard RL [reinforcement learning] problem statement, which assumes the reward is simply ‘provided’ somehow to the agent (e.g., with a piece of code), deviates from the requirements in the real world.”

Solutions, as discussed in the article, include approaches such as, for example, to demonstrate a task with a human performing the action. Standard reinforcement learning is not set up to accommodate that kind of goal-by-demonstration specification.

“We need to extend them to be able to process this kind of ‘natural’ task specification,” Levine told ZDNet. Another approach is to build up lots of data in a simulation beforehand, and then feed that to a robot. But, again, real-world complexities evade the reductive nature of a simulation, what Levine and company refer to as the “reality gap.” That means simulations can be helpful, but only up to a point.

All these challenges are made more poignant by the fact that they exist in many, many domains of life.

The complexities of robotics embody many “real-world problems,” Levine told ZDNet, “including control of power grids, regulating road networks, HVAC control, and even more complex applications in logistics, inventory management, and economics.”

“Robotics is simply the most physically tangible instantiation of these challenges, and the one that we as humans can relate to most readily, because we all share the experience of controlling our own bodies, hence we can relate more easily with a robot trying to control its body,” he said.

Ultimately, Levine is inclined to view necessity as a virtue.

“Reinforcement learning is both a challenge and an opportunity here,” he told ZDNet. By not being bound by the simulator, it is possible for robots to learn a richer vocabulary of skills, he said.

“In a game like chess or Go, the RL policy will only be as good as the ‘simulator’ that it inhabits,” Levine observed. “It may be able to play the game of chess, but it can never learn anything else in that world because its ‘world’ doesn’t contain anything other than chess.”

By contrast, in the real world, “a robot can experience many of the same things that we experience, it can confront the world in all its complexity, and maybe even learn things that might surprise us.”

“I think this is really exciting,” said Levine.