Machine learning models are probability engines. They will guess wrong sometimes, and that’s how you know they’re working correctly.

When you train AI models, you are teaching by example. You show a model different pieces of data, and it learns what that data represents. If you show it pictures of dogs and pictures of cats, you have to tell the model “this is a dog” and “this is a cat.” If you want to train a model to tell a positive review from a negative one, you have to tell it, “this is a positive review,” and “this is a negative review.”

The values you are training the model to recognize are your prediction targets, and they are often called labels or tags. Business data frequently arrives already tagged, because the key values that matter to your business are carefully watched and optimized. If you are tackling a new problem, you might first have to tag (or classify) the training data before building your model. Prediction targets can be categories or numbers.

Once you train a model, you want to know how well it will work.

It’s standard practice when training AI models to withhold a portion of the data from the training process. This withheld data is then used to validate the performance of the model. The first-pass look at performance is model accuracy. And, of course, the common objective is to try and make the model as good as possible. The truth is that a model can only ever be as good as the data used to train it.
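A minimal sketch of the holdout idea, using only the Python standard library. The data, the `fit_threshold` toy "model," and the 25% test fraction are all assumptions made for illustration; a real project would normally use a library model and a larger dataset.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and hold back a fraction that training never sees."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def fit_threshold(train_rows):
    """Toy 'training': pick the score cutoff that best separates the training labels."""
    candidates = sorted({score for score, _ in train_rows})

    def hits(cutoff):
        return sum((score >= cutoff) == label for score, label in train_rows)

    return max(candidates, key=hits)

def accuracy(cutoff, rows):
    """Fraction of rows where 'score >= cutoff' matches the known label."""
    return sum((score >= cutoff) == label for score, label in rows) / len(rows)

# Hypothetical labelled examples: (engagement score, purchased?)
data = [(1, False), (2, False), (3, False), (4, True), (5, False), (6, True),
        (7, True), (8, False), (9, True), (10, True), (2, False), (9, True)]

train_rows, test_rows = train_test_split(data)
cutoff = fit_threshold(train_rows)
print(f"train accuracy: {accuracy(cutoff, train_rows):.2f}")
print(f"test accuracy:  {accuracy(cutoff, test_rows):.2f}")
```

The number to report is the accuracy on `test_rows`, because those rows played no part in choosing the cutoff.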

One common issue is that a first-time modeler will upload the combined set of company data and then predict a KPI somewhere in the middle of the customer journey. The model performance report will return 100% accuracy against the test set. Great, right? Actually not, and it’s really important to understand the following point: if your machine learning model is always right, it’s almost certainly because it’s cheating – you have trained it on causal data, meaning an input that is only recorded once the outcome you are predicting has already happened. This problem is commonly called target leakage.

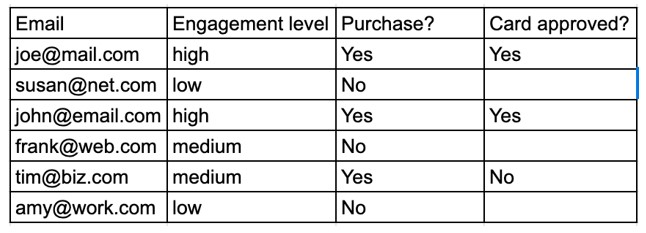

Understanding how models learn

Let’s look at an example where you want to predict if any given customer will purchase a product. In the dataset below, we have some information about a sales funnel. We have email addresses; “engagement level,” which is a sum of actions the user has taken (downloading white papers, speaking with sales, etc.); purchase, which records if they bought a product; and the last column which records if their credit card has been approved.

Looking at this simple dataset we can draw some conclusions:

- Users with low engagement are unlikely to purchase

- Users with high engagement are more likely to purchase

- Users with medium engagement may or may not purchase

- When purchases are made, the credit card will get approved two out of three times

If we had more data in the set, the resolution of those conclusions would get better. We would probably learn a more exact percentage of the time each level of engagement results in a purchase, a more accurate value for credit card approvals, etc.
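The conclusions above can be read directly off the table as simple rates. The sketch below uses a hypothetical six-row stand-in for the dataset: the email addresses and the individual rows are invented, and only the overall pattern follows the conclusions listed in the text.

```python
from collections import defaultdict

# Hypothetical stand-in for the funnel table (emails and rows are invented).
ROWS = [
    {"email": "ann@example.com", "engagement": "low",    "purchase": "no",  "card_approved": ""},
    {"email": "bob@example.org", "engagement": "low",    "purchase": "no",  "card_approved": ""},
    {"email": "cat@example.com", "engagement": "medium", "purchase": "no",  "card_approved": ""},
    {"email": "dan@example.net", "engagement": "medium", "purchase": "yes", "card_approved": "yes"},
    {"email": "eve@example.net", "engagement": "high",   "purchase": "yes", "card_approved": "yes"},
    {"email": "fay@example.org", "engagement": "high",   "purchase": "yes", "card_approved": "no"},
]

def purchase_rate_by_engagement(rows):
    """Share of users at each engagement level who purchased."""
    totals, buyers = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row["engagement"]] += 1
        buyers[row["engagement"]] += row["purchase"] == "yes"
    return {level: buyers[level] / totals[level] for level in totals}

def card_approval_rate(rows):
    """Share of purchases whose credit card was approved."""
    purchases = [row for row in rows if row["purchase"] == "yes"]
    return sum(row["card_approved"] == "yes" for row in purchases) / len(purchases)

rates = purchase_rate_by_engagement(ROWS)
approval = card_approval_rate(ROWS)
print(rates)
print(round(approval, 2))
```

With only two users per engagement level, each rate can take just three values (0, 0.5, or 1), which is why more data would sharpen these estimates.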

So what happens if we take this dataset and use it to train machine learning or AI models to predict if a user will purchase or not? The model will look at all of the available data and report that it can predict “purchase” with 100% accuracy. And that should be your first flag that something is wrong.

It’s called machine learning for a reason – models will use every piece of information that’s made available to them at training time; they do not apply any common sense. Did you catch how the model is cheating yet? It’s using the “card approved” variable to determine if a user will purchase. The model has observed and learned a causal relationship in the data:

If the field “card approved” is blank, then “purchase” = no

And if the field “card approved” is not blank, then “purchase” = yes

The model trained in this example would be broken if deployed in the real world. Because you would call the model prior to purchase, it would never see a value for “card approved” and would therefore never predict that a user would purchase.
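To make the failure concrete, here is a rough sketch of the shortcut rule and what happens to it at prediction time. The rows are a hypothetical stand-in for the dataset (the emails and values are invented), and `leaky_model` is an illustrative name for the rule the model is described as learning, not output from a real training run.

```python
COLUMNS = ("email", "engagement", "purchase", "card_approved")
# Hypothetical stand-in for the funnel table (emails and rows are invented).
ROWS = [dict(zip(COLUMNS, values)) for values in [
    ("ann@example.com", "low",    "no",  ""),
    ("bob@example.org", "low",    "no",  ""),
    ("cat@example.com", "medium", "no",  ""),
    ("dan@example.net", "medium", "yes", "yes"),
    ("eve@example.net", "high",   "yes", "yes"),
    ("fay@example.org", "high",   "yes", "no"),
]]

def leaky_model(row):
    """The learned shortcut: a non-blank 'card_approved' means a purchase happened."""
    return "yes" if row["card_approved"] != "" else "no"

# On historical data the shortcut is never wrong.
historical_accuracy = sum(leaky_model(r) == r["purchase"] for r in ROWS) / len(ROWS)

# At prediction time the purchase has not happened yet, so the field is always blank.
live_rows = [{**r, "card_approved": ""} for r in ROWS]
live_predictions = [leaky_model(r) for r in live_rows]

print(historical_accuracy)
print(live_predictions)
```

The same rule that looks perfect on historical data predicts "no" for every live user, including the ones who would go on to buy.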

Fortunately, it is a simple fix – remove the “card approved” variable from the training data, and you will train a model that is reasonably accurate. It will be forced to learn the relationship between engagement and purchase. Because it only has a small set of data to work with, the model will learn the following rules:

- Users with high engagement = purchase (it has two examples of this state)

- Users with low engagement = not purchase (it has two examples of this state)

- Users with medium engagement are a toss-up, so the model falls back on the email: it predicts based on whether the address more closely resembles the medium-engagement user who did not purchase or the one who did.
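The fix can be sketched as follows, under some loud assumptions: the rows are a hypothetical stand-in for the dataset, the email column is left out for brevity, the "model" is a plain majority vote per engagement level, and ties are broken with a fixed default rather than the email comparison described above.

```python
from collections import Counter, defaultdict

# Hypothetical stand-in for the funnel table (email column omitted for brevity).
ROWS = [
    {"engagement": "low",    "purchase": "no",  "card_approved": ""},
    {"engagement": "low",    "purchase": "no",  "card_approved": ""},
    {"engagement": "medium", "purchase": "no",  "card_approved": ""},
    {"engagement": "medium", "purchase": "yes", "card_approved": "yes"},
    {"engagement": "high",   "purchase": "yes", "card_approved": "yes"},
    {"engagement": "high",   "purchase": "yes", "card_approved": "no"},
]

# The fix: drop the leaking column before training.
training_rows = [{k: v for k, v in row.items() if k != "card_approved"} for row in ROWS]

def fit(rows):
    """Count purchase outcomes for each engagement level."""
    votes = defaultdict(Counter)
    for row in rows:
        votes[row["engagement"]][row["purchase"]] += 1
    return dict(votes)

def predict(votes, engagement, tie_break="no"):
    """Majority vote; 'tie_break' is an assumed default standing in for the email rule."""
    counts = votes.get(engagement, Counter())
    if counts["yes"] == counts["no"]:
        return tie_break
    return "yes" if counts["yes"] > counts["no"] else "no"

model = fit(training_rows)
accuracy = sum(predict(model, r["engagement"]) == r["purchase"] for r in training_rows) / len(training_rows)
print({level: predict(model, level) for level in ("low", "medium", "high")})
print(round(accuracy, 2))
```

In this sketch the model gets one of the two medium-engagement users wrong, which is the kind of honest error a working model is expected to make.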

The new model will function correctly but will sometimes guess wrong. Is the user’s email a big contributor to purchase behavior for the medium-engaged users? Actually, it might be – many businesses in the B2B space find that prospective customers with work emails are more likely to buy than those with personal emails like @gmail.

Key takeaways about training AI models

Models are prediction engines – they forecast probabilities. They can and should be wrong some of the time; that means they are working. There is still immense value to be captured by betting on probable outcomes (even those with the slimmest of margins – just look at Las Vegas casinos).
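As a rough illustration of why slim margins still pay, consider the expected profit from many small even-money bets. The win probability, stake, and number of bets below are made-up numbers chosen for the arithmetic, not figures from any real business or casino.

```python
def expected_profit(p_win, stake, n_bets):
    """Expected profit from n even-money bets, each won with probability p_win."""
    return n_bets * stake * (p_win - (1 - p_win))

# Hypothetical numbers: a 51% chance of being right, $1 per bet, 10,000 bets.
profit = expected_profit(p_win=0.51, stake=1.0, n_bets=10_000)
print(round(profit, 2))
```

Being right only 51% of the time is wrong almost half the time, yet the expected profit is positive and grows with the number of bets.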

Models will learn the most efficient pattern in their training data. Keep this in mind, because sometimes you will find the model learning something you didn’t intend, which makes it work less well as a real-world tool.

If your model performance is 100%, it’s almost certain that you have included a causal input in the training set. You will need to identify that variable, remove it, and re-train.
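One rough way to hunt for such a variable is to check whether any single column, on its own, fully determines the target. The sketch below is a heuristic under stated assumptions, not a standard library routine: `perfect_predictors` is a hypothetical helper, the rows are an invented stand-in for the dataset, and columns that are unique per row (such as email) are skipped because they trivially "determine" everything.

```python
from collections import defaultdict

COLUMNS = ("email", "engagement", "purchase", "card_approved")
# Hypothetical stand-in for the funnel table (emails and rows are invented).
ROWS = [dict(zip(COLUMNS, values)) for values in [
    ("ann@example.com", "low",    "no",  ""),
    ("bob@example.org", "low",    "no",  ""),
    ("cat@example.com", "medium", "no",  ""),
    ("dan@example.net", "medium", "yes", "yes"),
    ("eve@example.net", "high",   "yes", "yes"),
    ("fay@example.org", "high",   "yes", "no"),
]]

def perfect_predictors(rows, target):
    """Return columns whose every value maps to exactly one target value."""
    flagged = []
    for column in rows[0]:
        if column == target:
            continue
        if len({row[column] for row in rows}) == len(rows):
            continue  # unique per row (an identifier): skip rather than flag
        outcomes = defaultdict(set)
        for row in rows:
            outcomes[row[column]].add(row[target])
        if all(len(seen) == 1 for seen in outcomes.values()):
            flagged.append(column)
    return flagged

suspects = perfect_predictors(ROWS, target="purchase")
print(suspects)
```

A flagged column is only a suspect. The deciding question is still whether its value would be known at the moment you call the model.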
