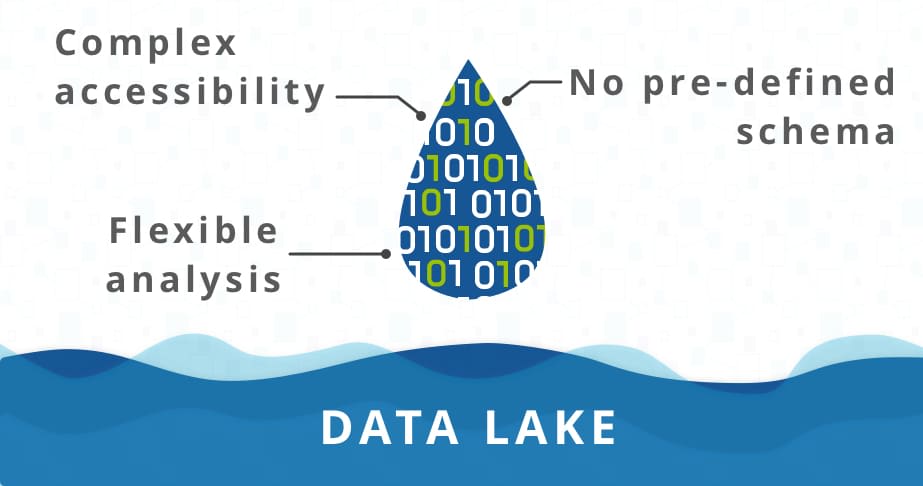

Data lakes offer a versatility and scalability of storage that traditional data storage solutions have long lacked. Because a data lake does not impose a fixed schema when data is written, users from different operational silos of a business can store, retrieve, and use multi-structured data from many sources. Customer purchasing data, supply chain metrics, product behavior on online portals, and marketing insights can therefore be studied together in an integrated manner.

Furthermore, cloud-based data lakes can connect cross-functional data and make it easier to understand, enabling real-time decision analytics and advanced machine learning. The data lake can serve as the foundation for analytics and computation, but without deliberate management it may not be long before your data lake becomes a data swamp.

And, as Nishant Nishchal, Partner at Kearney, puts it, the biggest risk is that the ‘data lake’ will become a ‘data swamp.’ Poor master data management, a lack of adequate data quality rules, and poor governance all play a role.

Inadequate management and organization are what turn a data lake into a data swamp. To scale and use a data lake over the long term, businesses must ensure data quality and implement organizational standards early in the lake's setup. Because traditional data lakes are not designed for editing or updating records in place, corrections and reloads are typically written as new copies, which can cause significant data duplication and redundancy. Versioning tools and partitioned schemas can help manage these issues.
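A minimal sketch of how versioning plus partitioning can contain duplicates, assuming every record carries a business key and an integer version number (both hypothetical fields, not taken from any particular tool). Open table formats such as Apache Iceberg or Delta Lake handle this at scale; the snippet only illustrates the idea in plain Python, with a made-up `lake/orders` root path.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Record:
    key: str          # hypothetical business key, e.g. an order ID
    version: int      # hypothetical write counter; higher means newer
    event_date: date  # decides which partition the record lands in
    payload: dict


def partition_path(root: str, rec: Record) -> str:
    """Build a Hive-style partition path such as root/event_date=2024-01-31."""
    return f"{root}/event_date={rec.event_date.isoformat()}"


def latest_versions(records: list[Record]) -> list[Record]:
    """Collapse duplicates by keeping only the highest version of each key."""
    latest: dict[str, Record] = {}
    for rec in records:
        current = latest.get(rec.key)
        if current is None or rec.version > current.version:
            latest[rec.key] = rec
    return sorted(latest.values(), key=lambda r: r.key)


# A correction to order A1 arrives as a new, higher-versioned copy
# instead of an in-place update, so both copies sit in the lake.
rows = [
    Record("A1", 1, date(2024, 1, 31), {"qty": 2}),
    Record("A1", 2, date(2024, 1, 31), {"qty": 3}),
    Record("B7", 1, date(2024, 2, 1), {"qty": 1}),
]
for rec in latest_versions(rows):
    print(partition_path("lake/orders", rec), rec.payload)
```

Reading "latest version per key" rather than deleting old copies keeps history available for audits, while partitioning by date limits how much data each deduplication pass has to scan.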

Data lakes were designed to collect raw data from many sources in a single repository, on top of which data layers can be built to meet the needs of different use cases. But as lakes grow more complex and accumulate vast amounts of data, creating new data products that adhere to the organization's standards can take too long, explained Prashanth Kaddi, Partner, Deloitte India.

According to Kaddi, the concept of a “data mesh,” supported by central, self-service data infrastructure, has emerged as a solution to these challenges. Whatever the architecture, three areas need attention:

Create metadata – When data from many sources is centralized, raw data is often stored with too little context, which limits its usefulness. To derive value, organizations need metadata that helps users locate the data, make sense of it, and trace relationships between the datasets stored in the lake.
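To make the three uses of metadata concrete (locating, understanding, relating), here is a toy in-memory catalog. It is a sketch under stated assumptions: the `DatasetMetadata` fields, the `Catalog` class, and the dataset names are all hypothetical; production lakes would rely on a dedicated catalog service instead.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    name: str
    path: str          # where the raw files live in the lake
    owner: str         # team accountable for the dataset
    description: str   # human-readable context for the raw data
    tags: set[str] = field(default_factory=set)
    upstream: list[str] = field(default_factory=list)  # names of source datasets


class Catalog:
    """Hypothetical in-memory catalog keyed by dataset name."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetMetadata] = {}

    def register(self, meta: DatasetMetadata) -> None:
        self._entries[meta.name] = meta

    def find_by_tag(self, tag: str) -> list[str]:
        """Locate: return the sorted names of datasets carrying the tag."""
        return sorted(n for n, m in self._entries.items() if tag in m.tags)

    def lineage(self, name: str) -> list[str]:
        """Relate: collect every upstream dataset, walking links depth-first."""
        seen: list[str] = []
        stack = list(self._entries[name].upstream)
        while stack:
            parent = stack.pop()
            if parent in seen:
                continue
            seen.append(parent)
            if parent in self._entries:
                stack.extend(self._entries[parent].upstream)
        return seen


catalog = Catalog()
catalog.register(DatasetMetadata("raw_orders", "lake/raw/orders", "sales-eng",
                                 "Order events from the web shop", {"sales"}))
catalog.register(DatasetMetadata("daily_revenue", "lake/curated/revenue", "finance",
                                 "Revenue per day", {"sales", "finance"}, ["raw_orders"]))
print(catalog.find_by_tag("sales"))
print(catalog.lineage("daily_revenue"))
```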

Data security – Because data lakes typically hold large amounts of information, organizations must control which users can access which parts of the data. Implementing appropriate security controls, such as role-based access control (RBAC), better positions organizations to meet increasingly stringent compliance requirements.
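Role-based access boils down to two mappings: users to roles, and roles to the parts of the lake they may read. The sketch below assumes path-prefix grants and deny-by-default semantics; the role names, users, and paths are hypothetical, and a real deployment would enforce this through the cloud provider's access-management policies rather than application code.

```python
# Hypothetical grants: each role may read paths under these prefixes.
ROLE_GRANTS: dict[str, set[str]] = {
    "analyst": {"curated/"},
    "data_engineer": {"raw/", "curated/"},
    "finance_analyst": {"curated/finance/"},
}

# Hypothetical user-to-role assignments.
USER_ROLES: dict[str, set[str]] = {
    "priya": {"analyst"},
    "omar": {"data_engineer"},
    "lena": {"finance_analyst"},
}


def can_read(user: str, path: str) -> bool:
    """Deny by default; allow only if one of the user's roles grants a matching prefix."""
    for role in USER_ROLES.get(user, set()):
        if any(path.startswith(prefix) for prefix in ROLE_GRANTS.get(role, set())):
            return True
    return False


for user, path in [("priya", "raw/orders/2024-01-31.csv"),
                   ("omar", "raw/orders/2024-01-31.csv"),
                   ("lena", "curated/finance/revenue.parquet"),
                   ("lena", "curated/marketing/campaigns.parquet")]:
    print(user, path, can_read(user, path))
```

Granting access to roles rather than individuals means onboarding or offboarding a user is a single change to `USER_ROLES`, which also keeps the access picture auditable.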

Data governance – To obtain reliable output, incoming data usually has to be cleansed, consolidated, and standardized, and in a data lake the responsibility for preparing the data falls primarily on business users.
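The cleanse, consolidate, and standardize steps can be illustrated on customer records. This is a sketch only: the field names, the country alias table, and the accepted date formats (including the day-first reading of slashed dates) are assumptions made for the example.

```python
from datetime import datetime
from typing import Optional

# Hypothetical mapping of free-text country values to ISO 3166-1 alpha-2 codes.
COUNTRY_ALIASES = {"usa": "US", "united states": "US", "india": "IN"}

# Accepted input date formats (an assumption; slashed dates are read day-first).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text: Optional[str]) -> Optional[str]:
    """Standardize: return an ISO 8601 date string, or None if no format matches."""
    if not text:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize(record: dict) -> Optional[dict]:
    """Cleanse one raw record; return None if it lacks a usable email."""
    email = (record.get("email") or "").strip().lower()
    if "@" not in email:
        return None
    country = (record.get("country") or "").strip().lower()
    return {
        "email": email,
        "country": COUNTRY_ALIASES.get(country, country.upper() or None),
        "signup_date": parse_date(record.get("signup")),
    }


def consolidate(records: list[dict]) -> list[dict]:
    """Merge records sharing an email; the first non-empty value per field wins."""
    merged: dict[str, dict] = {}
    for raw in records:
        rec = standardize(raw)
        if rec is None:
            continue  # a real pipeline would log or quarantine the rejected row
        target = merged.setdefault(rec["email"], {})
        for field_name, value in rec.items():
            if target.get(field_name) is None:
                target[field_name] = value
    return list(merged.values())


raw_rows = [
    {"email": " Ana@Example.com ", "country": "USA", "signup": "2024-03-05"},
    {"email": "ana@example.com", "country": None, "signup": "05/03/2024"},
    {"email": "not-an-email", "country": "India"},
]
print(consolidate(raw_rows))
```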

Data lake solutions offer numerous benefits; however, getting the most out of the technology means keeping a few practical tips in mind.

According to Sonu Somapalan, co-founder of Tenovia, it is critical to identify the data sources and how frequently each one adds data to the lake, and to consider the cost structure and scalability of storing that recurring data in a cloud data lake. Somapalan also stresses the need to establish data quality processes and automation for efficient data management and governance.
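Knowing each source's ingestion rate makes a back-of-the-envelope storage projection possible. The sketch below covers storage only (no request, egress, or compute charges) and rests on simplifying assumptions spelled out in the docstring; the source names, volumes, growth rate, and the per-GB price are placeholders, not any cloud provider's actual pricing.

```python
def project_storage(daily_gb_per_source: dict[str, float],
                    months: int,
                    price_per_gb_month: float,
                    monthly_growth: float = 0.0) -> list[tuple[int, float, float]]:
    """Return (month, stored_gb, monthly_storage_cost) for each month.

    Simplifying assumptions: nothing is ever deleted, every month has 30 days,
    storage is billed on the volume held at month end, and ingestion grows by
    `monthly_growth` (0.05 means 5%) each month.
    """
    daily_total = sum(daily_gb_per_source.values())
    stored = 0.0
    projection = []
    for month in range(1, months + 1):
        # Data added this month, scaled by compounded ingestion growth.
        stored += daily_total * 30 * (1 + monthly_growth) ** (month - 1)
        projection.append((month, stored, stored * price_per_gb_month))
    return projection


# Hypothetical sources and a placeholder price, not a real provider rate.
sources = {"pos_sales": 2.0, "web_clickstream": 8.0}
for month, gb, cost in project_storage(sources, months=12,
                                       price_per_gb_month=0.02, monthly_growth=0.05):
    print(f"month {month:2d}: {gb:8.1f} GB stored, ~{cost:7.2f} per month")
```

Because retained data accumulates, the monthly bill keeps rising even when ingestion is flat, which is why retention and tiering policies belong in the cost discussion from the start.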

Once the data is in the lake, it will need data pipelines for processes such as ETL, analytics, and visualization, Somapalan added.
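As a rough illustration of the ETL stage, here is a minimal extract-transform-load sketch in plain Python. The CSV input, the order columns, the JSON Lines output, and the `date=` partition naming are all assumptions for the example; real pipelines usually run under an orchestrator and write columnar formats.

```python
import csv
import io
import json
import tempfile
from collections import defaultdict
from pathlib import Path


def extract(csv_text: str) -> list[dict]:
    """Extract: read raw CSV rows (a string stands in for a real source system)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: list[dict]) -> list[dict]:
    """Transform: cast types and drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            clean.append({
                "order_id": row["order_id"],
                "date": row["date"],
                "amount": float(row["amount"]),
            })
        except (KeyError, TypeError, ValueError):
            continue  # a production pipeline would quarantine these rows
    return clean


def load(rows: list[dict], root: Path) -> list[Path]:
    """Load: write one JSON Lines file per date partition under root."""
    by_date: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        by_date[row["date"]].append(row)
    written = []
    for day, day_rows in sorted(by_date.items()):
        target = root / f"date={day}" / "part-0000.jsonl"
        target.parent.mkdir(parents=True, exist_ok=True)
        with target.open("w", encoding="utf-8") as fh:
            for row in day_rows:
                fh.write(json.dumps(row) + "\n")
        written.append(target)
    return written


RAW = "order_id,date,amount\nA1,2024-01-31,19.99\nA2,2024-01-31,oops\nB7,2024-02-01,5\n"

with tempfile.TemporaryDirectory() as tmp:
    for path in load(transform(extract(RAW)), Path(tmp)):
        print(path.relative_to(tmp), path.read_text(encoding="utf-8").strip())
```

Keeping the three stages as separate functions lets each be tested and rerun independently, and the curated output can then feed the analytics and visualization steps.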