AI pioneer Geoffrey Hinton predicts that machine-learning forms of artificial intelligence will spark a revolution in computer systems: a new kind of hardware-software union that could put AI in your toaster.

Hinton, who gave the final keynote address on Thursday at the NeurIPS conference in New Orleans, said the machine learning research community has been slow to grasp what deep learning implies for the way computers are built.

He believes we are going to see a very different kind of computer. That is still a few years away, he continued, but there are plenty of good reasons to investigate this entirely different type of computer.

To date, every digital computer has been designed to be “immortal,” with hardware reliable enough that the same software runs identically anywhere. As Hinton put it, the knowledge is immortal: we can run the same programs on different physical hardware.

According to Hinton, this requirement means digital computers cannot take advantage of all kinds of variable, stochastic, flaky, analog, unreliable properties of the hardware, which might be very useful to us. Those properties are too unreliable to let two different pieces of hardware behave exactly the same way at the level of the instructions.

Future computer systems, Hinton said, will take a different approach: they will be “neuromorphic” and “mortal,” meaning that each computer will tightly bind the software that represents a neural net to messy hardware, messy in the sense of having analog rather than digital elements, incorporating uncertainty, and evolving over time.

The alternative, Hinton said, is to give up on the separation of hardware and software, an idea computer scientists strongly dislike because it attacks one of their foundational principles.

The result would be what he calls mortal computation, in which the knowledge a system has learned and its hardware are inseparable.

Such mortal computers, he said, could be “grown” rather than built in expensive chip fabrication plants.

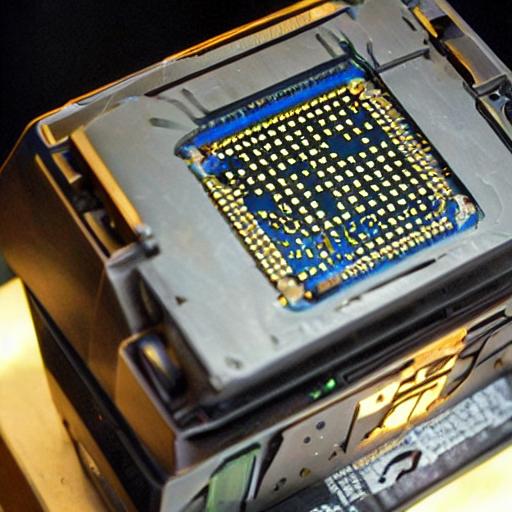

If we do that, he continued, we can use very low-power analog computation, and we can have trillion-way parallelism using things like memristors for the weights. Memristors are a decades-old, experimental kind of chip based on non-linear circuit elements.

You could also grow hardware without knowing precisely how each individual piece of it behaves.
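Hinton did not spell out how memristors would hold weights. In the common description of analog in-memory computing, each weight is stored as a device conductance in a crossbar: input voltages drive the rows, and by Ohm's law and Kirchhoff's current law each column wire sums its currents, I_j = Σ_i G_ij V_i, so an entire matrix-vector product happens in one physical step. The pure-Python sketch below simulates that idea under stated assumptions: signed weights use a pair of devices (one for the positive part, one for the negative part), and each simulated chip has its own fixed random deviation per device. The function names and the `g_max`, `variation`, and `seed` parameters are hypothetical, chosen only for illustration. Two chips programmed with identical weights give slightly different answers, which is the kind of hardware-specific behavior that mortal computation would learn to live with rather than engineer away.

```python
import random


def program_crossbar(weights, g_max=1e-4, variation=0.05, seed=0):
    """Map signed weights[i][j] (input row i, output column j) onto device conductances.

    Hypothetical model: each weight uses a differential pair of devices, and every
    device deviates from its target by a fixed random factor that depends on `seed`,
    standing in for chip-to-chip manufacturing variation.
    """
    rng = random.Random(seed)
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = g_max / w_max  # siemens per unit of weight
    g_pos, g_neg = [], []
    for row in weights:
        pos_row, neg_row = [], []
        for w in row:
            pos_row.append(scale * max(w, 0.0) * (1.0 + rng.gauss(0.0, variation)))
            neg_row.append(scale * max(-w, 0.0) * (1.0 + rng.gauss(0.0, variation)))
        g_pos.append(pos_row)
        g_neg.append(neg_row)
    return g_pos, g_neg, scale


def column_currents(g_pos, g_neg, voltages):
    """Net current per output: positive-device current minus negative-device current.

    Each device contributes I = G * V (Ohm's law); the column wire sums them (Kirchhoff).
    """
    n_out = len(g_pos[0])
    currents = [0.0] * n_out
    for i, v in enumerate(voltages):
        for j in range(n_out):
            currents[j] += g_pos[i][j] * v - g_neg[i][j] * v
    return currents


def analog_matvec(weights, x, **chip):
    """Program a simulated chip, apply x as voltages, and rescale currents to weight units."""
    g_pos, g_neg, scale = program_crossbar(weights, **chip)
    return [c / scale for c in column_currents(g_pos, g_neg, x)]


if __name__ == "__main__":
    W = [[0.5, -1.0], [2.0, 0.25], [-0.75, 1.5]]
    x = [1.0, 0.5, -2.0]
    print("ideal :", analog_matvec(W, x, variation=0.0))
    print("chip A:", analog_matvec(W, x, seed=1))
    print("chip B:", analog_matvec(W, x, seed=2))
```

With `variation=0.0` the simulated chip reproduces the exact digital product; with any nonzero variation, each seed (each "chip") drifts from it in its own way.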

Hinton told the NeurIPS audience that the new mortal computers will not replace traditional digital computers. It won’t be the computer that manages your bank account and knows exactly how much money you have, he explained.

Instead, it will be used to put something like GPT-3 into your toaster for a dollar, so that, running on a few watts, your toaster can hold a conversation with you.

Hinton was invited to speak because of a paper he co-authored a decade ago with his graduate students Alex Krizhevsky and Ilya Sutskever, “ImageNet Classification with Deep Convolutional Neural Networks.” The conference gave the paper its “Test of Time” award for having “a major impact” on the field. Published in 2012, the paper described the first convolutional neural network to win the ImageNet image recognition competition, and it set in motion the current era of AI.

Hinton, a recipient of the ACM Turing Award, computer science’s equivalent of the Nobel Prize, formed the Deep Learning Conspiracy with his fellow Turing Award winners Yann LeCun of Meta and Yoshua Bengio of Montreal’s MILA institute for AI. The group revived the dormant field of machine learning.

In that sense, Hinton is considered AI royalty.

For most of his invited talk, Hinton discussed a new way of training neural networks, which he calls the forward-forward algorithm. It does away with backpropagation, the training method used in nearly all neural networks. He suggested that by removing backprop, forward-forward nets might more closely approximate what actually happens in the brain.

Hinton, an emeritus professor at the University of Toronto, has posted a draft paper on the forward-forward work online (PDF).
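The core idea in Hinton's draft paper is that each layer is trained with its own local objective instead of a backward pass. A layer's "goodness" is the sum of its squared activities, and the probability that an input is real ("positive") data is taken as the logistic function of goodness minus a threshold; the layer adjusts its weights so goodness rises above the threshold for positive data and falls below it for negative data. The pure-Python sketch below trains a single ReLU layer that way on a deliberately tiny toy dataset. The data, threshold, learning rate, positive weight initialization, and the names `FFLayer` and `train` are assumptions for illustration, not code from the paper, and the sketch omits the paper's stacking of layers, where the length of each layer's activity vector is normalized before it is passed to the next layer.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z):
    """log(1 + exp(z)), rearranged to avoid overflow for large |z|."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


class FFLayer:
    """A fully connected ReLU layer trained only with a local, layer-wise objective."""

    def __init__(self, n_in, n_out, threshold=2.0, seed=0):
        rng = random.Random(seed)
        # Assumption for this toy: small positive initial weights, so every unit
        # starts out active on non-negative inputs.
        self.w = [[rng.uniform(0.3, 0.7) for _ in range(n_in)] for _ in range(n_out)]
        self.threshold = threshold

    def forward(self, x):
        return [max(0.0, sum(w_jk * x_k for w_jk, x_k in zip(row, x))) for row in self.w]

    def goodness(self, x):
        # Goodness = sum of squared activities of this layer.
        return sum(h * h for h in self.forward(x))

    def loss(self, x, positive):
        # Logistic loss on (goodness - threshold): push positive data above the
        # threshold and negative data below it.
        margin = self.goodness(x) - self.threshold
        return softplus(-margin) if positive else softplus(margin)

    def grad(self, x, positive):
        h = self.forward(x)
        p = sigmoid(sum(v * v for v in h) - self.threshold)
        dloss_dgood = -(1.0 - p) if positive else p
        # d(goodness)/d(w[j][k]) = 2 * h[j] * x[k]; inactive units (h[j] == 0) get no update.
        return [[dloss_dgood * 2.0 * h_j * x_k for x_k in x] for h_j in h]

    def train_step(self, x, positive, lr=0.1):
        for row, grad_row in zip(self.w, self.grad(x, positive)):
            for k, g in enumerate(grad_row):
                row[k] -= lr * g


def train(layer, positives, negatives, epochs=200, lr=0.1):
    """Alternate local updates on positive and negative examples; no backward pass."""
    for _ in range(epochs):
        for x in positives:
            layer.train_step(x, positive=True, lr=lr)
        for x in negatives:
            layer.train_step(x, positive=False, lr=lr)
    return layer


if __name__ == "__main__":
    positives = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    negatives = [[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
    layer = train(FFLayer(n_in=4, n_out=8), positives, negatives)
    for x in positives + negatives:
        print(x, "goodness:", round(layer.goodness(x), 3))
```

Each update uses only the layer's own input and activities, so no error signal has to travel backward through a stack of layers.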

According to Hinton, the forward-forward approach might be well suited to mortal computation hardware.

For something like this to happen, Hinton said, we need a learning procedure that runs in a particular piece of hardware and learns to make use of that hardware’s specific properties without knowing what all those properties are. He added that he thinks the forward-forward algorithm is a promising candidate for what that little procedure might be.

One obstacle to developing the new analog mortal computers, he said, is people’s attachment to the reliability of running the same piece of software on millions of devices.

You’d replace that, he said, by having each of those cell phones start out as a baby cell phone and learn how to be a cell phone. And that is very painful.

Even the most skilled engineers in the field will be reluctant, out of fear of the unknown, to abandon the paradigm of perfect, identical, immortal computers.

Even among people interested in analog computation, he said, very few are still willing to give up on immortality, because they are attached to consistency and predictability. If you want your analog hardware to do the same thing every time, you’ve got a real problem with all this stray electrical stuff.