The machine learning community, particularly in the fields of computer vision and natural language processing, has a data culture problem. That's according to "Data and its (dis)contents," a survey of research into the community's dataset collection and use practices published earlier this month by Amandalynne Paullada, Inioluwa Deborah Raji, Emily Bender, Emily Denton, and Alex Hanna.

What's needed is a shift away from reliance on the large, poorly curated datasets used to train machine learning models. Instead, the study recommends a culture that cares for the people who are represented in datasets and respects their privacy and property rights. But in today's machine learning environment, the survey's authors said, "anything goes."

The work also calls for more rigorous data management and documentation practices. Datasets made this way will undoubtedly require more time, money, and effort but will “encourage work on approaches to machine learning that go beyond the current paradigm of techniques idolizing scale.”

“We argue that fixes that focus narrowly on improving datasets by making them more representative or more challenging might miss the more general point raised by these critiques, and we’ll be trapped in a game of dataset whack-a-mole rather than making progress, so long as notions of ‘progress’ are largely defined by performance on datasets,” the paper reads. “Should this come to pass, we predict that machine learning as a field will be better positioned to understand how its technology impacts people and to design solutions that work with fidelity and equity in their deployment contexts.”

Events over the past year have exposed shortcomings in the machine learning community that often harm people from marginalized communities. Google fired Timnit Gebru in what Googlers have called a case of "unprecedented research censorship." Since then, Reuters reported on Wednesday, the company has begun reviewing research papers on "sensitive topics," and on at least three occasions authors have been asked not to put Google technology in a negative light, according to internal communications and people familiar with the matter. And yet a Washington Post profile of Gebru this week revealed that Google AI chief Jeff Dean had asked her this fall to investigate the negative impact of large language models.

In a previous conversation about GPT-3, coauthor Emily Bender told VentureBeat that she wants to see the NLP community prioritize good science. Bender was co-lead author, with Gebru, of a paper that came to light earlier this month after Google fired Gebru. That paper examined how the use of large language models can affect marginalized communities. Last week, organizers of the Fairness, Accountability, and Transparency (FAccT) conference accepted the paper for publication.

Also last week, Hanna and her colleagues on Google's Ethical AI team sent a note to Google leadership demanding that Gebru be reinstated. The same day, members of Congress familiar with algorithmic bias sent a letter to Google CEO Sundar Pichai demanding answers.

The company's decision to censor AI researchers and fire Gebru may carry policy implications, in part because Google, along with MIT and Stanford, is among the most active and influential producers of AI research published at major annual academic conferences. Members of Congress have proposed regulation to guard against algorithmic bias, while experts have called for increased taxes on Big Tech, in part to fund independent research. VentureBeat recently spoke with six experts in AI, ethics, and law about the ways Google's AI ethics meltdown could affect policy.

Earlier this month, “Data and its (dis)contents” received an award from organizers of the ML Retrospectives, Surveys and Meta-analyses workshop at NeurIPS, an AI research conference that attracted 22,000 attendees. Nearly 2,000 papers were published at NeurIPS this year, including work related to failure detection for safety-critical systems; methods for faster, more efficient backpropagation; and the beginnings of a project that treats climate change as a machine learning grand challenge.

Another paper by Hanna, presented at the Resistance AI workshop, argues that the machine learning community must resist "scale thinking" and look beyond scale when considering how to address systemic social issues. Hanna spoke with VentureBeat earlier this year about using critical race theory to examine questions of race, identity, and fairness.

In natural language processing in recent years, models built on the Transformer neural network architecture and trained on increasingly large corpora have racked up high scores on benchmarks like GLUE. Google's BERT and its derivatives led the way, followed by models like Microsoft's MT-DNN, Nvidia's Megatron, and OpenAI's GPT-3. Introduced in May, GPT-3 is the largest language model to date. A paper about the model's performance won one of three best paper awards given at NeurIPS this year.

The sheer size of these datasets makes it hard to scrutinize their contents thoroughly. This has led to repeated instances of algorithmic bias, with models returning obscenely biased results about Muslims, people who are queer or do not conform to an expected gender identity, people who are disabled, women, and Black people, among other demographics.

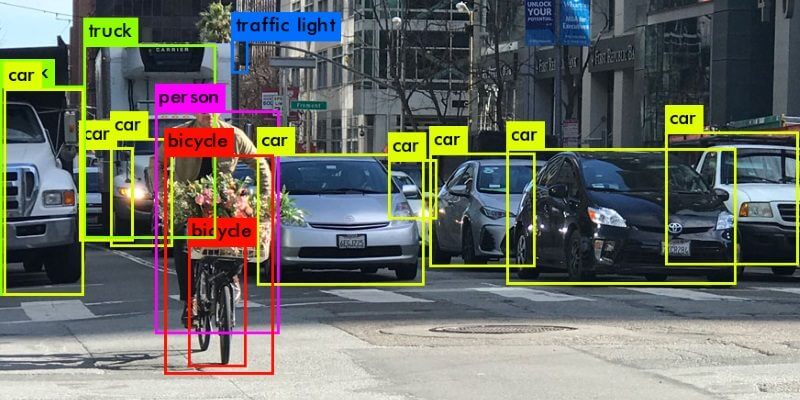

The perils of large datasets are also evident in computer vision, as shown by Stanford University researchers' announcement in December 2019 that they would remove offensive labels and images from ImageNet. Nvidia's StyleGAN model also produced biased results after being trained on a large image dataset. And following the discovery of sexist and racist images and labels, the creators of the 80 Million Tiny Images dataset apologized and asked engineers to delete the material and stop using it.

This article has been published from a wire agency feed without modifications to the text. Only the headline has been changed.