Researchers from Sri Lanka’s University of Moratuwa and the University of Sydney in Australia have proposed a technique for generating new handwritten character training samples from existing samples. The technique leverages Capsule Networks (CapsNets) to tackle the scarcity of labeled training data for character recognition systems in non-mainstream languages.

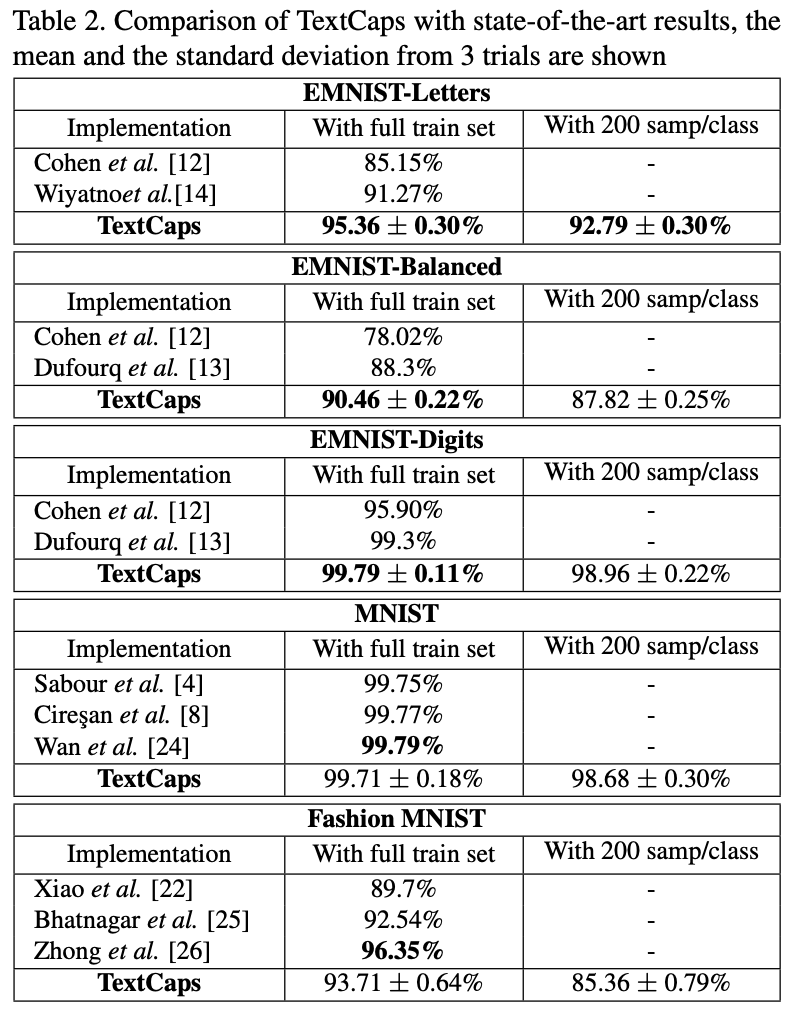

Handwritten character recognition is a well-developed technique for mainstream languages thanks to recent advancements in deep learning and plentiful training data. For many local languages, however, handwritten character recognition remains problematic due to the lack of substantial labeled datasets for training deep learning models. The new technique can achieve character recognition from only 200 training samples per class, surpassing previous comparable character recognition systems on the EMNIST Dataset, which require at least 2400 data points per class.

The technique rests on two components: character recognition with capsule networks, and perturbation of instantiation parameters to generate new image data.

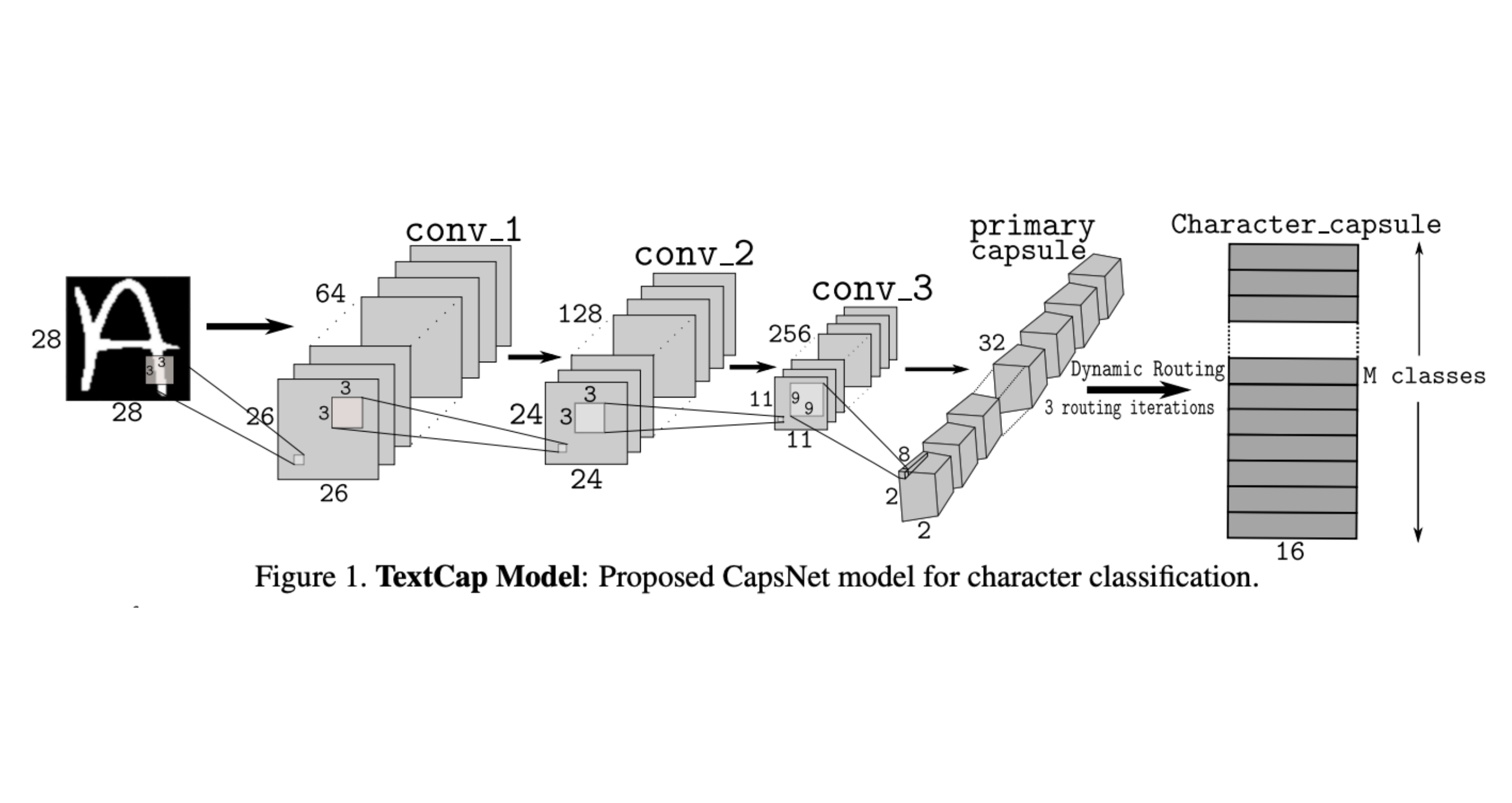

To achieve character recognition, the researchers introduce an architecture consisting of a capsule network and a decoder network, as shown in Fig. 1 and Fig. 2.

The capsule network contains five layers. The first three layers are convolutional layers while the fourth layer is a primary capsule layer and the fifth a fully connected character capsule layer. The decoder network comprises a fully connected layer and five deconvolutional layers with parameters as illustrated in Fig. 2.

While the capsule network performed adequately, the decoder network did not achieve acceptable reconstructions, so the researchers turned to the instantiation parameters in the capsule network to augment the original training samples.

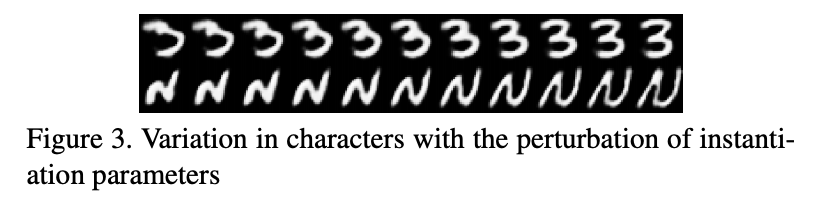

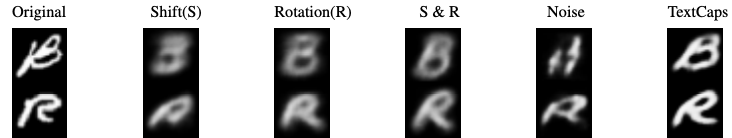

The core idea of the perturbation algorithm is to add controlled random noise to the values of the instantiation vector to generate new images, which effectively enlarges the training dataset. Fig. 3 demonstrates how varying a particular instantiation parameter changes the resulting image.
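The perturbation step can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `perturb_instantiation`, the uniform noise distribution, the `max_noise` bound, and the example vector values are all assumptions made for illustration.

```python
import random

def perturb_instantiation(vector, max_noise=0.1, rng=None):
    """Return a copy of `vector` with bounded random noise added to each entry.

    Assumption: noise is drawn uniformly from [-max_noise, max_noise].
    The article only says the noise is "controlled" and random.
    """
    rng = rng or random.Random()
    return [v + rng.uniform(-max_noise, max_noise) for v in vector]

# Illustrative instantiation vector (made-up values, not from the paper).
original = [0.12, -0.40, 0.05, 0.33]

# A seeded generator keeps the example reproducible.
rng = random.Random(0)
variants = [perturb_instantiation(original, 0.1, rng) for _ in range(5)]
```

Each entry in `variants` stays within `max_noise` of the original vector, so a decoder fed these vectors would be expected to produce slightly different renderings of the same character rather than a different character.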

Because each instantiation parameter controls a particular image property, the researchers developed a technique for generating a new dataset from a limited number of training samples, as Fig. 4 illustrates.
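A rough sketch of such a generation loop follows, assuming a trained capsule network that maps an image to its instantiation vector and a decoder that maps a vector back to an image. The names `augment_dataset`, `toy_encode`, and `toy_decode` are hypothetical, and the toy functions are trivial stand-ins for the trained networks, not anything described in the paper.

```python
import random

def augment_dataset(samples, encode, decode, copies=3, max_noise=0.1, seed=0):
    """Enlarge a labeled dataset by decoding perturbed instantiation vectors.

    `encode` and `decode` are placeholders for a trained capsule network
    and decoder (assumed interfaces, not the authors' API).
    """
    rng = random.Random(seed)
    augmented = list(samples)  # keep the original samples
    for image, label in samples:
        vector = encode(image)
        for _ in range(copies):
            # Assumption: bounded uniform noise on every parameter.
            noisy = [v + rng.uniform(-max_noise, max_noise) for v in vector]
            augmented.append((decode(noisy), label))  # label is unchanged
    return augmented

# Toy stand-ins so the sketch runs without a trained model.
def toy_encode(image):
    return list(image)

def toy_decode(vector):
    return tuple(vector)

small_set = [((0.1, 0.9), "a"), ((0.8, 0.2), "b")]
bigger_set = augment_dataset(small_set, toy_encode, toy_decode, copies=3)
```

With two original samples and three perturbed copies each, `bigger_set` holds eight labeled samples. In practice the enlarged set would then be used to retrain the classifier.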

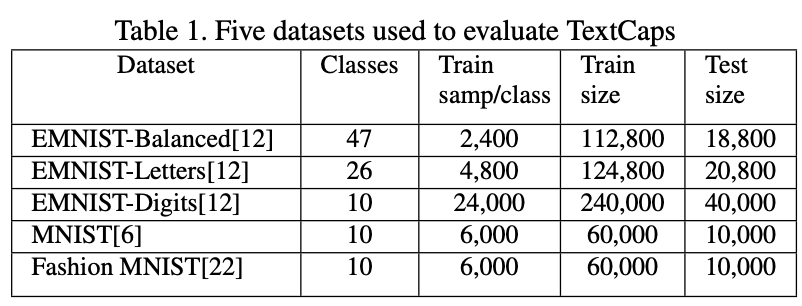

To evaluate handwritten character classification accuracy, the researchers trained TextCaps on both the 200 training samples and the full training dataset, and compared the results with other character recognition systems on the EMNIST Dataset. Table 1 and Table 2 show the test results.

The researchers also evaluated the advantages and limitations of the decoder retraining technique and of generating new image data by perturbation. The results are shown in Fig. 5 and Fig. 6.

The paper *TextCaps: Handwritten Character Recognition with Very Small Datasets* is on arXiv.

Author: Hongxi Li | Editor: Michael Sarazen
