Growing attention on QML

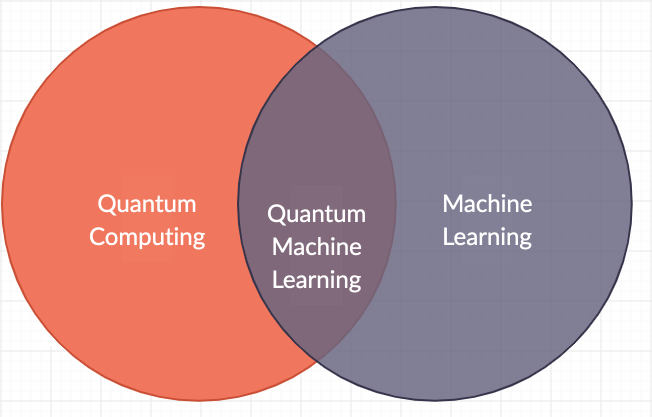

Quantum machine learning (QML), which combines two cutting-edge technologies, quantum computing and machine learning (ML), has recently attracted a lot of interest from the scientific community and tech businesses. By taking advantage of the special properties of the subatomic world, sufficiently large-scale quantum computers could tackle a number of problems more effectively than conventional digital electronics.

Machine learning is a type of artificial intelligence (AI) in which computers identify patterns in data and learn rules that let them draw conclusions in novel or unfamiliar scenarios. The focus on QML has increased with the arrival of ChatGPT (Chat Generative Pre-trained Transformer), a highly advanced AI system that uses ML to drive its human-like interactions, and with the rapid growth in the power and scale of quantum computers.

Start-ups such as Rigetti and IonQ and tech giants such as Google and IBM are researching the potential of QML. The scientific community is interested as well: CERN scientists, for example, are mainly interested in applying quantum computing to enhance or accelerate classical machine learning models. However, the specific application contexts in which QML outperforms classical ML have not yet been determined with certainty.

While it is theoretically possible for quantum computers to speed up calculations for specific computing jobs, such as modeling molecules, there is currently insufficient evidence to support this claim. QML has also been proposed as a way to discover patterns that classical computers overlook, even when it is not faster than its classical counterparts.

Though there is no doubt that QML has advantages in this application field, more and more academics are coming to the conclusion that this technique has little chance of success for near-term uses. As a result, many of them are now concentrating on using QML algorithms to analyze phenomena that are intrinsically quantum.

Challenges in QML

Several quantum algorithms created over the past 20 years have the potential to make machine learning more efficient. For example, in 2008 a quantum method was developed that solves large sets of linear equations, one of the main hurdles in machine learning, far faster than classical computers can. On the other hand, quantum algorithms have not always lived up to their promise.

For example, a QML algorithm developed in 2016 was designed to offer recommendations to customers of online retailers, based on their past selections, exponentially faster than any classical algorithm known at the time. In 2018, however, an algorithm was designed that runs on a standard computer and delivers comparable performance.

This breakthrough showed that claims of notable quantum speed-up in real-world machine learning applications need careful assessment. Efficiently merging classical data and quantum computation is a major challenge. A quantum computing application comprises three main steps: first, the quantum computer must be initialized; then, it must execute a series of operations; and lastly, it must perform a read-out.
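The three steps can be illustrated with a toy classical simulation of a single qubit. This is only a sketch: the function name `run_three_steps`, the choice of a Hadamard gate, and the shot count are assumptions made for illustration, and a NumPy simulation says nothing about the speed of real hardware.

```python
import numpy as np

def run_three_steps(shots=1000, seed=0):
    """Toy single-qubit walk-through: initialize, operate, read out."""
    rng = np.random.default_rng(seed)

    # Step 1: initialization. The qubit starts in the state |0>.
    state = np.array([1.0, 0.0], dtype=complex)

    # Step 2: operations. Here a single Hadamard gate (illustrative choice).
    hadamard = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
    state = hadamard @ state

    # Step 3: read-out. Each shot returns 0 or 1 with probability
    # given by the squared magnitude of the corresponding amplitude.
    probs = np.abs(state) ** 2
    probs = probs / probs.sum()  # guard against rounding error
    outcomes = rng.choice(2, size=shots, p=probs)
    return np.bincount(outcomes, minlength=2)

counts = run_three_steps()
print("times measured 0 and 1:", counts)
```

With this gate the two outcomes are equally likely, so the two counts should come out roughly equal.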

In certain applications, the first and last steps can be extremely slow and offset the benefits of using quantum algorithms, even though established quantum algorithms can speed up the second step, the quantum operations. In particular, initializing the quantum computer involves loading classical data and then converting it into a quantum state, which is an inefficient procedure.
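One common way to picture the conversion of classical data into a quantum state is amplitude encoding, where a data vector becomes the amplitudes of a normalized state. The article does not say which encoding is meant, so this scheme is an assumption, and the helper name `amplitude_encode` and the sample vector are hypothetical. The sketch only computes the target amplitudes classically; it does not prepare anything on a quantum device.

```python
import numpy as np

def amplitude_encode(x):
    """Return (amplitudes, n_qubits) for a classical vector x.

    The vector is zero-padded to the next power of two and scaled to
    unit length so that the squared amplitudes sum to 1.
    """
    x = np.asarray(x, dtype=float)
    if x.size == 0 or not np.any(x):
        raise ValueError("need a non-empty, non-zero vector")
    # Smallest number of qubits whose 2**n amplitudes can hold x.
    n_qubits = max(1, (x.size - 1).bit_length())
    padded = np.zeros(2 ** n_qubits)
    padded[: x.size] = x
    return padded / np.linalg.norm(padded), n_qubits

# Hypothetical five-entry data vector.
state, n_qubits = amplitude_encode([3.0, 1.0, 2.0, 0.5, 1.5])
print(n_qubits, "qubits,", state.size, "amplitudes")
```

Even in this classical sketch, every entry of the data has to be read and rescaled, which hints at why the loading step can eat into any gain made during the quantum operations.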

Furthermore, because quantum physics is inherently probabilistic, the read-out contains some randomness as well. The computer must therefore repeat all three steps multiple times and average the results to determine the final answer. As a result, no solid proof has been found to date that quantum effects are necessary for processing classical data, or that using quantum computers on such data is viable.
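The cost of averaging over repeated runs can be sketched with a simple sampling experiment. Here each run is modeled as a biased coin flip, which is an assumption standing in for a real measurement; `estimate_p1` and the value of `p_true` are hypothetical. For independent runs, the standard error of the average is sqrt(p(1 - p) / shots), so halving the error takes roughly four times as many repetitions.

```python
import numpy as np

def estimate_p1(p_true, shots, rng):
    """Estimate the probability of measuring 1 by averaging many shots."""
    # Each shot is an independent random bit that equals 1 with
    # probability p_true, mimicking a probabilistic read-out.
    outcomes = rng.random(shots) < p_true
    return outcomes.mean()

rng = np.random.default_rng(1)
p_true = 0.3  # hypothetical value, chosen only for illustration

for shots in (100, 10_000, 1_000_000):
    estimate = estimate_p1(p_true, shots, rng)
    expected_error = np.sqrt(p_true * (1 - p_true) / shots)
    print(f"{shots:>9} shots: estimate {estimate:.4f}, "
          f"standard error about {expected_error:.4f}")
```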

The way forward

Using QML algorithms on quantum data allows one to sidestep the problems related to using classical data. By employing solely quantum apparatus, the quantum sensing technique makes it possible to measure the quantum properties of a system. This method uses QML to identify patterns without interacting with a classical system, putting quantum states straight onto the qubits of a quantum computer. Given that the world is intrinsically quantum-mechanical, the method can therefore provide notable benefits over systems in which quantum measurements are gathered as classical data points in machine learning.

The method is exponentially faster than classical data analysis and measurement, according to a proof-of-principle experiment carried out on one of Google’s Sycamore quantum computers. When physicists gather and analyze data exclusively in the quantum domain, they can address questions that classical measurements can answer only partially. Quantum sensing, for example, can be used by particle physicists to handle data from upcoming particle colliders.

In a similar vein, remote astronomical observatories can use quantum sensors to gather data and send it to a central lab, where a quantum computer will process it to produce incredibly sharp images.

In conclusion, whether quantum computers will help machine learning can only be determined by experimentation, not by mathematical proofs. Therefore, further study of QML is needed, but it should not focus unduly on the “quantum speed-up” element.