XGBoost is probably one of the most widely used libraries in data science. Many data scientists around the world are using it. It’s a very versatile algorithm that can be use to perform classification, regression as well as confidence intervals as shown in this article. But how many really understand its underlying principles?

You might think that a Machine Learning algorithm that performs as well as XGBoost might be using very complex and advanced mathematics. You probably imagine that it’s a masterpiece of software engineering.

And you’re partly right. The XGBoost library is a pretty complex one, but if you consider only the mathematical formulation of gradient boosting applied to decision trees, it’s not that complicated.

You will see below in detail how to train decision trees for regression using the gradient boosting method with less than 200 lines of code.

Decision Tree

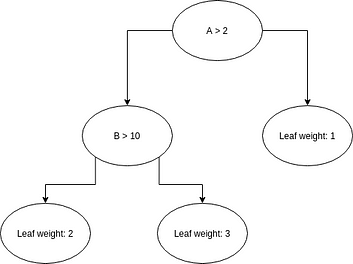

Before getting into the mathematical details, let’s refresh our memory regarding decision trees. The principle is fairly simple: associate a value to a given set of features traversing a binary tree. Each node of the binary tree is attached to a condition; leaves contain values.

If the condition is true, we continue the traversal using the left node, if not, we use the right one. Once a leaf is reached, we have our prediction.

As often, a picture is worth a thousand words:

The condition attached to a node can be regarded as a decision, hence the name Decision Tree.

This kind of structure is pretty old in the history of computer science and has been used for decades with success. A basic implementation is given by the following lines:

class DecisionNode:

"""

Node decision class.

This is a simple binary node, with potentially two children: left and right

Left node is returned when condition is true

False node is returned when condition is false

"""

def __init__(self, name, condition, value=None):

self.name = name

self.condition = condition

self.value = value

self.left = None

self.right = None

def add_left_node(self, left):

self.left = left

def add_right_node(self, right):

self.right = right

def is_leaf(self):

"""

Node is a leaf if it has no child

"""

return (not self.left) and (not self.right)

def next(self, data):

"""

Return next node depending on data and node condition

"""

cond = self.condition(data)

if cond:

return self.left

else:

return self.right

class DecisionTree:

"""

A DecisionTree is a model that provides predictions depending on input.

A Prediction is the sum of the leaves' values, for those leaves that were activated by the input

"""

def __init__(self, root):

"""

A DecisionTree is defined by an objective, a number of estimators and a max depth.

"""

self.root = root

def predict(self, data):

child = root

while child and not child.is_leaf():

child = child.next(data)

return child.value

root = DecisionNode('root', lambda d : d['A'] > 2.0)

root_left = DecisionNode('root_left', lambda d : d['B'] > 10.0, None)

root_right = DecisionNode('root_right', None, 1)

left_left = DecisionNode('left_left', None, 2)

left_right = DecisionNode('left_right', None, 3)

root.add_left_node(root_left)

root.add_right_node(root_right)

root_left.add_left_node(left_left)

root_left.add_right_node(left_right)

tree = DecisionTree(root)

print(tree.predict({'A' : 1, 'B' : 1})) # 1

print(tree.predict({'A' : 1, 'B' : 10})) # 1

print(tree.predict({'A' : 3, 'B' : 11})) # 2

print(tree.predict({'A' : 3, 'B' : 9})) # 3

Stacking multiple trees

Even though Decision Trees have been used with some success in a number of applications, like expert systems (before the AI winter) it remains a very basic model that cannot handle the complexity usually encountered in real-world data.

We usually refer to this kind of estimators as weak models.

To overcome this limitation, the idea emerged in the nineties to combine multiple weak models to create a strong model: ensemble learning.

This method can be applied to any kind of model, but as Decision Trees are simple, fast, generic and easily interpretable models, they are commonly used.

Various strategies can be deployed to combine models. We can for instance use a weighted sum of each model prediction. Or even better, use a Bayesian approach to combine them based on learning.

XGBoost and all boosting methods use another approach: each new model tries to compensate for the errors of the previous one.

Optimizing decision trees

As we have seen above, making predictions using a decision tree is straightforward. The job is hardly more complicated when using ensemble learning: all we have to do is sum contributions of each model.

What’s really complicated is building the tree itself! How can we find the best condition to apply at each node of our training dataset? This is where math helps us. A full derivation can be found in the XGBoost documentation. Here, we will focus only on the formulas of interest for this article.

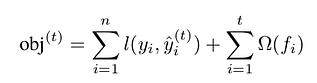

As always in machine learning, we want to set our model parameters so that our model’s predictions on the training set minimize a given objective:

Please note that this objective is made of two terms:

- One to measure the error made by the prediction. It’s the famous loss function l(y, y_hat).

- The other, omega, to control model complexity.

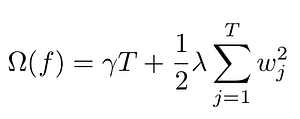

As stated in the XGBoost documentation, complexity is a very important part of the objective that allows us to tune the bias/variance trade-off. Many different functions can be used to define this regularization term. XGBoost use:

Here T is the total number of leaves, whereas w_j are the weights attached to each leaf. This means that a large weight and a large number of leaves will be penalized.

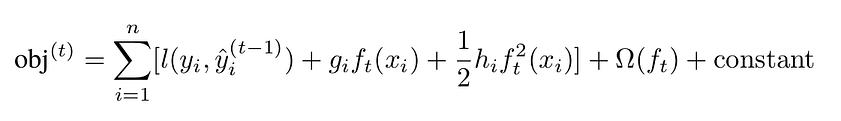

As the error is often a complex, non-linear function, we linearized it using second-order Taylor expansion:

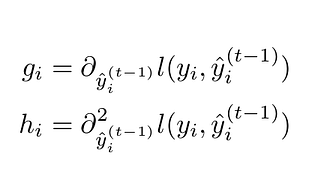

where:

The linearization is computed with respect to the prediction term, as we want to estimate how the error changes when the prediction changes. Linearization is essential, as it will ease the minimization of the error.

What we want to achieve with gradient boosting, is to find the optimal delta_y_i that will minimize the loss function, i.e. we want to find how to modify the existing tree so that the modification improves the prediction.

When dealing with tree models, there are two kinds of parameters to consider:

- The ones defining the tree in itself: the condition for each node, the depth of the tree

- The values attached to each leaf of the tree. These values are the predictions themselves.

Exploring every tree configurations would be too complex, therefore gradient tree boosting methods only consider splitting one node into two leaves. This means that we have to optimize three parameters:

- The splitting value: on which condition do we split data

- The value attached to the left leaf

- The value attached to the right leaf

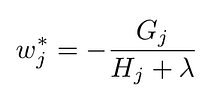

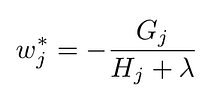

In the XGBoost documentation, the best value for a leaf j with respect to the objective is given by:

where G_j is the sum of the gradient of the training points attached to the node j, and H_j is the sum of the hessian of the training points attached to the node j.

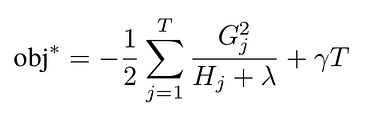

The reduction in the objective function obtained with this optimal param is:

Choosing the right split value is done using brut force: we compute the improvement for each split value, and we keep the best one.

We now have all the required mathematical information to boost an initial tree performance with respect to a given objective by adding new leaves.

Before doing it concretely, let’s take some time to understand what these formulas mean.

Grokking gradient boosting

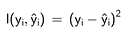

Let’s try to get some insight on how weight is computed, and what G_j and H_i equal to. As they are respectively the gradient and hessian of the loss function with respect to the prediction, we have to pick a loss function.

We will focus on the squared error, which is commonly used and is the default objective for XGBoost:

It’s a pretty simple formula, whose gradient is:

and hessian:

Hence, if we remember the formulas for the optimal weight that maximize error reduction:

We realize that the optimal weight, i.e. the value that we add to the previous prediction is the opposite of the average error between the previous prediction and the real value (when regularization is disabled, i.e. lambda = 0). Using the squared loss to train Decision Trees with gradient boosting just boils down to updating the prediction with the average error in each new node.

We also see that lambda has the expected effect, that is ensuring that weights are not too large, as weight is inversely proportional to lambda.

Training decision trees

Now comes the easy part. Let’s say that we have an existing decision tree that ensures predictions with a given error. We want to reduce the error and improve the attached objective by splitting one node.

The algorithm to do so is pretty straightforward:

- Pick a feature of interest

- Order data points attached to the current node using values of the selected feature

- pick a possible split value

- Put data points below this split value in the right node, and the one above in the left node

- compute objective reduction for the parent node, the right node and the left one

- If the sum of the objective reduction for left and right nodes is greater than the one of the parent node, keep the split value as the best one

- Iterate for each split value

- Use the best split value if any, and add the two new nodes

- If no splitting improved the objective, don’t add child nodes.

The resulting code creates a DecisionTree class, that is configured by an objective, a number of estimators, i.e. the number of trees, and a max depth.

As promised the code takes less than 200 lines:

from asciitree import LeftAligned

from collections import OrderedDict as OD

import networkx as nx

import matplotlib.pyplot as plt

import pandas as pd

from jax import grad, jacfwd, jacrev, jit

import jax.numpy as jnp

import numpy as np

from drawtree import draw_level_order

import random

def hessian(fun):

return jit(jacfwd(jacrev(fun)))

class DecisionNode:

"""

Node decision class.

This is a simple binary node, with potentially two childs: left and right

Left node is returned when condition is true

False node is returned when condition is false<

"""

def __init__(self, name, condition, value=None):

self.name = name

self.condition = condition

self.value = value

self.left = None

self.right = None

def add_left_node(self, left):

self.left = left

def add_right_node(self, right):

self.right = right

def is_leaf(self):

"""

Node is a leaf if it has no child

"""

return (not self.left) and (not self.right)

def next(self, data):

"""

Return next code depending on data and node condition

"""

cond = self.condition(data)

if cond:

return self.left

else:

return self.right

class DecisionTree:

"""

A DecisionTree is a model that provides predictions depending on input.

Prediction is the sum of the values attached to leaf activated by input

"""

def __init__(self, objective, nb_estimators, max_depth):

"""

A DecisionTree is defined by an objective, a number of estimators and a max depth.

"""

self.roots = [DecisionNode(f'root_{esti}', None, 0.0) for esti in range(0, nb_estimators)]

self.objective = objective

self.lbda = 0.0

self.gamma = 1.0 * 0

self.grad = grad(self.objective)

self.hessian = hessian(self.objective)

self.max_depth = max_depth

self.base_score = None

def _create_condition(self, col_name, split_value):

"""

Create a closure that capture split value

"""

return lambda dta : dta[col_name] < split_value

def _pick_columns(self, columns):

return random.choice(columns)

def _add_child_nodes(self, node, nodes,

node_x, node_y,

split_value, split_column,

nb_nodes,

left_w, right_w, prev_w):

node.name = f'{split_column} < {split_value}'

node.condition = self._create_condition(split_column, split_value) # we must create a closure to capture split_value copy

node.add_left_node(DecisionNode(f'left_{nb_nodes} - {split_column} < {split_value}',

None, left_w + prev_w))

node.add_right_node(DecisionNode(f'right_{nb_nodes} - {split_column} >= {split_value}',

None, right_w + prev_w))

mask = node_x[split_column] < split_value

# Reverse order to ensure bfs

nodes.append((node.left,

node_x[mask].copy(),

node_y[mask].copy(),

left_w + prev_w))

nodes.append((node.right,

node_x[~mask].copy(),

node_y[~mask].copy(),

right_w + prev_w))

def fit(self, x_train, y_train):

"""

Fit decision trees using x_train and objective

"""

self.base_score = y_train.mean()

for tree_idx, tree_root in enumerate(self.roots):

# store current node (currenly a lead), x_train and node leaf weight

nodes = [(tree_root, x_train.copy(), y_train.copy(), 0.0)]

nb_nodes = 0

# Add node to tree using bfs

while nodes:

node, node_x, node_y, prev_w = nodes.pop(0)

node_x['pred'] = self.predict(node_x)

split_column = self._pick_columns(x_train.columns) # XGBoost use a smarter heuristic here

best_split, split_value, left_w, right_w = self._find_best_split(split_column,

node_x, node_y)

if best_split != -1:

self._add_child_nodes(node, nodes,

node_x, node_y,

split_value, split_column,

nb_nodes,

left_w, right_w, prev_w)

nb_nodes += 1

if nb_nodes >= 2**self.max_depth-1:

break

def _gain_and_weight(self, x_train, y_train):

"""

Compute gain and leaf weight using automatic differentiation

"""

pred = x_train['pred'].values

G_i = self.grad(pred, y_train.values).sum()

H_i = self.hessian(pred, y_train.values).sum()

return -0.5 * G_i * G_i / (H_i + self.lbda) + self.gamma, -G_i / (H_i + self.lbda)

def _find_best_split(self, col_name, node_x, node_y):

"""

Compute best split

"""

x_sorted = node_x.sort_values(by=col_name)

y_sorted = node_y[x_sorted.index]

current_gain, _ = self._gain_and_weight(x_sorted, node_y)

gain = 0.0

best_split = -1

split_value, best_left_w, best_right_w = None, None, None

for split_idx in range(1, x_sorted.shape[0]):

left_data = x_sorted.iloc[:split_idx]

right_data = x_sorted.iloc[split_idx:]

left_y = y_sorted.iloc[:split_idx]

right_y = y_sorted.iloc[split_idx:]

left_gain, left_w = self._gain_and_weight(left_data, left_y)

right_gain, right_w = self._gain_and_weight(right_data, right_y)

if current_gain - (left_gain + right_gain) > gain:

gain = current_gain - (left_gain + right_gain)

best_split = split_idx

split_value = x_sorted[col_name].iloc[split_idx]

best_left_w = left_w

best_right_w = right_w

return best_split, split_value, best_left_w, best_right_w

def predict(self, data):

preds = []

for _, row in data.iterrows():

pred = 0.0

for tree_idx, root in enumerate(self.roots):

child = root

while child and not child.is_leaf():

child = child.next(row)

pred += child.value

preds.append(pred)

return np.array(preds) + self.base_score

def show(self):

print('not yet implemented')

def squared_error(y_pred, y_true):

diff = y_true - y_pred

return jnp.dot(diff, diff.T)

x_train = pd.DataFrame({"A" : [3.0, 2.0, 1.0, 4.0, 5.0, 6.0, 7.0]})

y_train = pd.DataFrame({"Y" : [3.0, 2.0, 1.0, 4.0, 5.0, 6.0, 7.0]})

tree = DecisionTree(squared_error, 1, 3)

tree.fit(x_train, y_train['Y'])

pred = tree.predict(pd.DataFrame({'A': [1., 2., 3., 4., 5., 6., 7.]}))

print(pred) #-> [1. 2. 3. 4. 5. 6. 7.]

tree = DecisionTree(squared_error, 2, 3)

tree.fit(x_train, y_train['Y'])

pred = tree.predict(pd.DataFrame({'A': [1., 2., 3., 4., 5., 6., 7.]}))

print(pred) #-> [1. 2. 3. 4. 5. 6. 7.]

tree = DecisionTree(squared_error, 4, 2)

tree.fit(x_train, y_train['Y'])

pred = tree.predict(pd.DataFrame({'A': [1., 2., 3., 4., 5., 6., 7.]}))

print(pred) # -> [1. 2. 3. 4. 5. 5.9999995 7. ]

x_train = pd.DataFrame({'A': [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0,

1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0,],

'B': [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0,

1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0,]})

y_train = pd.DataFrame({"Y" : [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0,

1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5]})

tree = DecisionTree(squared_error, 1, 6)

tree.fit(x_train, y_train['Y'])

pred = tree.predict(pd.DataFrame({'A': [1., 2., 3., 4., 5., 6., 7.],

'B': [0., 1., 0., 1., 0., 1., 0.]}))

print(pred) #-> [1. 2.5 3. 4.5 5. 6.5 7. ]

The core of the training is coded in the function _find_best_split. It essentially follows the steps detailed above.

Note that to support any kind of objective, without the pain of manually calculating the gradient and the hessian, we use automatic differentiation and the jax library to automate computations.

Initially, we start with a tree with only one node whose leaf value leaf is zero. As we have mimic XGBoost, we also use a base score, that we set to be the mean of the value to predict.

Also, note that at line 126 we stop tree building if we reach the max depth defined when initializing the tree. We could have used other conditions like a minimum number of sample for each leaf or a minimum value for the new weight.

Another very important point is the choice of the feature used for the splitting. Here, for the sake of simplicity, features are selected randomly, but we could have been using smarter strategies, like using the feature having the greatest variance.

Conclusion

In this article, we have seen how gradient boosting works to train decision trees. To further improve our understanding, we have written the minimal set of lines required to train an ensemble of decision trees and use them for prediction.

Having a deep understanding of the algorithms we use for machine learning is absolutely critical. It not only helps us building better models but more importantly allows us to bend these models to our needs.

For instance, in the case of gradient boosting, playing with loss functions is an excellent way to increase precision when predicting.

This article has been published from the source link without modifications to the text. Only the headline has been changed.

Source link