The term “big data” has been around since the 1990s, and companies have been investing in it for almost as long. However, according to the NewVantage Partners 2021 survey of Fortune 1000 executives, companies continue to struggle to derive value from their big data investments. Only 48.5% are innovating with data, only 41.2% are competing on analytics, and only 24% have created a data-driven organization.

In the last ten years, the focus of enterprise data analytics has shifted from the data warehouse architecture to the data lake architecture. There are various schools of thought on what the modern data lake stack and data lake architecture ought to look like. Many organizations have failed to realize ROI from their data initiatives, largely because of the unplanned and unsustainable costs of data modeling and DataOps.

Despite the challenges, there are important reasons for organizations to believe that their data lake investments can generate substantial returns. Think about pharmaceutical companies looking for the next vaccine candidate, or financial services companies struggling to stay ahead of market volatility. Media firms want to discover which pieces of content each user is likely to consume next, and security teams want to analyze their security data lake faster and more accurately. The common thread between all of these strategic initiatives is the ability to analyze as much data as possible with optimal flexibility and agility. Data users demand to run any query whenever they need it. Data warehouse solutions do not provide the agility and flexibility this requires; moving quickly to a data lake architecture provides both, along with a strategic competitive advantage.

Unlocking more powerful insights from data analysis is at the heart of the paradigm shift in data lake architecture. The ongoing demand for more agile, flexible data analytics to leverage big data investments has fueled the rise of data lakes and distributed SQL query engines like Presto and Trino. The power of data lakes to store large amounts of raw data in native formats until the business needs it, combined with the agility and flexibility of distributed engines to query that data, promises businesses the opportunity to maximize their data-driven growth. Yet despite the promise of data lake analytics and its potential for cost effectiveness and efficiency, many organizations have yet to take advantage of the power of the data lake architecture and instead use it as little more than an aggregated storage layer.

The main value organizations derive from the data lake stack has three aspects:

- It enables instant, easy access to their wealth of data, regardless of where it resides, with near-zero time-to-market (no need for IT or data teams to prepare or move data).

- It creates an extensive data-driven culture.

- It transforms data into the digital intelligence that is a prerequisite for achieving a competitive advantage in today’s data-driven ecosystem.

To create a modern data lake architecture that maximizes return on investment, forward-looking data organizations are adopting new virtualization, automation, and data acceleration strategies. How can data organizations ensure that their modern data lake stack is analytics-ready? The following are key questions that data organizations need to ask themselves to determine whether they are getting the most out of their big data investments.

How explorable is your data lake?

The biggest advantage of data lakes is flexibility. Because the data remains in its native, raw, and granular format, it is not modeled in advance and is not transformed in flight or in the target storage. The result is an up-to-date stream of data that is available for analysis at any time and for any business purpose. However, data lakes only matter to a company’s vision if they help solve business problems through the democratization, reuse, and exploration of data, supported by agile and flexible analytics. Access to the data lake becomes a real force multiplier when it is used extensively across all business areas.

Is your data lake strategy living up to its potential?

Most organizations have the best of intentions to get the most out of their data lake architecture. But even after a successful implementation, many companies keep the data lake on the sidelines and run only a limited number of ad hoc queries against it. They drastically underutilize their data lake and achieve a low ROI as a result. Several barriers prevent organizations from taking advantage of the power of their data lake stack. Organizations need to rethink their data lake architecture to capitalize on their big data and analytics investments.

Are you using compute resources effectively?

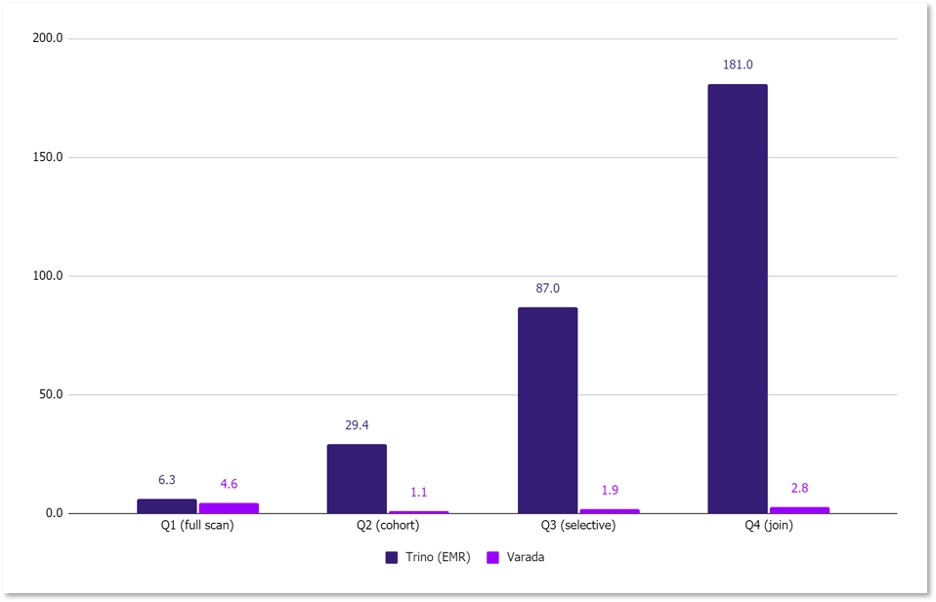

Research shows that 90 percent of compute resources are “wasted” on full scans. Traditional data lake query engines rely on brute-force query processing and scan all of the data to return the result set required for application responses or analysis. As a result, query performance falls short of the SLAs that interactive use cases require, and realistically supports only ad hoc analytics or experimental queries. To effectively support a wide range of analytical use cases, data teams have no choice but to fall back on data silos and traditional data warehouses. This excessive use of resources creates significant costs.
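To make the cost of brute-force scanning concrete, here is a minimal sketch in Python. It assumes a hypothetical data set split into fixed-size blocks, each carrying min/max statistics for one column, and compares how many rows a full scan reads with how many are read when blocks that cannot contain the target are skipped. The names (`Block`, `make_blocks`, `full_scan`, `pruned_scan`) and the sample data are illustrative assumptions, not the behavior of any particular query engine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Block:
    """A chunk of rows plus min/max statistics for one column (hypothetical layout)."""
    values: List[int]
    min_value: int
    max_value: int


def make_blocks(values: List[int], block_size: int) -> List[Block]:
    """Split values into fixed-size blocks and record min/max per block."""
    blocks = []
    for start in range(0, len(values), block_size):
        chunk = values[start:start + block_size]
        blocks.append(Block(chunk, min(chunk), max(chunk)))
    return blocks


def full_scan(blocks: List[Block], target: int) -> Tuple[int, int]:
    """Brute force: read every row of every block. Returns (matches, rows_read)."""
    matches = 0
    rows_read = 0
    for block in blocks:
        for value in block.values:
            rows_read += 1
            if value == target:
                matches += 1
    return matches, rows_read


def pruned_scan(blocks: List[Block], target: int) -> Tuple[int, int]:
    """Skip blocks whose min/max range cannot contain the target."""
    matches = 0
    rows_read = 0
    for block in blocks:
        if target < block.min_value or target > block.max_value:
            continue  # statistics prove this block holds no match
        for value in block.values:
            rows_read += 1
            if value == target:
                matches += 1
    return matches, rows_read


# Hypothetical data: 1,000 sorted values stored in blocks of 50 rows.
blocks = make_blocks(list(range(1000)), block_size=50)
full_matches, full_rows = full_scan(blocks, target=742)
pruned_matches, pruned_rows = pruned_scan(blocks, target=742)
print(f"full scan:   {full_matches} match, {full_rows} rows read")
print(f"pruned scan: {pruned_matches} match, {pruned_rows} rows read")
```

Both scans return the same answer, but the pruned scan touches a small fraction of the rows. The size of the saving in this toy case depends entirely on the sorted layout and the block size chosen here, so it should not be read as a measurement.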

Are you minimizing your DataOps and achieving observability?

Today’s businesses need deep, actionable visibility at the workload level to gain a full understanding of how resources are distributed among different workloads and users, how and why bottlenecks occur, and how budgets are allocated. The workload perspective enables data teams to focus engineering efforts squarely on meeting business requirements. To efficiently manage the cost and performance of data analytics, data teams must look for solutions that learn autonomously and continuously, and adapt to the users, the queries they run, and the data they use.
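As a rough illustration of what a workload-level view can look like, the following Python sketch rolls up a hypothetical query log by workload and ranks workloads by CPU consumption. The record fields (`workload`, `user`, `cpu_seconds`, `bytes_scanned`), the function names, and the sample values are all assumptions made for this example; a real query engine exposes its own log schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class QueryRecord(NamedTuple):
    """One entry of a hypothetical query log."""
    workload: str
    user: str
    cpu_seconds: float
    bytes_scanned: int


def summarize_by_workload(records: Iterable[QueryRecord]) -> Dict[str, dict]:
    """Aggregate query count, CPU time, bytes scanned, and users per workload."""
    summary: Dict[str, dict] = defaultdict(
        lambda: {"queries": 0, "cpu_seconds": 0.0, "bytes_scanned": 0, "users": set()}
    )
    for record in records:
        entry = summary[record.workload]
        entry["queries"] += 1
        entry["cpu_seconds"] += record.cpu_seconds
        entry["bytes_scanned"] += record.bytes_scanned
        entry["users"].add(record.user)
    return dict(summary)


def rank_by_cpu(summary: Dict[str, dict]) -> List[str]:
    """Return workload names ordered from most to least CPU time consumed."""
    return sorted(summary, key=lambda name: summary[name]["cpu_seconds"], reverse=True)


# Made-up sample log, for illustration only.
query_log = [
    QueryRecord("dashboards", "ana", 12.5, 4_000_000),
    QueryRecord("dashboards", "raj", 7.5, 2_000_000),
    QueryRecord("nightly_etl", "svc_etl", 120.0, 90_000_000),
    QueryRecord("ad_hoc", "ana", 3.0, 500_000),
]

summary = summarize_by_workload(query_log)
for name in rank_by_cpu(summary):
    stats = summary[name]
    print(name, stats["queries"], stats["cpu_seconds"], stats["bytes_scanned"])
```

Grouping by workload rather than by individual query or user is what lets a team decide which workloads deserve optimization effort first.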

Does your data lake stack have what it takes to be analytics-ready?

Manual query optimization is time-consuming, and the backlog of optimizations grows every day, creating a vicious circle that undermines the promise of data lake agility. A lack of workload-level observability prevents data teams from identifying which workloads need priority based on business needs rather than on the needs of an individual user or query. Overcoming these hurdles to harness the power of the data lake requires a transition to an analytics-ready data lake stack that includes:

- Scalable and massive storage (petabyte to exabyte scale) such as AWS S3

- Data virtualization layer that provides access to many data sources and formats

- Distributed SQL query engine such as Trino (formerly PrestoSQL) or PrestoDB

- Query acceleration and workload optimization engine that balances performance and cost and eliminates the disadvantages of the brute-force approach

By using these tools, a company can take advantage of agile data lake analytics that utilize near-perfect data, with performance and costs comparable to traditional data warehousing. As a result, a company no longer has to adapt to its existing data architecture, which restricts the queries that can be executed. Instead, the data architecture is tailored to specific business needs that are highly elastic and dynamic.

Are you leveraging indexing technology?

Indexing has traditionally been used on relatively small data sets. Recent innovations extend the use of indexing to huge amounts of data. Indexing technology eliminates the need for full scans and can automatically speed up queries without the need for query processing or background data maintenance. This reduces the amount of data scanned by orders of magnitude. As an example, look at this benchmarking data:

The missing link in the data lake stack

Data lake query acceleration platforms are the missing link in your data lake stack. They act as a smart acceleration layer on top of your data lake, which remains the single source of truth. The data lake then becomes the company’s leading data analytics platform, serving a wide variety of use cases and enabling organizations to turn it into a strategic competitive advantage and achieve data lake ROI. Data also becomes a strategic asset, as companies can leverage it to innovate in an agile way and respond to opportunities that drive business growth and competitive advantage.