“But Mr. Holmes, how did you know I had been in China?”

“The fish that you have tattooed immediately above your right wrist could only have been done in China. That trick of staining the fishes’ scales of a delicate pink is quite peculiar to that country. When, in addition, I see a Chinese coin hanging from your watch‐chain, the matter becomes even more simple.”

Mr. Jabez Wilson laughed heavily. “Well, I never!” said he. “I thought at first that you had done something clever, but I see that there was nothing in it after all.”

— The Red‐Headed League, Arthur Conan Doyle

The aim of this chapter is to demystify the AI systems in use today and, in doing so, to build a framework for understanding the new systems that will enter the workplace tomorrow. I look at how AI systems reason: in particular, how they look at the world (assess), draw conclusions (infer), and make guesses about what is going to happen next (predict). I also consider how these systems use data to paint a picture of a situation (transactions, links clicked, network connections, queries, and so on), use that picture to draw conclusions (these people are the same; these products are related; this situation falls into this category), and then project that reasoning into the future (this customer will like this book; this customer is about to leave us; this machine is going to crash tomorrow).

Many AI systems make use of three major components of human reasoning: assessing, inferring, and predicting. Every second of every day, we need to answer the questions of what is happening around us, what it means, and what is going to happen next. When we walk up to an elevator, push the call button, and wait for the next car to show up, we are assessing, inferring, and predicting. AI systems do exactly the same, although they're less likely to use an elevator to move between floors.

Thinking Hard about Big Data

In today’s world of Big Data, where 2.5 quintillion bytes of data are produced every day, knowing how AI systems capture data, synthesize it, and use it to drive reasoning is critically important. Applying these systems to Big Data is what allows us to transform the world of numbers that the machines control into a world of knowledge and insight that we can use.

The trick in understanding these systems is to see that the processes underlying intelligence are not themselves smart. Intelligent systems are built on a foundation of processes that are simple and absolutely understandable. There is no “smart box” in the middle of these systems. AI is not magic, but is instead the application of a collection of algorithms powered by data, scale, and processing power.

Big Data makes it possible for fairly simple learning systems to process the volume of examples needed to pull a signal out of the noise. Given billions of English/French pairings of sentences expressing the same idea, learning how to translate one to the other is possible. Processing and parallelism combine to enable things such as taking a thousand little pieces of evidence, testing them independently, and adding up the results. IBM Watson (which is discussed more thoroughly in Chapter 4) works because it quickly looks for evidence in thousands of documents using thousands of rules. Watson can accomplish such a huge search in so little time because it runs on 90 machines in parallel.
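The "test each piece of evidence independently, then add up the results" pattern can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Watson is actually built: the evidence records, their weights, and the `check` function are hypothetical, and a thread pool stands in for the 90 machines.

```python
from concurrent.futures import ThreadPoolExecutor

def check(evidence: dict) -> float:
    """Hypothetical check: test one piece of evidence on its own and
    return its contribution (its weight if supported, else nothing)."""
    return evidence["weight"] if evidence["supported"] else 0.0

def total_support(evidence_items: list[dict], workers: int = 4) -> float:
    # Each check runs independently, so the work can be spread across
    # workers; the results are then simply added up.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(check, evidence_items))

# Invented evidence for illustration only.
evidence = [
    {"weight": 0.5, "supported": True},
    {"weight": 0.25, "supported": False},
    {"weight": 0.25, "supported": True},
]
print(total_support(evidence))  # 0.75
```

Because no check depends on another, adding more workers (or machines) speeds up the search without changing the answer.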

Assessing Data with AI

Most of the consumer systems in use are assessing us. Amazon, for example, puts together a detailed picture of who we are so that it can match us against similar customers and use those matches as a source of predictions about us. The data for this assessment is transactional: what we touch and what we buy. Amazon’s recommendation engines can use this information in their reasoning in order to build up profiles and then make recommendations.

But profile data is only part of the picture. Added to this is information about categories that cluster objects together (“cookbooks”), categories of customers (“people who are handy”), and information based on other users (“people like you”). How much you tend to spend and where you live can be pulled in to refine the snapshots of both you and the things with which you interact.

In this instance, the result is a set of characterizations, such as:

✓ Given the entire collection of things you like, you are similar to this person who also reads cookbooks, science fiction, and modern biographies.

✓ Given a particular subset of the things you have recently looked at or bought, you seem to like cooking.

✓ You just bought a SousVide Supreme™ Demi Temperature Controlled Water Oven In Red.
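Turning raw transactions into a characterization such as "you seem to like cooking" can be as simple as counting purchases by category. The sketch below assumes a hypothetical catalog that maps item IDs to categories; real retailers use far richer profiles.

```python
from collections import Counter

# Hypothetical catalog: item ID -> product category.
CATALOG = {
    "book-101": "cookbooks",
    "book-102": "cookbooks",
    "book-201": "science fiction",
    "appliance-7": "kitchen appliances",
}

def build_profile(purchases: list[str]) -> Counter:
    """Count how many purchases fall into each product category."""
    return Counter(CATALOG.get(item, "other") for item in purchases)

def characterize(profile: Counter) -> str:
    """Summarize the profile by its most frequent category."""
    if not profile:
        return "no clear preference yet"
    top_category, _ = profile.most_common(1)[0]
    return f"you seem to like {top_category}"

history = ["book-101", "book-201", "book-102", "appliance-7"]
profile = build_profile(history)
print(profile["cookbooks"])   # 2
print(characterize(profile))  # you seem to like cookbooks
```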

For retailers, the key pieces of information are transactions, clusters of people, and product categories. For social networks (such as Facebook), the important details are “Likes,” the people in your friends network, and the information you provide in your “About” profile. For search engines, the crucial bits of data are the history of terms you have searched, items you have clicked on, your location, and any other information that can be gleaned from your interactions with the engine and its applications.

The sheer volume of transactions often drives these systems. Even with very weak models of the world, systems can sift relevant data out of the noise because they have so much to work with. And the more we interact with these systems, the more they can learn about us. For example, by analyzing 3.5 billion searches a day, Google learns which words show up together and which ordering of them makes the most sense. The Google Instant service uses the history of not just you, but everyone, to create a predictive model: When you type “what is good for,” Google provides helpful suggestions such as “a hangover.”
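A toy version of "use everyone's history to predict what you are typing" ranks previously logged queries that share your prefix by how often they occur. This is only a frequency-count sketch with an invented log; it is not how Google Instant is actually implemented.

```python
from collections import Counter

def suggest(query_log: list[str], prefix: str, k: int = 3) -> list[str]:
    """Return up to k logged queries that start with prefix, most frequent first."""
    counts = Counter(q for q in query_log if q.startswith(prefix))
    return [q for q, _ in counts.most_common(k)]

# Invented, pooled query log from many users.
log = [
    "what is good for a hangover",
    "what is good for a sore throat",
    "what is good for a hangover",
    "weather tomorrow",
]
print(suggest(log, "what is good for"))
# ['what is good for a hangover', 'what is good for a sore throat']
```

The model itself is weak (it only counts), which is the point of the paragraph above: with enough pooled data, counting alone produces useful suggestions.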

Systems aimed at predicting specific outcomes such as customer churn and equipment downtime almost always make use of historical information and rules related to those issues. However, incorporating a variety of data, including interactional data (what was customer sentiment during a conversation with a call center agent?) and environmental data (what was the weather like when the machine broke down?), makes the learning algorithms more accurate than they would be if they relied on the volume of data alone.

Inferring Knowledge with AI

Once you know a little about the world, you can start thinking about extending that knowledge and start making inferences.

Inference is perhaps the most misunderstood aspect of artificial intelligence because inference is usually thought of as consisting of “if‐then” rules. While this is a fine characterization of the basic layer of intelligent systems, it’s like describing human reasoning as “just a bunch of chemical reactions.”

A more powerful approach is to start with the idea of relationships between things: objects and actions, profiles and categories, people and other people, and so on. Inference is the process of making the step from one thing to the other.

Sometimes this process can look like a simple “if‐then” rule. A captain outranks a sergeant, so Captain Douglas outranks Sergeant Philips. This is simple deduction, where anyone — human or machine — has no choice but to make this inference.
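The captain/sergeant example is pure deduction: once the rank order is known, the conclusion follows mechanically. The rank list below is a hypothetical, simplified ordering used only for illustration.

```python
# Hypothetical, simplified rank order from lowest to highest.
RANKS = ["private", "corporal", "sergeant", "lieutenant", "captain"]

def outranks(rank_a: str, rank_b: str) -> bool:
    """Deductive rule: a rank later in RANKS outranks an earlier one."""
    return RANKS.index(rank_a) > RANKS.index(rank_b)

officers = {"Douglas": "captain", "Philips": "sergeant"}
print(outranks(officers["Douglas"], officers["Philips"]))  # True
```

There is no weighing of evidence here; given the rule and the facts, the answer cannot come out any other way.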

But the world is usually not so clear cut. This sort of inference is rare, and you can do very little with it. To go beyond such purely deductive reasoning, you have to step into the world of evidence‐based reasoning, which includes checking similarity, categorizing, and amassing points of evidence, as outlined in the following sections.

Checking similarity

When Amazon’s engine recommends a book, it first considers who I am similar to and what category of reader I might fall into. The engine bases this consideration on how close my profile is to that of other customers or to a generic profile that defines a category. The match is rarely perfect, so the system needs to judge how well my profile lines up with others. It has to come up with a score indicating how similar the profiles are. It considers which features in the profiles match (providing evidence for the inference) and which features don’t match (providing evidence against the inference). Each feature that matches — or doesn’t match — adds or subtracts support for the inference I want to make.
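One simple way to turn "matching features add support, mismatches subtract it" into a score is sketched below. The scoring formula is an assumption chosen for clarity; production engines typically use weighted measures such as cosine similarity.

```python
def similarity(profile_a: set[str], profile_b: set[str]) -> float:
    """Score in [-1, 1]: each shared feature adds support,
    each feature held by only one profile subtracts it."""
    all_features = profile_a | profile_b
    if not all_features:
        return 0.0
    matches = len(profile_a & profile_b)     # evidence for the match
    mismatches = len(profile_a ^ profile_b)  # evidence against it
    return (matches - mismatches) / len(all_features)

me = {"cookbooks", "science fiction", "biographies"}
other = {"cookbooks", "science fiction", "biographies", "gardening"}
print(similarity(me, other))  # 0.5
```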

Categorizing

For categorization, techniques such as Naïve Bayes (which combines, for each feature, how likely that feature is among members of a group) can be used to calculate the likelihood that an object with a particular set of features is a member of a particular group. This technique combines “walks like a duck,” “quacks like a duck,” and “looks like a duck” to determine that a thing is, in fact, a duck. The power of Naïve Bayes is that the systems that use it do not require any prior knowledge of how the features interact, because the technique assumes that the features it uses as predictors are independent. This means systems making use of the technique can be implemented easily without having to first build a complex model of the world.
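The duck example can be written as a small Bernoulli Naïve Bayes classifier. The training counts and the competing "goose" class are invented for illustration; the independence assumption shows up as one multiplied (here, log-added) term per feature.

```python
import math

# Invented training counts: examples per class and how many showed each feature.
TRAINING = {
    "duck":  {"count": 40, "walks like a duck": 36, "quacks like a duck": 38, "looks like a duck": 39},
    "goose": {"count": 60, "walks like a duck": 30, "quacks like a duck": 3, "looks like a duck": 12},
}
FEATURES = ["walks like a duck", "quacks like a duck", "looks like a duck"]

def log_score(cls: str, observed: set[str]) -> float:
    stats = TRAINING[cls]
    total = sum(c["count"] for c in TRAINING.values())
    score = math.log(stats["count"] / total)  # prior: how common the class is
    for f in FEATURES:
        # Laplace smoothing keeps each probability strictly between 0 and 1.
        p = (stats[f] + 1) / (stats["count"] + 2)
        # Independence assumption: each feature contributes its own term.
        score += math.log(p if f in observed else 1 - p)
    return score

def classify(observed: set[str]) -> str:
    """Pick the class with the highest (log) posterior score."""
    return max(TRAINING, key=lambda cls: log_score(cls, observed))

print(classify({"walks like a duck", "quacks like a duck", "looks like a duck"}))  # duck
```

Note that nothing in the code models how quacking relates to walking; each feature is scored on its own, which is what makes the technique so easy to implement.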

Amassing evidence

While rules drive inference for most AI systems, almost all the rules include some notion of evidence. These rules can both collaborate and compete with each other, making independent arguments for the truth of an inference that has to be mediated by a higher‐order process. At the core of these arguments are quantitative scores and thresholds, but the conclusions that are drawn using them are more qualitative in feel. For example, an advanced natural language generation system can take in data and generate the following: “Joe’s Auto Garage has engaged in suspicious activity by making multiple deposits in amounts that are just below the federal reporting thresholds. There are ten deposits of $9,999 over a six-week period.”
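A minimal sketch of evidence rules feeding a higher-order decision and then a generated sentence is shown below. The two rules, their equal weighting, the 2% margin, and the 0.8 threshold are all assumptions made for illustration; the $10,000 figure is treated as an assumed reporting threshold, and real natural language generation systems are far more elaborate.

```python
REPORTING_THRESHOLD = 10_000  # assumed reporting threshold, in dollars

def evidence_near_threshold(amounts: list[int], margin: float = 0.02):
    """Rule 1: fraction of deposits falling just below the threshold."""
    low = REPORTING_THRESHOLD * (1 - margin)
    hits = [a for a in amounts if low <= a < REPORTING_THRESHOLD]
    return len(hits) / len(amounts), hits

def evidence_frequency(count: int, weeks: int) -> float:
    """Rule 2: near-threshold deposits per week, capped at 1.0."""
    return min(count / weeks, 1.0)

def assess(name: str, amounts: list[int], weeks: int, threshold: float = 0.8):
    if not amounts:
        return None
    near_score, hits = evidence_near_threshold(amounts)
    freq_score = evidence_frequency(len(hits), weeks)
    combined = (near_score + freq_score) / 2  # higher-order step: average the rules
    if combined < threshold:
        return None  # not enough evidence to draw the conclusion
    return (f"{name} has engaged in suspicious activity by making multiple deposits "
            f"in amounts that are just below the federal reporting thresholds. "
            f"There are {len(hits)} deposits of ${hits[0]:,} over a {weeks}-week period.")

print(assess("Joe's Auto Garage", [9_999] * 10, weeks=6))
```

The scores and threshold are purely quantitative, yet the output reads as a qualitative judgment.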

Predicting Outcomes with AI

One focus of reasoning is particularly useful: prediction. Making guesses about what is going to happen next is important so that we can deal with predicted events and actions appropriately. Unsurprisingly, AI systems have the same focus.

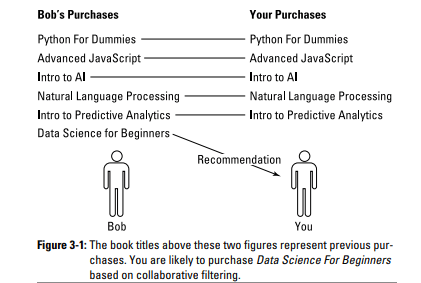

How do retailers guess at what we will want to buy next? In part, they look at what people similar to us have bought or watched before and project their actions onto ours. In essence, as shown in Figure 3-1, they are reasoning in the following way: “Both you and Bob like snowboarding, kick boxing, and skydiving. I know Bob likes water skiing. I bet you would too.” Now multiply Bob by more than 270 million users, and that’s how a company can predict what you might like.
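The "you and Bob" reasoning can be sketched as: find the most similar user, then project that user's other interests onto you. This assumes Jaccard similarity and a single nearest neighbor, both simplifications; the user data is invented.

```python
# Invented interest profiles keyed by user.
USERS = {
    "Bob":   {"snowboarding", "kick boxing", "skydiving", "water skiing"},
    "Carol": {"knitting", "gardening"},
}

def jaccard(a: set[str], b: set[str]) -> float:
    """Shared interests divided by all interests held by either person."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend(me: set[str], users: dict[str, set[str]]) -> set[str]:
    """Find the most similar user and suggest what they like that I don't have yet."""
    nearest = max(users, key=lambda name: jaccard(me, users[name]))
    return users[nearest] - me

you = {"snowboarding", "kick boxing", "skydiving"}
print(recommend(you, USERS))  # {'water skiing'}
```

With hundreds of millions of users, the same idea usually combines many similar neighbors, weighted by how similar each one is, rather than relying on a single "Bob."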

eHarmony is smarter than you think!

Interested in finding a mate? eHarmony is smarter than you think. Some dating sites use your profile to predict compatibility based on overall similarity and report remarkable success rates; by one estimate, more than one‐third of recent marriages in the United States began online. While these profiles contain explicitly expressed preferences, the core concept of using features to characterize you, evaluate your similarity to others, and make predictions is used everywhere. And no one wants to think that the machine that introduced them to the love of their life is anything less than brilliant.

This combination of calculating similarity and projecting forward based on that similarity is called collaborative filtering and is at the center of most transactional recommendation systems. These systems are based on the intuition that people who seem similar along one set of dimensions will be similar along others as well.

A somewhat different take on prediction is to use profiles to classify individuals (and their behaviors) into groups that have similar behaviors. Target used this approach to map shopping behaviors onto groups such as “pregnant women” and caught some flak for it. The company used this mapping to aggressively advertise to women it inferred were pregnant, with products it predicted these women would want. Although the technique was fairly accurate, Target quickly discovered that very few customers wanted their pregnancies announced to their families by a retail store.
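Classifying a shopper into a group can be sketched with nearest-centroid classification: average the purchase profiles of known group members, then assign a new shopper to the group whose average is closest. The source does not say which technique Target used; the segments, categories, and counts here are hypothetical.

```python
import math

CATEGORIES = ["tents", "hiking boots", "flour", "baking pans"]

# Hypothetical labeled shoppers: purchase counts per category, grouped by segment.
SEGMENTS = {
    "outdoor enthusiasts": [[3, 2, 0, 0], [2, 3, 1, 0]],
    "home bakers":         [[0, 0, 4, 2], [0, 1, 3, 3]],
}

def centroid(vectors: list[list[float]]) -> list[float]:
    """Average profile of a segment, category by category."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

CENTROIDS = {name: centroid(vs) for name, vs in SEGMENTS.items()}

def assign_segment(profile: list[float]) -> str:
    """Place a shopper in the segment whose average profile is closest."""
    return min(CENTROIDS, key=lambda name: math.dist(profile, CENTROIDS[name]))

print(assign_segment([0, 0, 2, 1]))  # home bakers
```

Once a shopper is in a group, predictions come from what the group tends to buy, not from any single similar individual.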

Actions or features are often so tightly linked that strongly predicting one from the other becomes possible. People who buy coffee makers will buy filters. Viewing Star Wars suggests that you will view The Empire Strikes Back. And opening a checking account implies that you will be making deposits. Such predictions leapfrog the need to find similar individuals or categorize things into groups.
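How tightly one purchase predicts another can be measured directly from shopping baskets, using the "confidence" of an association rule: of the baskets containing item A, what fraction also contain item B? The baskets below are invented for illustration.

```python
def confidence(baskets: list[set[str]], antecedent: str, consequent: str) -> float:
    """Estimate P(consequent in basket | antecedent in basket)."""
    with_antecedent = [b for b in baskets if antecedent in b]
    if not with_antecedent:
        return 0.0
    return sum(consequent in b for b in with_antecedent) / len(with_antecedent)

# Invented shopping baskets.
baskets = [
    {"coffee maker", "filters"},
    {"coffee maker", "filters", "mugs"},
    {"coffee maker"},
    {"mugs"},
    {"coffee maker", "filters"},
]
print(confidence(baskets, "coffee maker", "filters"))  # 0.75
```

No similar customers or group labels are involved: the rule links the two items directly.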

The goal of prediction is often not to recommend things to an individual but instead to anticipate a problem to be avoided. The target is the prediction itself, rather than the person who is receiving it.

The systems that focus explicitly on outcomes rather than recommendations tend to make use of data mining or machine learning. These systems build rules that connect visible features at one point in time (too many dropped calls) with events that need to be predicted (we’re going to lose this customer) by looking at how often those features appear in past examples of the outcome the system is trying to predict. They ask, “What is going to predict this?” Here, “this” can be, for example, someone buying a product, canceling a service, or even starting a cyber attack. Using techniques such as regression analysis (a statistical technique that estimates the relationship among variables), they build rules that can be used to predict the events we care about before they occur.
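The dropped-calls example can be sketched with logistic regression, a form of regression analysis suited to yes/no outcomes. The history below is invented, and plain batch gradient descent is used so the example needs no libraries; a real system would use many more features and a statistics or machine learning package.

```python
import math

# Invented history: (dropped calls last month, churned? 1 = yes, 0 = no)
HISTORY = [(0, 0), (1, 0), (2, 0), (3, 0), (5, 1), (6, 0), (7, 1), (9, 1), (10, 1)]

def sigmoid(z: float) -> float:
    return 1 / (1 + math.exp(-z))

def fit_logistic(data, lr: float = 0.05, epochs: int = 20_000):
    """Fit P(churn) = sigmoid(w * dropped_calls + b) by batch gradient descent."""
    w = b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            error = sigmoid(w * x + b) - y  # predicted minus actual
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

w, b = fit_logistic(HISTORY)
for calls in (1, 8):
    print(calls, "dropped calls -> churn probability", round(sigmoid(w * calls + b), 2))
```

The fitted rule can then be applied to current customers, flagging those with a high predicted probability before they actually leave.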

The dynamic of assessing, inferring, and predicting is at the core of many intelligent systems and certainly at the core of most of those with which we interact. The reason for this is clear. For any intelligent system (including us), the ability to understand what is happening right now, make inferences about it, and predict what is going to happen next is crucial to anticipating the future and planning for it.
