Organizations need to consider many factors when building or enhancing an artificial intelligence infrastructure to support AI applications and workloads.

These are not trivial issues. A company’s ultimate success with AI will likely depend on how suitable its environment is for such powerful applications. While the cloud is emerging as a major resource for data-intensive AI workloads, enterprises still rely on their on-premises IT environments for these projects.

Big data storage: Infrastructure requirements for AI

One of the biggest considerations is AI data storage, specifically the ability to scale storage as the volume of data grows. As organizations prepare enterprise AI strategies and build the necessary infrastructure, storage must be a top priority. That includes ensuring the proper storage capacity, IOPS and reliability to deal with the massive volumes of data required for effective AI.

Figuring out what kind of storage an organization needs depends on many factors, including the level of AI an organization plans to use and whether it needs to make real-time decisions.

For example, for advanced, high-value neural network ecosystems, traditional network-attached storage architectures might present scaling issues with I/O and latency. Similarly, a financial services company that uses enterprise AI systems for real-time trading decisions may need fast all-flash storage technology.

Many companies are already building big data and analytics environments designed to support enormous data volumes, and these will likely be suitable for many types of AI applications.

Another factor is the nature of the source data. AI applications depend on source data, so an organization needs to know where the source data resides and how AI applications will use it. For instance, will applications be analyzing sensor data in real time, or will they use post-processing?

You also need to factor in how much data AI applications will generate. AI applications make better decisions as they’re exposed to more data. As databases grow over time, companies need to monitor capacity and plan for expansion as needed.
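As a rough illustration of capacity planning, the sketch below projects storage use under a constant monthly growth rate and estimates how many months remain before capacity is reached. The function names, the compound-growth model and the example figures are assumptions made for illustration only; real data growth is rarely this regular.

```python
import math


def projected_usage(used_tb: float, monthly_growth: float, months: int) -> float:
    """Projected storage use after `months`, assuming compound monthly growth."""
    return used_tb * (1 + monthly_growth) ** months


def months_until_full(used_tb: float, capacity_tb: float, monthly_growth: float) -> int:
    """Whole months until projected use first reaches capacity.

    Hypothetical helper: assumes growth compounds at a constant monthly rate.
    """
    if used_tb <= 0 or monthly_growth <= 0:
        raise ValueError("used_tb and monthly_growth must be positive")
    if used_tb >= capacity_tb:
        return 0
    # used * (1 + g)^n >= capacity  =>  n >= log(capacity / used) / log(1 + g)
    return math.ceil(math.log(capacity_tb / used_tb) / math.log(1 + monthly_growth))


if __name__ == "__main__":
    # Example figures are invented: 100 TB used, 200 TB capacity, 10% growth per month.
    print(months_until_full(100, 200, 0.10))
```

Running a projection like this against monitored usage figures gives an early signal of when expansion needs to be budgeted.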

AI networking infrastructure

Networking is another key component of an artificial intelligence infrastructure. To provide the high efficiency at scale required to support AI and machine learning models, organizations will likely need to upgrade their networks.

Deep learning algorithms are highly dependent on communications, and enterprise networks will need to keep stride with demand as AI efforts expand. That’s why scalability must be a high priority, and that will require high-bandwidth, low-latency networks and creative architectures.

Companies should automate wherever possible. For example, they should deploy automated infrastructure management tools in their data centers.

Network infrastructure providers, meanwhile, are looking to do the same. Software-defined networks are being combined with machine learning to create intent-based networks that can anticipate network demands or security threats and react in real time.

Artificial intelligence workloads

Also critical for an artificial intelligence infrastructure is having sufficient compute resources, including CPUs and GPUs.

A CPU-based environment can handle basic AI workloads, but deep learning involves multiple large data sets and deploying scalable neural network algorithms. For that, CPU-based computing might not be sufficient.

Creating an AI-optimized hardware stack starts with analyzing CPU and GPU needs. Meanwhile, more recently established companies, including Graphcore, Cerebras and Ampere Computing, have created chips for advanced AI workloads.

Preparing AI data

Organizations have much to consider. Not only do they have to choose where they will store data, how they will move it across networks and how they will process it, but they also have to choose how they will prepare the data for use in AI applications.

One of the critical steps for successful enterprise AI is data cleansing. Also called data scrubbing, it’s the process of updating or removing database records that are inaccurate, incomplete, improperly formatted or duplicated.

Companies, particularly those in data-driven sectors, should consider deploying automated data cleansing tools that assess data for errors using rules or algorithms. Data quality is especially critical with AI. If the data feeding AI systems is inaccurate or out of date, the output and any related business decisions will also be inaccurate.
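To make rule-based cleansing concrete, here is a minimal sketch that drops incomplete, improperly formatted and duplicated records. The record schema (id, email, updated), the rules and the sample data are all hypothetical; commercial cleansing tools apply far richer rule sets.

```python
from datetime import datetime

# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("id", "email", "updated")


def is_blank(value) -> bool:
    """True when a field is missing or contains only whitespace."""
    return value is None or not str(value).strip()


def clean_records(records):
    """Return records that pass simple completeness, format and duplicate rules."""
    seen_emails = set()
    cleaned = []
    for row in records:
        # Rule 1 (incomplete): skip rows with a missing or blank required field.
        if any(is_blank(row.get(field)) for field in REQUIRED_FIELDS):
            continue
        # Normalize formatting before comparing values.
        email = str(row["email"]).strip().lower()
        # Rule 2 (improperly formatted): a deliberately rough email check.
        if email.count("@") != 1:
            continue
        # Rule 3 (improperly formatted): dates must parse as YYYY-MM-DD.
        try:
            updated = datetime.strptime(str(row["updated"]).strip(), "%Y-%m-%d").date()
        except ValueError:
            continue
        # Rule 4 (duplicated): keep only the first row seen for each email.
        if email in seen_emails:
            continue
        seen_emails.add(email)
        cleaned.append({"id": row["id"], "email": email, "updated": updated.isoformat()})
    return cleaned


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": " Ana@Example.com ", "updated": "2021-03-01"},
        {"id": 2, "email": "ana@example.com", "updated": "2021-03-02"},  # duplicate
        {"id": 3, "email": "", "updated": "2021-03-02"},                 # incomplete
        {"id": 4, "email": "bob@example.com", "updated": "03/02/2021"},  # bad date format
    ]
    print(clean_records(sample))
```

Keeping each rule separate makes it easier to log why a record was rejected, which matters when the rejected rows need to be corrected rather than simply discarded.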

AI data management and governance

Another important factor is data access. Does the organization have the proper mechanisms in place to deliver data in a secure and efficient manner to the users who need it?

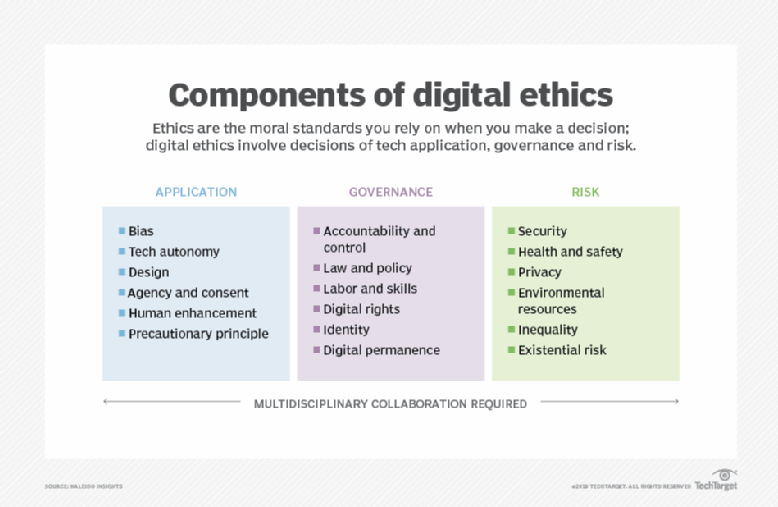

Creating an ethical AI infrastructure will aid in a company’s fight against bias, reduce risk and ensure accountability.

AIoT

No discussion of artificial intelligence infrastructure would be complete without mentioning its intersection with IoT. The artificial intelligence IoT (AIoT) involves gathering and analyzing data from countless devices, products, sensors, assets, locations, vehicles, etc., using IoT, AI and machine learning to optimize data management and analytics. AIoT is crucial to gaining insights from all the information coming in from connected things.

Business data platform Statista forecast that there would be more than 10 billion connected IoT devices worldwide in 2021. Furthermore, Statista expects that number to grow to more than 25 billion devices by 2030.
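For a sense of scale, the short calculation below derives the compound annual growth rate implied by those two figures. It treats the forecasts as exactly 10 billion and 25 billion devices, which is an approximation because Statista states both as "more than", and the function name is illustrative.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years`."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumed round figures: 10 billion devices in 2021, 25 billion in 2030.
rate = implied_annual_growth(10e9, 25e9, 2030 - 2021)
print(f"{rate:.1%}")  # roughly 10.7% per year
```

Sustained growth at about that rate means the device population more than doubles over the period, which is the load that networks, storage and analytics platforms have to be sized for.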

Imagine the staggering amount of data generated by connected objects, and it will be up to companies and their AI tools to integrate, manage and secure all of this information.

From an artificial intelligence infrastructure standpoint, companies need to look at their networks, data storage, data analytics and security platforms to make sure they can effectively handle the growth of their IoT ecosystems. That includes data generated by their own devices, as well as those of their supply chain partners.

AI training

Last but certainly not least: Training and skills development are vital for any IT endeavor and especially enterprise AI initiatives. Companies will need data analysts, data scientists, developers, cybersecurity experts, network engineers and IT professionals with a variety of skills to build and maintain their infrastructure to support AI and to use artificial intelligence technologies, such as machine learning, NLP and deep learning, on an ongoing basis.

They will also need people who are capable of managing the various aspects of infrastructure development and who are well versed in the business goals of the organization. Putting together a strong team is an essential part of any artificial intelligence infrastructure development effort.

This article has been published from the source link without modifications to the text. Only the headline has been changed.
