A common step when working with BigML is extracting data from a database or document repository for uploading as a BigML Data Source. Have you ever wished you could skip that step and create a BigML Data Source directly from your data store? Well, now you can!

Both the BigML Dashboard and the API allow you to provide connection information along with a table or query specifying the data you wish to extract. BigML will then connect to your data store and create the Data Source in BigML’s server.

Importing External Data with the BigML Dashboard

In the Dashboard, go to the Sources tab and you will see a new database icon with a dropdown for external sources as shown here:

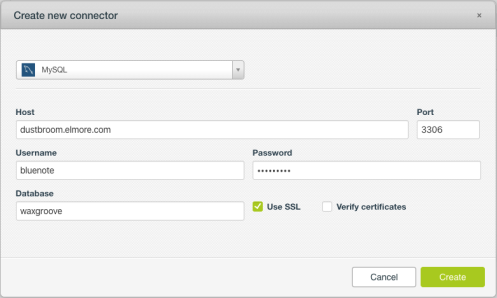

Choose your desired data store and you will have the opportunity to select a connector to a particular instance. Or, you can create a new connector by providing the necessary information. This can vary according to the data store. Here we see the Create New Connector dialog for MySQL:

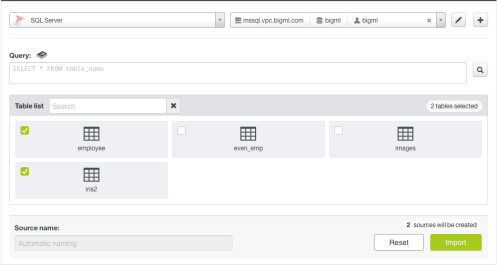

Once you have selected your connector, you will be presented with the tables and views (where applicable) from your data store. Here you have two options. First, you can simply select one or more tables and immediately import them into your BigML account as Data Sources. Each table will be imported into a separate source.

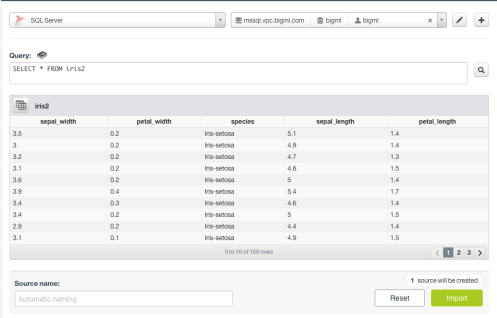

If you’d like to first take a look at a bit of the data from a given table you can click on it for a preview. That way you can remind yourself of the columns and see some sample data before importing. Here we see a preview of a table containing the well-known Iris data set:

Sometimes the simplest table import is too blunt an instrument. That’s where your second option comes in — the ability to select the exact data you want by writing an SQL select statement. If you only wish to import a subset of columns, for example, the query can be as simple as

select sepal_width, petal_width, species from iris

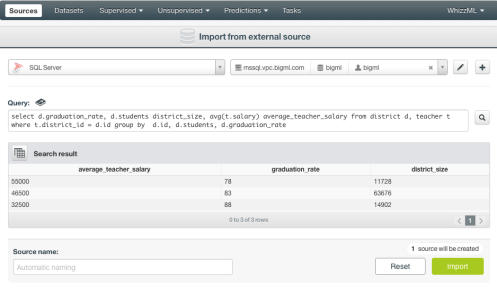

The preview button will verify that the query is valid in your data store and show you the initial result set, allowing you to confirm your intentions before importing into a BigML Data Source. Be assured you can take advantage of your data store’s full query language. A more advanced example, below, shows a select statement with both a join and a group-by clause. The typically normalized data has one table with school district information and another containing individual teacher statistics. Here we are creating a Data Source with information about school districts inclusive of the average teacher salary in each district:

Importing External Data via the BigML API

As is the case with most BigML features, external data sources can be utilized via the API. Again, this is done by providing connection information along with either a table, for a simple import, or a custom query for more control. Here’s an example using curl that imports a “sales” table as a BigML Data Source. (See the BigML documentation for how to construct your BIGML_AUTH string.)

curl "https://bigml.io/source?${BIGML_AUTH}" \

-X POST \

-H 'content-type: application/json' \

-d '{"external_data": {

"source": "sqlserver",

"connection": {

"host": "db.bigbox.com",

"port": 1433,

"database": "biztel",

"user": "autosource",

"password": "********"

},

"tables": "sales"}}'

In the case of the API, you have a few ways to control the imported data without resorting to a query. You can specify which fields to include or which to exclude, as well as limiting the number of records to import. You can also specify an offset along with an order to put that offset in context. All of this is explained in detail in our API docs.

This article has been published from the source link without modifications to the text. Only the headline has been changed.