Soft robots collect more useful information about their surroundings

Creating soft robots has been a long-running challenge in robotics. Their rigid counterparts have a built-in advantage: a limited range of motion. Rigid robots’ finite array of joints and limbs usually makes for manageable calculations by the algorithms that control mapping and motion planning.

A team of MIT researchers developed a deep learning neural network to aid the design of soft-bodied robots.

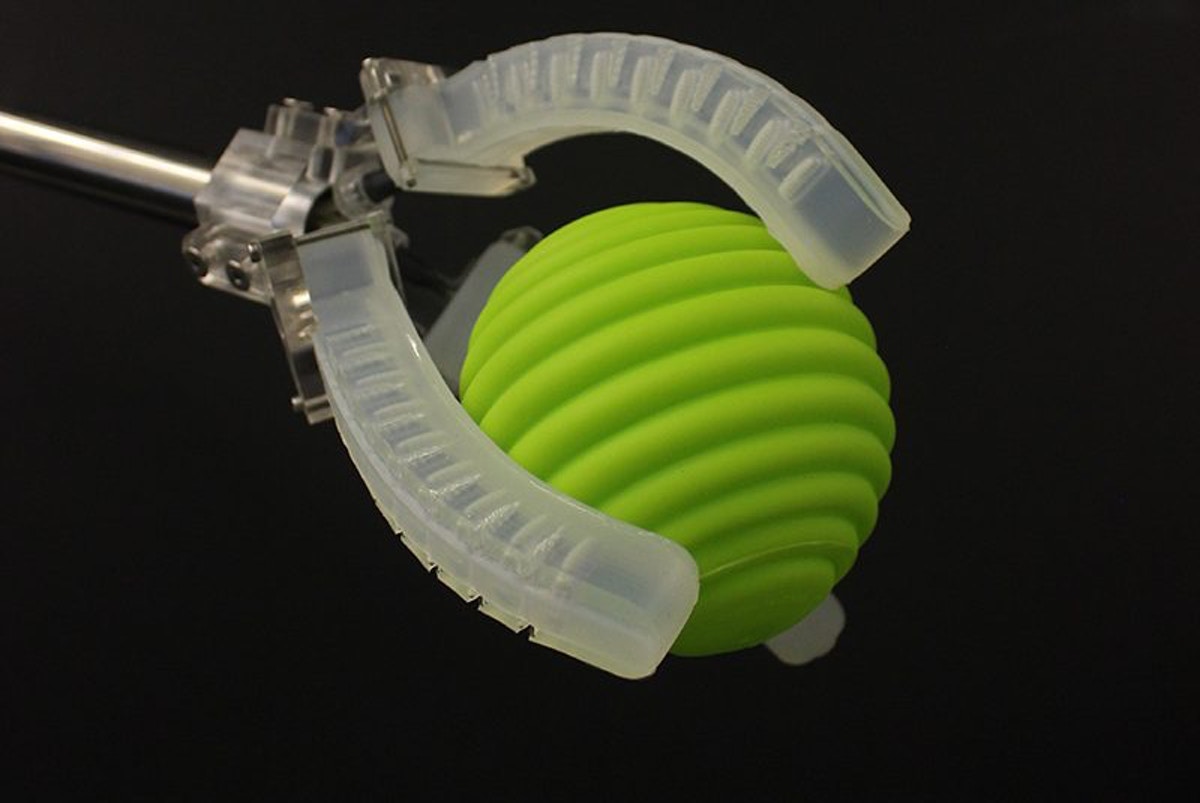

Soft-bodied robots can interact with people more safely and slip into tight spaces with ease, but unlike their rigid counterparts, they are not so tractable. For robots to reliably complete their programmed duties, they need to know the whereabouts of all their body parts. That’s a tall task for a soft robot that can deform in a virtually infinite number of ways. The algorithm developed by the MIT researchers can help engineers design soft robots that collect more useful information about their surroundings. The deep-learning algorithm suggests an optimised placement of sensors within the robot’s body, allowing it to better interact with its environment and complete assigned tasks. The advance is a step toward the automation of robot design. “The system not only learns a given task, but also how to best design the robot to solve that task,” says Alexander Amini. “Sensor placement is a very difficult problem to solve. So, having this solution is extremely exciting.”

“You can’t put an infinite number of sensors on the robot itself,” says Spielberg. “So, the question is: How many sensors do you have, and where do you put those sensors in order to get the most bang for your buck?” The team turned to deep learning for an answer.

The researchers developed a novel neural network architecture that both optimises sensor placement and learns to efficiently complete tasks. First, the researchers divided the robot’s body into regions called “particles.” Each particle’s rate of strain was provided as an input to the neural network. Through a process of trial and error, the network “learns” the most efficient sequence of movements to complete tasks, like gripping objects of different sizes. At the same time, the network keeps track of which particles are used most often, and it culls the lesser-used particles from the set of inputs for the network’s subsequent trials.
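The culling idea can be illustrated with a toy sketch. This is not the researchers’ architecture: a least-squares fit stands in for the neural network, the absolute size of each fitted weight stands in for “how often a particle is used,” and the strain-rate data are synthetic. The function names, the number of particles, and the culling fraction are all assumptions made for illustration.

```python
import numpy as np


def make_synthetic_strain_data(n_samples=200, n_particles=20, seed=0):
    """Build fake strain-rate readings (assumed data, not from the study).

    Only particles 2, 7 and 11 actually influence the task signal `y`.
    """
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_samples, n_particles))  # one column per particle
    informative = [2, 7, 11]
    y = X[:, informative] @ np.array([3.0, -2.0, 1.5])
    y += 0.01 * rng.normal(size=n_samples)  # small measurement noise
    return X, y


def prune_particles(X, y, keep, cull_fraction=0.5):
    """Repeatedly refit and cull the least-used particles until `keep` remain.

    Stand-in for the trial-and-error loop: each round fits a linear model on
    the active particles, ranks them by |weight|, and drops the weakest ones.
    Ranking by |weight| is only meaningful because all columns share a scale.
    """
    active = np.arange(X.shape[1])
    while len(active) > keep:
        # "Training" step: least squares in place of a neural network.
        w, *_ = np.linalg.lstsq(X[:, active], y, rcond=None)
        importance = np.abs(w)
        # Keep a shrinking share of particles, always removing at least one
        # and never going below the sensor budget `keep`.
        target = int(np.ceil(len(active) * (1.0 - cull_fraction)))
        n_keep = max(keep, min(len(active) - 1, target))
        strongest = np.argsort(importance)[::-1][:n_keep]
        active = np.sort(active[strongest])
    return active


if __name__ == "__main__":
    X, y = make_synthetic_strain_data()
    selected = prune_particles(X, y, keep=3)
    print("Suggested sensor locations (particle indices):", selected.tolist())
```

With this synthetic data, the loop should narrow 20 candidate particles down to the three that actually drive the task signal, which mirrors the “most bang for your buck” question of where a limited number of sensors should go.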

Spielberg says their work could help to automate the process of robot design. In addition to developing algorithms to control a robot’s movements, “we also need to think about how we’re going to sensorize these robots, and how that will interplay with other components of that system,” he says. And better sensor placement could have industrial applications, especially where robots are used for fine tasks like gripping. “That’s something where you need a very robust, well-optimized sense of touch,” says Spielberg. “So, there’s potential for immediate impact.”

“Automating the design of sensorised soft robots is an important step toward rapidly creating intelligent tools that help people with physical tasks,” says coauthor Daniela Rus. “The sensors are an important aspect of the process, as they enable the soft robot to ‘see’ and understand the world and its relationship with the world.”

The research will be presented during April’s IEEE International Conference on Soft Robotics and will be published in the journal IEEE Robotics and Automation Letters.
