Creating art for digital video games takes a high degree of artistic creativity and technical knowledge, while also requiring game artists to quickly iterate on ideas and produce a high volume of assets, often in the face of tight deadlines. What if artists had a paintbrush that acted less like a tool and more like an assistant? A machine learning model acting as such a paintbrush could reduce the amount of time necessary to create high-quality art without sacrificing artistic choices, perhaps even enhancing creativity.

Today, we present Chimera Painter, a trained machine learning (ML) model that automatically creates a fully fleshed out rendering from a user-supplied creature outline. Employed as a demo application, Chimera Painter adds features and textures to a creature outline segmented with body part labels, such as “wings” or “claws”, when the user clicks the “transform” button. Below is an example using the demo with one of the preset creature outlines.

In this post, we describe some of the challenges in creating the ML model behind Chimera Painter and demonstrate how one might use the tool for the creation of video game-ready assets.

Prototyping for a New Type of Model

In developing an ML model to produce video-game ready creature images, we created a digital card game prototype around the concept of combining creatures into new hybrids that can then battle each other. In this game, a player would begin with cards of real-world animals (e.g., an axolotl or a whale) and could make them more powerful by combining them (making the dreaded Axolotl-Whale chimera). This provided a creative environment for demonstrating an image-generating model, as the number of possible chimeras necessitated a method for quickly designing large volumes of artistic assets that could be combined naturally, while still retaining identifiable visual characteristics of the original creatures.

Since our goal was to create high-quality creature card images guided by artist input, we experimented with generative adversarial networks (GANs), informed by artist feedback, to create creature images that would be appropriate for our fantasy card game prototype. GANs pair two convolutional neural networks against each other: a generator network to create new images and a discriminator network to determine if these images are samples from the training dataset (in this case, artist-created images) or not. We used a variant called a conditional GAN, where the generator takes a separate input to guide the image generation process. Interestingly, our approach was a strict departure from other GAN efforts, which typically focus on photorealism.

To train the GANs, we created a dataset of full color images with single-species creature outlines adapted from 3D creature models. The creature outlines characterized the shape and size of each creature, and provided a segmentation map that identified individual body parts. After model training, the model was tasked with generating multi-species chimeras, based on outlines provided by artists. The best performing model was then incorporated into Chimera Painter. Below we show some sample assets generated using the model, including single-species creatures, as well as the more complex multi-species chimeras.

Learning to Generate Creatures with Structure

An issue with using GANs for generating creatures was the potential for loss of anatomical and spatial coherence when rendering subtle or low-contrast parts of images, despite these being of high perceptual importance to humans. Examples of this can include eyes, fingers, or even distinguishing between overlapping body parts with similar textures (see the affectionately named BoggleDog below).

Generating chimeras required a new non-photographic fantasy-styled dataset with unique characteristics, such as dramatic perspective, composition, and lighting. Existing repositories of illustrations were not appropriate to use as datasets for training an ML model, because they may be subject to licensing restrictions, have conflicting styles, or simply lack the variety needed for this task.

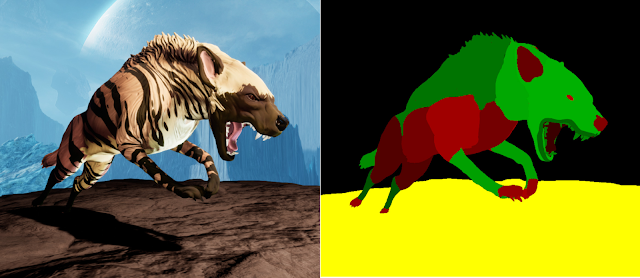

To solve this, we developed a new artist-led, semi-automated approach for creating an ML training dataset from 3D creature models, which allowed us to work at scale and rapidly iterate as needed. In this process, artists would create or obtain a set of 3D creature models, one for each creature type needed (such as hyenas or lions). Artists then produced two sets of textures that were overlaid on the 3D model using the Unreal Engine — one with the full color texture (left image, below) and the other with flat colors for each body part (e.g., head, ears, neck, etc), called a “segmentation map” (right image, below). This second set of body part segments was given to the model at training to ensure that the GAN learned about body part-specific structure, shapes, textures, and proportions for a variety of creatures.

The 3D creature models were all placed in a simple 3D scene, again using the Unreal Engine. A set of automated scripts would then take this 3D scene and interpolate between different poses, viewpoints, and zoom levels for each of the 3D creature models, creating the full color images and segmentation maps that formed the training dataset for the GAN. Using this approach, we generated 10,000+ image + segmentation map pairs per 3D creature model, saving the artists millions of hours of time compared to creating such data manually (at approximately 20 minutes per image).

Fine Tuning

The GAN had many different hyper-parameters that could be adjusted, leading to different qualities in the output images. In order to better understand which versions of the model were better than others, artists were provided samples for different creature types generated by these models and asked to cull them down to a few best examples. We gathered feedback about desired characteristics present in these examples, such as a feeling of depth, style with regard to creature textures, and realism of faces and eyes. This information was used both to train new versions of the model and, after the model had generated hundreds of thousands of creature images, to select the best image from each creature category (e.g., gazelle, lynx, gorilla, etc).

We tuned the GAN for this task by focusing on the perceptual loss. This loss function component (also used in Stadia’s Style Transfer ML) computes a difference between two images using extracted features from a separate convolutional neural network (CNN) that was previously trained on millions of photographs from the ImageNet dataset. The features are extracted from different layers of the CNN and a weight is applied to each, which affects their contribution to the final loss value. We discovered that these weights were critically important in determining what a final generated image would look like. Below are some examples from the GAN trained with different perceptual loss weights.

Some of the variation in the images above is due to the fact that the dataset includes multiple textures for each creature (for example, a reddish or grayish version of the bat). However, ignoring the coloration, many differences are directly tied to changes in perceptual loss values. In particular, we found that certain values brought out sharper facial features (e.g., bottom right vs. top right) or “smooth” versus “patterned” (top right vs. bottom left) that made generated creatures feel more real.

Here are some creatures generated from the GAN trained with different perceptual loss weights, showing off a small sample of the outputs and poses that the model can handle.

Chimera Painter

The trained GAN is now available in the Chimera Painter demo, allowing artists to work iteratively with the model, rather than drawing dozens of similar creatures from scratch. An artist can select a starting point and then adjust the shape, type, or placement of creature parts, enabling rapid exploration and for the creation of a large volume of images. The demo also allows for uploading a creature outline created in an external program, like Photoshop. Simply download one of the preset creature outlines to get the colors needed for each creature part and use this as a template for drawing one outside of Chimera Painter, and then use the “Load’ button on the demo to use this outline to flesh out your creation.

It is our hope that these GAN models and the Chimera Painter demonstration tool might inspire others to think differently about their art pipeline. What can one create when using machine learning as a paintbrush?

This article has been published from the source link without modifications to the text. Only the headline has been changed.

Source link