Obviously don’t expect it to be Siri or Alexa…

Some people genuinely dislike human interaction. Whenever they are forced to socialize or go to events that involve lots of people, they feel detached and awkward. Personally, I believe that I’m mostly extroverted because I gain energy from interacting with other people. There are plenty of people on this Earth who are the exact opposite and get very drained from social interaction.

I’m reminded of a very unique film called Her (2013). The basic premise of the film is that a man who suffers from loneliness, depression, a boring job, and an impending divorce, ends up falling in love with an AI (artificial intelligence) on his computer’s operating system. Maybe at the time this was a very science-fictiony concept, given that AI back then wasn’t advanced enough to become a surrogate human, but now? 2020? Things have changed a LOT. I fear that people will give up on finding love (or even social interaction) among humans and seek it out in the digital realm. Don’t believe me? I won’t tell you what it means, but just search up the definition of the term waifu and just cringe.

Now isn’t this an overly verbose introduction to a simple machine learning project? Possibly. Now that I’ve detailed an issue that has grounds for actual concern for many men (and women) in this world, let’s switch gears and build something simple and fun!

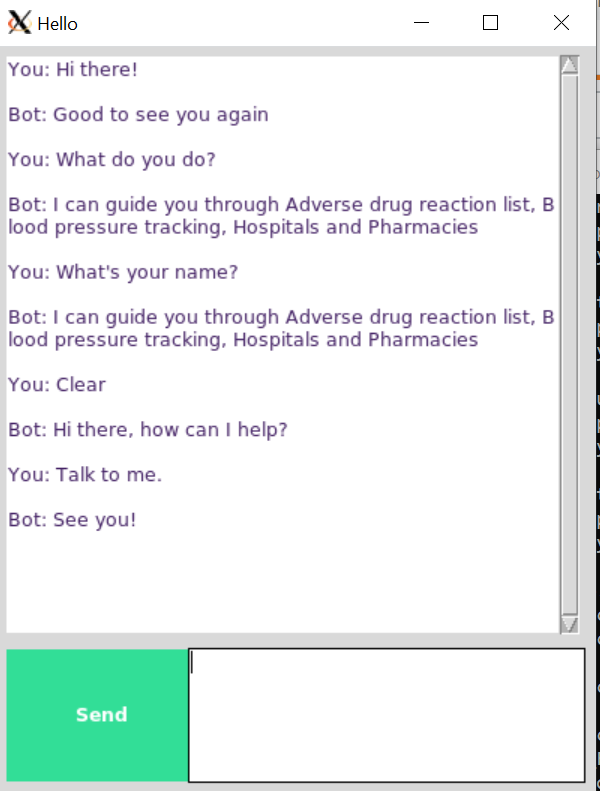

Here’s what the finished product will look like.

Agenda

- Libraries & Data

- Initializing Chatbot Training

- Building the Deep Learning Model

- Building Chatbot GUI

- Running Chatbot

- Conclusion

- Areas of Improvement

If you want a more in-depth view of this project, or if you want to add to the code, check out the GitHub repository.

Libraries & Data

All of the necessary components to run this project are on the GitHub repository. Feel free to fork the repository and clone it to your local machine. Here’s a quick breakdown of the components:

- train_chatbot.py — the code for reading in the natural language data into a training set and using a Keras sequential neural network to create a model

- chatgui.py — the code for cleaning up the responses based on the predictions from the model and creating a graphical interface for interacting with the chatbot

- classes.pkl — a list of different types of classes of responses

- words.pkl — a list of different words that could be used for pattern recognition

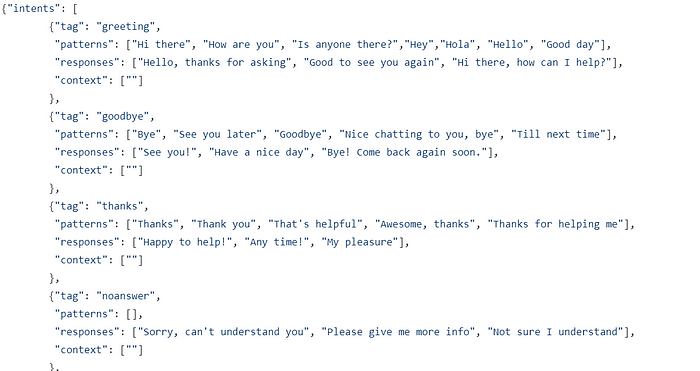

- intents.json — a bunch of JSON objects that list different tags corresponding to different types of word patterns

- chatbot_model.h5 — the actual model created by train_chatbot.py and used by chatgui.py

The full code is on the GitHub repository, but I’m going to walk through the details of the code for the sake of transparency and better understanding.

Now let’s begin by importing the necessary libraries. (Before you run the Python files in your terminal, make sure the packages are installed properly. I use pip3 to install them.)

import nltk

nltk.download('punkt')

nltk.download('wordnet')

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

import json

import pickle

import numpy as np

from keras.models import Sequential

from keras.layers import Dense, Activation, Dropout

from keras.optimizers import SGD

import random

We import several libraries: nltk (Natural Language Toolkit), which contains tools for cleaning up text and preparing it for deep learning algorithms; json, which loads JSON files directly into Python; pickle, which saves and loads Python objects as pickle files; numpy, which performs linear algebra operations very efficiently; and keras, the deep learning framework we’ll be using.

Initializing Chatbot Training

words=[]

classes = []

documents = []

ignore_words = ['?', '!']

data_file = open('intents.json').read()

intents = json.loads(data_file)

Now it’s time to initialize all of the lists where we’ll store our natural language data. We have our json file I mentioned earlier which contains the “intents”. Here’s a snippet of what the json file actually looks like.

We use the json module to load in the file and save it as the variable intents.
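For reference, the structure that the loading code expects can be sketched as below. The tags, patterns, and responses are hypothetical placeholders, not the contents of the repository’s intents.json; only the key names intents, tag, patterns, and responses come from the code in this article.

```python
import json

# Hypothetical sample data; the real intents.json has its own tags and text.
sample_json = """
{
  "intents": [
    {
      "tag": "greeting",
      "patterns": ["Hi there", "Hello", "Good day"],
      "responses": ["Hello!", "Hi, how can I help?"]
    },
    {
      "tag": "goodbye",
      "patterns": ["Bye", "See you later"],
      "responses": ["Goodbye!", "Talk to you soon."]
    }
  ]
}
"""

# json.loads turns the JSON text into nested Python dicts and lists.
sample_intents = json.loads(sample_json)

for intent in sample_intents["intents"]:
    print(intent["tag"], "->", len(intent["patterns"]), "patterns")
```

Each object pairs one tag with the example phrases a user might type (patterns) and the replies the bot may give (responses).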

for intent in intents['intents']:

    for pattern in intent['patterns']:

        # tokenize each pattern into a list of words

        w = nltk.word_tokenize(pattern)

        words.extend(w)

        # add a (tokens, tag) pair to the documents

        documents.append((w, intent['tag']))

        # add the tag to our class list if it is new

        if intent['tag'] not in classes:

            classes.append(intent['tag'])

If you look carefully at the json file, you can see that there are sub-objects within objects. For example, “patterns” is an attribute of each object within “intents”. So we use a nested for loop to tokenize every pattern and add its words to our words list. We then add each pair of tokenized pattern and corresponding tag to our documents list. We also add the tags to our classes list, using a simple conditional statement to prevent repeats.
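To see what the three lists end up holding, here is a small dependency-free sketch of the same nested loop. The intents are made up, and str.split() stands in for nltk.word_tokenize, so punctuation handling will differ from the real script.

```python
# Hypothetical intents; str.split() is a simplified stand-in for nltk.word_tokenize.
sample_intents = {
    "intents": [
        {"tag": "greeting", "patterns": ["Hi there", "Hello"]},
        {"tag": "goodbye", "patterns": ["See you later"]},
    ]
}

words, classes, documents = [], [], []

for intent in sample_intents["intents"]:
    for pattern in intent["patterns"]:
        tokens = pattern.split()                   # simplified tokenizer (assumption)
        words.extend(tokens)                       # flat word list, duplicates allowed for now
        documents.append((tokens, intent["tag"]))  # one (tokens, tag) pair per pattern
        if intent["tag"] not in classes:           # keep each tag only once
            classes.append(intent["tag"])

print(words)
print(classes)
print(documents)
```

Note that documents keeps one entry per pattern, while classes keeps one entry per tag.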

words = [lemmatizer.lemmatize(w.lower()) for w in words if w not in ignore_words]

words = sorted(list(set(words)))

classes = sorted(list(set(classes)))

print(len(documents), "documents")

print(len(classes), "classes", classes)

print(len(words), "unique lemmatized words", words)

# save the vocabulary and class list for chatgui.py

with open('words.pkl', 'wb') as f:

    pickle.dump(words, f)

with open('classes.pkl', 'wb') as f:

    pickle.dump(classes, f)

Next, we will take the words list and lemmatize and lowercase all the words inside. In case you don’t already know, to lemmatize a word means to reduce it to its base form, or lemma. For example, the words “walking”, “walked”, and “walks” all have the same lemma, which is just “walk”. (NLTK’s WordNetLemmatizer treats every word as a noun unless you pass it a part-of-speech tag, so verb forms like these are only reduced when tagged as verbs.) The purpose of lemmatizing our words is to collapse variants of the same word into a single vocabulary entry, which keeps the vocabulary small and helps the model treat related words alike. This is very similar to stemming, which strips suffixes by rule to reach a root form, whereas lemmatization relies on a dictionary (WordNet, here).
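The filter, lowercase, lemmatize, de-duplicate, and sort steps can be sketched without NLTK. The lookup table below is a toy stand-in for WordNetLemmatizer, invented purely for illustration, and the word list is made up.

```python
# Toy lookup table standing in for WordNetLemmatizer (illustration only).
TOY_LEMMAS = {"walking": "walk", "walked": "walk", "walks": "walk", "dogs": "dog"}

def toy_lemmatize(word):
    """Return the lemma from the toy table, or the word itself if unknown."""
    return TOY_LEMMAS.get(word, word)

ignore_words = ["?", "!"]
raw_words = ["Walking", "walked", "walks", "Dogs", "dog", "?", "hello", "!"]

# Drop ignored tokens, lowercase, lemmatize, then de-duplicate and sort.
vocabulary = sorted({toy_lemmatize(w.lower()) for w in raw_words if w not in ignore_words})

print(vocabulary)
```

Eight raw tokens shrink to three vocabulary entries, which is exactly the effect we want before building bags of words.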

Next we sort our lists and print out the results. Alright, looks like we’re set to build our deep learning model!

Building the Deep Learning Model

# initializing training data

training = []

output_empty = [0] * len(classes)

for doc in documents:

    # list of tokenized words for the pattern

    pattern_words = doc[0]

    # lemmatize each word - create base word, in attempt to represent related words

    pattern_words = [lemmatizer.lemmatize(word.lower()) for word in pattern_words]

    # bag of words: 1 if the vocabulary word occurs in the current pattern, else 0

    bag = [1 if w in pattern_words else 0 for w in words]

    # output is a '0' for each tag and '1' for current tag (for each pattern)

    output_row = list(output_empty)

    output_row[classes.index(doc[1])] = 1

    training.append([bag, output_row])

# shuffle our features and turn into np.array

# (dtype=object because each row holds two lists of different lengths)

random.shuffle(training)

training = np.array(training, dtype=object)

# split into features and labels. X - patterns, Y - intents

train_x = list(training[:,0])

train_y = list(training[:,1])

print("Training data created")

Let’s initialize our training data with a variable training. We’re creating a giant nested list that contains a bag of words for each of our documents. Each bag is paired with output_row, a one-hot list that has a 1 at the position of the document’s tag and 0 everywhere else. We then shuffle our training set and split it into two lists, with the patterns being the X variable and the intents being the Y variable. Note that this is not a train-test split: every example is used for training.
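A minimal sketch of the encoding, assuming a tiny made-up vocabulary and two hypothetical classes, shows what a single training row looks like. The helper name encode is introduced here for illustration and is not part of train_chatbot.py.

```python
# Hypothetical vocabulary and classes, already lemmatized and sorted.
words = ["bye", "hello", "hi", "later", "see", "you"]
classes = ["goodbye", "greeting"]
documents = [(["hi", "you"], "greeting"), (["see", "you", "later"], "goodbye")]

def encode(document):
    """Turn one (tokens, tag) pair into a (bag-of-words, one-hot label) pair."""
    tokens, tag = document
    bag = [1 if w in tokens else 0 for w in words]    # 1 where a vocabulary word appears
    label = [1 if c == tag else 0 for c in classes]   # 1 only at the position of the tag
    return bag, label

training = [encode(doc) for doc in documents]
train_x = [bag for bag, _ in training]
train_y = [label for _, label in training]

print(train_x)
print(train_y)
```

Every bag has the length of the vocabulary and every label has the length of the class list, which is why those two lengths later define the input and output sizes of the network.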

# Create model - 3 layers. First layer 128 neurons, second layer 64 neurons and 3rd output layer contains number of neurons

# equal to number of intents to predict output intent with softmax

model = Sequential()

model.add(Dense(128, input_shape=(len(train_x[0]),), activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(64, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(len(train_y[0]), activation='softmax'))

# Compile model. Stochastic gradient descent with Nesterov accelerated gradient gives good results for this model

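# Older Keras releases spell the first argument lr and also accept decay=1e-6; newer ones use learning_rate and reject decay

sgd = SGD(learning_rate=0.01, momentum=0.9, nesterov=True)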

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# fitting and saving the model

hist = model.fit(np.array(train_x), np.array(train_y), epochs=200, batch_size=5, verbose=1)

model.save('chatbot_model.h5')

print("model created")

Now that we have our training data ready, we will use a deep learning model from keras called Sequential. I don’t want to overwhelm you with all of the details about how deep learning models work, but if you are curious, check out the resources at the bottom of the article.

The Sequential model in keras is a plain stack of layers. Built from Dense layers, as it is here, it forms one of the simplest neural networks, a multi-layer perceptron. If you don’t know what that is, I don’t blame you. Here’s the documentation in keras.

This particular network has 3 layers, with the first one having 128 neurons, the second one having 64 neurons, and the third one having the number of intents as the number of neurons. Remember, the point of this network is to be able to predict which intent to choose given some data.
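The output layer uses softmax, which turns one raw score per intent into probabilities that sum to 1. Here is a rough pure-Python sketch of that final step; the class names and scores are invented, and Keras computes this internally rather than with code like this.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

classes = ["goodbye", "greeting", "thanks"]   # hypothetical intent tags
raw_scores = [0.5, 2.0, -1.0]                 # made-up output-layer values

probabilities = softmax(raw_scores)
predicted = classes[probabilities.index(max(probabilities))]

print(probabilities)
print(predicted)
```

The intent with the largest raw score always gets the largest probability, so picking the top probability is how the network chooses an intent.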

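The model will be trained with stochastic gradient descent, which is also a very complicated topic. All you need to know is that, instead of computing the gradient over the whole training set before each update, stochastic gradient descent updates the weights after each small batch (here, 5 examples), which makes each step much cheaper than in normal gradient descent.

After the model is trained, it is saved as chatbot_model.h5. (The training lists are converted to numpy arrays only so they can be passed to fit().)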
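If you want a feel for how mini-batch updates work, here is a toy sketch that fits a single weight to made-up data. It is plain mini-batch SGD on a one-parameter model, without the momentum or Nesterov terms used in the Keras optimizer above, and the data, learning rate, and batch size are all assumptions chosen for illustration.

```python
import random

random.seed(0)

# Made-up data following y = 3x, so the best weight is 3.
data = [(0.1 * i, 3.0 * 0.1 * i) for i in range(1, 21)]

def gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

w = 0.0
learning_rate = 0.05
batch_size = 5

for epoch in range(50):
    random.shuffle(data)
    # Each update looks at only batch_size samples instead of the whole dataset.
    for start in range(0, len(data), batch_size):
        batch = data[start:start + batch_size]
        w -= learning_rate * gradient(w, batch)

print(round(w, 3))
```

With 20 samples and a batch size of 5, the weight is updated four times per pass over the data instead of once.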

We will use this model to form our chatbot interface!

Building Chatbot GUI

import json

import pickle

import random

import nltk

import numpy as np

from nltk.stem import WordNetLemmatizer

from keras.models import load_model

lemmatizer = WordNetLemmatizer()

model = load_model('chatbot_model.h5')

intents = json.loads(open('intents.json').read())

words = pickle.load(open('words.pkl','rb'))

classes = pickle.load(open('classes.pkl','rb'))

Once again, we need to extract the information from our files.

def clean_up_sentence(sentence):

    # tokenize the sentence, then lowercase and lemmatize each word

    sentence_words = nltk.word_tokenize(sentence)

    sentence_words = [lemmatizer.lemmatize(word.lower()) for word in sentence_words]

    return sentence_words

# return bag of words array: 0 or 1 for each word in the bag that exists in the sentence

def bow(sentence, words, show_details=True):

    # tokenize the pattern

    sentence_words = clean_up_sentence(sentence)

    # bag of words - one slot per vocabulary word

    bag = [0]*len(words)

    for s in sentence_words:

        for i,w in enumerate(words):

            if w == s:

                # assign 1 if current word is in the vocabulary position

                bag[i] = 1

                if show_details:

                    print("found in bag: %s" % w)

    return np.array(bag)

def predict_class(sentence, model):

    # turn the sentence into a bag of words and get the predicted probabilities

    p = bow(sentence, words, show_details=False)

    res = model.predict(np.array([p]))[0]

    # filter out predictions below a threshold

    ERROR_THRESHOLD = 0.25

    results = [[i,r] for i,r in enumerate(res) if r>ERROR_THRESHOLD]

    # sort by strength of probability

    results.sort(key=lambda x: x[1], reverse=True)

    return_list = []

    for r in results:

        return_list.append({"intent": classes[r[0]], "probability": str(r[1])})

    return return_list

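def getResponse(ints, intents_json):

    # fallback reply (an added assumption) for when no intent clears the threshold

    result = "Sorry, I didn't understand that."

    if not ints:

        return result

    tag = ints[0]['intent']

    list_of_intents = intents_json['intents']

    for i in list_of_intents:

        if i['tag'] == tag:

            result = random.choice(i['responses'])

            break

    return result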

def chatbot_response(msg):

    ints = predict_class(msg, model)

    res = getResponse(ints, intents)

    return res

Here are some functions that encapsulate the processes needed for running the GUI. We have the clean_up_sentence() function, which tokenizes, lowercases, and lemmatizes any sentence that is inputted. This function is used in the bow() function, which takes the cleaned-up sentence and creates a bag of words that is used for predicting classes (based on the model we trained earlier).

In our predict_class() function, we use an error threshold of 0.25 to filter out low-confidence predictions. This function outputs a list of intents and their probabilities (their likelihood of matching the correct intent), sorted from most to least likely. The function getResponse() takes that list, looks up the highest-probability intent in the json file, and returns one of its responses chosen at random.
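The threshold-and-sort step is easy to try in isolation. In this sketch the class names and probabilities are made up; in the real script the probabilities come from model.predict().

```python
ERROR_THRESHOLD = 0.25
classes = ["goodbye", "greeting", "thanks"]   # hypothetical intent tags
probabilities = [0.30, 0.65, 0.05]            # made-up softmax output

# Keep only (index, probability) pairs above the threshold.
results = [(i, p) for i, p in enumerate(probabilities) if p > ERROR_THRESHOLD]
# Strongest prediction first.
results.sort(key=lambda pair: pair[1], reverse=True)

ranked = [{"intent": classes[i], "probability": str(p)} for i, p in results]
print(ranked)
```

Here "thanks" is dropped because 0.05 is below the threshold, and "greeting" comes first because it has the highest probability. If every probability falls below the threshold, the list is empty.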

Finally our chatbot_response() takes in a message (which will be inputted through our chatbot GUI), predicts the class with our predict_class() function, puts the output list into getResponse(), then outputs the response. What we get is the foundation of our chatbot. We can now tell the bot something, and it will then respond back.

#Creating GUI with tkinter

import tkinter

from tkinter import *

def send():

    msg = EntryBox.get("1.0",'end-1c').strip()

    EntryBox.delete("0.0",END)

    if msg != '':

        ChatLog.config(state=NORMAL)

        ChatLog.insert(END, "You: " + msg + '\n\n')

        ChatLog.config(foreground="#442265", font=("Verdana", 12 ))

        res = chatbot_response(msg)

        ChatLog.insert(END, "Bot: " + res + '\n\n')

        ChatLog.config(state=DISABLED)

        ChatLog.yview(END)

base = Tk()

base.title("Hello")

base.geometry("400x500")

base.resizable(width=FALSE, height=FALSE)

#Create Chat window

ChatLog = Text(base, bd=0, bg="white", height="8", width="50", font="Arial",)

ChatLog.config(state=DISABLED)

#Bind scrollbar to Chat window

scrollbar = Scrollbar(base, command=ChatLog.yview, cursor="heart")

ChatLog['yscrollcommand'] = scrollbar.set

#Create Button to send message

SendButton = Button(base, font=("Verdana",12,'bold'), text="Send", width="12", height=5,

                    bd=0, bg="#32de97", activebackground="#3c9d9b",fg='#ffffff',

                    command= send )

#Create the box to enter message

EntryBox = Text(base, bd=0, bg="white",width="29", height="5", font="Arial")

#EntryBox.bind("<Return>", send)

#Place all components on the screen

scrollbar.place(x=376,y=6, height=386)

ChatLog.place(x=6,y=6, height=386, width=370)

EntryBox.place(x=128, y=401, height=90, width=265)

SendButton.place(x=6, y=401, height=90)

base.mainloop()

Here comes the fun part (if the other parts weren’t fun already). We can create our GUI with tkinter, a Python library that allows us to create custom interfaces.

We create a function called send() which sets up the basic functionality of our chatbot. If the message that we input into the chatbot is not an empty string, the bot will output a response based on our chatbot_response() function.

After this, we build our chat window, our scrollbar, our button for sending messages, and our textbox to create our message. We place all the components on our screen with simple coordinates and heights.

Running Chatbot

Finally it’s time to run our chatbot!

Because I run my program on a Windows 10 machine, I had to download an X server called Xming. If you run your program and tkinter fails with an error about not being able to open a display, installing and starting Xming may fix it.

Before you run your program, you need to make sure you install python or python3 with pip (or pip3). If you are unfamiliar with command line commands, check out the resources below.

Once you run your program, you should get this.

Conclusion

Congratulations on completing this project! Building a simple chatbot exposes you to a variety of useful skills for data science and general programming. I feel that the best way (for me, at least) to learn anything is to just build and tinker around. If you want to become good at something, you need to get in lots of practice, and the best way to practice is to just get your hands dirty and build!