Hadoop is one of the most mature and well-known open-source big data frameworks on the market. Sprung from the concepts described in a paper about a distributed file system created at Google and implementing the MapReduce algorithm made famous by Google, Hadoop was first released by the open-source community in 2006.

Fourteen years later, many companies still operate Hadoop clusters, though fewer are probably creating new ones. Instead, they opt for other choices, such as cloud-based object stores like AWS S3 buckets, newer distributed computing tools such as Apache Spark, or managed services such as AWS Athena and EMR.

But there are definite advantages to installing and working with the open-source version of Hadoop, even if you don’t actually use its MapReduce features. For instance, if you really want to understand the concept of map and reduce, learning how Hadoop does it will give you a fairly deep understanding of it. You’ll also find that if you are running a Spark job, putting data in the Hadoop Distributed File System (HDFS) and giving your Spark workers access to it can come in handy.

Installing and getting Hadoop working on a cluster of computers is quite straightforward. We’ll start at the beginning and assume you have access to a Unix laptop and Amazon Web Services. This blog will assume that you know how to find the public DNS and private IP addresses for your instances and that you have access to your AWS EC2 key-pair (or .pem file) and AWS credentials (secret key and access key id).

By the end of this blog, you will have created a 4-node Hadoop cluster, run a sample word count map-reduce job on a file that you pulled from an AWS S3 bucket, and saved the results to your cluster’s HDFS.

Provision your instances on AWS

If you were going to programmatically provision your instances, you might use a tool, such as Terraform. For this exercise, if you don’t know how to provision your instances, follow the instructions on this blog to create a cluster of four machines on AWS with one of the machines capable of communicating with the other three.

Update environment, download and unzip Hadoop binaries

For the next set of instructions, you’ll need to log on to all four of your machines via ssh and perform the same commands on each machine. The command is ssh -i ~/.ssh/PEM_FILE ubuntu@DNS, where PEM_FILE is your AWS key pair file and DNS refers to your instance’s public DNS.

1. Install Java

First, you’ll want to update apt, then install Java and double-check that you have the latest Java 8 version, which is what Hadoop 2.7 is compatible with.

$ sudo apt update

$ sudo apt install openjdk-8-jdk

$ java -version
openjdk version "1.8.0_252"
OpenJDK Runtime Environment (build 1.8.0_252-8u252-b09-1~18.04-b09)
OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)
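If you are repeating this on four machines, it can help to check the Java version with a small script instead of reading the banner by eye. The following is a minimal sketch; is_java8 is a hypothetical helper name, and it only assumes that a Java 8 banner contains the text version "1.8. as in the output shown above.

```shell
# Return success if a `java -version` banner line reports a 1.8.x (Java 8) runtime.
# is_java8 is a hypothetical helper name, not part of Java or Hadoop.
is_java8() {
    case "$1" in
        *'version "1.8.'*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example using the banner line quoted in this post.
if is_java8 'openjdk version "1.8.0_252"'; then
    echo "Java 8 detected"
fi
```

On a real machine, java -version writes its banner to stderr, so you would call it as is_java8 "$(java -version 2>&1 | head -n 1)".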

2. Download Hadoop tar

If you’re planning to use Hadoop in conjunction with Spark 2.4 (at least as of May 2020), you’ll want to download an older Hadoop 2.7 version. If you aren’t planning to use Hadoop with Spark, you can choose a stable and more recent version (e.g., Hadoop 3.x). Download, decompress and move the files into position, which in our case will be to the /usr/local/hadoop directory.

$ wget -P /tmp

$ tar xvf /tmp/hadoop-2.7.7.tar.gz

$ sudo mv hadoop-2.7.7 /usr/local/hadoop

3. Update environment variables

You’ll also want to make sure the Hadoop binaries are in your path, so edit the ~/.profile file and update the PATH environment variable by adding this somewhere in the file:

export PATH=/usr/local/hadoop/bin:$PATH

To ensure that your PATH is updated, reload your environment by executing source ~/.profile.
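Because this edit has to be made on every machine, you may prefer to script it. The sketch below appends the export line only when it is not already present, so it is safe to run more than once. add_hadoop_path is a hypothetical helper name, and the profile file is passed in as an argument rather than hard-coded.

```shell
# Append the Hadoop PATH line to a profile file only if it is not already there.
# add_hadoop_path is a hypothetical helper; the profile path is passed as $1.
add_hadoop_path() {
    profile="$1"
    line='export PATH=/usr/local/hadoop/bin:$PATH'
    # -F: fixed string, -x: match the whole line, -q: quiet
    if ! grep -Fxq "$line" "$profile" 2>/dev/null; then
        printf '%s\n' "$line" >> "$profile"
    fi
}
```

A typical call would be add_hadoop_path ~/.profile, followed by source ~/.profile.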

Remember that once you are on your master instance, and if you correctly installed passwordless ssh as described in the instructions detailed in the blog links provided up above, you should be able to get to all of your workers from your master instance (e.g., ssh ubuntu@WORKER_PUBLIC_DNS where WORKER_PUBLIC_DNS refers to the public DNS for the particular worker instance).

Configure Hadoop on the master

Once you have completed the above steps on all four of your instances, return to your master instance and complete the following steps.

1. Configure Hadoop hadoop-env.sh file

You’ll now want to edit your Hadoop configuration files, starting with the /usr/local/hadoop/etc/hadoop/hadoop-env.sh file. First, find the line that starts with export JAVA_HOME and change the assignment (the expression after =) so that it points to the directory where Java was just installed. In our case, Java can be found at /usr/bin/java, and JAVA_HOME is that path with the /bin/java suffix removed.

Next, add a new line to the file that augments the Hadoop classpath to take advantage of additional tool libraries that come with the Hadoop distribution. In other words, you’ll modify the export JAVA_HOME line and add the export HADOOP_CLASSPATH line, so that the two lines look like this:

# Modify location of JAVA_HOME
export JAVA_HOME=/usr

# Add this line to the file
export HADOOP_CLASSPATH=/usr/local/hadoop/share/hadoop/tools/lib/*:$HADOOP_CLASSPATH
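The rule for JAVA_HOME (take the path of the java binary and drop the trailing /bin/java) can be written as a one-line shell function. This is only an illustration of the rule; java_home_from_binary is a hypothetical helper name.

```shell
# Derive JAVA_HOME by stripping the trailing /bin/java from a java binary path.
# java_home_from_binary is a hypothetical helper name.
java_home_from_binary() {
    printf '%s\n' "${1%/bin/java}"
}

java_home_from_binary /usr/bin/java   # prints /usr
```

On many systems /usr/bin/java is a symbolic link. If you would rather point JAVA_HOME at the real installation directory, one option (assuming GNU readlink is available) is to pass the output of readlink -f "$(command -v java)" to the same function.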

If you are planning to run the example listed at the end of this blog, which accesses S3 buckets that require a certain type of AWS access signature, you’ll also want to search the same file for a line that mentions HADOOP_OPTS and make sure it includes both of the -D configuration settings (usually it’s the second one that is missing). If you don’t plan on running the example, you can skip this modification.

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true -Dcom.amazonaws.services.s3.enableV4=true"

2. Configure Hadoop core-site.xml file

The /usr/local/hadoop/etc/hadoop/core-site.xml file holds information on how to access HDFS as well as ways of accessing S3 buckets on AWS from Hadoop. See below for the properties to add to the file above the closing </configuration> XML tag.

Be sure to replace the placeholder items, such as MASTER_DNS, which stands for the DNS of your master instance. If you won’t require access to S3 buckets, you can skip the three S3-related properties. However, if you also want to access AWS S3 buckets via your Hadoop or Spark jobs, you can add your AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY credentials here. But be aware that this is highly insecure because you are listing your credentials in plain text. A better way might be to store credentials in environment variables and then pull them into your code when trying to access S3.

(VERY IMPORTANT WARNING: If you add your keys here, do not add this core-site.xml file to your GitHub or Bitbucket repository. Otherwise, you will compromise your AWS account by revealing these credentials to hackers and the public in general.)

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://MASTER_DNS:9000</value>
</property>
<property>
  <name>fs.s3.impl</name>
  <value>org.apache.hadoop.fs.s3a.S3AFileSystem</value>
</property>
<property>
  <name>fs.s3a.access.key</name>
  <value>AWS_ACCESS_KEY_ID</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>AWS_SECRET_ACCESS_KEY</value>
</property>
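The MASTER_DNS placeholder appears in several configuration files, so instead of editing each value by hand you could substitute it with sed. The following is a minimal sketch, not part of Hadoop itself; fill_master_dns is a hypothetical helper name, and it assumes the DNS name contains no / or & characters (which is true for ordinary host names).

```shell
# Replace every MASTER_DNS placeholder in the text read from stdin.
# fill_master_dns is a hypothetical helper; $1 is the master's DNS name,
# assumed to contain no "/" or "&" characters.
fill_master_dns() {
    sed "s/MASTER_DNS/$1/g"
}

# Example on a single line of configuration.
echo '<value>hdfs://MASTER_DNS:9000</value>' | fill_master_dns master.example.com
```

Here master.example.com is a made-up host name. In practice you would feed the function a file that still contains the placeholders and redirect the output to the real configuration file.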

3. Configure Hadoop yarn-site.xml file

Edit the /usr/local/hadoop/etc/hadoop/yarn-site.xml file and add the following lines above the closing </configuration> XML tag. Be sure to change the MASTER_DNS to the DNS of your master instance.

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
  <value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>
<property>
  <name>yarn.resourcemanager.resource-tracker.address</name>
  <value>MASTER_DNS:8025</value>
</property>
<property>
  <name>yarn.resourcemanager.scheduler.address</name>
  <value>MASTER_DNS:8030</value>
</property>
<property>
  <name>yarn.resourcemanager.address</name>
  <value>MASTER_DNS:8050</value>
</property>

4. Configure Hadoop mapred-site.xml file

Finally, copy /usr/local/hadoop/etc/hadoop/mapred-site.xml.template to /usr/local/hadoop/etc/hadoop/mapred-site.xml and add the following lines above the closing </configuration> XML tag. The expanded classpath lets your jobs load additional Hadoop libraries, such as those needed to connect to AWS.

Be sure to change the MASTER_DNS to the DNS of your master instance.

<property>
  <name>mapreduce.jobtracker.address</name>
  <value>MASTER_DNS:54311</value>
</property>
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
<property>
  <name>mapreduce.application.classpath</name>
  <value>/usr/local/hadoop/share/hadoop/mapreduce/*,/usr/local/hadoop/share/hadoop/mapreduce/lib/*,/usr/local/hadoop/share/hadoop/common/*,/usr/local/hadoop/share/hadoop/common/lib/*,/usr/local/hadoop/share/hadoop/tools/lib/*</value>
</property>

5. Configure Hadoop hdfs-site.xml file

Edit /usr/local/hadoop/etc/hadoop/hdfs-site.xml and add the following lines above the closing </configuration> tag to set the replication factor and identify where in the normal file system the HDFS metadata will be saved.

<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///usr/local/hadoop/hadoop_data/hdfs/namenode</value>
</property>

6. Let your master and workers know about each other

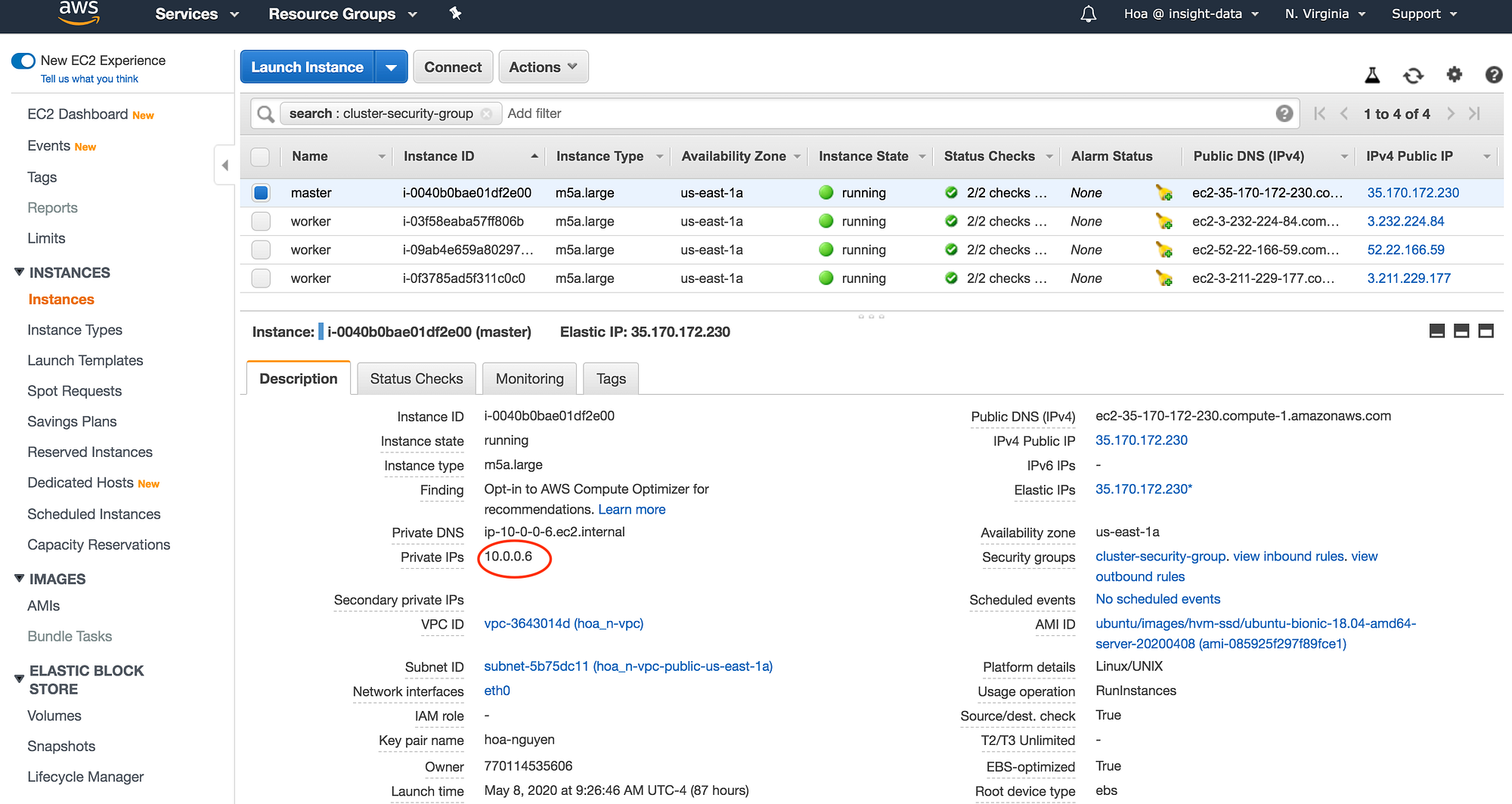

Prior to this section, we’ve been mainly referring to the master instance’s public DNS, primarily because, for processes that need to interact with your Hadoop daemons and other services, they’ll communicate with your master instance via its public DNS. However, for communication between instances in your cluster, Hadoop will rely on private IP addresses. See below for where you might find the private IP address for your instances on the AWS web console:

Create a /usr/local/hadoop/etc/hadoop/masters file and add the private IP address of the master instance.

# Example master file

10.0.0.8

Edit the /usr/local/hadoop/etc/hadoop/slaves file, remove localhost and add each worker instance’s private IP address, one per line.

# Example slaves file

10.0.0.14

10.0.0.5

10.0.0.9
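If you would rather generate these two files than type them, the following sketch writes both from a list of private IP addresses. write_cluster_files is a hypothetical helper name; it takes the configuration directory first, then the master’s private IP, then the workers’ private IPs. It overwrites any existing masters and slaves files in that directory, which also takes care of removing localhost.

```shell
# Write the masters and slaves files into a Hadoop config directory.
# write_cluster_files is a hypothetical helper: $1 = config dir,
# $2 = master private IP, remaining arguments = worker private IPs.
write_cluster_files() {
    conf_dir="$1"
    master_ip="$2"
    shift 2
    printf '%s\n' "$master_ip" > "$conf_dir/masters"
    # printf repeats the format for each argument: one worker IP per line
    printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

With the example addresses above, the call would be write_cluster_files /usr/local/hadoop/etc/hadoop 10.0.0.8 10.0.0.14 10.0.0.5 10.0.0.9.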

7. Create namenode and datanode directories on your local filesystem

HDFS requires a namenode directory to be created, and it needs to be owned by the Hadoop user, which in this case is ubuntu and not root.

$ sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/namenode

$ sudo chown -R ubuntu /usr/local/hadoop

Create the datanode directory and make sure that it’s not owned by root.

$ sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/datanode

$ sudo chown -R ubuntu /usr/local/hadoop

Propagate configuration files to your workers

Now that you’ve edited the configuration files on your master instance, you’ll want to make sure all of your workers get the same changes. Keep a list of the public DNS names (or private IP addresses) of all of your workers handy and, while logged on to your master instance, rsync the Hadoop directory to each of your workers.

$ rsync -r /usr/local/hadoop/ ubuntu@WORKER1_DNS:/usr/local/hadoop

$ rsync -r /usr/local/hadoop/ ubuntu@WORKER2_DNS:/usr/local/hadoop

$ rsync -r /usr/local/hadoop/ ubuntu@WORKER3_DNS:/usr/local/hadoop
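The three commands above differ only in the worker name, so they can be expressed as a loop. The sketch below is a dry run by default: it prints each rsync command instead of executing it. sync_to_workers and the RUN variable are hypothetical names introduced for this illustration.

```shell
# Print (or run) one rsync command per worker.
# sync_to_workers is a hypothetical helper. RUN defaults to "echo", so the
# commands are only printed; set RUN="" to execute them for real.
sync_to_workers() {
    for worker in "$@"; do
        ${RUN-echo} rsync -r /usr/local/hadoop/ "ubuntu@${worker}:/usr/local/hadoop"
    done
}

sync_to_workers WORKER1_DNS WORKER2_DNS WORKER3_DNS
```

Printing first lets you confirm the host names before anything is copied. Once the output looks right, running RUN="" sync_to_workers followed by your real worker names performs the actual sync.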

(Alternatively, you can manually ssh into each of your workers and repeat steps 1–7 of the Configure Hadoop section above. Yes, rsync is our friend.)

Format HDFS and start services

On your master instance, issue the command to format HDFS.

$ hdfs namenode -format

- Start HDFS and Job Tracker

Now, you can start firing off scripts to start the Hadoop daemons and Yarn services. To begin with, start the Distributed Filesystem:

$ /usr/local/hadoop/sbin/start-dfs.sh

You may get Are you sure you want to continue connecting (yes/no)? messages. Go ahead and answer yes. At this point, you should see a message similar to the following:

Starting namenodes on [MASTER DNS]

MASTER DNS: starting namenode, logging to /usr/local/hadoop/logs/hadoop-ubuntu-namenode-MASTER’S PRIVATE IP NAME.out

WORKER 1'S PRIVATE IP: starting datanode, logging to /usr/local/hadoop/logs/hadoop-ubuntu-datanode-WORKER 1’S PRIVATE IP NAME.out

WORKER 2’S PRIVATE IP: starting datanode, logging to /usr/local/hadoop/logs/hadoop-ubuntu-datanode-WORKER 2’S PRIVATE IP NAME.out

WORKER 3’S PRIVATE IP: starting datanode, logging to /usr/local/hadoop/logs/hadoop-ubuntu-datanode-WORKER 3’S PRIVATE IP NAME.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-ubuntu-secondarynamenode-MASTER'S PRIVATE IP NAME.out

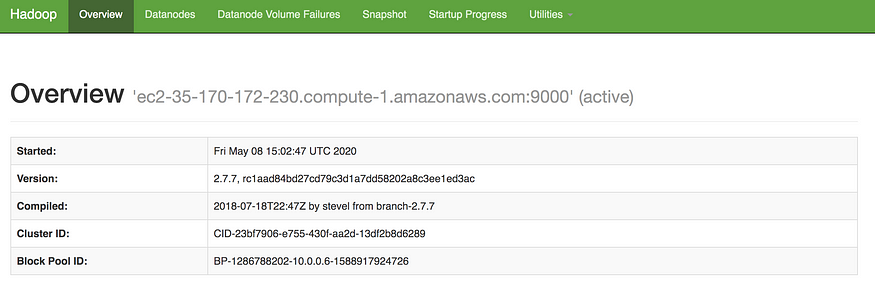

Congratulations, you’ve successfully started HDFS. If you open your web browser and navigate to http://MASTER DNS:50070 (replacing MASTER DNS with the DNS of your own master instance) you should see something like the following (if you do not, there is most likely an issue with your AWS security group settings and/or it is not providing access to your laptop’s IP).

Next, start YARN, which in Hadoop 2 takes over the resource management role of the older Job Tracker.

$ /usr/local/hadoop/sbin/start-yarn.sh

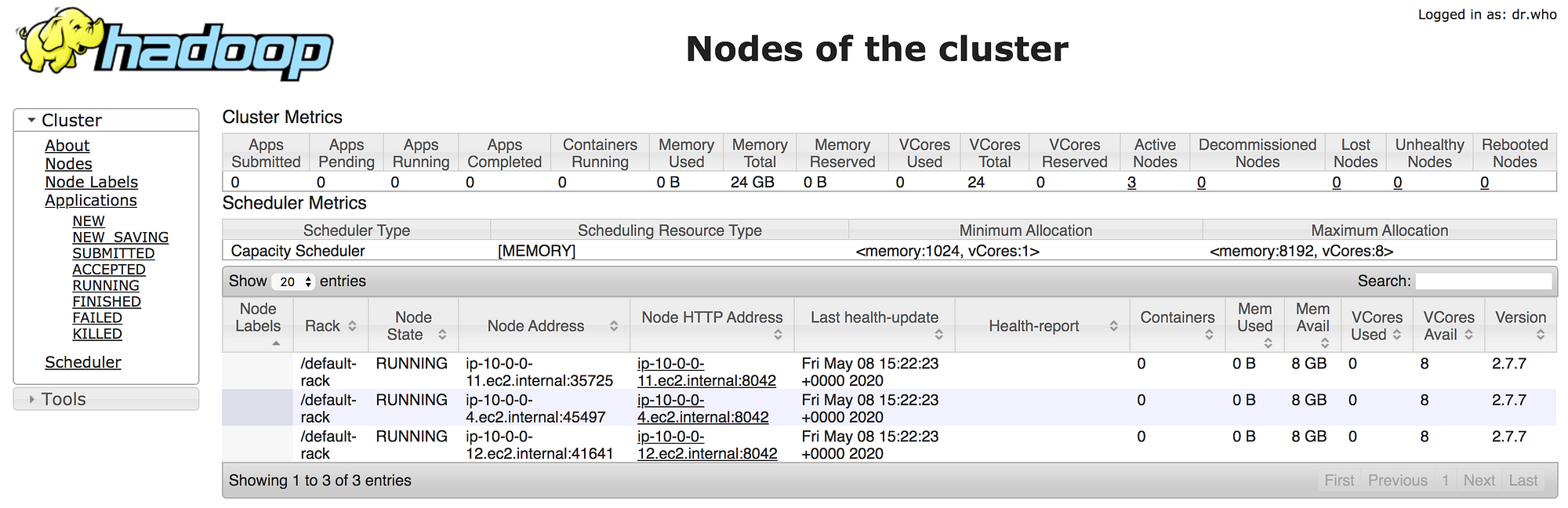

If you navigate to your web browser and type http://MASTER DNS:8088/cluster/nodes replacing MASTER DNS with the DNS of your master instance. You should see a similar image as this, showing three worker nodes:
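Besides the web pages, you can check the daemons from the command line with jps, the JDK tool that lists running Java processes. The sketch below reads jps output and reports which expected daemons are absent. missing_daemons is a hypothetical helper name; the daemon names are the standard Hadoop 2 process names.

```shell
# Read `jps` output on stdin and print each expected daemon that is absent.
# missing_daemons is a hypothetical helper; pass the expected names as arguments.
missing_daemons() {
    running=$(awk '{print $2}')   # second column of jps output is the process name
    for daemon in "$@"; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode
        printf '%s\n' "$running" | grep -qx "$daemon" || echo "$daemon"
    done
}
```

On the master you might run jps | missing_daemons NameNode SecondaryNameNode ResourceManager, and on each worker jps | missing_daemons DataNode NodeManager. No output means every expected daemon was found.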

Run an example

Now that you’ve gotten your Hadoop cluster up and running, let’s check that the installation went smoothly by going through an example that will copy a file from an AWS S3 bucket to your cluster’s HDFS and run a word count map-reduce job on the file.

If you were to use another batch processing framework such as Spark, you probably could read the file directly from the S3 bucket, but because we want to double check that our Hadoop and HDFS installation work, we’ll use Hadoop’s distcp command (or distributed copy command that uses Hadoop’s map-reduce under the covers) to copy the file from S3 to HDFS in this example.

First, let’s create a new directory on our cluster’s HDFS to hold the results of this test. You can do this on any of the machines in your cluster.

$ hdfs dfs -mkdir /my_test

The S3 bucket that we’re going to use is the recently published covid19-lake, which is publicly available and accessible in the us-east-2 region. Because this is a recently created bucket on AWS, accessing it relies on a newer authentication method referred to as V4, which requires the changes we made to our hadoop-env.sh configuration file earlier (see above section). It also requires passing additional arguments to hadoop distcp in order to enable V4, specifically,

-Dmapreduce.map.java.opts="-Dcom.amazonaws.services.s3.enableV4=true"

and

-Dmapreduce.reduce.java.opts="-Dcom.amazonaws.services.s3.enableV4=true"

Put together, the following command will copy the contents of the states_abv.csv file on the S3 covid19-lake bucket in the static-datasets/csv/state-abv directory to your cluster’s HDFS in the /my_test directory:

$ hadoop distcp -Dmapreduce.map.java.opts="-Dcom.amazonaws.services.s3.enableV4=true" -Dmapreduce.reduce.java.opts="-Dcom.amazonaws.services.s3.enableV4=true" -Dfs.s3a.endpoint=s3.us-east-2.amazonaws.com s3a://covid19-lake/static-datasets/csv/state-abv/states_abv.csv hdfs:///my_test/
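The distcp command above is long, so it can be easier to review when its parts are named. The sketch below assembles the same command from variables and prints it rather than running it. The variable and function names are illustrative only; the option values are the ones used above.

```shell
# Assemble the distcp command from named parts and print it for review.
# The variable names are illustrative; the option values are the ones used above.
V4_OPT="-Dcom.amazonaws.services.s3.enableV4=true"
S3_ENDPOINT="s3.us-east-2.amazonaws.com"
SRC="s3a://covid19-lake/static-datasets/csv/state-abv/states_abv.csv"
DEST="hdfs:///my_test/"

distcp_command() {
    # printf repeats the format for each argument, separating them with spaces
    printf '%s ' hadoop distcp \
        "-Dmapreduce.map.java.opts=\"$V4_OPT\"" \
        "-Dmapreduce.reduce.java.opts=\"$V4_OPT\"" \
        "-Dfs.s3a.endpoint=$S3_ENDPOINT" \
        "$SRC"
    printf '%s\n' "$DEST"
}

distcp_command
```

To copy a different file or use a bucket in another region, you would change only SRC, DEST or S3_ENDPOINT and leave the V4 options untouched.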

After you execute this command, you can double check that the file exists in your HDFS and look at its contents with the following two commands:

$ hdfs dfs -ls /my_test/states_abv.csv
-rw-r--r-- 3 ubuntu supergroup 665 2020-05-12 16:55 /my_test/states_abv.csv

$ hdfs dfs -cat /my_test/states_abv.csv
State,Abbreviation
Alabama,AL
Alaska,AK
...

Now, let’s run a map-reduce job that will count the words in that state abbreviation file and put it into another file on HDFS:

$ hadoop jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples*.jar wordcount /my_test/states_abv.csv /my_test/states_abv_output

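The above command should create a new directory on HDFS at /my_test/states_abv_output that will hold the results of the word count job in a file named /my_test/states_abv_output/part-r-00000. If you then print the contents of that file, each line should hold a token (for a single-word state, the state name and abbreviation joined by a comma) and the number 1, because each state is only listed once. Note that the example job splits its input on whitespace, so a state name that contains a space would be split into separate tokens.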

$ hdfs dfs -ls /my_test/states_abv_output
Found 2 items
-rw-r--r-- 3 ubuntu supergroup 0 2020-05-12 02:16 /my_test/states_abv_output/_SUCCESS
-rw-r--r-- 3 ubuntu supergroup 759 2020-05-12 02:16 /my_test/states_abv_output/part-r-00000

$ hdfs dfs -cat /my_test/states_abv_output/part-r-00000
Alabama,AL 1
Alaska,AK 1
Arizona,AZ 1
Arkansas,AR 1
...
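To see what the job is doing conceptually, you can imitate it on a single machine with standard Unix tools. This is only a local stand-in for the idea, not how Hadoop runs the job: splitting the input into tokens plays the role of the map step, and grouping identical tokens and counting them plays the role of the reduce step. local_wordcount is a hypothetical helper name, and the sample input lines are made up for illustration.

```shell
# Local stand-in for the word count job: split stdin on whitespace (the "map"
# step), then group identical tokens and count them (the "reduce" step).
# local_wordcount is a hypothetical helper, not a Hadoop command.
local_wordcount() {
    tr -s ' \t' '\n' | LC_ALL=C sort | uniq -c | awk '{print $2 "\t" $1}'
}

# Made-up sample input in the same shape as the state abbreviation file.
printf 'State,Abbreviation\nAlabama,AL\nNew York,NY\nNew Jersey,NJ\n' | local_wordcount
```

In this sample, Alabama,AL is a single token with a count of 1, while New is counted twice because the space inside each two-word state name splits it into separate tokens.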

Congratulations, you’ve successfully installed Hadoop, pulled a file from an S3 bucket and run a Hadoop map-reduce job to count the words in the file.

Obviously, there’s no good reason to do a word count on a lookup file, but it’s shown here to give you an idea of how to interact with Hadoop and HDFS. From here, you can go on to explore tools such as Presto, which is designed to work with HDFS, or Apache Spark, which uses many similar map-reduce concepts from Hadoop.
