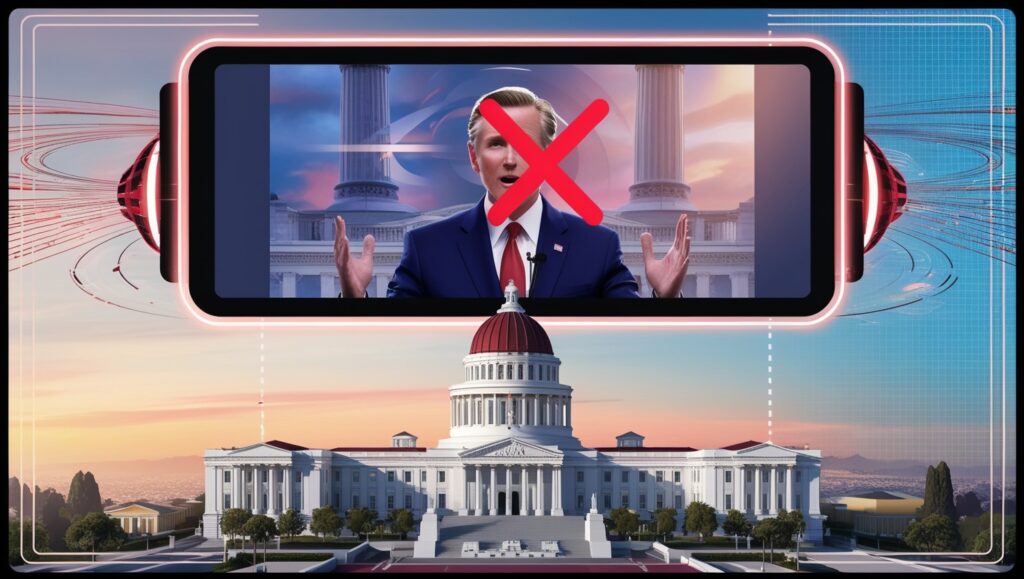

A number of artificial intelligence bills were signed into law by California Governor Gavin Newsom (D) on Tuesday. The bills aim to prevent the use of deepfakes during elections and safeguard celebrities from having their likenesses replicated by AI without their permission.

Deepfakes are a growing source of concern for the 2024 election, and last year’s historic actors strike was largely sparked by worries about Hollywood’s use of artificial intelligence. According to Newsom’s office, California is home to “32 of the world’s 50 leading AI companies, high-impact research and education institutions,” which puts pressure on his administration to strike a balance between the interests of the general public and the aspirations of a quickly developing industry.

Democracy depends on maintaining the integrity of elections, so it is imperative to make sure AI isn’t used to spread false information and erode public confidence, particularly in today’s volatile political environment, Newsom said in a statement on Tuesday.

One of the measures, A.B. 2655, requires large online platforms to remove or label deceptive election-related content that has been digitally altered or created, during specified periods before and after an election. Newsom also signed A.B. 2355, which requires election advertisements to disclose whether they use AI-generated or significantly altered content, and A.B. 2839, which lengthens the period during which individuals and organizations are prohibited from knowingly distributing election materials that contain deceptive AI-generated or manipulated content.

After Elon Musk shared an altered version of a Kamala Harris campaign ad in July, Newsom took to social media to declare that “manipulating a voice in an ‘ad’ like this one should be illegal” and pledged to sign a bill “to make sure it is.”

It is still unclear, however, whether Newsom will sign or veto S.B. 1047, which would hold AI companies liable if their technology is misused and which much of the tech industry vehemently opposes.

Venture capitalists and start-up founders say that holding developers liable for unintended uses of the AI technology they create would inhibit innovation. Scott Wiener, the Democratic state senator who authored the bill, says its main goal is to codify the promises AI companies have already made to prevent improper use of their technology.

Two other measures for actors and performers are included in the new laws, which according to Newsom will guarantee the industry’s continued success while bolstering worker protections and regulating the use of their likeness.

A.B. 2602 requires contracts to specify how AI-generated replicas of a performer’s voice or likeness will be used. A.B. 1836 prohibits the commercial use of digital replicas of deceased performers without their estates’ permission.

AI in entertainment is a contentious topic: many celebrities have warned about AI-altered images of themselves circulating online without their permission, while others have agreed to AI re-creations of their performances, such as James Earl Jones’s Darth Vader voice.

The Washington Post reported that last year the actors union SAG-AFTRA negotiated a contract that included protections against artificial intelligence. Among the provisions were requirements that actors give studios “informed consent” and be paid fairly for the creation of digital replicas.

In a statement on Tuesday, union President Fran Drescher commended the bills for building on the AI protections that actors had “fought so hard for last year” and praised Newsom for “recognizing that performers matter, and their contributions have value.”

“No one should live in fear of becoming someone else’s unpaid digital puppet,” stated Duncan Crabtree-Ireland, national executive director of SAG-AFTRA.