In this post we are going to use real machine learning and (behind the scenes) deep learning for Natural Language Processing / Understanding!

In this post we are going to use the Rasa conversational AI solution for both the NLP/NLU engine and the dialogue part.

Rasa is an open-source Python implementation of an NLP engine (intent extraction) and of dialogue management, and all of it runs on your own machine / on premises. No cloud!

The inspiration for this post was the great tutorial video by Justina Petraityte, thanks! I broke it down and used my own, slightly different version on a Mac, so I hope you enjoy it and find it useful.

First, here is a short introduction. (TL;DR: if you are already familiar with chatbot/dialogue architecture, or just want to get down to business, skip to “Build the NLU side”.)

When the chatbot’s input is text, for example “I want to order pizza”, the bot first needs to run an NLP (Natural Language Processing) engine to parse the sentence, that is, to create a structure out of it (or “try to understand it”, if you like).
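To make “create a structure out of it” concrete, here is a toy Python sketch of the kind of result an NLU engine produces. It uses a hypothetical keyword lookup, with no machine learning, and it is not how Rasa works internally. Only the shape of the returned dictionary mirrors the Rasa NLU output shown later in this post: the text, the intent, and the entities with character offsets. The keyword tables are made up for illustration.

```python
# Hypothetical keyword tables, for illustration only (a real NLU engine learns this).
INTENT_KEYWORDS = {
    "greet": {"hello", "hey", "hi"},
    "order_pizza": {"pizza", "order"},
}
ENTITY_VALUES = {
    "large": "size",
    "small": "size",
    "olives": "toppings",
    "cheese": "toppings",
}


def toy_parse(text):
    """Turn raw text into an intent + entities structure using keyword matching."""
    lowered = text.lower()
    words = set(lowered.split())
    # Pick the first intent whose keywords appear in the text (None if nothing matches).
    intent = None
    for name, keywords in INTENT_KEYWORDS.items():
        if keywords & words:
            intent = name
            break
    # Record every known entity value with its start/end character offsets.
    entities = []
    for value, entity in ENTITY_VALUES.items():
        start = lowered.find(value)
        if start != -1:
            entities.append({"start": start, "end": start + len(value),
                             "value": value, "entity": entity})
    return {"text": text, "intent": {"name": intent}, "entities": entities}


print(toy_parse("I want to order large pizza"))
```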

…but what’s next? Let’s assume the bot successfully classifies the sentence to the correct “intent”. In our case, let’s say the intent is order_pizza.

Common sense says that we must get the full name, address, pizza size and topping from the user. What else? For this very purpose we need a “dialogue management” component. In this component we configure the various intents our bot supports. For each intent (in the next part, part 2) we specify which “slots”/“entities” are mandatory and which are just optional. This configuration is often called the “domain definition”.

As you may have noticed in our example, the full name, address, pizza size and topping (slots) are mandatory. By mandatory we mean that our bot keeps interacting with the user until this information is filled in. Only then can it complete the task: ordering the pizza!
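The “keep asking until every mandatory slot is filled” behavior boils down to a simple loop over the required slots. The sketch below is plain Python, not the Rasa Core API (that comes in part 2). The slot names, the prompts and the next_action helper are hypothetical. In a real bot the slots would be filled from the NLU output rather than by hand.

```python
# Hypothetical mandatory slots for the order_pizza intent, in the order we ask for them.
REQUIRED_SLOTS = ["full_name", "address", "size", "toppings"]
PROMPTS = {
    "full_name": "What is your full name?",
    "address": "Where should we deliver the pizza?",
    "size": "Which size would you like?",
    "toppings": "Which toppings would you like?",
}


def next_action(slots):
    """Return the bot's next utterance given the slots collected so far."""
    for slot in REQUIRED_SLOTS:
        if not slots.get(slot):
            # Ask for the first mandatory slot that is still missing.
            return PROMPTS[slot]
    # Every mandatory slot is filled, so the task can be completed.
    return "Thanks {}! Your {} pizza with {} is on its way to {}.".format(
        slots["full_name"], slots["size"], slots["toppings"], slots["address"])


slots = {"size": "large"}  # e.g. extracted from "I want to order large pizza"
print(next_action(slots))  # asks for the full name first
slots.update(full_name="Dana", address="1 Main St", toppings="olives")
print(next_action(slots))  # all slots filled: confirms the order
```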

Build the NLU side

To get the best results, run with Python 3.6.4 (and no later). It is better to work in a virtualenv. If you get errors, try creating the environment with:

pip3 install virtualenv

python3 -m virtualenv <env_name>

source <env_name>/bin/activate

Make sure you run the commands above with python3 pointing to the Python 3.6.4 installation mentioned earlier.
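To double-check which interpreter the activated virtualenv actually uses, you can run a small script like this one inside it. It simply encodes the “3.6.4 and no later” recommendation above. Treating any 3.6.x release up to 3.6.4 as fine is my assumption, and is_recommended_python is an illustrative name.

```python
import sys


def is_recommended_python(version_info=sys.version_info):
    """True for a Python 3.6.x release no later than 3.6.4."""
    major, minor, micro = tuple(version_info)[:3]
    return (major, minor) == (3, 6) and micro <= 4


if __name__ == "__main__":
    print("Running Python", sys.version.split()[0])
    if not is_recommended_python():
        print("Warning: this tutorial recommends Python 3.6.4")
```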

You can either install Rasa NLU from PyPI:

pip3 install rasa_nlu==0.12.3

Or, if you want to be able to debug your code and dive into the implementation, clone the repository:

git clone git@github.com:RasaHQ/rasa_nlu.git

cd rasa_nlu

# go to the relevant release (0.12.3):

git reset --hard d08b5765e92ea27741926b1246e5e22713158987

pip install -r requirements.txt

pip install -e .

Either way, create a working directory in which we will place all the files for this tutorial:

mkdir rasa_demo

cd rasa_demo

vim requirements.txt

If you don’t want to use vim, just create the file requirements.txt and edit it in a text editor (Sublime Text or Notepad).

If you cloned the code, skip to “Configuration”.

In the file “requirements.txt” add the content below:

gevent==1.2.2

klein==17.10.0

hyperlink==17.3.1

boto3==1.5.20

typing==3.6.2

future==0.16.0

six==1.11.0

jsonschema==2.6.0

matplotlib==2.1.0

requests==2.18.4

tqdm==4.19.5

numpy==1.14.0

simplejson==3.13.2

cloudpickle==0.5.2

msgpack-python==0.5.4

Now run (from the directory containing the file):

pip install -r requirements.txt
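If training later fails with import or version errors, it can help to confirm that the pinned versions were really installed. Here is a minimal sketch, assuming requirements.txt sits in the current directory. It uses importlib.metadata on Python 3.8+ and falls back to setuptools’ pkg_resources on older interpreters such as 3.6. Lines without == are ignored, and the helper names are hypothetical.

```python
import os


def parse_pins(text):
    """Parse 'name==version' lines from a requirements file into a dict."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip()] = version.strip()
    return pins


def installed_version(name):
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        from importlib.metadata import version, PackageNotFoundError
    except ImportError:  # Python < 3.8: fall back to setuptools
        import pkg_resources
        try:
            return pkg_resources.get_distribution(name).version
        except pkg_resources.DistributionNotFound:
            return None
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def find_mismatches(pins, get_version=installed_version):
    """List (name, wanted, installed) for every pin that is not satisfied."""
    mismatches = []
    for name, wanted in pins.items():
        found = get_version(name)
        if found != wanted:
            mismatches.append((name, wanted, found))
    return mismatches


if __name__ == "__main__" and os.path.exists("requirements.txt"):
    with open("requirements.txt") as f:
        for name, wanted, found in find_mismatches(parse_pins(f.read())):
            print("{}: wanted {}, found {}".format(name, wanted, found))
```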

Configuration

First, let’s create the configuration file and the training data file.

Let’s start with the configuration file. Create a directory named config:

mkdir config

cd config

vim config.json

If you don’t want to use vim, just create the file config.json and edit it in a text editor (Sublime Text or Notepad).

In this file “config.json” add the content below:

```json
{
  "pipeline": [
    "nlp_spacy",
    "tokenizer_spacy",
    "ner_crf",
    "ner_spacy",
    "intent_featurizer_spacy",
    "intent_classifier_sklearn"
  ],
  "language": "en",
  "path": "./models/nlu",
  "data": "./data/training_data.json"
}
```

If you are using a newer version of Rasa NLU, where configuration is done via YAML, create config/config.yml instead:

```yaml
language: "en"

pipeline:
- name: "nlp_spacy"
  model: "en"
- name: "tokenizer_spacy"
- name: "ner_crf"
- name: "intent_featurizer_spacy"
- name: "intent_classifier_sklearn"
```

Then go back to the parent workspace directory (in our case “rasa_demo”).
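Copying JSON from a web page sometimes turns straight quotes into curly ones, which makes the file invalid. One way to sidestep that is to generate config/config.json from Python with the standard json module. The settings below are the same ones listed in the config.json above. write_config is a hypothetical helper, and you would call it once from the rasa_demo directory.

```python
import json
import os

# Same settings as the config.json shown above.
CONFIG = {
    "pipeline": [
        "nlp_spacy",
        "tokenizer_spacy",
        "ner_crf",
        "ner_spacy",
        "intent_featurizer_spacy",
        "intent_classifier_sklearn",
    ],
    "language": "en",
    "path": "./models/nlu",
    "data": "./data/training_data.json",
}


def write_config(path="config/config.json", config=CONFIG):
    """Write the config as valid JSON, creating the parent directory if needed."""
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    with open(path, "w") as f:
        json.dump(config, f, indent=2)
    # Read the file back to confirm that it parses.
    with open(path) as f:
        return json.load(f)
```

Calling write_config() creates config/config.json and returns the parsed contents as a quick sanity check.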

Training data

Now let’s create the training data: example sentences that we expect our users to say, each labeled with the intent and entities our chatbot should extract from it.

First, let’s create a directory “data” and, in it, a file “training_data.json”.

Run:

cd ../

mkdir data

cd data

vim training_data.json

If you don’t want to use vim, just create the file training_data.json and edit it in a text editor (Sublime Text or Notepad)

with this content:

```json
{
  "rasa_nlu_data": {
    "common_examples": [
      {
        "text": "hello",
        "intent": "greet",
        "entities": []
      },
      {
        "text": "I want to order large pizza",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 16,
            "end": 21,
            "value": "large",
            "entity": "size"
          }
        ]
      },
      {
        "text": "I want to order pizza with olives",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 27,
            "end": 33,
            "value": "olives",
            "entity": "toppings"
          }
        ]
      },
      {
        "text": "hey",
        "intent": "greet",
        "entities": []
      },
      {
        "text": "large",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 0,
            "end": 5,
            "value": "large",
            "entity": "size"
          }
        ]
      },
      {
        "text": "small",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 0,
            "end": 5,
            "value": "small",
            "entity": "size"
          }
        ]
      },
      {
        "text": "olives",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 0,
            "end": 6,
            "value": "olives",
            "entity": "toppings"
          }
        ]
      },
      {
        "text": "cheese",
        "intent": "order_pizza",
        "entities": [
          {
            "start": 0,
            "end": 6,
            "value": "cheese",
            "entity": "toppings"
          }
        ]
      }
    ]
  }
}
```

There is a great tool (rasa_nlu_trainer) you can use to add new examples/Intents/entities.

To install it, run in terminal:

npm i -g rasa-nlu-trainer

(You’ll need Node.js and npm for this.)

If you don’t have Node.js and npm yet, install them first (npm ships with Node.js).

Now launch the trainer:

rasa-nlu-trainer -v <path to the training data file>

In our example the file is under the data directory:

rasa-nlu-trainer -v data/training_data.json

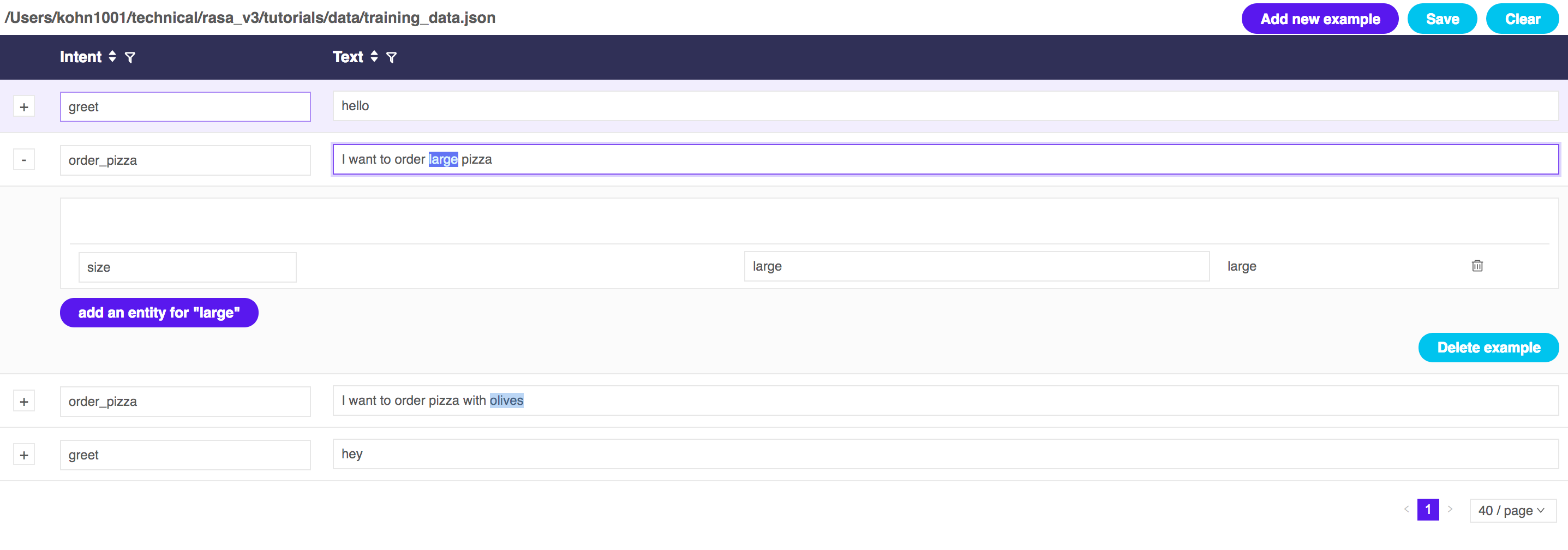

Here is a screenshot of the trainer:

In the screenshot you can see the intent, “order_pizza”, and the user input text, “I want to order large pizza”. I have also selected the word “large”, which gives me the option to add it as an entity, “size”, whose value in this example is “large”.
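The trainer computes the start/end character offsets for you. If you prefer to edit training_data.json by hand, a small helper like the hypothetical make_example below can compute them. That way text[start:end] always equals the entity value (end is exclusive, as in Python slicing). The helper only looks up the first occurrence of each value in the text.

```python
import json


def make_example(text, intent, entities=None):
    """Build a common_examples entry; `entities` maps a substring of text to its entity name."""
    example = {"text": text, "intent": intent, "entities": []}
    for value, entity in (entities or {}).items():
        start = text.find(value)  # first occurrence only
        if start == -1:
            raise ValueError("{!r} does not occur in {!r}".format(value, text))
        example["entities"].append(
            {"start": start, "end": start + len(value), "value": value, "entity": entity})
    return example


print(json.dumps(make_example("I want to order pizza with olives", "order_pizza",
                              {"olives": "toppings"}), indent=2))
```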

In this example we have only a few examples per intent (greet has just 2). By the way, 2 is the minimum you must have for each intent; otherwise you’ll get an error at training time.
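Two common mistakes are easy to catch before training with a quick check: too few examples for an intent, and entity offsets that don’t match the text. This is a hypothetical helper, not part of Rasa. It assumes the JSON format shown above and the two-examples minimum mentioned here. If you use entity synonyms, where the value intentionally differs from the marked text, this check will flag them too.

```python
import json
import os
from collections import Counter


def check_training_data(data, min_examples=2):
    """Return a list of human-readable problems found in Rasa NLU JSON training data."""
    examples = data["rasa_nlu_data"]["common_examples"]
    problems = []
    # Every intent needs at least `min_examples` examples.
    counts = Counter(ex["intent"] for ex in examples)
    for intent, n in sorted(counts.items()):
        if n < min_examples:
            problems.append("intent {!r} has only {} example(s)".format(intent, n))
    # text[start:end] must reproduce the entity value.
    for ex in examples:
        for ent in ex.get("entities", []):
            span = ex["text"][ent["start"]:ent["end"]]
            if span != ent["value"]:
                problems.append("{!r}: offsets {}-{} give {!r}, expected {!r}".format(
                    ex["text"], ent["start"], ent["end"], span, ent["value"]))
    return problems


if __name__ == "__main__":
    path = "data/training_data.json"
    if os.path.exists(path):
        with open(path) as f:
            for problem in check_training_data(json.load(f)) or ["no problems found"]:
                print(problem)
```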

Machine Learning Training

Now we are ready to actually train our Machine Learning NLU model.

Create a file: “nlu_model.py” in the parent workspace directory with this code:

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
from __future__ import unicode_literals

from rasa_nlu.converters import load_data
from rasa_nlu.config import RasaNLUConfig
from rasa_nlu.model import Trainer, Interpreter


def train(data, config, model_dir):
    # Load the labeled examples and the pipeline configuration.
    training_data = load_data(data)
    configuration = RasaNLUConfig(config)
    # Train the pipeline and save it under the fixed name 'chat' so run() can find it.
    trainer = Trainer(configuration)
    trainer.train(training_data)
    model_directory = trainer.persist(model_dir, fixed_model_name='chat')
    return model_directory


def run():
    # Load the persisted model and parse a test sentence.
    interpreter = Interpreter.load('./models/nlu/default/chat')
    print(interpreter.parse('I want to order pizza'))
    # print(interpreter.parse(u'What is the review for the movie Die Hard?'))


if __name__ == '__main__':
    train('./data/training_data.json', './config/config.json', './models/nlu')
    # run()
```

If you are using a newer version of Rasa NLU (no rasa_nlu.converters module, configuration via YAML), use this nlu_model.py instead:

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function
from __future__ import unicode_literals

from rasa_nlu import config
from rasa_nlu.model import Trainer, Interpreter
from rasa_nlu.training_data import load_data


def train(data, config_file, model_dir):
    # Load the labeled examples and the YAML pipeline configuration.
    training_data = load_data(data)
    configuration = config.load(config_file)
    # Train the pipeline and save it under the fixed name 'chat' so run() can find it.
    trainer = Trainer(configuration)
    trainer.train(training_data)
    model_directory = trainer.persist(model_dir, fixed_model_name='chat')
    return model_directory


def run():
    # Load the persisted model and parse a test sentence.
    interpreter = Interpreter.load('./models/nlu/default/chat')
    print(interpreter.parse('I want to order pizza'))
    # print(interpreter.parse(u'What is the review for the movie Die Hard?'))


if __name__ == '__main__':
    train('./data/training_data.json', './config/config.yml', './models/nlu')
    # run()
```

Now run (make sure that you are in the parent workspace directory):

python nlu_model.py

Notice that in the code above the call to run() is commented out.

Now uncomment it and comment out the call to train().
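Commenting and uncommenting works, but you could also pick the step from the command line. Here is a sketch of an argument parser for nlu_model.py, assuming the train() and run() functions defined there. The train/run sub-command names and the --config option are my own choice, not something Rasa provides.

```python
import argparse


def parse_args(argv=None):
    """Parse which step of nlu_model.py to execute: 'train' or 'run'."""
    parser = argparse.ArgumentParser(description="Train or query the pizza NLU model")
    parser.add_argument("command", choices=["train", "run"],
                        help="'train' builds the model, 'run' parses a test sentence")
    parser.add_argument("--config", default="./config/config.yml",
                        help="pipeline configuration file (used by 'train')")
    return parser.parse_args(argv)


# In nlu_model.py, the body of the `if __name__ == '__main__':` block would become:
#
#     args = parse_args()
#     if args.command == "train":
#         train('./data/training_data.json', args.config, './models/nlu')
#     else:
#         run()
```

With this in place, the two runs become python nlu_model.py train and python nlu_model.py run.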

Run again:

python nlu_model.py

You should see output like this (the exact confidence values may differ):

{u'entities': [], u'intent': {u'confidence': 0.78863250761506243, u'name': u'order_pizza'}, 'text': u'I want to order pizza', u'intent_ranking': [{u'confidence': 0.78863250761506243, u'name': u'order_pizza'}, {u'confidence': 0.21136749238493763, u'name': u'greet'}]}

As you can see, our model has successfully classified the text as the intent “order_pizza”, with a confidence of 0.788. To get a higher confidence, add more examples to the training data.
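Once your bot calls the interpreter, you will usually want to act on the predicted intent only when the model is reasonably sure. Here is a minimal sketch, assuming the dictionary shape printed above, written as a Python 3 dict. The 0.5 threshold and the “fallback” intent name are arbitrary choices for illustration, not Rasa defaults.

```python
FALLBACK_INTENT = "fallback"  # hypothetical name for "I didn't understand"


def pick_intent(parse_result, threshold=0.5):
    """Return the predicted intent name, or FALLBACK_INTENT if confidence is too low."""
    intent = parse_result.get("intent") or {}
    if intent.get("name") and intent.get("confidence", 0.0) >= threshold:
        return intent["name"]
    return FALLBACK_INTENT


# The output shown above, as a Python 3 dict.
result = {
    "text": "I want to order pizza",
    "entities": [],
    "intent": {"name": "order_pizza", "confidence": 0.78863250761506243},
    "intent_ranking": [
        {"name": "order_pizza", "confidence": 0.78863250761506243},
        {"name": "greet", "confidence": 0.21136749238493763},
    ],
}
print(pick_intent(result))                 # order_pizza
print(pick_intent(result, threshold=0.9))  # fallback
```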

Congratulations! We’ve just built an interpreter for our chatbot, so we are halfway there 🙂

You can take a break and get to the second part later (and don’t forget to clap 🙂).

Now you are ready to build the dialogue side.

Troubleshooting

If you’re experiencing issues, clone the latest version with git and, from inside the cloned rasa_nlu directory, install all the requirements like this.

Run:

virtualenv -p python3 <env_name>

(or: python3 -m virtualenv <env_name>)

source <env_name>/bin/activate

pip3 install -r alt_requirements/requirements_tensorflow_sklearn.txt

pip3 install -e .

And now you’re ready:

1. Just make sure you have a training data file (similar to the one above) and a config file like this config/config.yml:

```yaml
pipeline:
- name: "tokenizer_whitespace"
- name: "ner_crf"
- name: "intent_featurizer_count_vectors"
- name: "intent_classifier_tensorflow_embedding"
  batch_size: [64, 256]
  epochs: 1500
  embed_dim: 20
```

2. Now train:

```shell
python3 rasa_nlu/train.py \
    --config `pwd`/config/config.yml \
    --data `pwd`/data/training_data.json \
    --path `pwd`/projects/
```

3. Now run the server:

```shell
python3 rasa_nlu/server.py --path `pwd`/projects --config config/config.yml
```

Make sure the config.yml file is in the same place as in the training step (config/config.yml).
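Once the server is up, you can query it over HTTP. The sketch below uses only the Python standard library and rests on several assumptions: the server listens on its default port 5000, it exposes a /parse endpoint that accepts a JSON body with q (the text) and project, and the model was trained into the project named default because no project name was given above. Check these against the documentation of the Rasa NLU version you installed.

```python
import json
import urllib.request

# Assumption: the server started above listens on its default port, 5000.
SERVER_URL = "http://localhost:5000/parse"


def build_parse_request(text, project="default", url=SERVER_URL):
    """Build a POST request asking the NLU server to parse `text`."""
    body = json.dumps({"q": text, "project": project}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST")


def parse(text, project="default", url=SERVER_URL, timeout=10):
    """Send the request and decode the JSON response (intent, entities, ...)."""
    request = build_parse_request(text, project, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example (requires the server to be running):
# print(parse("I want to order large pizza"))
```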

If you have issues with Python packages, create a fresh virtualenv and reinstall the requirements with the same commands as above.

More issues?

If you get the error below when trying to run the NLU script (python nlu_model.py):

“OSError: Can’t find model ‘en’”

it means that you haven’t downloaded the spaCy “en” language model.

Run:

python3 -m spacy download en

Or:

python -m spacy download en
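You can also check for the model from Python before training. This sketch relies only on spacy.load raising OSError for a missing model, which is the error quoted above. spacy_model_available is an illustrative name.

```python
def spacy_model_available(name="en"):
    """Return True if spaCy is installed and can load the model `name`."""
    try:
        import spacy
    except ImportError:
        return False
    try:
        spacy.load(name)
    except OSError:  # spaCy reports a missing model as OSError ("Can't find model ...")
        return False
    return True


if __name__ == "__main__":
    if spacy_model_available("en"):
        print("spaCy model 'en' is available")
    else:
        print("Model missing: run python3 -m spacy download en")
```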


This article has been republished from the source link without modifications to the text. Only the headline has been changed.