When it comes to troubleshooting application performance, metrics are no longer enough. To fully understand the environment you need logs and traces. Today, we’re going to focus on your Java applications.

Logging in Java could be done just by easily writing data to a file, however, this is not the simplest or most convenient way of logging. There are frameworks for Java that provide the object, methods, and unified configuration methods that help setting up logging, store logs, and in some cases even ship them to a log centralization solution. On top of that, there are also abstraction layers that enable easy switching of the underlying framework without the need of changing the implementation. This can come in handy if you ever need to replace your logging library for whatever reason – for example performance, configuration, or even simplicity.

Sounds complicated? It doesn’t have to be.

In this blog post we will focus on how to properly set up logging for your code to avoid all those mistakes that we already did. We will cover:

- Logging abstraction layers for Java

- Out of the box Java logging capabilities

- Java logging libraries, their configuration, and usage

- Logging the important information

- Log centralization solutions.

Let’s get started!

Logging Frameworks: Choosing the Logging Solution for Your Java Application

The best logging solution for your Java application is….well, it’s complicated. You have to think and look at your current environment and organization needs. If you already have a framework of choice that the majority of your applications use – go for that. It is very likely that you will already have an established format for your logs. That also means that you may already have an easy way to ship your logs to a log centralization solution of your choice, and you just need to follow the existing pattern.

However, if your organization does not have any kind of common logging framework, go and see what kind of logging is used in the application you are using. Are you using Elasticsearch? It uses Log4j 2. Majority of the third party applications also use Log4j 2? If so, consider using it. Why? Because you will probably want to have your logs in a single place and it will be easier for you to just work with a single configuration or pattern. However, there is a pitfall here. Don’t go that route if it means using an outdated technology when a newer and mature alternative already exists.

Finally, if you are selecting a new logging framework I would suggest using an abstraction layer and a logging framework. Such an approach gives you the flexibility to switch to a different logging framework when needed and, what’s most important, without needing to change the code. You will only have to update the dependencies and configuration, the rest will stay the same.

In most cases, the SLF4J with bindings to the logging framework of your choice will be a good idea. The Log4j 2 is the go-to framework for many projects out there both open and closed source ones.

The Abstraction Layers

Of course, your application can use the basic logging API provided out of the box by Java via the java.util.logging package. There is nothing wrong with that, but note that this will limit you can do with your logs. Certain libraries provide easy to configure formatters, out of the box industry standard destinations and top-notch performance. If you wish to use one of those frameworks and you would also like to be able to switch the framework in the future you should look at the abstraction layer on top of the logging APIs.

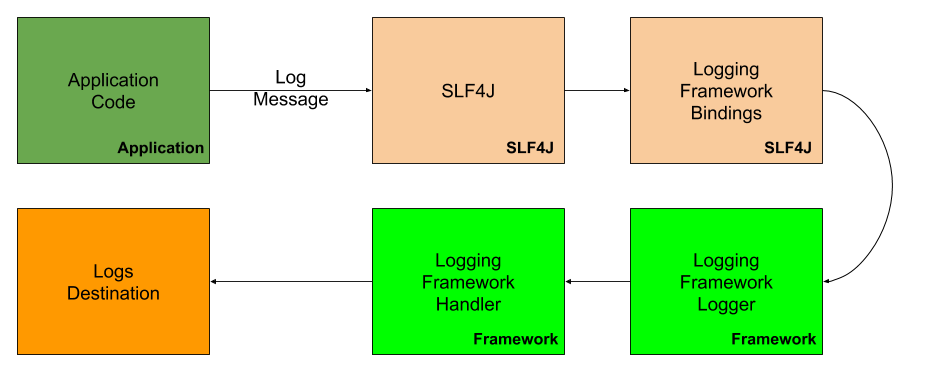

SLF4J – The Simple Logging Facade for Java is one such abstraction layer. It provides bindings for common logging frameworks such as Log4j, Logback, and the out of the box java.util.logging package. You can imagine the process of writing the log message in the following, simplified way:

But how would that look from the code perspective? Well, that’s a very good question. Let’s start by looking at the out of the box java.util.logging code. For example, if we would like to just start our application and print something to the log it would look as follows:

package com.sematext.blog.logging;

import java.util.logging.Level;

import java.util.logging.Logger;

public class JavaUtilsLogging {

private static Logger LOGGER = Logger.getLogger(JavaUtilsLogging.class.getName());

public static void main(String[] args) {

LOGGER.log(Level.INFO, "Hello world!");

}

}

You can see that we initialize the static Logger class by using the class name. That way we can clearly identify where the log message comes from and we can reuse a single Logger for all the log messages that are generated by a given class.

The output of the execution of the above code will be as follows:

Jun 26, 2020 2:58:40 PM com.sematext.blog.logging.JavaUtilsLogging

main INFO: Hello world!

Now let’s do the same using the SLF4J abstraction layer:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class JavaSLF4JLogging {

private static Logger LOGGER = LoggerFactory.getLogger(JavaSLF4JLogging.class);

public static void main(String[] args) {

LOGGER.info("Hello world!");

}

}

This time the output is slightly different and looks like this:

[main] INFO com.sematext.blog.logging.JavaSLF4JLogging - Hello world!This time, things are a bit different. We use the LoggerFactory class to retrieve the logger for our class and we use a dedicated info method of the Logger object to write the log message using the INFO level.

Starting with SLF4J 2.0 the fluent logging API was introduced, but at the time of writing of this blog it is still in alpha and is considered experimental, so we will skip it for now as the API may change.

So to finish up with the abstraction layer – it gives us a few major advantages compared to using the java.util.logging:

- A common API for all your application logging

- An easy way to use the desired logging framework

- An easy way to exchange the logging framework and not having to go through the whole code when wanting to switch

What Are Log Levels?

Before continuing with the Java logging API we should talk about log levels and how to use them to properly categorize our log messages. Java log level is a way to specify how important a given log message is.

For example, the following log levels are sorted from least to most important with some explanation on how I see them:

- TRACE – Very fine-grained information only used in a rare case where you need the full visibility of what is happening. In most cases, the TRACE level will be very verbose, but you can also expect a lot of information about the application. Use for annotating the steps in an algorithm that are not relevant in everyday use.

- DEBUG – Less granular than TRACE level, but still more granular than you should need in your normal, everyday use. The DEBUG level should be used for information that can be useful for troubleshooting and is not needed for looking at the everyday application state.

- INFO – The standard level of log information that indicates normal application action – for example “Created a user {} with id {}” is an example of a log message on an INFO level that gives you information about a certain process that finished with a success. In most cases, if you are not looking into how your application performs you could ignore most if not all of the INFO level logs.

- WARN – Log level that usually indicates a state of the application that might be problematic or that it detected an unusual execution. Something may be wrong, but it doesn’t mean that the application failed. For example, a message was not parsed correctly, because it was not correct. The code execution is continuing, but we could log that with the WARN level to inform us and others that potential problems are happening.

- ERROR – Log level that indicates an issue with a system that prevents certain functionality from working. For example, if you provide login via social media as one way of logging into your system, the failure of such a module is an ERROR level log for sure.

- FATAL – The log level indicating that your application encountered an event that prevents it from working or a crucial part of it from working. A FATAL log level is for example an inability to connect to a database that your system relies on or to an external payment system that is needed to check out the basket in your e-commerce system.

Hopefully, that sheds some light on what the log levels are and how you can use them in your application.

Java Logging API

The Java logging APIs come with a standard set of key elements that we should know about to be able to proceed and discuss more than just the basic logging. Those classes elements include:

- Logger – The logger is the main entity that an application uses to make logging calls. The Logger object is usually used for a single class or a single component to provide context-bound to a specific use case.

- LogRecord – The entity used to pass the logging requests between the framework that is used for logging and the handlers that are responsible for log shipping.

- Handler – The handler is used to export the LogRecord entity to a given destination. Those destinations can be the memory, console, files, and remote locations via sockets and various APIs. Eample of such a handlers are the handler for Syslog or a handler for Elasticsearch.

- Level – Defines the set of standard severities of the logging message such as INFO, ERROR, etc. Tells how important the log record is.

- Filter – Gives control over what gets logged and what gets dropped. It gives the application the ability to attach functionality controlling the logging output.

- Formatter – Supports the formatting of the LogRecord objects. By default, two formatters are available – the SimpleFormatter and the XMLFormatter. The first one prints the LogRecord object in a human-readable form using one or two lines. The second one writes the messages in the standard XML format.

This is everything that we need to know about these APIs and we can start looking into the configuration of different frameworks and abstraction layers of Java logging.

Configuration: How Do You Enable Logging in Java?

Now that we know the basics we are ready to include logging in our Java application. I assume that we didn’t choose the framework that we would like to go with, so I’ll discuss each of the common solutions mentioned earlier, so you can see how they can be integrated.

Adding logging to our Java application is usually about configuring the library of choice and including the Logger. That allows us to add logging into the parts of our application that we want to know about.

The Logger

Java Logger is the main entity that our application uses to create LogRecord, so basically to log what we want to output as the log message. Before discussing each logging framework in greater detail let’s discuss how to obtain the Logger instance and how to log a single log event in each of the four discussed frameworks.

Creating a Logger

How do we create a Logger? Well, that actually depends on the logging framework of our choice. But it is usually very simple. For example, for the SLF4J APIs you would just do the following:

... Logger LOGGER = LoggerFactory.getLogger(SomeFancyClassName.class)When using java.util.logging as the logging framework of choice we would do:

... Logger LOGGER = Logger.getLogger(SomeFancyClassName.class.getName())While using Log4j 2 would require the following Java code call:

... Logger LOGGER = LogManager.getLogger(SomeFancyClassName.class)For Logback we would call the same code as for SLF4J:

... Logger LOGGER = LoggerFactory.getLogger(SomeFancyClassName.class)Logging Events

Similar to obtaining the Logger, logging the events depends on the framework. Let’s look at the various ways of logging an INFO level log message.

For the SLF4J APIs you would just do the following:

LOGGER.info("This is an info level log message!")When using java.util.logging as the logging framework of choice we would do:

LOGGER.log(Level.INFO, "This is an info level log message!")While using Log4j 2 we would do:

LOGGER.info("This is an info level log message!")Finally, if using Logback, we would have to do the same call as for SLF4J:

LOGGER.info("This is an info level log message!")SLF4J: Using The Logging Facade

The SLF4J or the Simple Logging Facade for Java serves as an abstraction layer for various Java logging frameworks, like Log4J or Logback. This allows for plugging different logging frameworks at deployment time without the need for code changes.

To enable SLF4J in your project you need to include the slf4j-api library and logging framework of your choice. For the purpose of this blog post we will also include the slf4j-simple library, so that we can show how the SLF4J API looks in an easy way without complicating it with additional logging framework configuration. The slf4j-simple results in the SLF4J facade printing all log messages with INFO level or above to be printed in the System.err. Because of that, our Gradle build.gradle file looks as follows (you can find the whole project on Sematext Github account):

dependencies {

implementation 'org.slf4j:slf4j-api:1.7.30'

implementation 'org.slf4j:slf4j-simple:1.7.30'

}The code that will generate our SLF4J log message looks as follows:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class SLF4J {

private static Logger LOGGER = LoggerFactory.getLogger(SLF4J.class);

public static void main(String[] args) {

LOGGER.info("This is an info level log message!");

}

}We’ve already seen that – we create the Logger instance by using the LoggerFactory class and its getLogger method and providing the name of the class. That way we bind the logger to the class name which gives us context of the log message. Once we have that we can use the Logger methods to create LogRecord on a given level.

The above code execution results in the following output:

[main] INFO com.sematext.blog.logging.SLF4J - This is an info level log message!The SLF4J API is well designed and allows not only for simple messages. The typical usage pattern will require parameterized log messages. Of course SLF4J allows for that. Have a look at the following class (I omitted the import section for simplicity):

public class SLF4JParametrized {

private static Logger LOGGER = LoggerFactory.getLogger(SLF4JParametrized.class);

public static void main(String[] args) {

int currentValue = 36;

LOGGER.info("The parameter value in the log message is {}", currentValue);

}

}The output of the above code execution looks as follows:

[main] INFO com.sematext.blog.logging.SLF4JParametrized - The parameter value in the log message is 36As you can see the parameter placeholder was properly replaced and the log message contains the value. This allows for efficient log message building without the need of String concatenation or buffers and writers usage.

Binding SLF4J With Logging Framework at the Deploy Time

When using SLF4J you probably won’t be using the slf4j-simple library and you’ll want to use it with a dedicated logging framework so that your logs can be put in the destination of your choice or even multiple destinations of choice.

For SLF4J to be able to work with a logging framework of your choice in addition to the slf4j-api library you need one of the following:

slf4j-jdk1.4-SLF4J_VERSION.jar– the bindings for the java.util.logging, the one by default bundled with the JDK.slf4j-log4j12-SLF4J_VERSION.jar– the bindings for the Log4j version 1.2 and of course requires the log4j library to be present in the classpath.slf4j-jcl-SLF4J_VERSION.jar– the bindings for the Jakarta Commons Logging also referred to as Commons Logging.slf4j-nop-SLF4J_VERSION.jar– the binding that silently discards all the log messages.logback-classic-1.2.3.jar– the bindings for the Logback logging framework.log4j-slf4j-impl-SLF4J_VERSION.jar– the bindings for Log4J 2 and the SLF4J up to version 1.8.log4j-slf4j18-impl-SLF4J_VERSION.jar– the bindings for Log4J 2 and the SLF4J 1.8 and up.

Starting with the 1.6 version of the SLF4J library, if no bindings are found on the classpath SLF4J API will start to discard all log messages silently. So please keep that in mind.

It is also a good Java logging practice for the libraries and embedded software to only include dependency to the slf4j-api library and nothing else. That way the binding will be chosen by the developer of the application that uses the library.

SLF4J supports the Mapped Diagnostic Context which is a map where the application code provides key-value pairs which can be inserted by the logging framework in the log messages. If the underlying logging framework supports the MDC then SLF4J facade will pass the maintained key-value pairs to the used logging framework.

java.util.logging

When using the standard java.util.logging package we don’t need any kind of external dependencies. Everything that we need is already present in the JDK distribution so we can just jump on it and start including logging to our awesome application.

There are two ways we can include and configure logging using the java.util.logging package – by using a configuration file or programmatically. For the demo purposes let’s assume that we want to see our log messages in a single line starting with a date and time, severity of the log message and of course the log message itself.

Configuring java.util.logging via Configuration File

To show you how to use the java.util.logging package we’ve created a simple Java project and shared it in our Github repository. The code that generates the log and sets up our logging looks as follows:

package com.sematext.blog.loggging;

import java.io.InputStream;

import java.util.logging.Level;

import java.util.logging.LogManager;

import java.util.logging.Logger;

public class UtilLoggingConfiguration {

private static Logger LOGGER = Logger.getLogger(UtilLoggingConfiguration.class.getName());

static {

try {

InputStream stream = UtilLoggingConfiguration.class.getClassLoader()

.getResourceAsStream("logging.properties");

LogManager.getLogManager().readConfiguration(stream);

} catch (Exception ex) {

ex.printStackTrace();

}

}

public static void main(String[] args) throws Exception {

LOGGER.log(Level.INFO, "An INFO level log!");

}

}We start with initializing the Logger for our UtilLoggingConfiguration class by using the name of the class. We also need to provide the name of our logging configuration. By default the default configuration lives in the JAVA_HOME/jre/lib/logging.properties file and we don’t want to adjust the default configuration. Because of that, I created a new file called logging.properties and in the static block we just initialize it and provide it to the LogManager class by using its readConfiguration method. That is enough to initialize the logging configuration and results in the following output:

[2020-06-29 15:34:49] [INFO ] An INFO level log!Of course, you would only do the initialization once and not in each and every class. Keep that in mind.

Now that is different from what we’ve seen earlier in the blog post and the only thing that changed is the configuration that we included. Let’s now look at our logging.properties file contents:

handlers=java.util.logging.ConsoleHandler

java.util.logging.ConsoleHandler.formatter=java.util.logging.SimpleFormatter

java.util.logging.SimpleFormatter.format=[%1$tF %1$tT] [%4$-7s] %5$s %nYou can see that we’ve set up the handler which is used to set the log records destination. In our case, this is the java.util.logging.ConsoleHandler that prints the logs to the console.

Next, we said what format we would like to use for our handler and we’ve said that the formatter of our choice is the java.util.logging.SimpleFormatter. This allows us to provide the format of the log record.

Finally by using the format property we set the format to [%1$tF %1$tT] [%4$-7s] %5$s %n. That is a very nice pattern, but it can be confusing if you see something like this for the first time. Let’s discuss it. The SimpleFormatter uses String.format method with the following list or arguments: (format, date, source, logger, level, message, thrown).

So the [%1$tF %1$tT] part tells the formatter to take the second argument and provide the date and time parts. Then the [%4$-7s] part reads the log level and uses 7 spaces for formatting that. So for the INFO level, it will add 3 additional spaces. The %5$s tells the formatter to take the message of the log record and print it as a string and finally, the %n is the new line printing. Now that should be clearer.

Configuring java.util.logging Programmatically

Let’s now look at how to do similar formatting by configuring the formatter from the Java code level. We won’t be using any kind of properties file this time, but we will set up our Logger using Java.

The code that does that looks as follows:

package com.sematext.blog.loggging;

import java.util.Date;

import java.util.logging.*;

public class UtilLoggingConfigurationProgramatically {

private static Logger LOGGER = Logger.getLogger(UtilLoggingConfigurationProgramatically.class.getName());

static {

ConsoleHandler handler = new ConsoleHandler();

handler.setFormatter(new SimpleFormatter() {

private static final String format = "[%1$tF %1$tT] [%2$-7s] %3$s %n";

@Override

public String formatMessage(LogRecord record) {

return String.format(format,

new Date(record.getMillis()),

record.getLevel().getLocalizedName(),

record.getMessage()

);

}

});

LOGGER.setUseParentHandlers(false);

LOGGER.addHandler(handler);

}

public static void main(String[] args) throws Exception {

LOGGER.log(Level.INFO, "An INFO level log!");

}

}The difference between this method and the one using a configuration file is this static block. Instead of providing the location of the configuration file we are creating a new instance of the ConsoleHandler and we override the formatMessage method that takes the LogRecord object as its argument. We provide the format, but we are not passing the same number of arguments to the String.format method, so we modified our format as well. We also said that we don’t want to use the parent handler which means that we would only like to use our own handler and finally we are adding our handler by calling the LOGGER.addHandler method. And that’s it – we are done. The output of the above code looks as follows:

[2020-06-29 16:25:34] [INFO ] An INFO level log!I wanted to show that setting up logging in Java can also be done programmatically, not only via configuration files. However, in most cases, you will end up with a file that configures the logging part of your application. It is just more convenient to use, simpler to adjust, modify, and work with.

Log4j

Log4j 2 and its predecessor the Log4j are the most common and widely known logging framework for Java. It promises to improve the first version of the Log4j library and fix some of the issues identified in the Logback framework. It also supports asynchronous logging. For the purpose of this tutorial I created a simple Java project that uses Log4j 2, you can find it in our Github account.

Let’s start with the code that we will be using for the test:

package com.sematext.blog.logging;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

public class Log4JDefaultConfig {

private static final Logger LOGGER = LogManager.getLogger(Log4JDefaultConfig.class);

public static void main(String[] args) {

LOGGER.info("This is an INFO level log message!");

LOGGER.error("This is an ERROR level log message!");

}

}For the code to compile we need two dependencies – the Log4j 2 API and the Core. Our build.gradle includes the following:

dependencies {

implementation 'org.apache.logging.log4j:log4j-api:2.13.3'

implementation 'org.apache.logging.log4j:log4j-core:2.13.3'

}Things are quite simple here – we get the Logger instance by using the Log4J 2 LogManager class and its getLogger method. Once we have that we use the info and error methods of the Logger to write the log records. We could of course use SLF4J and we will in one of the examples. For now, though, let’s stick to the pure Log4J 2 API.

In comparison to the java.util.logging we don’t have to do any setup. By default, Log4j will use the ConsoleAppender to write the log message to the console. The log record will be printed using the PatternLayout attached to the mentioned ConsoleAppander with the pattern defined as follows: %d{HH:mm:ss.SSS} [%t] %-5level %logger{36} – %msg%n. However, the root logger is defined for ERROR level. That means, that by default, ERROR level logs and beyond will be only visible. Log messages with level INFO, DEBUG, TRACE will not be visible.

There are multiple conditions when Log4j2 will use the default configuration, but in general, you can expect it to kick in when no log4j.configurationFile property is present in the application startup parameters and when this property doesn’t point to a valid configuration file. In addition no log4j2-test.[properties|xml|yaml|jsn] files are present in the classpath and no log4j2.[properties|xml|yaml|jsn] files are present in the classpath. Yes, that means that you can easily have different logging configurations for tests, different configurations as the default for your codebase, and then use the log4j.configurationFile property and point to a configuration for a given environment.

A simple test – running the above code gives the following output:

09:27:10.312 [main] ERROR com.sematext.blog.logging.Log4JDefaultConfig - This is an ERROR level log message!Of course, such default behavior will not be enough for almost anything apart from a simple code, so let’s see what we can do about it.

Configuring Log4J 2

Log4J 2 can be configured in one of the two ways:

- By using the configuration file. By default, Log4J 2 understands configuration written in Java properties files and XML files, but you can also include additional dependencies to work with JSON or YAML. In this blog post, we will use this method.

- Programmatically by creating the ConfigurationFactory and Configuration implementations, or by using the exposed APIs in the Configuration interface or by calling internal Logger methods.

Let’s create a simple, old fashioned XML configuration file first just to print the time, level and the message associated with the log record. Log4J 2 requires us to call that file log4j2.xml. We put it in the resources folder of our example project and run it. This time the output looks as follows:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} %-5level - %msg%n"/>

</Console>

</Appenders>

<Loggers>

<Root level="info">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

</Configuration>Let’s stay here for a minute or two and discuss what we did here. We started with the Configuration definition. In this element, we defined that all the status-related messages will be logged with the WARN level – status=”WARN”.

Next, we defined the appender. The Appender is responsible for delivering the LogEvent to its destination. You can have multiple of those. In our case the Appenders section contains a single appender of the Console type:

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} %-5level - %msg%n"/>

</Console>We set its name, which we will use a bit later, we say that the target is the standard system output – SYSTEM_OUT. We also provided the pattern for the PatternLayout which defines the way our LogEvent will be formatted in the Console appender.

The last section defines the Loggers. We define the configuration of different loggers that we defined in our code or in the libraries that we are using. In our case this section only contains the Root logger definition:

<Root level="info">

<AppenderRef ref="Console"/>

</Root>The Root logger definition tells Log4J to use that configuration when a dedicated configuration for a logger is not found. In our Root logger definition, we say that the default log level should be set to INFO and the log events should be sent to the appender with the name Console.

If we run the example code mentioned above with our new XML configuration the output will be as follows:

09:29:58.735 INFO - This is an INFO level log message!

09:29:58.737 ERROR - This is an ERROR level log message!Just as we expected we have both log messages present in the console.

If we would like to use the properties file instead of the XML one we could just create a log4j2.properties file and include it in the classpath or just use the log4j.configuration property and point it to the properties file of our choice. To achieve similar results as we got with the XML based configuration we would use the following properties:

appender.console.type = Console

appender.console.name = STDOUT

appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{HH:mm:ss.SSS} %-5level - %msg%n

rootLogger.level = info

rootLogger.appenderRef.stdout.ref = STDOUTIt is less verbose and works, but is also less flexible if you want to use slightly more complicated functionalities.

Log4J Appenders

Log4J appender is responsible for delivering LogEvents to their destination. Some appenders only send LogEvents while others wrap other appenders to provide additional functionality. Let’s look at some of the appenders available in Log4J. Keep in mind that this is not a full list of appenders and to see all of them look at Log4J appenders documentation.

The not-so-full list of appenders in Log4J 2:

- Console – writes the data to System.out or System.err with the default begin the first one (a good practice when logging in containers)

- File – appender that uses the FileManager to write the data to a defined file

- Rolling File – appender that writes data to a defined file and rolls over the file according to a defined policy

- Memory Mapped File – added in version 2.1, uses memory-mapped files and relies on the operating system virtual memory manager to synchronize the changes in the file with the storage device

- Flume – appender that writes data to Apache Flume

- Cassandra – appender that writes data to Apache Cassandra

- JDBC – writes data to a database using standard JDBC driver

- HTTP – writes data to a defined HTTP endpoint

- Kafka – writes data to Apache Kafka

- Syslog – writes data to a Syslog compatible destination

- ZeroMQ – writes data to ZeroMQ

- Async – encapsulates another appender and uses a different thread to write data, which results in asynchronous logging

Of course, we won’t be discussing each and every appender mentioned above, but let’s look at the File appender to see how to log the log messages to the console and file at the same time.

Using Multiple Appenders

Let’s assume we have the following use case – we would like to set up our application to log everything to a file so we can use it for shipping data to an external log management and analysis solution such as Sematext Cloud and we would also like to have the logs from com.sematext.blog loggers printed to the console.

I’ll use the same example application that looks as follows:

package com.sematext.blog.logging;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

public class Log4j2 {

private static final Logger LOGGER = LogManager.getLogger(Log4j2.class);

public static void main(String[] args) {

LOGGER.info("This is an INFO level log message!");

LOGGER.error("This is an ERROR level log message!");

}

}The log4j2.xml will look as follows:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} %-5level - %msg%n"/>

</Console>

<File name="File" fileName="/tmp/log4j2.log" append="true">

<PatternLayout>

<Pattern>%d{HH:mm:ss.SSS} [%t] %-5level - %msg%n</Pattern>

</PatternLayout>

</File>

</Appenders>

<Loggers>

<Logger name="com.sematext.blog" level="info" additivity="true">

<AppenderRef ref="Console"/>

</Logger>

<Root level="info">

<AppenderRef ref="File"/>

</Root>

</Loggers>

</Configuration>You can see that I have two appenders defined – one called Console that appends the data to the System.out or System.err. The second one is called File. I provided a fileName configuration property to tell the appender where the data should be written and I said that I want to append the data to the end of the file. Keep in mind that those two appenders have slightly different patterns – the File one also logs the thread, while the Console one doesn’t.

We also have two Loggers defined. We have the Root logger that appends the data to our File appender. It does that for all the log events with the INFO log level and higher. We also have a second Logger defined with the name of com.sematext.blog, which appends the data using the Console appender as well with the INFO level.

After running the above code we will see the following output on the console:

15:19:54.730 INFO - This is an INFO level log message!

15:19:54.732 ERROR - This is an ERROR level log message!And the following output in the /tmp/log4j2.log file:

15:19:54.730 [main] INFO - This is an INFO level log message!

15:19:54.732 [main] ERROR - This is an ERROR level log message!You can see that the times are exactly the same, the lines are different though just as our patterns are.

In the normal production environment, you would probably use the Rolling File appender to roll over the files daily or when they will reach a certain size.

Log4J Layouts

Layout is used by the appender to format a LogEvent into a form that is defined.

By default, there are a few layouts available in Log4j 2 (some of them require additional runtime dependencies):

- Pattern – uses a string pattern to format log events (learn more about the Pattern layout)

- CSV – the layout for writing data in the CSV format

- GELF – layout for writing events in the Graylog Extended Log Format 1.1

- HTML – layout for writing data in the HTML format

- JSON – layout for writing data in JSON

- RFC5424 – writes the data in accordance with RFC 5424 – the extended Syslog format

- Serialized – serializes log events into a byte array using Java serialization

- Syslog – formats log events into Syslog compatible format

- XML – layout for writing data in the XML format

- YAML – layout for writing data in the YAML format

For example, if we would like to have our logs be formatted in HTML we could use the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<HTMLLayout></HTMLLayout>

</Console>

</Appenders>

<Loggers>

<Root level="info">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

</Configuration>The output of running the example code with the above configuration is quite verbose and looks as follows:

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

<html>

<head>

<meta charset="UTF-8"/>

<title>Log4j Log Messages</title>

<style type="text/css">

<!--

body, table {font-family:arial,sans-serif; font-size: medium

;}th {background: #336699; color: #FFFFFF; text-align: left;}

-->

</style>

</head>

<body bgcolor="#FFFFFF" topmargin="6" leftmargin="6">

<hr size="1" noshade="noshade">

Log session start time Wed Jul 01 15:45:08 CEST 2020<br>

<br>

<table cellspacing="0" cellpadding="4" border="1" bordercolor="#224466" width="100%">

<tr>

<th>Time</th>

<th>Thread</th>

<th>Level</th>

<th>Logger</th>

<th>Message</th>

</tr>

<tr>

<td>652</td>

<td title="main thread">main</td>

<td title="Level">INFO</td>

<td title="com.sematext.blog.logging.Log4j2 logger">com.sematext.blog.logging.Log4j2</td>

<td title="Message">This is an INFO level log message!</td>

</tr>

<tr>

<td>653</td>

<td title="main thread">main</td>

<td title="Level"><font color="#993300"><strong>ERROR</strong></font></td>

<td title="com.sematext.blog.logging.Log4j2 logger">com.sematext.blog.logging.Log4j2</td>

<td title="Message">This is an ERROR level log message!</td>

</tr>

</table>

<br>

</body></html>Log4J Filters

The Filter allows log events to be checked to determine if or how they should be published. A filter execution can end with one of three values – ACCEPT, DENY or NEUTRAL.

Filters can be configured in one of the following locations:

- Directly in the Configuration for context – wide filters

- In the Logger for the logger specific filtering

- In the Appender for appender specific filtering

- In the Appender reference to determine if the log event should reach a given appender

There are a few out of the box filters and we can also develop our own filters. The ones that are available out of the box are:

- Burst – provides a mechanism to control the rate at which log events are processed and discards them silently is a limit is hit

- Composite – provides a mechanism to combine multiple filters

- Threshold – allows filtering on the log level of the log event

- Dynamic Threshold – similar to Threshold filter, but allows to include additional attributes, for example user

- Map – allows filtering of data that is in a MapMessage

- Marker – compares the Marker defined in the filter with the Marker in the log event and filters on the basis of that

- No Marker – allows checking for Marker existence in the log event and filter on the basis of that information

- Regex – filters on the basis of the defined regular expression

- Script – executes a script that should return a boolean result

- Structured Data – allows filtering on the id, type, and message of the log event

- Thread Context Map – allows filtering against data that are in the current context

- Time – allows restricting log events to a certain time

For example, if we would like to include all logs with level WARN or higher we could use the ThresholdFilter with the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} %-5level - %msg%n"/>

<ThresholdFilter level="WARN" onMatch="ACCEPT" onMismatch="DENY"/>

</Console>

</Appenders>

<Loggers>

<Root level="info">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

</Configuration>Running our example code with the above Log4j 2 configuration would result in the following output:

16:34:30.797 ERROR - This is an ERROR level log message!Log4J Garbage Free Logging

With Log4J 2.6 a partially implemented garbage-free logging was introduced. The framework reuses objects stored in ThreadLocal and tries to reuse buffers when converting text to bytes.

With the 2.6 version of the framework, two properties are used to control the garbage-free logging capabilities of Log4J 2. The log4j2.enableThreadlocals which is by default set to true for non-web applications and the log4j2.enableDirectEncoders which is also set to true by default are the ones that enable optimizations in Log4J 2.

With the 2.7 version of the framework, the third property was added – the log4j2.garbagefreeThreadContextMap. If set to true the ThreadContext map will also use a garbage-free approach. By default, this property is set to false.

There is a set of limitations when it comes to garbage-free logging in Log4J 2. Not all filters, appenders and layouts are available. If you decide to use it check Log4J 2 documentation on that topic.

Log4J Asynchronous Logging

The asynchronous logging is a new addition to the Log4J 2. The aim is to return to the application from the Logger.log method call as soon as possible by executing the logging operation in a separate thread.

There are several benefits and drawbacks of using asynchronous logging. The benefits are:

- Higher peak throughput

- Lower logging response time latency

There are also drawbacks:

- Complicated error handling

- Will not give higher performance on machines with low CPU count

- If you log more than the appender can process, the speed of logging will be dedicated by the slowest appender because of the queue filling up

If at this point you still think about turning on asynchronous logging you need to be aware that additional runtime dependencies are needed. Log4J 2 uses the Disruptor library and requires it as the runtime dependency. You also need to set the log4j2.contextSelector system property to org.apache.logging.log4j.core.async.AsyncLoggerContextSelector.

Log4J Thread Context

Log4J combines the Mapped Diagnostic Context with the Nested Diagnostic Context in the Thread Context. But let’s discuss each of those separate for a moment.

The Mapped Diagnostic Context or MDC is basically a map that can be used to store the context data of the particular thread where the context is running. For example, we can store the user identifier or the step of the algorithm. In the Log4J 2 instead of MDC we will be using the Thread Context. Thread Context is used to associate multiple events with limited numbers of information.

The Nested Diagnostic Context or NDC is similar to MDC, but can be used to distinguish interleaved log output from different sources, so in situations where a server or application handles multiple clients at once. In Log4J 2 instead of NDC we will use Thread Context Stack.

Let’s see how we can work with it. What we would like to do is add additional information to our log messages – the user name and the step of the algorithm. This could be done by using the Thread Context in the following way:

package com.sematext.blog.logging;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import org.apache.logging.log4j.ThreadContext;

public class Log4j2ThreadContext {

private static final Logger LOGGER = LogManager.getLogger(Log4j2ThreadContext.class);

public static void main(String[] args) {

ThreadContext.put("user", "[email protected]");

LOGGER.info("This is the first INFO level log message!");

ThreadContext.put("executionStep", "one");

LOGGER.info("This is the second INFO level log message!");

ThreadContext.put("executionStep", "two");

LOGGER.info("This is the third INFO level log message!");

}

}For our additional information from the Thread Context to be displayed in the log messages, we need a different configuration. The configuration that we used to achieve this look as follows:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} [%X{user}] [%X{executionStep}] %-5level - %msg%n"/>

</Console>

</Appenders>

<Loggers>

<Root level="info">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

</Configuration>You can see that we’ve added the [%X{user}] [%X{executionStep}] part to our pattern. This means that we will take the user and executionStep property values from the Thread Context and include that. If we would like to include all that are present we would just add %X.

The output of running the above code with the above configuration would be as follows:

18:05:40.181 [[email protected]] [] INFO - This is the first INFO level log message!

18:05:40.183 [[email protected]] [one] INFO - This is the second INFO level log message!

18:05:40.183 [[email protected]] [two] INFO - This is the third INFO level log message!You can see that when the context information is available it is written along with the log messages. It will be present until it is changed or cleared.

What Is a Marker?

Markers are named objects that are used to enrich the data. While the Thread Context is used to provide additional information for the log events, the Markers can be used to mark a single log statement. You can image marking a log event with the IMPORTANT marker, which will mean that the appender should for example store the event in a separate log file.

How to do that? Look at the following code example that uses filtering to achieve that:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.Marker;

import org.slf4j.MarkerFactory;

public class Log4J2MarkerFiltering {

private static Logger LOGGER = LoggerFactory.getLogger(Log4J2MarkerFiltering.class);

private static final Marker IMPORTANT = MarkerFactory.getMarker("IMPORTANT");

public static void main(String[] args) {

LOGGER.info("This is a log message that is not important!");

LOGGER.info(IMPORTANT, "This is a very important log message!");

}

}The above example uses SLF4J as the API of choice and Log4J 2 as the logging framework. That means that our dependencies section of the Gradle build file looks like this:

dependencies {

implementation 'org.slf4j:slf4j-api:1.7.30'

implementation 'org.apache.logging.log4j:log4j-api:2.13.3'

implementation 'org.apache.logging.log4j:log4j-core:2.13.3'

implementation 'org.apache.logging.log4j:log4j-slf4j-impl:2.13.3'

}There are two important things in the above code example. First of all, we are creating a static Marker:

private static final Marker IMPORTANT = MarkerFactory.getMarker("IMPORTANT");We use the MarkerFactory class to do that by calling its getMarker method and providing the name of the marker. Usually, you would set up those markers in a separate, common class that your whole code can access.

Finally, to provide the Marker to the Logger we use the appropriate logging method of the Logger class and provide the marker as the first argument:

LOGGER.info(IMPORTANT, "This is a very important log message!");After running the code the console will show the following output:

12:51:25.905 INFO - This is a log message that is not important!

12:51:25.907 INFO - This is a very important log message!While the file that is created by the File appender will contain the following log message:

12:51:25.907 [main] INFO - This is a very important log message!Exactly what we wanted!

Keep in mind that Markers are not only available in Log4J. As you can see in the above example, they come from the SLF4J, so the SLF4J compatible frameworks should support them. In fact, the next logging framework that we will discuss – Logback also has support for Markers and we will look into that while discussing it.

Logback

Logback starts where the first version of Log4J ends and promises to provide improvements to that. For the purpose of this blog post, I created a simple project and shared it in our Github account.

Logback uses SLF4J as the logging facade. In order to use it we need to include it as the project dependency. In addition to that we need the logback-core and logback-classic libraries. Our Gradle build file dependency section looks as follows:

dependencies {

implementation 'ch.qos.logback:logback-core:1.2.3'

implementation 'ch.qos.logback:logback-classic:1.2.3'

implementation 'org.slf4j:slf4j-api:1.7.30'

}I also created a simple class for the purpose of showing how the Logback logging framework works:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class Logback {

private static final Logger LOGGER = LoggerFactory.getLogger(Logback.class);

public static void main(String[] args) {

LOGGER.info("This is an INFO level log message!");

LOGGER.error("This is an ERROR level log message!");

}

}As you can see, we generate two log messages. But before that, we start by creating the Logger by using the SLF4J LoggerFactory class and its getLogger method. Once we have that we can start generating log messages by using appropriate Logger methods. We already discussed that when we were looking at the SLF4J earlier, so I’ll skip the more detailed discussion.

By default, when no configuration is provided the output generated by the execution of the above code will look as follows:

12:44:39.162 [main] INFO com.sematext.blog.logging.Logback - This is an INFO level log message!

12:44:39.165 [main] ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!The default behavior will be activated if the logback-test.xml file is not found in the classpath and logback.groovy is not found in the classpath and the logback.xml file is not present in the classpath. The default configuration uses the ConsoleAppender which prints the log messages to the standard output and uses the PatternLayoutEncoder with the pattern used for formatting the log messages defined as follows %d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} – %msg%n. The default Logger is assigned to the DEBUG log level, so it can be quite verbose.

Configuring Logback

To configure Logback we can either use the standard XML files as well as Groovy ones. We’ll use the XML method. Let’s just create the logback.xml file in the resources folder in our project with the following contents:

<configuration>

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<root level="info">

<appender-ref ref="STDOUT" />

</root>

</configuration>You can already see some similarities with Log4j 2. We have the root configuration element which is responsible for keeping the whole configuration. Inside we see two sections. The first one is the Appender section. We created a single appender with the STDOUT name that prints the output to the console and uses an Encoder with a given pattern. The Encoder is responsible for formatting the LogRecord. Finally, we defined the root element to use the INFO level as the default log level and by default send all the messages to the appender with the name STDOUT.

The output of the execution of the above code with the created logback.xml file present in the classpath results in the following output:

12:49:47.730 INFO com.sematext.blog.logging.Logback - This is an INFO level log message!

12:49:47.732 ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!Logback Appenders

Logback appender is the component that Logback uses to write log events. They have their name and a single method that can process the event.

The logback-core library lays the foundation for the Logback appenders and provides a few classes that are ready to be used. Those are:

- ConsoleAppender – appends the log events to the System.out or System.err

- OutputStreamAppender – appends the log events to java.io.Outputstream providing the basic services for other appenders

- FileAppender – appends the log events to a file

- RollingFileAppender – appends the log events to a file with the option of automatic file rollover

The logback-classic library that we included in our example project extends the list of available appenders and provide the appenders that are able to send data to the external systems:

- SocketAppender – appends the log events to a socket

- SSLSocketAppender – appends the log events to a socket using secure connection

- SMTPAppender – accumulates data in batches and send the content of the batch to a user-defined email after a user-specified event occurs

- DBAppender – appends data into a database tables

- SyslogAppender – appends data into Syslog compatible destination

- SiftingAppender – appender that is able to separate logging according to a given runtime attribute

- AsyncAppender – appends the logs events asynchronously

There are many more appenders that are present in Logback extensions, but we won’t mention them all. We won’t be talking about each and every appender either, but let’s look at one of the common use cases – writing logs both to a console and to a file.

Using Multiple Appenders

Let’s assume we have the following use case – we would like to set up our application to log everything to file so we can use it for shipping data to an external log management and analysis solution such as Sematext Cloud and we would also like to have the logs from com.sematext.blog loggers printed to the console.

I’ll use the same example application that looks as follows:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class Logback {

private static final Logger LOGGER = LoggerFactory.getLogger(Logback.class);

public static void main(String[] args) {

LOGGER.info("This is an INFO level log message!");

LOGGER.error("This is an ERROR level log message!");

}

}But this time, the logback.xml will look slightly different:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<appender name="file" class="ch.qos.logback.core.FileAppender">

<file>/tmp/logback.log</file>

<append>true</append>

<immediateFlush>true</immediateFlush>

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<logger name="com.sematext.blog">

<appender-ref ref="console"/>

</logger>

<root level="info">

<appender-ref ref="file" />

</root>

</configuration>You can see that I have two appenders defined – one called console that appends the data to the System.out or System.err. The second one is called the file and uses the ch.qos.logback.core.FileAppender to write the log events to file. I provided a file configuration property to tell the appender where data should be written, I specified that I want to append data and I want an immediate flush to increase logging throughput. I also added an encoder to format my data and included the thread in the logline.

The difference between the earlier examples are also in the loggers definition. We have a root logger that appends the data to our file appender. It does that for all log events with the INFO log level and higher. We also have a second logger defined with the name of com.sematext.blog, which appends data using the console appender.

After running the above code we will see the following output on the console:

12:54:09.077 INFO com.sematext.blog.logging.Logback - This is an INFO level log message!

12:54:09.078 ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!And the following output in the /tmp/logback.log file:

12:54:09.077 [main] INFO com.sematext.blog.logging.Logback - This is an INFO level log message!

12:54:09.078 [main] ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!You can see that the times are exactly the same, the lines are different though just as our encoders are.

In the normal production environment, you would probably use the RollingFileAppender to roll over the files daily or when they reach a certain size. Keep that in mind when setting up your own production logging.

Logback Encoders

Logback encoder is responsible for transforming a log event into a byte array and writing that byte array to an OutputStream.

Right now there are two encoders available in Logback:

- PatternLayoutEncoder – encoder that takes a pattern and encodes the log event based on that pattern

- LayoutWrappingEncoder – encoder that closes the gap between the current Logback version and the versions prior to 0.9.19 that used to use Layout instances instead of the patterns.

Right now, in most cases you will be using a pattern, for example like in the following appender definition:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

<outputPatternAsHeader>true</outputPatternAsHeader>

</encoder>

</appender>

<root level="info">

<appender-ref ref="console" />

</root>

</configuration>The execution of such configuration along with our example project would result in the following output:

#logback.classic pattern: %d{HH:mm:ss.SSS} %-5level %logger{36} – %msg%n

13:16:30.724 INFO com.sematext.blog.logging.Logback - This is an INFO level log message!

13:16:30.726 ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!Logback Layouts

Logback layout is a component that is responsible for transforming an incoming event into a String. You can write your own layout and include it in your appender using the ch.qos.logback.code.encoder.LayoutWrappingEncoder:

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="ch.qos.logback.code.encoder.LayoutWrappingEncoder">

<layout class="com.sematext.blog.logging.LayoutThatDoesntExist" />

</encoder>

</appender>Or you can use the PatternLayout, which is flexible and provides us with numerous ways of formatting our log events. In fact, we already used the PatternLayoutEncoder in our Logback examples! If you are interested in all the formatting options available check out the Logback documentation on layouts.

Logback Filters

Logback filter is a mechanism for accepting or rejecting a log event based on the criteria defined by the filter itself.

A very simple filter implementation could look as follows:

package com.sematext.blog.logging.logback;

import ch.qos.logback.classic.spi.ILoggingEvent;

import ch.qos.logback.core.filter.Filter;

import ch.qos.logback.core.spi.FilterReply;

public class SampleFilter extends Filter<ILoggingEvent> {

@Override

public FilterReply decide(ILoggingEvent event) {

if (event.getMessage().contains("ERROR")) {

return FilterReply.ACCEPT;

}

return FilterReply.DENY;

}

}You can see that we extend the Filter<ILoggingEvent> class and provide an implementation for the decide(ILoggingEvent event) method. In our case, we just check if the message contains a given String value and if it does we accept the message by returning FilterReply.ACCEPT. Otherwise, we reject the log event by running FilterReply.DENY.

We can include the filter in our appender by including the Filter tag and providing the class:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

<filter class="com.sematext.blog.logging.logback.SampleFilter" />

</appender>

<root level="info">

<appender-ref ref="console" />

</root>

</configuration>If we were to now execute our example code with the above Logback configuration the output would be as follows:

13:52:12.451 ERROR com.sematext.blog.logging.Logback - This is an ERROR level log message!There are also out of the box filters. The ch.qos.logback.classic.filter.LevelFilter allows us to filter events based on exact log level matching. For example, to reject all INFO level logs we could use the following configuration:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

<filter class="ch.qos.logback.classic.filter.LevelFilter">

<level>INFO</level>

<onMatch>DENY</onMatch>

<onMismatch>ACCEPT</onMismatch>

</filter>

</appender>

<root level="info">

<appender-ref ref="console" />

</root>

</configuration>The ch.qos.logback.classic.filter.ThresholdFilter allows us to filter log events below the specified threshold. For example, to discard all log events that have level lower than WARN, so INFO and beyond we could use the following filter:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

<filter class="ch.qos.logback.classic.filter.ThresholdFilter">

<level>WARN</level>

</filter>

</appender>

<root level="info">

<appender-ref ref="console" />

</root>

</configuration>There are also other filter implementations and one more additional type of filters called TurboFilters – we suggest looking into Logback documentation regarding filters to learn about them.

Mapped Diagnostic Contexts in Logback

As we already discussed when discussing Log4J 2 the MDC or Mapped Diagnostic Contexts is a way for the developers to provide the additional context information that will be included along with the log events if we wish. MDC can be used to distinguish log output from different sources – for example in highly concurrent environments. MDC is managed on a per thread basis.

Logback uses SLF4J APIs to use the MDC. For example, let’s look at the following code:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.MDC;

public class LogbackMDC {

private static final Logger LOGGER = LoggerFactory.getLogger(LogbackMDC.class);

public static void main(String[] args) {

MDC.put("user", "[email protected]");

LOGGER.info("This is the first INFO level log message!");

MDC.put("executionStep", "one");

LOGGER.info("This is the second INFO level log message!");

MDC.put("executionStep", "two");

LOGGER.info("This is the third INFO level log message!");

}

}We’ve used the org.slf4j.MDC class and its put method to provide additional context information. In our case, there are two properties that we provide – user and the executionStep.

To be able to display the added context information we need to modify our pattern, for example like this:

<configuration>

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%X{user}] [%X{executionStep}] %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<root level="info">

<appender-ref ref="STDOUT" />

</root>

</configuration>I added two new elements: [%X{user}] [%X{executionStep}]. By using the %{name_of_the_mdc_property} we can easily include our additional context information.

After executing our code with the above Logback configuration we would get the following output on the console:

14:14:10.687 [[email protected]] [] INFO com.sematext.blog.logging.LogbackMDC - This is the first INFO level log message!

14:14:10.688 [[email protected]] [one] INFO com.sematext.blog.logging.LogbackMDC - This is the second INFO level log message!

14:14:10.688 [[email protected]] [two] INFO com.sematext.blog.logging.LogbackMDC - This is the third INFO level log message!You can see that when the context information is available it is written along with the log messages. It will be present until it is changed or cleared.

Of course, this is just a simple example and the Mapped Diagnostic Contexts can be used in advanced scenarios such as distributed client-server architectures. If you are interested to learn more have a look at the Logback documentation dedicated to MDC. You can also use MDC as a discriminator value for the Sifting appender and route your logs based on that.

Markers in Logback

When we were discussing Log4J 2 we saw an example of using Markers for filtering. I also promised to get back to this topic and show you a different use case. For example, this time we will try sending an email when a log message with an important marker will appear.

First, we need to have some code for that:

package com.sematext.blog.logging;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.slf4j.Marker;

import org.slf4j.MarkerFactory;

public class LogbackMarker {

private static Logger LOGGER = LoggerFactory.getLogger(LogbackMarker.class);

private static final Marker IMPORTANT = MarkerFactory.getMarker("IMPORTANT");

public static void main(String[] args) {

LOGGER.info("This is a log message that is not important!");

LOGGER.info(IMPORTANT, "This is a very important log message!");

}

}We’ve already seen that example. The code is the case, but this time we use SLF4J as the API of our choice and Logback as the logging framework.

Our logback.xml file will look as follows:

<configuration>

<appender name="console" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

<appender name="markersift" class="ch.qos.logback.classic.sift.SiftingAppender">

<discriminator class="com.sematext.blog.logging.logback.MarkerDiscriminator">

<key>importance</key>

<defaultValue>not_important</defaultValue>

</discriminator>

<sift>

<appender name="file-${importance}" class="ch.qos.logback.core.FileAppender">

<file>/tmp/logback-${importance}.log</file>

<append>false</append>

<immediateFlush>true</immediateFlush>

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>

</encoder>

</appender>

</sift>

</appender>

<logger name="com.sematext.blog">

<appender-ref ref="console" />

</logger>

<root level="info">

<appender-ref ref="markersift" />

</root>

</configuration>This time the configuration is a bit more complicated. We’ve used the SiftingAppender to be able to create two files, depending on the value returned by our MarkerDiscriminator. Our discriminator implementation is simple and returns the name of the marker if the IMPORTANT marker is present in the log message and the defaultValue if it is not present. The code looks as follows:

package com.sematext.blog.logging.logback;

import ch.qos.logback.classic.spi.ILoggingEvent;

import ch.qos.logback.core.sift.AbstractDiscriminator;

import org.slf4j.Marker;

public class MarkerDiscriminator extends AbstractDiscriminator<ILoggingEvent> {

private String key;

private String defaultValue;

@Override

public String getDiscriminatingValue(ILoggingEvent iLoggingEvent) {

Marker marker = iLoggingEvent.getMarker();

if (marker != null && marker.contains("IMPORTANT")) {

return marker.getName();

}

return defaultValue;

}

public String getKey() { return key; }

public void setKey(String key) { this.key = key; }

public String getDefaultValue() { return defaultValue; }

public void setDefaultValue(String defaultValue) { this.defaultValue = defaultValue; }

}Let’s get back to our Logback configuration though. The sift appender that we have uses the same variable name as the key in our discriminator. That means that for each of the values returned by the discriminator a new file appender will be created. In our case, there will be two files: /tmp/logback-IMPORTANT.log and /tmp/logback-not_important.log.

After the code execution the /tmp/logback-IMPORTANT.log file will contain the following log message:

11:08:47.543 [main] INFO c.s.blog.logging.LogbackMarker - This is a very important log message!While the second file, the /tmp/logback-not_important.log will contain the following log message:

11:08:47.537 [main] INFO c.s.blog.logging.LogbackMarker - This is a log message that is not important!The output on the console will be as follows:

11:08:47.537 INFO c.s.blog.logging.LogbackMarker - This is a log message that is not important!

11:08:47.543 INFO c.s.blog.logging.LogbackMarker - This is a very important log message!As you can see in this simple example, everything works as we wanted.

Java Logging & Monitoring with Log Management Solutions

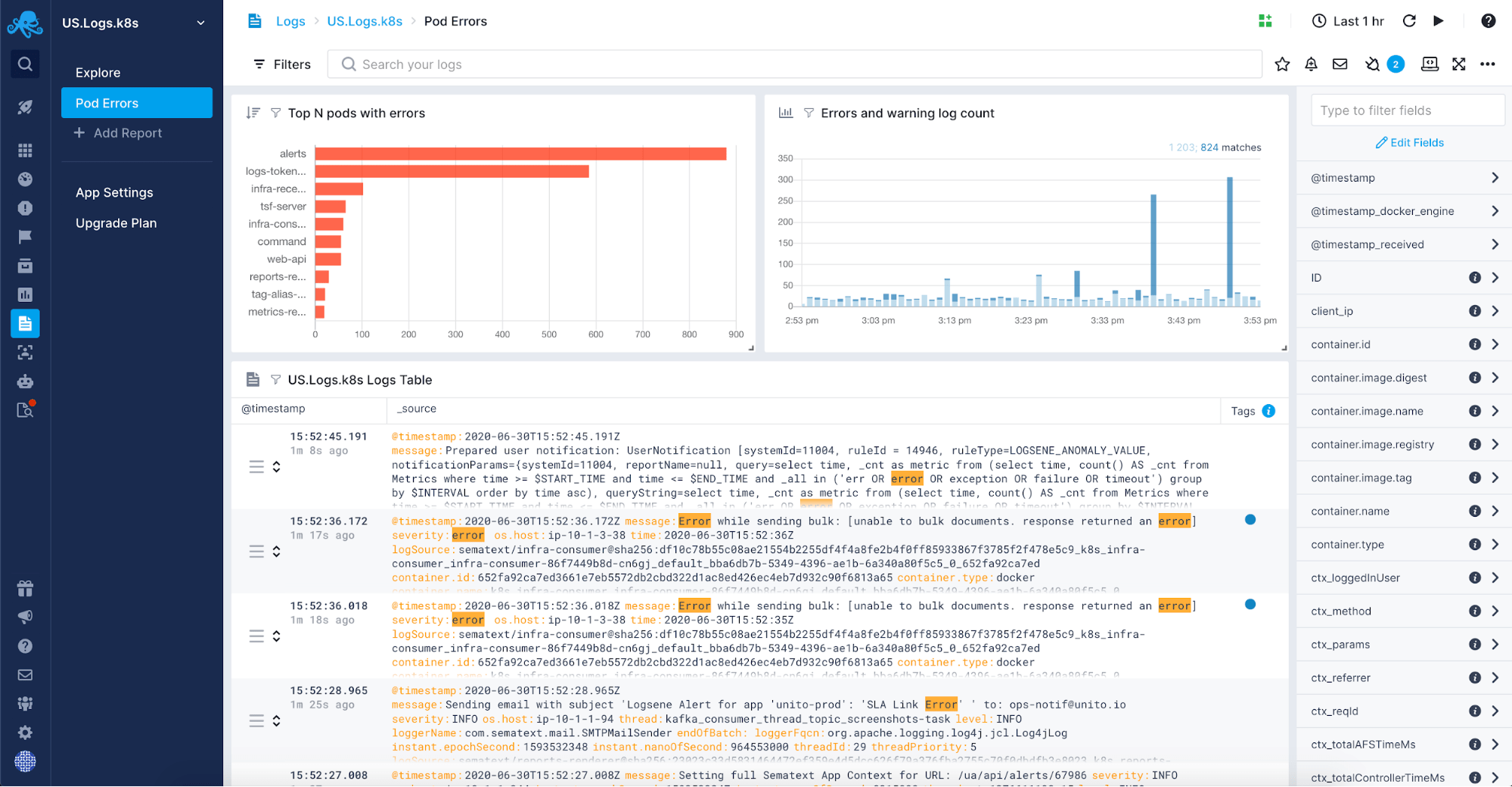

Now you know the basics about how to turn on logging in our Java application but with the complexity of the applications, the volume of the logs grows. You may get away with logging to a file and only using them when troubleshooting is needed, but working with huge amounts of data quickly becomes unmanageable and you should end up using a log management solution to centralize and monitor your logs. You can either go for an in house solution based on the open-source software or use one of the products available on the market like Sematext Cloud or Sematext Enterprise.

A fully managed log centralization solution will give you the freedom of not needing to manage yet another, usually quite complex, part of your infrastructure. It will allow you to manage a plethora of sources for your logs. You may want to include logs like JVM garbage collection logs in your managed log solution. After turning them on for your applications and systems working on the JVM you will want to have them in a single place for correlation, analysis, and to help you tune the garbage collection in the JVM instances. Alert on logs, aggregate the data, save and re-run the queries, hook up your favorite incident management software. Correlating logs data with metrics coming from the JVM applications, system and infrastructure, real user and API endpoints is something that platforms like Sematext Cloud are capable of. And of course, remember that application logs are not everything.

Conclusions

Logging is invaluable when troubleshooting Java applications. In fact, logging is invaluable in troubleshooting in general, no matter if that is a Java application or a hardware switch or firewall. Both the software and hardware gives us a look into how they are working in the form of parametrized logs enriched with contextual information.

In this article, we started with the basics of logging in your Java applications. We’ve learned what are the options when it comes to Java logging, how to add logging to your application, how to configure it. We’ve also seen some of the advanced features of logging frameworks such as Log4j and Logback. We learned about SLF4J and how it helps in safe proofing the application for future changes.

I hope this article gave you an idea on how to deal with Java application logs and why you should start working with them right away if you haven’t already. Good luck!

This article has been published from the source link without modifications to the text. Only the headline has been changed.