Conducting surveys and polls is one of the best ways to collect data and gain insight into questions like why are customers leaving our website? or why are voters attracted to this candidate? But analyzing survey data can be a real challenge!

In this tutorial, we’re going to walk through how to analyze survey data using Python. But don’t worry — even if you’ve never written code before, you can handle this! We’re going to take it step by step, and by the end of this tutorial you’ll see how you can unlock some pretty impressive analytical power with just a few lines of code!

For the purposes of this article, we’ll be analyzing StackOverflow’s 2019 developer survey data, because it’s a large and recent survey data set that’s public and properly anonymized. But these techniques will work for almost any sort of survey data.

Most survey data will be in a similar format to what we’re working with here: a spreadsheet where each row contains one person’s answers, and each column contains all the answers to a particular question. Here’s a snippet of our data set; yours will probably look similar.

Now, let’s dive into analyzing this data! We’ll start with a few quick steps to get ourselves set up for the analysis.

Step 1: Get the Survey Responses as a CSV File

To analyze our survey data using code, we need to get it in the form of a .csv file. If you’d like to work through this tutorial using the same data set we’re using, you can grab the 2019 StackOverflow Developer Survey results data here, and it comes as already prepared as a CSV file (inside of a .zip file).

If you’d like to start working with your own data, here’s how you can get it in CSV form:

- If you ran an online survey, you can probably download a CSV directly from the survey service you used. Typeform and many other online survey tools will allow you to download a CSV that contains all of your survey responses, which makes things nice and simple.

- If you used Google Forms for your survey, your data will be available in the form of a Google Sheet online. In the Google Sheets interface, click

File > Downloadand then selectComma-separated values (.csv, current sheet)to download your data in CSV format. - If you’ve collected your data some other way but you have it in spreadsheet format, you can save spreadsheets as CSV files from Excel or pretty much any other spreadsheet program. In Excel, you’ll want to navigate to

File > Save As. In theSave as type:field, selectCSV (Comma delimited) (*.csv)and then hit Save. In other spreadsheet software, the process should be very similar.

Before proceeding, you may want to open your CSV file with spreadsheet software and take a look at the format. Analysis will be simplest if it looks like the snippet we looked at earlier: questions in the first row of the spreadsheet, and respondent answers in every subsequent row. If your data has, for example, some extra rows at the top, it’s best to delete these rows before proceeding so that the first row in your data set is your survey questions and every subsequent row is one respondent’s answers.

Step 2: Set Up Your Coding Environment

(If you already have Anaconda installed and are familiar with Jupyter Notebooks, you can skip this step.)

The next step is setting up a tool called Jupyter Notebooks. Jupyter Notebooks are a popular tool for data analysis because they’re quick to set up and very convenient to use. We’ve written an in-depth Jupyter Notebooks tutorial that has a lot of detail, but we’ll cover what you you need to get up and running right here.

First, head to the Anaconda website. Scroll down slightly, select your computer’s operating system, and then click Download for the Python 3.7 version.

Once the file has downloaded, open it and follow the prompts to install it on your computer in the location of your choice. The default options will be fine if you’re not sure what you need.

After the install has completed, open the Anaconda Navigator app. You’ll be able to find this in whatever directory you just installed Anaconda, or simply by searching for “Anaconda” on your computer. You may see a few screens flash as the app opens, and then you’ll see this:

Click “Launch” under that center option, Jupyter Notebook. That will open a new tab in your web browser. From there, click “New” in the top right, and then under “Notebook” in the drop-down menu, click “Python 3”.

Presto! You’ve opened a new Jupyter notebook and we’re ready to start writing some code!

Step 3: Getting Our Survey Data Into Python

The first two lines of code we write will allow us to get our data set into Python and our Jupyter Notebook so that we can start working with it.

We’ll start by importing a Python library called pandas, and nicknaming it pd so that we can refer to it easily in our code. To do this, we’ll use the syntax import pandas as pd. This code tells Python to import the pandas library, and then tells it that when we use the letters pd, we want it to refer to that pandas library.

Python libraries are kind of like browser plugins; they add extra features and functionality so that we can do more with Python. Pandas is a very popular library for data analysis, and it will make our analysis work easier.

Using the “nickname” pd isn’t mandatory, but it’s a convention that’s common among pandas users, so getting used to using it will make it easier to read other people’s code.

Once we’ve imported pandas, we need to read our CSV to create what’s called a pandas DataFrame. A DataFrame is simply a table of data that we can manipulate with pandas. We’ll see what a DataFrame looks like visually in a moment, but to get our data into Python and pandas, we need to do two things:

- Read the CSV file we downloaded, which we can do using a pandas function called

.read_csv() - Assign the CSV data to a variable so that we can refer to it easily

Functions are bits of code that perform actions on an input. In this case, we’ll be inputting the file name for our CSV file, and then the .read_csv() function will automatically parse that file as a pandas DataFrame for us.

You can think of a variable in Python as sort of like a book title. Titles make referring to books easy — we can just say To Kill a Mockingbird and people will know what we’re talking about, so we don’t have to recite the entire book. Variables work similarly. They’re like short titles we can use to refer Python to much bigger pieces of information (like the survey data we’re trying to analyze) without having to restate all that information.

We do have to tell Python what we’re talking about the first time we use a variable, though. We’ll use the variable name df (short for DataFrame) for our survey data.

So, the code we’ll use to read our data set looks like this: df = pd.read_csv('survey_results_public.csv'). Here’s what that code is telling Python, from left to right:

df =tells Python we’re creating a new variable calleddf, and when you seedf, please refer to the following information:pdtells Python to look at the pandas library we imported earlier..read_csv('survey_results_public.csv')tells Python to use the function.read_csv()to read the filesurvey_results_public.csv.

Note that if your CSV file isn’t stored in the same folder as the Jupyter Notebook you’re working in, you’ll need to specify the file path for your data set. This will vary depending on when you’ve saved it, but it will probably look something like this: df = pd.read_csv('C://Users/Username/Documents/Filename.csv').

That’s more than enough preamble. Let’s run our code by typing this into the first cell of our Jupyter Notebook and then clicking the Run button:

import pandas as pd

df = pd.read_csv('survey_results_public.csv')But wait, nothing happened! That’s because we didn’t actually tell Python to give us any kind of response. Did our code actually work?

To check, let’s use another pandas method called .head(). This one will show us the first few rows of a DataFrame. We can specify how many rows we want to see by putting a number between those parentheses, or we can simply leave it as is and it will display the first five rows.

We do need to tell .head() what DataFrame to look at, though, so we’ll use the syntax df.head(). The df tells Python we want to look at that DataFrame we just made with our CSV data, the . tells Python that we’re going to do something to that DataFrame, and then the head() tells Python what we want to do: display the first five rows.

df.head()| Respondent | MainBranch | Hobbyist | OpenSourcer | OpenSource | Employment | Country | Student | EdLevel | UndergradMajor | … | WelcomeChange | SONewContent | Age | Gender | Trans | Sexuality | Ethnicity | Dependents | SurveyLength | SurveyEase | |

| 0 | 1 | I am a student who is learning to code |

Yes | Never | The quality of OSS and closed source software … |

Not employed, and not looking for work |

United Kingdom |

No | Primary/elementary school |

NaN | Just as welcome now as I felt last year |

Tech articles written by other developers;Indu… |

14.0 |

Man |

No | Straight / Heterosexual |

NaN | No | Appropriate in length |

Neither easy nor difficult |

|

| 1 | 2 | I am a student who is learning to code |

No | Less than once per year |

The quality of OSS and closed source software … |

Not employed, but looking for work |

Bosnia and Herzegovina |

Yes, full-time |

Secondary school (e.g. American high school, G… |

NaN | … | Just as welcome now as I felt last year |

Tech articles written by other developers;Indu… |

19.0 | Man | No | Straight / Heterosexual |

NaN | No |

Appropriate in length |

Neither easy nor difficult |

| 2 | 3 | I am not primarily a developer, but I write co… |

Yes | Never | The quality of OSS and closed source software … |

Employed full-time |

Thailand | No | Bachelor’s degree (BA, BS, B.Eng., etc.) |

Web development or web design |

… | Just as welcome now as I felt last year |

Tech meetups or events in your area;Courses on… |

28.0 | Man | No | Straight / Heterosexual |

NaN | Yes | Appropriate in length |

Neither easy nor difficult |

| 3 | 4 | I am a developer by profession |

No | Never | The quality of OSS and closed source software … |

Employed full-time |

United States |

No | Bachelor’s degree (BA, BS, B.Eng., etc.) |

Computer science, computer engineering, or sof… |

… |

Just as welcome now as I felt last year |

Tech articles written by other developers;Indu… |

22.0 | Man | No | Straight / Heterosexual |

White or of European descent |

No | Appropriate in length |

Easy |

| 4 | 5 | I am a developer by profession |

Yes | Once a month or more often |

OSS is, on average, of HIGHER quality than pro… |

Employed full-time |

Ukraine |

No | Bachelor’s degree (BA, BS, B.Eng., etc.) |

Computer science, computer engineering, or sof… |

… |

Just as welcome now as I felt last year |

Tech meetups or events in your area;Courses on… |

30.0 | Man |

No | Straight / Heterosexual |

White or of European descent;Multiracial |

No | Appropriate in length |

Easy |

There’s our DataFrame! Looks a lot like a spreadsheet, right? We can see that some of the answers look truncated, but don’t worry, that data hasn’t been lost, it just isn’t being displayed visually.

We might also notice a few other weird things in this data, like the appearance of NaN in some rows. We’ll deal with that later, but first, let’s take a closer look at our data set using another pandas feature called .shape to give us the size of our data set.

df.shape(88883, 85)

This tells us that we have 88,883 rows and 85 columns in our data set. These numbers should correspond exactly to the number of respondents (rows) and questions (columns) from the survey. If they do, it means that all of our survey data is now stored in that DataFrame and ready for analysis.

Step 4: Analyzing Multiple Choice Survey Questions

How you proceed with your analysis from here is really up to you, and with 85 questions, there are tons of different things we could do with this data. But let’s start with something simple: a Yes or No question.

(Note: it can be tough to figure out what some of the column names mean in StackOverflow’s data, but the dataset download comes with an accompanying schema file that includes the full text of each question, so you may need to refer to that from time to time to match column names with the questions respondents actually saw.)

One of the more unique Yes or No questions on the survey was this: “Do you think people born today will have a better life than their parents?”

It might be interesting to see how optimistic StackOverflow’s community is feeling about the future! We can do that with a handy pandas function called value_counts().

The value_counts() function looks at a single column of data at a time and counts how many instances of each unique entry that column contains. (A single column is called a Series in pandas lingo, so you may see this function referred to as Series.value_counts().)

To use it, all we need to do is tell Python the specific Series (a.k.a. column) we want to look at, and then tell it to execute .value_counts(). We can specify a specific column by writing the name of the dataframe, followed by the name of the column inside brackets, like so: df['BetterLife'].

(Just like with our list, since 'BetterLife' is a string of characters rather than a number or a variable name, we need to put it inside apostrophes or quotation marks to keep Python from getting confused).

Let’s run that code and see what we get back!

df['BetterLife'].value_counts()Yes 54938 No 31331 Name: BetterLife, dtype: int64

Nice! Now we know that of the 88,883 respondents in our data set, 54,938 think the future is looking bright.

It might be more helpful to see that represented as a percentage of the total number of responses, though. Thankfully, we can do that by simply adding an input inside the value_counts() parentheses. Function inputs are called arguments in programming, and can use them to pass information to the function that affects what it will output.

In this case, we’re going to pass an argument that looks like this: normalize=True. The pandas documentation has some details about this, but long story short: value_counts will assume we want normalize to be False if we don’t put anything inside the function, and thus it will return the raw counts for each value.

However, if we set normalize to True, it will “normalize” the counts by representing them as a percentage of the total number of rows in the pandas series we’ve specified.

df['BetterLife'].value_counts(normalize=True)Yes 0.636822 No 0.363178 Name: BetterLife, dtype: float64

Now we can see that roughly 64% of developers see life getting better for kids being born today, and roughly 36% think kids today are in for a similar or worse quality of life.

Let’s try the same thing on another interesting Yes/No question: “Do you believe that you need to be a manager to make more money?” Many Silicon Valley companies claim that management isn’t the only path to financial success, but are developers buying it?

df['MgrMoney'].value_counts(normalize=True)No 0.512550 Yes 0.291528 Not sure 0.195922 Name: MgrMoney, dtype: float64

Apparently, most developers aren’t buying it. In fact, less than 30% believe they can make more money without moving into management!

We can also see that although this was a Yes/No question, StackOverflow included a third response option (‘Not Sure’) and our code still worked the same way. value_counts() will work for any multiple choice question.

Step 5: Plotting Multiple Choice Answers

Looking at the numbers can be illuminating, but humans are visual creatures. And thankfully, it’s quite simple for us to chart the responses to these questions visually!

Since we’re writing our code in Jupyter Notebooks, we’ll start with a line of Jupyter magic:

%matplotlib inlineThis code isn’t part of our analysis, it’s just an instruction that tells our Jupyter Notebook to display our charts inline, right in the notebook we’re working in.

Once we’ve run that, all we need to do is add a little snippet to the end of our code: .plot(kind='bar'). This tells Python to take whatever we’ve just given it and plot the results in a bar graph. (We could replace 'bar' with 'pie' to get a pie chart instead, if we wanted).

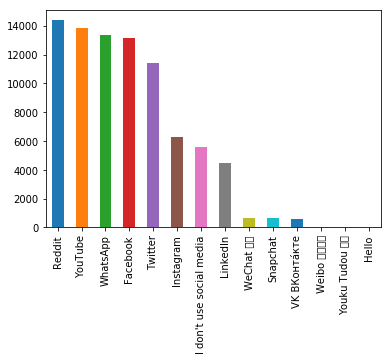

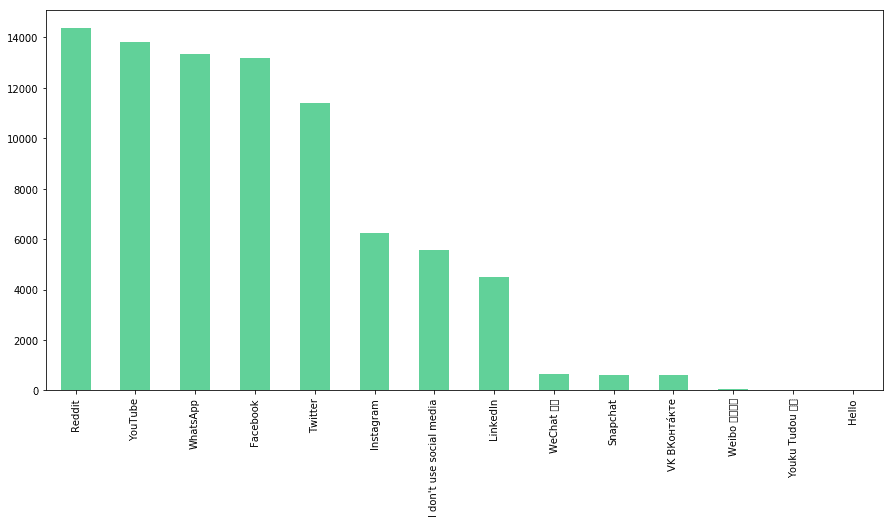

Let’s try it out on a multiple choice question about developers’ preferred social media sites:

df['SocialMedia'].value_counts().plot(kind="bar")<matplotlib.axes._subplots.AxesSubplot at 0x2142658a9b0>

That’s already pretty cool, but we can make this look even better very quickly by adding a couple more arguments to that .plot() function. Specifically, let’s add two:

- An argument called

figsizethat defines the size of the chart in the form of a width and height in inches (i.e.(15,7) - An argument called

colorthat defines the color of the bars.

Let’s use #61D199, Dataquest’s green color:

df['SocialMedia'].value_counts().plot(kind="bar", figsize=(15,7), color="#61d199")<matplotlib.axes._subplots.AxesSubplot at 0x214268d4048>

There’s a lot more we could do with this chart, but for our purposes here, that’s good enough — we’re just after quick visualizations!

Step 6: Analyzing Subsets of Survey Data

Of course, we’ll often want to go much deeper than printing out simple counts of our results! We can use Python and pandas to easily select and analyze very granular subsets of data, according to virtually any contions we set.

For example, we saw earlier that there’s a minority, but a significant minority, of StackOverflow users expect the world to get worse, based on their answers to the 'BetterLife' question we analyzed. Might this subset of users be, for example, older or younger than the more optimistic devs?

We can find out using what’s called a Boolean to sort our data and show only the responses of people who answered ‘Yes’ or ‘No’ to that question.

We’ll create our Boolean by specifying the DataFrame and Series (i.e., column) we want to look at, and then filtering for only the responses in that Series that meet a certain criteria by using a conditional operator.

This time, let’s run the code first, and then we’ll take a closer look at what it’s doing:

said_no = df[df['BetterLife'] == 'No']

said_no.head(3)| Respondent | MainBranch | Hobbyist | OpenSourcer | OpenSource | Employment | Country | Student | EdLevel | UndergradMajor | … | WelcomeChange | SONewContent | Age | Gender | Trans | Sexuality | Ethnicity | Dependents | SurveyLength | SurveyEase | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 5 | 6 | I am not primarily a developer, but I write co… | Yes | Never | The quality of OSS and closed source software … | Employed full-time | Canada | No | Bachelor’s degree (BA, BS, B.Eng., etc.) | Mathematics or statistics | … | Just as welcome now as I felt last year | Tech articles written by other developers;Indu… | 28.0 | Man | No | Straight / Heterosexual | East Asian | No | Too long | Neither easy nor difficult |

| 6 | 7 | I am a developer by profession | No | Never | The quality of OSS and closed source software … | Independent contractor, freelancer, or self-em… | Ukraine | No | Bachelor’s degree (BA, BS, B.Eng., etc.) | Another engineering discipline (ex. civil, ele… | … | A lot more welcome now than last year | NaN | 42.0 | Man | No | Straight / Heterosexual | White or of European descent | Yes | Appropriate in length | Neither easy nor difficult |

| 8 | 9 | I am a developer by profession | Yes | Once a month or more often | The quality of OSS and closed source software … | Employed full-time | New Zealand | No | Some college/university study without earning … | Computer science, computer engineering, or sof… | … | Just as welcome now as I felt last year | NaN | 23.0 | Man | No | Bisexual | White or of European descent | No | Appropriate in length | Neither easy nor difficult |

3 rows × 85 columns

To confirm that this worked, we can check the size of this dataset with .shape, and compare the number of rows in said_no to the number of people who answered ‘No’ to that question, using our old friend .value_counts().

said_no.shape(31331, 85)

df['BetterLife'].value_counts()Yes 54938 No 31331 Name: BetterLife, dtype: int64

We can see that our new dataframe has 31,331 rows, the same number of people who answered ‘No’ to the better life question. We can further confirm the filter has worked by running a quick value_counts() on this new dataframe:

said_no['BetterLife'].value_counts()No 31331 Name: BetterLife, dtype: int64

Perfect. OK, let’s backtrack and take a deeper look at this bit of code:

said_no = df[df['BetterLife'] == 'No']What’s happening here? From left to right:

said_no =is telling Python to create a new variable called said_no, and make it equal to whatever’s on the right side of the equals sign.dfis telling Python to makesaid_noequivalent to thedfDataFrame (our original data set), but then…[df['BetterLife'] == 'No']is telling Python to only include rows fromdfin which the answer in the'BetterLife'column is equal to'No'.

Note the double equals sign here. In Python, we use a single equals sign when we want to assign a value, i.e. a = 1. We use a double equals sign to check equivalence, and what Python actually returns is either True or False. In this case, we’re telling Python to only return rows in which df['BetterLife'] == 'No' returns True.

Now that we have this dataframe containing only the ‘No’ answerers, let’s make an equivalent one for the ‘Yes’ folks and then run some comparisons.

said_yes = df[df['BetterLife'] == 'Yes']Now, let’s see what we can determine by comparing these groups with how people answered the question about their age. The answers to that question are integers, so we can perform mathematical operations on them. One quick check we can run is to see whether there’s a significant difference in the mean or median ages of people who said ‘Yes’ versus ‘No’ to the better life question.

We’ll use a couple of new tricks in this code: .mean() and .median() functions, which will automatically calculate the mean and median, respectively, of a column of numerical data. We’re also going to enclose our calculations within a print() command so that all four numbers will be printed at once.

print(said_no['Age'].mean(),

said_yes['Age'].mean(),

said_no['Age'].median(),

said_yes['Age'].median()

)31.85781027611728 29.439848681016347 30.0 28.0

As we can see here, the pessimists tended to be slightly older, but not by a significant margin. It might be interesting to look at how specific age groups answered this question and whether that differed. If the pessimists tend to be slightly older, will we see a significant difference between the answers from devs over age 50 and under age 25, for example?

We can find out using the same Boolean trick we’ve been using, but instead of using == to check our conditional, we’ll use >= and <= since we want to filter for respondents whose 'Age' were 50 and up or 25 and down.

over50 = df[df['Age'] >= 50]

under25 = df[df['Age'] < = 25]</code>print(over50['BetterLife'].value_counts(normalize=True))

print(under25['BetterLife'].value_counts(normalize=True))No 0.514675 Yes 0.485325 Name: BetterLife, dtype: float64 Yes 0.704308 No 0.295692 Name: BetterLife, dtype: float64

Interesting! It looks like the oldest devs really are quite pessimistic, with slightly more than half of them saying that children born today won’t have a better life than their parents. Young devs, on the other hand, seem to be more optimistic than average.

However, it’s worth checking just how large the samples we’re dealing with here actually are. We can do this quickly using the len() function, which will count the number of items in a list or rows in a DataFrame.

print(len(over50))

print(len(under25))3406 26294

Neither group is huge compared to the total datase, but both are big enough that this might represent a real split.

Step 7: Filtering More Specific Subsets

Thus far, we’ve been filtering our data using Booleans one at a time to look at people who answered a specific question in a specific way. But we can actually chain Booleans together to very quickly filter down to a very granular level.

To do this, we’ll use a couple of pandas Boolean operators, & and &~.

&, as we might expect, allows us to string two Booleans together and return True only if both conditions are true. So in our context, if we’re creating a new DataFrame by filtering rows from our original DataFrame, using & between two Booleans will allow us to add only the rows that meet both conditions.

We can think about &~ as meaning “and not.” If we use &~ between two Booleans, it will return a row only when the first Boolean evaluates to True but the second is False.

Syntax-wise, the only change from using a single Boolean is that when we’re stringing together more than one, we need to enclose each Boolean in parentheses, so the basic format looks like this: df[(Boolean 1) & (Boolean 2)]

Let’s try this out by filtering for people who answered ‘Yes’ to the better life question and who live in India:

filtered_1 = df[(df['BetterLife'] == 'Yes') & (df['Country'] == 'India')]We can confirm that this worked as expected by quickly checking out the value counts for the answers we filtered for:

print(filtered_1['BetterLife'].value_counts())

print(filtered_1['Country'].value_counts())Yes 6136 Name: BetterLife, dtype: int64 India 6136 Name: Country, dtype: int64

As we can see, our new DataFrame, filtered_1, contains only people in India who gave the optimistic answer about the future.

Just now, we combined two Booleans. But there’s no limit to the number of these we can string together, so let’s try a more complicated drill-down. We’re going to filter for only people who:

- Answered ‘Yes’ to the better life question

- Are over age 50

- Live in India

- Do NOT code as a hobby

- Contribute to open source projects at least occasionally

Here we go:

filtered = df[(df['BetterLife'] == 'Yes') & (df['Age'] >= 50) & (df['Country'] == 'India') &~ (df['Hobbyist'] == "Yes") &~ (df['OpenSourcer'] == "Never")]

filtered| Respondent | MainBranch | Hobbyist | OpenSourcer | OpenSource | Employment | Country | Student | EdLevel | UndergradMajor | … | WelcomeChange | SONewContent | Age | Gender | Trans | Sexuality | Ethnicity | Dependents | SurveyLength | SurveyEase | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2201 | 2209 | I am not primarily a developer, but I write co… | No | Less than once a month but more than once per … | The quality of OSS and closed source software … | Independent contractor, freelancer, or self-em… | India | No | Bachelor’s degree (BA, BS, B.Eng., etc.) | Another engineering discipline (ex. civil, ele… | … | Somewhat more welcome now than last year | NaN | 55.0 | Man | No | Straight / Heterosexual | Black or of African descent;East Asian;Hispani… | Yes | Too long | Neither easy nor difficult |

| 18401 | 18504 | I am not primarily a developer, but I write co… | No | Less than once per year | The quality of OSS and closed source software … | Independent contractor, freelancer, or self-em… | India | No | Other doctoral degree (Ph.D, Ed.D., etc.) | A business discipline (ex. accounting, finance… | … | Just as welcome now as I felt last year | Tech articles written by other developers;Indu… | 55.0 | Man | No | Straight / Heterosexual | South Asian | Yes | Too long | Easy |

| 52359 | 52695 | I am a developer by profession | No | Less than once per year | The quality of OSS and closed source software … | Employed full-time | India | No | Bachelor’s degree (BA, BS, B.Eng., etc.) | A business discipline (ex. accounting, finance… | … | Somewhat more welcome now than last year | Tech articles written by other developers | 53.0 | Man | No | Straight / Heterosexual | NaN | Yes | Appropriate in length | Neither easy nor difficult |

| 64464 | 64877 | I used to be a developer by profession, but no… | No | Once a month or more often | OSS is, on average, of HIGHER quality than pro… | Not employed, but looking for work | India | Yes, full-time | Master’s degree (MA, MS, M.Eng., MBA, etc.) | Computer science, computer engineering, or sof… | … | Just as welcome now as I felt last year | Courses on technologies you’re interested in | 95.0 | Man | No | Straight / Heterosexual | NaN | No | Too long | Neither easy nor difficult |

| 75382 | 75856 | I am a developer by profession | No | Less than once per year | OSS is, on average, of LOWER quality than prop… | Employed full-time | India | Yes, full-time | Bachelor’s degree (BA, BS, B.Eng., etc.) | Computer science, computer engineering, or sof… | … | A lot less welcome now than last year | Tech articles written by other developers;Indu… | 98.0 | Man | No | Bisexual | South Asian | Yes | Appropriate in length | Neither easy nor difficult |

5 rows × 85 columns

Now we’ve started to see the true power of using programming to analyze survey data! From an initial data set of more than 88,000 responses, we’ve located a very specific audience of just five people!

Trying to filter down to such a specific audience quickly would be fairly challenging using spreadsheet software, but here we’ve built and run the filter with a single line of code.

Step 8: Analyzing Multi-Answer Survey Questions

Another thing we might need to do in the context of analyzing survey data is deal with multi-answer questions. On this survey, for example, respondents were asked what prorgramming languages they worked with and directed to select as many answers as apply.

Different surveys may handle the answers to these sorts of questions differently. Sometimes, each answer may be in a separate column, or all of a respondent’s answers could be stored in a single column with some sort of delimiter between each answer. So our first task is to take a look at the relevant column to see how answers were recorded in this particular survey.

df["LanguageWorkedWith"].head()0 HTML/CSS;Java;JavaScript;Python 1 C++;HTML/CSS;Python 2 HTML/CSS 3 C;C++;C#;Python;SQL 4 C++;HTML/CSS;Java;JavaScript;Python;SQL;VBA Name: LanguageWorkedWith, dtype: object

In this survey, we can see that the respondents’ answers were stored in a single column, using ; as a delimiter.

Since we’re working with Python in this tutorial, let’s start analyzing this survey data to see how many developers are using Python.

One way of doing this is to see how many rows contain the string Python in this column. Pandas has a built-in method for doing this with a series called Series.str.contains. This will look at each row in the series (which in this case will be our LanguageWorkedWith column) and determine whether it contains whatever string we give it as an argument. If the row does contain that string argument, it will return True, otherwise it will return False.

Knowing that, we can quickly figure out how many respondents included Python in their languages used answer. We’ll tell Python the series we want to look at (df["LanguageWorkedWith"]) and then use str.contains() with the argument Python. This will give us a Boolean series, and from there all we have to do is count the number of “True” responses using value_counts().

And once again, we can use the argument normalize=True to view the results as percentages rather than seeing the raw counts.

python_bool = df["LanguageWorkedWith"].str.contains('Python')

python_bool.value_counts(normalize=True)False 0.583837 True 0.416163 Name: LanguageWorkedWith, dtype: float64

That’s a quick-and-dirty way of getting our answer (almost 42% of all developers use Python!). But often, we may want to be able to break the answers in this column down for deeper analysis.

Say, for example, we wanted to figure out how often each language was mentioned. To do that using the code above, we’d need to know every single possible response, and then we’d need to run something similar to the above code for each potential answer.

In some cases, that may be doable, but if there are a ton of potential answers (for example, if respondents were allowed to select an “Other” option and fill in their own choice), that approach might not be feasible. Instead, we’ll need to separate out each individual answer using the delimiter we found earlier.

Just as we used Series.str.contains to see if a string in a pandas series contained a substring, we can use Series.string.split to split each row in the series based a delimited we pass to that function as an argument. In this case, we know the delimiter is ;, so we can use .str.split(';').

We’re also going to add an extra argument, expand=True, to str.split(). This will create a new dataframe from our series by making each language its own column (each row still represents one respondent). You can read more about how this works here.

Let’s run that code on the 'LanguageWorkedWith' column and store the results as a new pandas series called lang_lists.

lang_lists = df["LanguageWorkedWith"].str.split(';', expand=True)

lang_lists.head()| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | … | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | HTML/CSS | Java | JavaScript | Python | None | None | None | None | None | None | … | None | None | None | None | None | None | None | None | None | None |

| 1 | C++ | HTML/CSS | Python | None | None | None | None | None | None | None | … | None | None | None | None | None | None | None | None | None | None |

| 2 | HTML/CSS | None | None | None | None | None | None | None | None | None | … | None | None | None | None | None | None | None | None | None | None |

| 3 | C | C++ | C# | Python | SQL | None | None | None | None | None | … | None | None | None | None | None | None | None | None | None | None |

| 4 | C++ | HTML/CSS | Java | JavaScript | Python | SQL | VBA | None | None | None | … | None | None | None | None | None | None | None | None | None | None |

5 rows × 28 columns

As we can see, our string splitting worked. Each row in our series is now a row in the new data frame, and each language has been split from the others into unique columns.

But we wanted to figure out how many times each language was mentioned, and we’re not done yet. value_counts() won’t help us here — we can only use that on a pandas Series, not a DataFrame.

To be able to see the number of times each language was mentioned in total, we have to do a bit more work. There are a number of ways we could approach this problem, but here’s one:

- Use

df.stack()to stack this DataFrame, slicing each column and then stacking them on top of each other so that every data point in the DataFrame appears in a single pandas Series. - Use

value_counts()on this new “stacked” series to get the total number of times each language is mentioned.

This is a bit complicated, so let’s try this with a simpler example first so we can observe what’s happening visually. We’ll start with a DataFame that’s very similar to lang_lists, just a lot shorter so that it’s easier to follow.

Here’s our DataFrame (since this isn’t part of our real data set, you probably don’t want to try to code along with this bit, just read the code on this page and try to follow what’s happening):

new_df| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 0 | Python | Javascript | SQL | NaN |

| 1 | HTML/CSS | Javascript | Java | C# |

| 2 | R | Python | NaN | NaN |

First, we’ll use .stack() to slice this dataframe apart and stack the columns on top of one another. Note that when this happens, the null values that were inserted into the dataframe above are automatically removed.

new_df.stack()0 0 Python 1 Javascript 2 SQL 1 0 HTML/CSS 1 Javascript 2 Java 3 C# 2 0 R 1 Python dtype: object

Here’s an animated view of what’s happening here:

Now that we’ve got every answer stacked into a single series, we can use value_counts() to count the totals:

new_df.stack().value_counts()Javascript 2 Python 2 Java 1 C# 1 SQL 1 HTML/CSS 1 R 1 dtype: int64

That’s the raw count we need! Note that we can’t use normalize=True to get a percentage-based readout here because that calculation is based on the length of the series, and this series has nine rows whereas our original data set only has three responses.

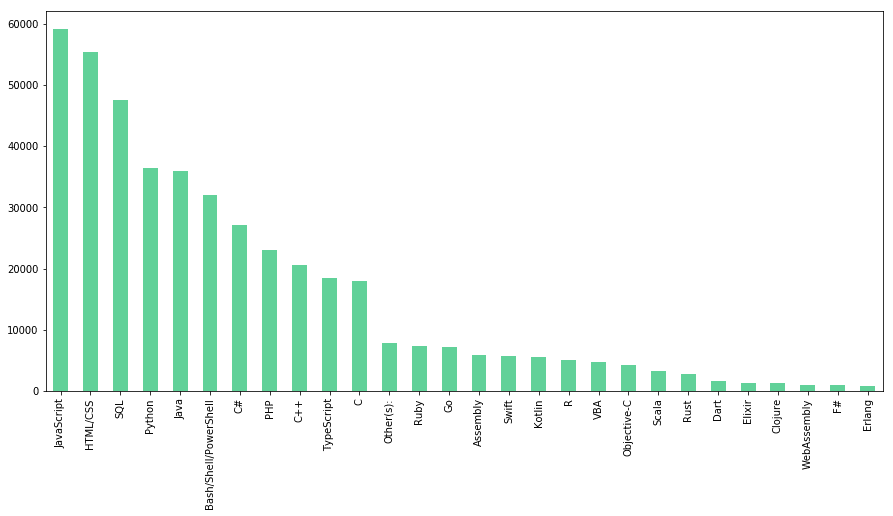

Now that we know how to do this, though, let’s try the same thing on our real data set using the lang_lists series we already created.

lang_lists.stack().value_counts()JavaScript 59219 HTML/CSS 55466 SQL 47544 Python 36443 Java 35917 Bash/Shell/PowerShell 31991 C# 27097 PHP 23030 C++ 20524 TypeScript 18523 C 18017 Other(s): 7920 Ruby 7331 Go 7201 Assembly 5833 Swift 5744 Kotlin 5620 R 5048 VBA 4781 Objective-C 4191 Scala 3309 Rust 2794 Dart 1683 Elixir 1260 Clojure 1254 WebAssembly 1015 F# 973 Erlang 777 dtype: int64

That’s the information we were looking for! Now, let’s put a final touch on our analysis project by charting this information visually, using the same approach to plotting we used earlier.

lang_df.stack().value_counts().plot(kind='bar', figsize=(15,7), color="#61d199")<matplotlib.axes._subplots.AxesSubplot at 0x2144192ea20>

Awesome!

Analyzing Survey Data: Next Steps

In this tutorial, we’ve covered some of the basic ways you can analyze survey data using Python. And although it’s a long read, if you look back, you’ll see that we’ve actually only used a few lines of code. Once you get the hang of it, doing this sort of analysis is actually very quick!

And of course, we’ve only scratched the surface here. There’s much, more more that you can do, particularly with a data set this large. If you’re looking for a challenge, here are some questions you could try to answer.

- If you work out the percentages based on our counts above divided by total survey respondents (88,883), you’ll notice that they’re not quite the same as the numbers StackOverflow reported. This is because a small number of respondents didn’t answer the language question. How could you find and count these respondents to find the exact percentage of respondents who listed each language?

- The results above give us the most popular languages used among all developers surveyed, but a different question on this survey identifies different types of roles, including data scientists, data analysts, etc. Can you create a chart that shows the langauges that are most popular among data professionals?

This article has been published from the source link without modifications to the text. Only the headline has been changed.

Source link