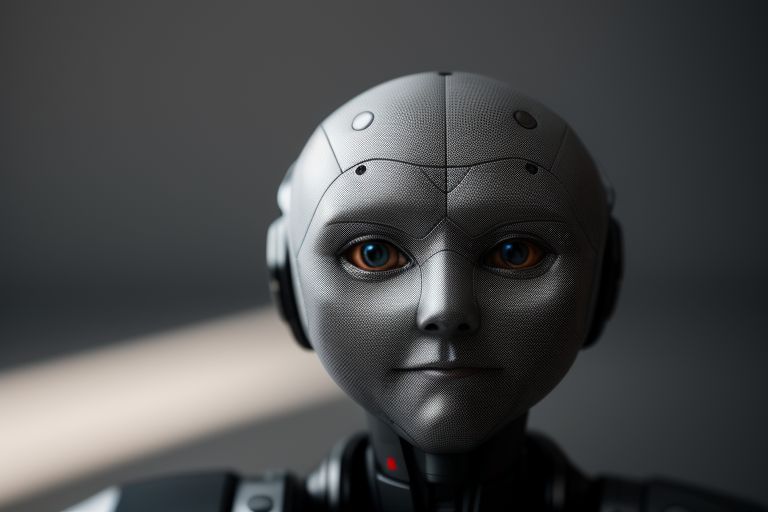

Clones, digital duplicates and synthetic actors

One issue with generative AI is that it can be used to mimic real people and trick you into believing they are saying or doing things they aren’t. Deepfakes, as the name suggests, are made to mislead, and that is the problem with them. In October, actor Tom Hanks warned that an advertisement promoting dental plans was using an AI clone of him without his approval. President Joe Biden joked about a deepfake doppelgänger mimicking his voice while introducing his Executive Order, which places restrictions on the development and use of AI.

Beyond advertisements and disinformation campaigns, Hollywood actors and performers worry that studios and content creators may use genAI to create digital doubles or synthetic performers instead of using (and paying) humans. The resolution of the Hollywood actors’ strike includes restrictions on the use of genAI in the business, requiring producers to get actors’ permission before creating and using digital replicas of them.

In an earlier post on X (formerly known as Twitter), actor Justine Bateman, who served as the union’s advisor for the genAI negotiations with Hollywood, summed up the bigger issue.

Winning an audition could become extremely challenging, according to Bateman, because you will now have to compete not only with the actors who fit the role but also with every actor, living or dead, who has offered to rent out their “digital double,” for an inordinate amount of money, to fit the part. You’ll also be up against an endless supply of AI Objects that streamers and studios are free to use. And if the entire cast is made up of AI Objects rather than real actors, there is no need for a set or crew at all.

To what extent can artificial intelligence be used to create digital twins and phony actors? Three noteworthy AI developments that made the news this past week illustrate the problem.

The first: According to one report, Charlie Holtz, a “hacker in residence” at the machine-learning startup Replicate, developed an AI clone of British biologist and natural historian Sir David Attenborough. Holtz demonstrated on X how he was able to mimic the documentarian’s distinctive voice. The result shows what happens when David Attenborough narrates your life.

Holtz shared the code he used to copy Attenborough’s voice. As of this writing, Attenborough had not responded to a request for comment. Meanwhile, Holtz’s experiment has received over 3.5 million views. One commenter was excited about hearing Attenborough “narrate videos of my baby learning how to eat broccoli.”

The second is an experimental music tool on YouTube called Dream Track, which lets you make your own songs using authorized AI clones of the voices of nine musicians, including Sia, John Legend and Demi Lovato. Dream Track, developed in partnership with Google’s DeepMind AI lab, is being tested by a select group of US creators, who can use it to create music for their YouTube Shorts by typing in an idea for a song and selecting one of the nine artists. The tool then produces an original Shorts soundtrack featuring the artist’s AI-generated voice.

Legend said in a testimonial posted on the YouTube blog that taking part in the Dream Track experiment is a chance to help shape possibilities for the future. He said he is glad to have a seat at the table as an artist and is excited to see what creators come up with.

Charli XCX came across as a little more circumspect in her endorsement. She said she was wary when YouTube first approached her and remains so, because artificial intelligence will change the world and the music business in ways we do not yet fully understand. She hopes the experiment will offer a small glimpse of the creative possibilities, and she is interested to see what comes of it.

You can listen to a sample featuring T-Pain that was created with the prompt “a sunny morning in Florida, R&B.” Another sample takes on Charlie Puth’s persona to perform an energetic acoustic ballad about how opposites attract.

The news about Dream Track coincided with YouTube’s announcement of its policies for “responsible AI innovation” on the platform. When uploading a video, creators will have to choose from a list of content labels to indicate whether it includes realistic altered or synthetic content. This is especially important when the content addresses sensitive topics such as elections, ongoing conflicts, public health emergencies or public officials.

The third comes from Meta: two genAI tools called Emu Video and Emu Edit. Emu Video is a “simple” text-to-video generator that creates an animated 4-second clip at 16 frames per second from text only, an image only, or both text and an image. Emu Edit provides an easy way to edit images. You can see for yourself how it works.

With Meta’s demo tool, you can choose from a set of images (a fawn Pembroke Welsh corgi, a panda wearing sunglasses and so on) and then use the prompts to make your character appear in Central Park, underwater, walking in slow motion or skateboarding, in an anime manga or photorealistic style.

“Oh, that’s an easy way to create a GIF,” you may be thinking. In the not-too-distant future, though, you might be able to insert a variety of characters into the tool and make a brief movie with just a few words.

Will AI travel with you? Kind of

Helping with travel planning—the labor-intensive and time-consuming process of creating a detailed itinerary—is one of the more common use cases for chatbots. The success of using genAI to handle that work for you has been the subject of numerous anecdotal reports, but as CNET’s Katie Collins points out, planning an itinerary involves more than just making a list of things to see and do.

Collins, who knows her hometown of Edinburgh, Scotland, well, wrote about using AI to plan a tour of the city. She tried several tools, including ChatGPT, GuideGeek, Roam Around, Wonderplan, Tripnotes and the Out of Office (OOO) app.

A good itinerary, she said, will arrange your day so that it makes thematic and geographical sense. Part of the adventure will be traveling from attraction A to attraction B; you’ll pass along a charming street or be treated to an unexpected view you might not otherwise have seen. It will also be thoughtfully timed, considering that by the third gallery of the day, even the most cultured among us will probably be experiencing museum fatigue.

Although chatbots can produce lists of well-known and popular attractions, Collins said that very few of the itineraries she asked AI to design for Edinburgh met this standard. And because AI is trained on historical data, it is backward-looking and could direct you to places that no longer exist.

As with most genAI output, you should confirm, double-check and cross-check what the AI tells you before you go. Collins advised caution in following its recommendations.

How serious is the hallucination problem?

Collins’ account brought to mind the problem of hallucinations, which occur when chatbots answer your questions with false information that sounds genuine. The problem persists in large language models, including those behind ChatGPT and Google Bard.

In an attempt to measure the severity of the issue, researchers at Vectara, a startup formed by former Google employees, discovered that chatbots fabricate information at least 3% of the time and as much as 27% of the time.

Vectara now publishes a “Hallucination Leaderboard” that assesses how often an LLM hallucinates when summarizing a document. As of November 1, OpenAI’s GPT-4 ranked best, with a hallucination rate of 3%, and Google’s PaLM 2 technology ranked worst, with a rate of 27.2%. The company says it will update the leaderboard regularly as its model and the LLMs are updated over time.
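To make the ranking concrete, here is a minimal Python sketch of how a hallucination rate can be computed and sorted into a leaderboard. It is not Vectara's method: the `SummaryResult` type, the function names and the summary counts are all hypothetical, and the counts were chosen only to reproduce the two rates quoted above.

```python
from dataclasses import dataclass


@dataclass
class SummaryResult:
    """Hypothetical record of one model's summarization run."""
    model: str
    summaries: int      # total summaries that were checked
    hallucinated: int   # summaries flagged as containing unsupported claims


def hallucination_rate(result: SummaryResult) -> float:
    """Share of checked summaries that contained a hallucination, in percent."""
    return 100.0 * result.hallucinated / result.summaries


def leaderboard(results: list[SummaryResult]) -> list[SummaryResult]:
    """Sort models from the lowest (best) to the highest (worst) rate."""
    return sorted(results, key=hallucination_rate)


# Assumed counts, picked only to match the 3% and 27.2% figures in the text.
results = [
    SummaryResult("PaLM 2", summaries=1000, hallucinated=272),
    SummaryResult("GPT-4", summaries=1000, hallucinated=30),
]

for rank, result in enumerate(leaderboard(results), start=1):
    print(f"{rank}. {result.model}: {hallucination_rate(result):.1f}%")
```

On a leaderboard like this, a lower rate is better, so the model with the smallest share of flagged summaries comes first.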

Microsoft introduces its AI chip

Microsoft unveiled the first of a planned line of Maia accelerators for artificial intelligence, saying the chip was designed to power its own cloud business and subscription software services rather than to be sold to other providers.

The Maia chip was designed to run large language models, the class of AI software behind Microsoft’s Azure OpenAI service, which grew out of the company’s partnership with OpenAI, the creator of ChatGPT. Delivering AI services can be ten times more expensive than delivering traditional services like search engines, a problem that Microsoft and other tech giants such as Alphabet are grappling with.

According to one report, Microsoft corporate vice president Rani Borkar said in an interview that the company is testing Maia 100 to see how well it can meet the demands of its AI chatbot for the Bing search engine (now called Copilot instead of Bing Chat), the GitHub Copilot coding assistant and GPT-3.5-Turbo, a large language model from Microsoft-backed OpenAI.

With 105 billion transistors, the Maia 100 is among the largest chips made with 5-nanometer process technology, a name that nominally refers to the chip’s smallest features being five billionths of a meter in size.

This week’s AI term: deep learning

When people discuss AI, you might hear about how it will (or won’t) work like the human brain. That is where the term “deep learning” comes in.

Deep learning is a subfield of machine learning that focuses on the development and use of artificial neural networks to model and solve complex tasks. It is inspired by the structure and function of the human brain, particularly the interconnected networks of neurons.

In traditional machine learning, feature engineering plays a crucial role, where experts manually design features to represent the input data. In contrast, deep learning algorithms learn hierarchical representations of data through neural networks, eliminating the need for explicit feature engineering.

The term “deep” in deep learning refers to the use of deep neural networks, which are neural networks with multiple layers (deep architectures). These deep architectures enable the model to learn intricate patterns and representations from the input data. Each layer in a deep neural network processes the input data at a different level of abstraction, allowing the network to automatically learn and extract relevant features.
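The idea of stacked layers can be sketched in a few lines of Python. This is an illustrative toy, not how production systems are built: the layer sizes, the random (untrained) weights and the helper names `dense`, `relu`, `make_layer` and `forward` are all assumptions made for the example. It only shows how each layer transforms the output of the one before it.

```python
import random


def relu(values):
    """Non-linearity applied after each layer: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def make_layer(n_in, n_out, rng):
    """Create random, untrained weights for a layer mapping n_in values to n_out values."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(inputs, layers):
    """Pass the input through every layer in turn; each layer sees the previous layer's output."""
    activations = inputs
    for weights, biases in layers:
        activations = relu(dense(activations, weights, biases))
    return activations


rng = random.Random(0)
layer_sizes = [4, 8, 8, 2]  # 4 inputs, two hidden layers of 8 units, 2 outputs
layers = [
    make_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

output = forward([0.5, -1.2, 3.0, 0.7], layers)
print(len(layers), "layers ->", len(output), "outputs")
```

In a real deep learning system the weights would be learned from data during training rather than drawn at random, and a library such as PyTorch or TensorFlow would handle the arithmetic.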

Deep learning has achieved remarkable success in various applications, such as image and speech recognition, natural language processing, and playing games. Convolutional Neural Networks (CNNs) are commonly used for image-related tasks, while Recurrent Neural Networks (RNNs) and Transformer models are popular for sequential data and natural language processing.

Training deep learning models often requires large amounts of labeled data and substantial computational resources, but advancements in hardware, algorithms, and techniques have contributed to the widespread adoption and success of deep learning in recent years.