“As soon as it works, no one calls it AI anymore.”

— John McCarthy

Interest in AI and press coverage of it come and go, but the reality is that AI systems have been with us for quite some time. Because many of these systems are narrow AI and actually work, they are often not thought of as AI at all. But they are smart and they help us, and that’s all that counts.

Recognizing AI Is Everywhere

When Netflix and Amazon suggest movies or books for you, they are doing something quite human. They look at what you have liked in the past (evidenced by what you have viewed or purchased), find people with similar viewing or purchasing profiles, and suggest things those people liked that you haven’t seen yet. Some interesting nuances exist here, of course, but notice that this is what you might do yourself if you saw that two of your friends shared common interests and used the likes and dislikes of one of them to figure out a gift for the other.

For now, don’t get caught up in thinking about how good or bad recommendation engines are. Instead, focus on the ability of these systems to build profiles, figure out similarities, and make predictions about one person’s likes and dislikes based on those of someone similar.
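The profile, similarity, and prediction steps described above can be sketched in a few lines of Python. This is only an illustration, not how Netflix or Amazon actually work: the `likes` data, the user names, and the choice of Jaccard similarity are all assumptions made for the example.

```python
# Hypothetical profiles: each user maps to the set of titles they liked.
likes = {
    "ana": {"Alien", "Blade Runner", "Arrival"},
    "ben": {"Alien", "Blade Runner", "Dune"},
    "cy": {"Notting Hill", "Amelie"},
}


def similarity(a, b):
    """Jaccard similarity: shared likes divided by everything either user liked."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, likes):
    """Suggest titles the most similar other user liked that `user` has not seen."""
    others = [u for u in likes if u != user]
    if not others:
        return []
    nearest = max(others, key=lambda u: similarity(likes[user], likes[u]))
    return sorted(likes[nearest] - likes[user])


print(recommend("ana", likes))  # ['Dune']
```

Here "ana" and "ben" share two of four titles, so "ben" is the closest profile, and the one title he liked that "ana" has not seen becomes the suggestion.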

Asking Ourselves, “What Makes Us Smart?”

For our purposes, intelligence or cognition can be broken down into three main categories: taking stuff in, thinking about it, and acting on it. Think of these three categories as sensing, reasoning, and acting. This book does not look at robotics, so our focus on “acting” is the generation of speech.

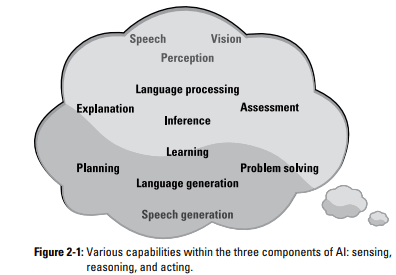

As Figure 2-1 shows, within these macro areas, we can make more fine‐grained distinctions related to speech and image recognition, different flavors of reasoning (logic versus evidence‐based), and the generation of speech (and other more physical actions) to facilitate communication.

Gray areas exist among these tasks, particularly when it comes to the role of reasoning, but thinking about intelligence as defined by these three components is useful when characterizing behaviors:

✓ Sensing: Taking in sensor data about the world, including:

• Image processing: Recognizing important objects, paths, faces, cars, or kittens.

• Speech recognition: Filtering out the noise and recognizing specific words.

• Other sensors: Mostly for robotics; sonar, accelerometers, balance detection, and so on.

✓ Reasoning: Thinking about how things relate to what is known; for example:

• Language processing: Turning words into ideas and their relationships.

• Situation assessment: Figuring out what is going on in the world at a broader level than the ideas alone.

• Logic‐based inference: Deciding that something is true because, logically, it must be true.

• Evidence‐based inference: Deciding that something is true based on the weight of evidence at hand.

• Planning/problem solving: Figuring out what to do to achieve a goal.

• Learning: Building new knowledge based on examples or examination of a data set.

• Natural language generation: Given a communication goal, generating the language to satisfy it.

✓ Acting: Generating and controlling actions, such as:

• Speech generation: Given a piece of text, generating the audio that expresses that text.

• Robotic control: Moving and managing the different effectors that move you about the world.
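The difference between the logic‐based and evidence‐based inference items in the list above may be easier to see side by side. The sketch below is a toy contrast under stated assumptions: `logic_infer` uses simple forward chaining over hand-written rules, and `evidence_infer` applies Bayes' rule to a single piece of evidence. Both function names and the example facts and probabilities are invented for illustration.

```python
def logic_infer(facts, rules):
    """Forward chaining: add a conclusion whenever all of its premises are known."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


def evidence_infer(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule: probability that a claim is true after seeing one piece of evidence.

    Assumes the evidence is possible under at least one of the two cases.
    """
    numerator = prior * likelihood_if_true
    return numerator / (numerator + (1 - prior) * likelihood_if_false)


# Logic-based: the conclusion must be true because the rule and the fact say so.
rules = [(("it is a pizza",), "it is food")]
print(logic_infer({"it is a pizza"}, rules))

# Evidence-based: the claim becomes more likely, but it is never guaranteed.
print(evidence_infer(prior=0.5, likelihood_if_true=0.8, likelihood_if_false=0.2))
```

The first call yields a definite new fact, while the second only shifts a degree of belief, which is the distinction the two list items draw.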

Examining the Components of AI

Research in AI tends to parallel the different aspects of human and machine reasoning. However, most of today’s systems, particularly consumer‐oriented products like Apple’s Siri, Microsoft’s Cortana, and Google Now, make use of all three of these layers.

These assistants are just one kind of animal in the new AI ecosystem, and they are complete, end‐to‐end systems. They use speech recognition at one end and speech generation at the other, and in between they use simple language processing to extract terms that drive a decision model, which, in turn, figures out what you have requested and thus what task to perform. A response may then be crafted and handed to the speech generation system. The result is that each of these assistants provides a seemingly singular experience built out of a combination of functionalities.

The following sections give you an idea of how the three aspects of intelligence (sensing, reasoning, and acting) come together in this type of system.

Sensing

Consumer‐oriented mobile assistants use speech recognition to identify the words that you have spoken to the system. They do this by capturing your voice and using the resulting waveform to recognize a set of words. Each of these systems uses its own version of voice recognition, with Apple making use of a product built by Nuance and both Microsoft and Google rolling their own.

Even though these assistants can capture the words, they do not immediately comprehend what those words mean. They just have access to the words you have said in the same way they would have access to them if you had typed them. They are simply taking input like the waveform in Figure 2-2 and transforming it into the words “I want pizza!”

The result of this process is really just a string of words. To make use of them, these systems have to reason about the words, which includes determining what they mean, what you might want, and how the system can help you get what you need. They do this with a small amount of natural language processing (see Chapter 5 for more on this).

Reasoning

While each system has its own take on the problem, they all do very similar things at this phase. In the pizza example mentioned previously, they might note the use of the term “pizza,” which is marked as being food, see that there is no term such as “recipe” in the text that would indicate that the speaker wanted to know how to make pizza, and thus decide that the speaker is looking for a restaurant that serves pizza.
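A minimal sketch of this kind of term spotting, in Python, might look like the following. The word lists `FOOD_TERMS` and `RECIPE_TERMS`, the intent labels, and the `interpret` function are all hypothetical; real assistants rely on much larger vocabularies and richer models.

```python
import re

# Hypothetical, hand-built vocabulary used only for this illustration.
FOOD_TERMS = {"pizza", "sushi", "tacos"}
RECIPE_TERMS = {"recipe", "make", "cook", "bake"}


def interpret(utterance):
    """Map a string of recognized words to a guessed user need."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    foods = sorted(words & FOOD_TERMS)
    if not foods:
        return {"intent": "unknown"}
    if words & RECIPE_TERMS:
        # A term such as "recipe" suggests the speaker wants to make the food.
        return {"intent": "find_recipe", "food": foods[0]}
    # Food mentioned with no cooking terms: assume a restaurant is wanted.
    return {"intent": "find_restaurant", "food": foods[0]}


print(interpret("I want pizza!"))
# {'intent': 'find_restaurant', 'food': 'pizza'}
```

The sketch makes the decision exactly as the paragraph describes: a food term with no recipe-related term is read as a request for a restaurant.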

This is fairly lightweight language processing, driven by simple definitions and relationships, that allows these systems to determine that an individual wants a pizza restaurant or, more precisely, to infer that the individual wants to know where she can find one. Knowing what to do, however, is very different from knowing how to do it!

These transitions — from sounds to words to ideas to user needs — provide these systems with the required information to now plan to satisfy those needs. In this case, the system grabs GPS info, looks up restaurants that serve pizza, and ranks them by proximity, rating, or price. Or, if you have a history, it may suggest a place that you already like.
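The lookup and ranking script described here could be sketched roughly as follows. Everything in it is an assumption made for illustration: the `Restaurant` record, the flat x/y coordinates in kilometers standing in for GPS data, and the choice to put known favorites first and then sort by distance and rating.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Restaurant:
    name: str
    x_km: float          # hypothetical flat-map position, standing in for GPS
    y_km: float
    rating: float
    serves: frozenset


def find_places(food, user_xy, restaurants, favorites=frozenset()):
    """Return names of places serving `food`: favorites first, then nearest, then best rated."""
    ux, uy = user_xy
    matches = [r for r in restaurants if food in r.serves]

    def sort_key(r):
        distance = hypot(r.x_km - ux, r.y_km - uy)
        return (r.name not in favorites, distance, -r.rating)

    return [r.name for r in sorted(matches, key=sort_key)]


places = [
    Restaurant("Luigi's", 1.0, 0.0, 4.5, frozenset({"pizza"})),
    Restaurant("Slice House", 3.0, 4.0, 4.8, frozenset({"pizza"})),
    Restaurant("Sushi Go", 0.5, 0.0, 4.2, frozenset({"sushi"})),
]

print(find_places("pizza", (0.0, 0.0), places))
# ["Luigi's", 'Slice House']
print(find_places("pizza", (0.0, 0.0), places, favorites={"Slice House"}))
# ['Slice House', "Luigi's"]
```

The second call shows the effect of history: a place the user already likes is suggested ahead of a closer one.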

While the reasoning involved in deciding between different plans of action is certainly AI, the plans tend to be simple scripts for gathering information. But their simplicity should not undercut their role in an AI system. Knowing exactly what to do and when to do it is often called “expertise.”

Acting

After sensing and reasoning have done their work, the results need to be communicated to users. This involves organizing the results into a reasonable set of ideas to be communicated, mapping the ideas onto a sentence or two (natural language generation, described in more detail in Chapter 5), and then turning those words into sounds (speech generation), as shown in Figure 2-3.
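The middle step, mapping ideas onto a sentence or two, can be illustrated with a simple template-based sketch. The `describe` function and its wording are hypothetical, and the final step of turning the text into audio is left out because it depends on a speech engine that this excerpt does not specify.

```python
def describe(food, places):
    """Turn a list of result names into one sentence suitable for speaking aloud."""
    if not places:
        return f"I couldn't find any {food} places nearby."
    if len(places) == 1:
        return f"I found one {food} place nearby: {places[0]}."
    listed = ", ".join(places[:-1]) + f" and {places[-1]}"
    return f"I found {len(places)} {food} places nearby: {listed}."


print(describe("pizza", ["Luigi's", "Slice House"]))
# I found 2 pizza places nearby: Luigi's and Slice House.
```

The returned string is what would then be handed to the speech generation system.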

Not One, But Many

For Siri and her sisters, the functionality we get is the result of the integration of multiple components. These systems, and AI systems in general, are not based on a single monolithic algorithm. They comprise multiple components and approaches to reasoning that work together, or sometimes in competition, to produce a single flow. There is no “one theory to rule them all.” Instead, multiple approaches to the different aspects of intelligence come together to create a complete experience. The result is that each of these systems provides a seemingly singular experience built out of a combination of functionalities.

Consumer systems are designed for non‐technical people. As such, they need to seem “human” as they both listen to and communicate directly with their users. Systems for the workplace are sometimes assumed not to need a strong communication layer because technical audiences are using them. This is a faulty assumption that I discuss in Chapter 5. In the meantime, the takeaway is that communication and the ability to explain turn out to be crucial elements of how we perceive intelligence and are thus crucial components of AI systems as they enter the workplace.

