The O’Reilly Data Show Podcast: Roger Chen on the fair value and decentralized governance of data.

(source: Library of Congress on Wikimedia Commons)

Subscribe to the O’Reilly Data Show Podcast to explore the opportunities and techniques driving big data, data science, and AI. Find us on Stitcher, TuneIn, iTunes, SoundCloud, RSS.

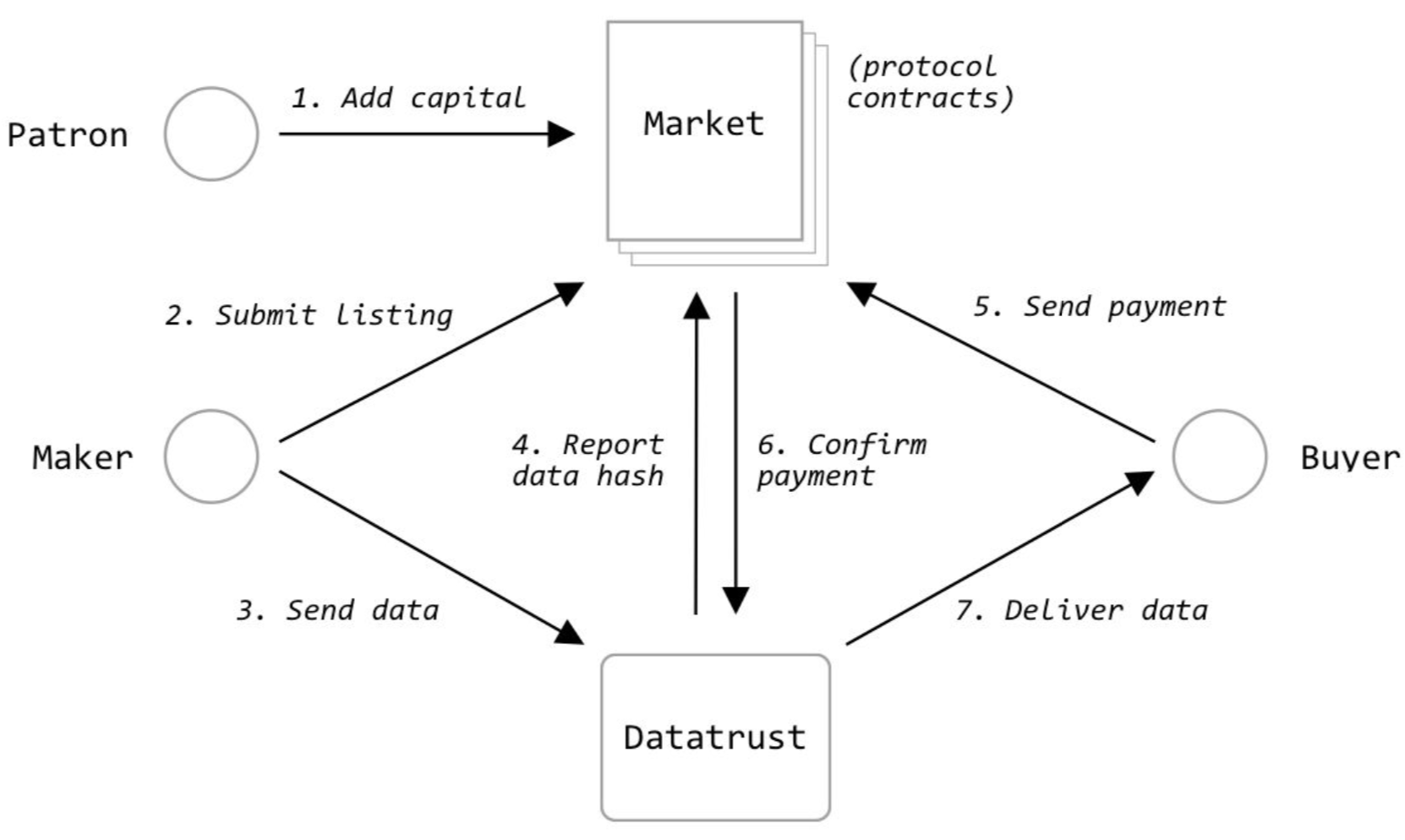

In this episode of the Data Show, I spoke with Roger Chen, co-founder and CEO of Computable Labs, a startup focused on building tools for the creation of data networks and data exchanges. Chen has also served as co-chair of O’Reilly’s Artificial Intelligence Conference since its inception in 2016. This conversation took place the day after Chen and his collaborators released an interesting new white paper, “Fair value and decentralized governance of data.”

Current-generation AI and machine learning technologies rely on large amounts of data. To the extent that they can use their large user bases to create “data silos,” large companies in large countries (like the U.S. and China) enjoy a competitive advantage. At the same time, we are awash in articles about the dangers posed by these data silos. Privacy and security, disinformation, bias, and a lack of transparency and control are just some of the issues that have plagued the perceived owners of “data monopolies.”

In recent years, researchers and practitioners have begun building tools focused on helping organizations acquire, build, and share high-quality data. Chen and his collaborators are doing some of the most interesting work in this space, and I recommend their new white paper and accompanying open source projects.