Whether you are trying to predict tomorrow’s weather, forecast future stock prices, identify missed sales opportunities in retail, or estimate a patient’s risk of developing a disease, you will almost certainly need to interpret time-series data, which are collections of observations recorded over time.

Making predictions with time-series data typically requires several data-processing steps and the use of complex machine-learning algorithms, whose learning curve is so steep that they are inaccessible to nonexperts.

MIT researchers created a system that directly integrates prediction functionality on top of an existing time-series database to make these powerful tools more user-friendly. Their simplified interface, dubbed tspDB (time series predict database), handles all of the complex modeling behind the scenes, allowing a nonexpert to generate a prediction in just a few seconds.

The new system outperforms state-of-the-art deep-learning methods in both accuracy and efficiency on two tasks: predicting future values and filling in missing data points.

One reason tspDB is so effective is that it incorporates a novel time-series-prediction algorithm, according to Abdullah Alomar, an electrical engineering and computer science (EECS) graduate student and author of a recent research paper in which he and his co-authors describe the algorithm. This algorithm is especially effective at forecasting multivariate time-series data, which contain more than one time-dependent variable. In a weather database, for example, temperature, dew point, and cloud cover each depend on their past values.

In addition, the algorithm estimates the volatility of a multivariate time series to provide the user with a level of confidence in its predictions.

“Even as time-series data become more complex, this algorithm can effectively capture any time-series structure out there. It feels like we’ve found the right lens for examining the model complexity of time-series data,” says senior author Devavrat Shah, the Andrew and Erna Viterbi Professor in EECS and a member of the Institute for Data, Systems, and Society and the Laboratory for Information and Decision Systems.

Anish Agrawal, a former EECS graduate student who is now a postdoc at the Simons Institute at the University of California, Berkeley, is a co-author of the paper with Alomar and Shah. The results of the study will be presented at the ACM SIGMETRICS conference.

Adapting a new algorithm

For years, Shah and his colleagues have worked on the problem of interpreting time-series data, adapting various algorithms and integrating them into tspDB as they built the interface.

About four years ago, they learned of a particularly powerful classical algorithm, called singular spectrum analysis (SSA), that imputes and forecasts single time series. Imputation is the process of replacing missing values or correcting past values. Although this algorithm required manual parameter selection, the researchers suspected it could enable their interface to make accurate predictions from time-series data. In earlier work, they removed this need for manual intervention.
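
As a rough illustration of what single-series SSA involves, the Python sketch below (using NumPy) arranges a series into a matrix of non-overlapping segments, sometimes called a Page matrix, keeps only its top singular components, and forecasts the next value by linear regression on that de-noised matrix. The function names, the hand-picked `rank`, and the window length `L` are our own assumptions; this is a textbook-style sketch, not the researchers’ implementation, which removes the need to choose such parameters by hand.

```python
import numpy as np


def page_matrix(x: np.ndarray, L: int) -> np.ndarray:
    """Arrange a 1-D series into an L x K matrix whose column j is the
    non-overlapping segment x[j*L : (j+1)*L] (a "Page" matrix)."""
    K = len(x) // L
    return x[: K * L].reshape(K, L).T


def truncate_rank(M: np.ndarray, rank: int) -> np.ndarray:
    """Best rank-`rank` approximation of M, keeping the top singular values."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank]


def ssa_forecast_next(x: np.ndarray, L: int, rank: int) -> float:
    """Hypothetical SSA-style one-step forecast of the value after x[-1]."""
    P = page_matrix(x, L)
    P_hat = truncate_rank(P, rank)  # de-noised Page matrix
    # Learn beta so that the bottom entry of each column is (approximately)
    # a linear combination of the de-noised L-1 entries above it.
    beta, *_ = np.linalg.lstsq(P_hat[:-1].T, P[-1], rcond=None)
    # Apply the same linear rule to the most recent L-1 observations.
    return float(beta @ x[-(L - 1):])


# Toy usage: a noiseless sum of two sinusoids, forecast one step ahead.
t = np.arange(501)
signal = np.sin(2 * np.pi * t / 17) + 0.5 * np.cos(2 * np.pi * t / 7)
pred = ssa_forecast_next(signal[:500], L=25, rank=4)
print(f"forecast {pred:.6f} vs. actual {signal[500]:.6f}")
```

On this noiseless sum of two sinusoids the Page matrix has rank 4, so the regression recovers an exact linear rule and the forecast matches the true next value up to floating-point error; noisy, real-world data would call for a data-driven choice of `rank`.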

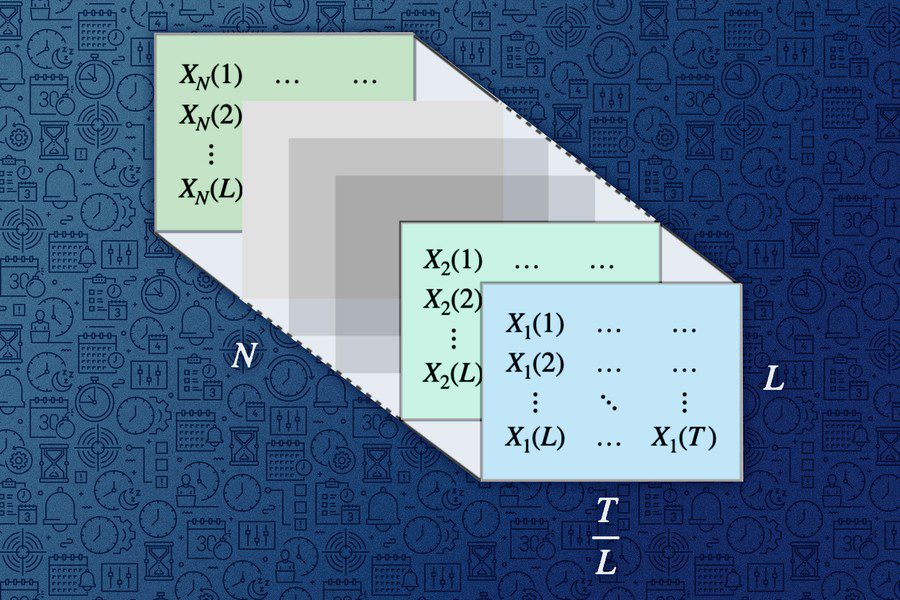

The single-time-series algorithm transformed the series into a matrix and applied matrix estimation procedures to it. The main intellectual challenge was figuring out how to adapt it to multiple time series. After several years of struggle, they realized the solution was simple: “stack” the matrices for the individual time series, treat the result as a single large matrix, and then apply the single-time-series algorithm to it.
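
The stacking step can be sketched in a few lines of Python with NumPy. This is a hypothetical illustration under stated assumptions: each series’ Page matrix is placed side by side (our reading of “stack”), missing entries are zero-filled and rescaled by the observed fraction (a common matrix-estimation heuristic), and the rank is fixed by hand rather than chosen automatically. The name `mssa_impute` is ours.

```python
import numpy as np


def page_matrix(x: np.ndarray, L: int) -> np.ndarray:
    """L x K matrix of non-overlapping length-L segments of x (one per column)."""
    K = len(x) // L
    return x[: K * L].reshape(K, L).T


def mssa_impute(series: np.ndarray, L: int, rank: int) -> np.ndarray:
    """Hypothetical mSSA-style imputation sketch.

    series: shape (N, T), one row per time series, NaN marks a missing value.
    Returns an (N, (T // L) * L) array of de-noised, imputed values.
    """
    N = series.shape[0]
    # Place each series' Page matrix side by side: shape (L, N * K).
    stacked = np.hstack([page_matrix(s, L) for s in series])
    observed = ~np.isnan(stacked)
    p_hat = observed.mean()  # estimated fraction of observed entries
    # Zero-fill the gaps and rescale so that, if entries are missing
    # uniformly at random, the filled matrix is unbiased for the true one.
    filled = np.where(observed, stacked, 0.0) / p_hat
    U, s, Vt = np.linalg.svd(filled, full_matrices=False)
    low_rank = (U[:, :rank] * s[:rank]) @ Vt[:rank]
    # Undo the stacking: one (L, K) block per series, then unroll its columns.
    blocks = np.split(low_rank, N, axis=1)
    return np.stack([b.T.reshape(-1) for b in blocks])


# Toy usage: four series built from the same two sinusoids, 10% missing.
t = np.arange(2000)
base = np.vstack([np.sin(2 * np.pi * t / 17), np.cos(2 * np.pi * t / 7)])
weights = np.array([[1.0, 0.5], [0.3, 1.2], [-0.8, 0.6], [0.5, -0.9]])
clean = weights @ base  # shape (4, 2000)
rng = np.random.default_rng(0)
missing = rng.random(clean.shape) < 0.1
observed_series = np.where(missing, np.nan, clean)
imputed = mssa_impute(observed_series, L=40, rank=4)
err = np.sqrt(np.mean((imputed[missing] - clean[missing]) ** 2))
baseline = np.sqrt(np.mean(clean[missing] ** 2))  # error of zero-filling
print(f"RMSE on missing entries: {err:.3f} (zero-fill baseline {baseline:.3f})")
```

Because the four toy series share the same two underlying sinusoids, the stacked matrix is low-rank, so each series borrows strength from the others and the missing values are recovered far more accurately than by zero-filling.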

As they describe in their new paper, this naturally exploits information both across the time series and across time.

Their recent paper also discusses intriguing alternatives, such as viewing the multivariate time series as a three-dimensional tensor rather than transforming it into a large matrix. A tensor is a multidimensional array, or grid, of numbers. According to Alomar, this establishes a promising link between the classical field of time-series analysis and the emerging field of tensor estimation.
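
One way to see how the two views relate: if each series’ Page matrix is kept as a separate slice of a three-dimensional array, the side-by-side stacked matrix is exactly that tensor’s mode-1 unfolding (its matricization). The NumPy sketch below checks this on toy data. The helper names are hypothetical, and the sketch illustrates only the data layout, not the paper’s tensor-based estimator.

```python
import numpy as np


def page_matrix(x: np.ndarray, L: int) -> np.ndarray:
    """L x K matrix of non-overlapping length-L segments of x (one per column)."""
    K = len(x) // L
    return x[: K * L].reshape(K, L).T


def page_tensor(series: np.ndarray, L: int) -> np.ndarray:
    """Shape (L, K, N): slice [:, :, n] is the Page matrix of series n."""
    N, T = series.shape
    K = T // L
    # reshape gives [n, k, i] == series[n, k*L + i]; reorder axes to (i, k, n)
    return series[:, : K * L].reshape(N, K, L).transpose(2, 1, 0)


def unfold_mode1(tensor: np.ndarray) -> np.ndarray:
    """Flatten an (L, K, N) tensor into an L x (N*K) matrix, series by series."""
    L, K, N = tensor.shape
    return tensor.transpose(0, 2, 1).reshape(L, N * K)


series = np.arange(24.0).reshape(2, 12)  # two toy series of length 12
tensor = page_tensor(series, L=3)         # shape (3, 4, 2)
stacked = np.hstack([page_matrix(s, 3) for s in series])
print(tensor.shape, np.array_equal(unfold_mode1(tensor), stacked))
# prints: (3, 4, 2) True
```

Keeping the third axis instead of flattening it is what opens the door to tensor-estimation methods, which can model structure along the series dimension explicitly.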

“The mSSA [multivariate singular spectrum analysis] variant that we introduced captures all of that beautifully. It provides not only the most likely estimate but also a time-varying confidence interval,” Shah explains.
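
A time-varying confidence interval needs a time-varying variance estimate. One simple way to get one, which we assume here and which may differ in detail from the paper, is to apply the same low-rank estimate twice: once to the observations to estimate the mean, and once to their squares to estimate the second moment, then take the variance as the second moment minus the squared mean. The NumPy sketch below does this for a single series; the function names, ranks, and the 1.96 multiplier (roughly 95% under a Gaussian assumption) are illustrative choices.

```python
import numpy as np


def page_matrix(x: np.ndarray, L: int) -> np.ndarray:
    """L x K matrix of non-overlapping length-L segments of x (one per column)."""
    K = len(x) // L
    return x[: K * L].reshape(K, L).T


def low_rank_series(x: np.ndarray, L: int, rank: int) -> np.ndarray:
    """De-noise x by a rank-`rank` SVD of its Page matrix, then unroll it."""
    U, s, Vt = np.linalg.svd(page_matrix(x, L), full_matrices=False)
    P_hat = (U[:, :rank] * s[:rank]) @ Vt[:rank]
    return P_hat.T.reshape(-1)


def mean_and_band(x, L, mean_rank, sq_rank, z=1.96):
    """Hypothetical sketch of a time-varying mean and confidence band.

    variance(t) ~ E[x_t^2] - E[x_t]^2, each term estimated by low-rank
    de-noising; negative estimates are clipped to zero.
    """
    mean = low_rank_series(x, L, mean_rank)
    second_moment = low_rank_series(x ** 2, L, sq_rank)
    var = np.clip(second_moment - mean ** 2, 0.0, None)
    half_width = z * np.sqrt(var)
    return mean, mean - half_width, mean + half_width, var


# Toy usage: a sinusoidal mean plus Gaussian noise with variance 0.25.
rng = np.random.default_rng(0)
t = np.arange(40_000)
x = np.sin(2 * np.pi * t / 17) + 0.5 * rng.standard_normal(t.size)
mean, lower, upper, var = mean_and_band(x, L=200, mean_rank=2, sq_rank=3)
coverage = np.mean((x >= lower) & (x <= upper))
print(f"average variance estimate {var.mean():.3f}, band coverage {coverage:.2%}")
```

In this toy case the noise variance is constant, so the variance estimate should hover around 0.25; because the band is computed point by point, the same code would widen or narrow the interval if the underlying variance changed over time.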

The simpler, the better

They compared the adapted mSSA to other cutting-edge algorithms, including deep-learning methods, on real-world time-series datasets containing inputs from the electricity grid, traffic patterns, and financial markets.

Their algorithm outperformed all of the others in terms of imputation, and it outperformed all but one of the others in terms of forecasting future values. The researchers also demonstrated that their modified version of mSSA can be used with any type of time-series data.

Alomar believes one reason this works so well is that the model captures a lot of time-series dynamics while, at the end of the day, remaining a simple model. “When we work with something simple like this, rather than a neural network that can easily overfit the data, we can perform better,” Alomar explains.

According to Shah, the impressive performance of mSSA is what makes tspDB so effective. Their current goal is to make this algorithm available to everyone.

Once a user installs tspDB on top of an existing database, they can run a prediction query in about 0.9 milliseconds, compared with 0.5 milliseconds for a standard search query. The confidence intervals are also intended to help nonexperts make more informed decisions by incorporating the degree of uncertainty in the predictions into their decision-making.

For example, even if the time-series dataset contains missing values, the system could allow a nonexpert to predict future stock prices with high accuracy in just a few minutes.

Now that the researchers have demonstrated why mSSA works so well, they are looking for new algorithms to incorporate into tspDB. One of these algorithms uses the same model to enable automatic change-point detection: if a user believes their time series will change behavior at some point, the system will automatically detect that change and incorporate it into its predictions.

They also want to keep gathering feedback from current tspDB users to see how they can improve the system’s functionality and usability, according to Shah.

“At the highest level, our goal is to make tspDB a success as a widely usable, open-source system. Time-series data are extremely valuable, and building prediction capabilities directly into the database is a brilliant idea. It’s never been done before, so we want to make sure the rest of the world uses it,” he says.

“This work is fascinating for a variety of reasons. It provides a practical variant of mSSA that requires no hand-tuning, they provide the first known analysis of mSSA, and the authors demonstrate the real-world value of their algorithm by competing with or outperforming several known algorithms for imputation and prediction in (multivariate) time series on several real-world data sets,” says Vishal Misra, a professor of computer science at Columbia University who was not involved in this research.

“At the heart of it all is the stunning modeling work, in which they cleverly exploit correlations across time (within a time series) and across space (across time series) to create a low-rank spatiotemporal factor representation of a multivariate time series. In particular, this model connects the field of time-series analysis to the rapidly evolving topic of tensor completion, and I anticipate a lot of follow-up research as a result of this paper.”
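
The low-rank spatiotemporal factor idea can be checked numerically. In the hypothetical NumPy sketch below, every series is a different weighted mix (its “spatial” loadings) of the same two temporal factors, here assumed to be sinusoids. Because each sinusoid contributes rank 2, the numerical rank of the stacked Page matrix should stay at 4 no matter how many series are added, which is the kind of structure that lets extra series sharpen an estimate rather than add complexity. The factor choices and helper names are our assumptions, not the paper’s model specification.

```python
import numpy as np


def stacked_page_matrix(series: np.ndarray, L: int) -> np.ndarray:
    """Side-by-side Page matrices of all rows of `series` (shape (N, T))."""
    K = series.shape[1] // L
    return np.hstack([s[: K * L].reshape(K, L).T for s in series])


def numerical_rank(M: np.ndarray, tol: float = 1e-8) -> int:
    """Count singular values above `tol` times the largest one."""
    s = np.linalg.svd(M, compute_uv=False)
    return int(np.sum(s > tol * s[0]))


rng = np.random.default_rng(1)
t = np.arange(1200)
# Two shared temporal factors (assumed sinusoids for illustration).
factors = np.vstack([np.sin(2 * np.pi * t / 24), np.cos(2 * np.pi * t / 50)])

ranks = {}
for n_series in (2, 10, 50):
    loadings = rng.standard_normal((n_series, 2))  # per-series weights
    series = loadings @ factors                    # shape (n_series, 1200)
    ranks[n_series] = numerical_rank(stacked_page_matrix(series, L=30))
print(ranks)
```

The stacked matrix grows from 80 to 2,000 columns across the three runs, yet its rank does not grow with it, which is what makes low-rank matrix (or tensor) estimation a good fit for this kind of data.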