Jordan Perchik began his radiology residency at the University of Alabama at Birmingham near the height of the “AI scare” in the field. It was 2018, just two years after computer scientist Geoffrey Hinton had declared that people should stop training to become radiologists because machine-learning tools would soon replace them. Hinton, who is sometimes called the “godfather” of artificial intelligence (AI), foresaw a day when these systems would read and interpret X-rays and other medical scans better than people could. Applications to radiology programmes dropped sharply. People were worried that they would finish their residency only to find there was no job for them, Perchik says.

Hinton had a point. More than 500 AI-based tools have been approved for use in medicine by the US Food and Drug Administration (FDA), and they are becoming an increasingly common feature of medical care. Most relate to medical imaging and are used to enhance images, measure abnormalities, or flag test results for follow-up.

Yet seven years after Hinton’s prediction, radiologists are still in high demand. And many clinicians appear unimpressed with how these technologies perform.

Studies show that although many doctors are aware of clinical AI tools, only a small proportion, between 10% and 30%, have actually used them. Attitudes range from cautious optimism to outright lack of trust. “Some radiologists have doubts about the quality and safety of AI applications,” says Charisma Hehakaya, a researcher at University Medical Centre Utrecht in the Netherlands who studies how medical innovations are implemented. She was part of a team that in 2019 surveyed 26 Dutch physicians and hospital managers about their views on AI tools. When doubts arise, she says, the latest approaches are sometimes abandoned.

Even when AI tools work as intended, it remains unclear whether using them improves patient care. Answering that question would require more rigorous study, Perchik says.

But enthusiasm seems to be building around an approach known as generalist medical AI. Like the models that power ChatGPT and other AI chatbots, these models are trained on enormous amounts of data. After ingesting vast quantities of medical images and text, they can be adapted to a wide range of tasks. Whereas currently approved tools perform narrow functions, such as detecting lung nodules in a computed tomography (CT) chest scan, these generalist models would act more like a doctor, assessing every anomaly in a scan and integrating the findings into something like a diagnosis.

Although proponents of medical AI now tend to steer clear of bold claims about machines replacing doctors, many believe that these models could overcome some of the current limitations of medical AI and might one day outperform doctors in specific situations. “The real goal to me is for AI to help us do the things that humans aren’t very good at,” says radiologist Bibb Allen, chief medical officer of the American College of Radiology Data Science Institute, who is based in Birmingham, Alabama.

But much work remains before these cutting-edge tools can be used in real-world clinical care.

Present limitations

AI medical tools can support practitioners by, for instance, processing scans quickly and flagging potential problems that a doctor might want to look at right away. Sometimes these tools work exactly as intended. Perchik recalls a case in which an AI triage system flagged as urgent a chest CT scan from a person with breathing difficulties. It was 3 a.m., in the middle of an overnight shift. He gave the scan top priority and agreed with the AI’s assessment that it showed a pulmonary embolism, a potentially fatal condition that requires immediate treatment. Had it not been flagged, the scan might not have been read until later that day.

But when the AI makes a mistake, it can have the opposite effect. Perchik says he recently found a case of pulmonary embolism that the AI had missed. He decided to take extra review steps, which confirmed his assessment but slowed down his work. Had he simply trusted the AI and moved on, the embolism might have gone undetected.

Many approved devices don’t necessarily meet clinicians’ needs, says radiologist Curtis Langlotz, head of Stanford University’s Centre for Artificial Intelligence in Medicine and Imaging in Palo Alto, California. Early medical AI tools were built around whatever imaging data happened to be available, so some applications were developed for findings that are common and easy to spot. Langlotz says he needs no help identifying pneumonia or a bone fracture. Even so, multiple tools are available to help doctors make these diagnoses.

Another problem is that the tools tend to focus on narrow tasks rather than interpreting a medical examination comprehensively, which means looking at everything that could be relevant in an image, taking previous results into account, and considering the patient’s clinical history. Focusing on a small number of diseases has some value, but it doesn’t reflect the true cognitive work of the radiologist, says Pranav Rajpurkar, a computer scientist who works on biomedical AI at Harvard Medical School in Boston, Massachusetts.

The usual solution has been to add more AI-powered tools, but that creates its own difficulties for medical care, notes Alan Karthikesalingam, a clinical research scientist at Google Health in London. Consider a person having a routine mammogram. The technicians might be assisted by an AI tool for breast cancer screening. If an abnormality is found, the same person might need an MRI scan to confirm the diagnosis, and that scan could be handled by a different AI device. If the diagnosis is confirmed, the lesion would be removed surgically, and yet more AI systems might assist with the pathology.

Scale that up to an entire health system, he says, and you can start to see how many decisions there are about the devices themselves and about how to buy, integrate, deploy, and monitor them. It can turn into an IT soup very quickly.

Many hospitals are unaware of the challenges involved in monitoring the safety and performance of AI, says Xiaoxuan Liu, a clinical researcher at the University of Birmingham, UK, who specialises in responsible innovation in health AI. She and her colleagues identified thousands of medical imaging studies that compared the diagnostic performance of deep-learning models with that of medical professionals. For the 69 studies the team assessed for diagnostic accuracy, a key finding was that most models were not tested on a data set that was truly independent of the data used to train them. This suggests that these studies may have overestimated the models’ performance.

The need for external validation is now more widely recognized in the field, Liu says. But, she adds, very few institutions worldwide are fully aware of this. Without testing a model’s performance, particularly in the setting where it will be used, it is impossible to know whether these tools are truly beneficial.
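Liu’s point about independent test data can be illustrated with a small sketch. It assumes, hypothetically, that each imaging study is tagged with the hospital it came from. Holding out an entire hospital, rather than a random slice of the pooled data, means the model is scored only on data from a site it never saw during training. The names `Study`, `external_split`, `accuracy` and `always_positive` are illustrative and are not taken from any of the studies Liu reviewed.

```python
from collections import namedtuple

# Hypothetical record: one imaging study, the hospital (site) it came from, and its label.
Study = namedtuple("Study", ["study_id", "site", "label"])


def external_split(studies, held_out_site):
    """Put every study from `held_out_site` in the test set and none in training,
    so the test data are independent of the training data at the hospital level."""
    train = [s for s in studies if s.site != held_out_site]
    test = [s for s in studies if s.site == held_out_site]
    return train, test


def accuracy(predict, studies):
    """Fraction of studies for which the model's prediction matches the label."""
    if not studies:
        raise ValueError("no studies to evaluate")
    return sum(predict(s) == s.label for s in studies) / len(studies)


def always_positive(study):
    """Placeholder 'model' for illustration only: flags every study as positive."""
    return 1


studies = [
    Study("a1", "hospital_A", 1), Study("a2", "hospital_A", 0),
    Study("b1", "hospital_B", 1), Study("b2", "hospital_B", 0),
    Study("c1", "hospital_C", 1), Study("c2", "hospital_C", 0),
]
train, test = external_split(studies, "hospital_C")
print(sorted({s.site for s in train}))  # ['hospital_A', 'hospital_B']
print(accuracy(always_positive, test))  # 0.5
```

A random split of the same six studies could place records from every hospital on both sides, which is the kind of overlap that can flatter a model’s reported accuracy.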

Solid foundations

To address some of these shortcomings, researchers have been exploring medical AI with broader capabilities. They have taken inspiration from cutting-edge large language models, such as the ones that underpin ChatGPT.

These are examples of what some scientists call foundation models. The term, first used in 2021 by researchers at Stanford University, describes models trained on large data sets, which can include text, images, and other types of data, using a technique known as self-supervised learning. Also called base models or pre-trained models, they serve as a foundation that can later be adapted to specific tasks.

Most medical AI devices currently in use in hospitals were developed using supervised learning. To train a model with this method to identify, say, pneumonia, experts must analyze large numbers of chest X-rays and label each one as either “pneumonia” or “not pneumonia.” The system then learns the patterns associated with the illness from those labels.
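A minimal sketch of supervised learning, under deliberately simplifying assumptions: each chest X-ray is reduced to two made-up numeric features, and a logistic-regression classifier (chosen here only because it is short, not because any approved device uses it) learns from the expert labels by gradient descent. The data, learning rate and number of epochs are all illustrative.

```python
import math

# Hypothetical labelled data: two invented features per "X-ray";
# label 1 = "pneumonia", 0 = "not pneumonia" (as assigned by an expert).
examples = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.95], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.15, 0.3], 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(data, lr=1.0, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the mean log-loss,
    using the expert-provided labels as the training signal."""
    n_features = len(data[0][0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the log-loss with respect to the logit
            for i in range(n_features):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gw / len(data) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def predict(w, b, x):
    """Return 1 ("pneumonia") if the predicted probability is at least 0.5."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


w, b = train_logistic(examples)
print([predict(w, b, x) for x, _ in examples])
```

Every training example here needs a label supplied by a person, which is the annotation cost that foundation models aim to reduce.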

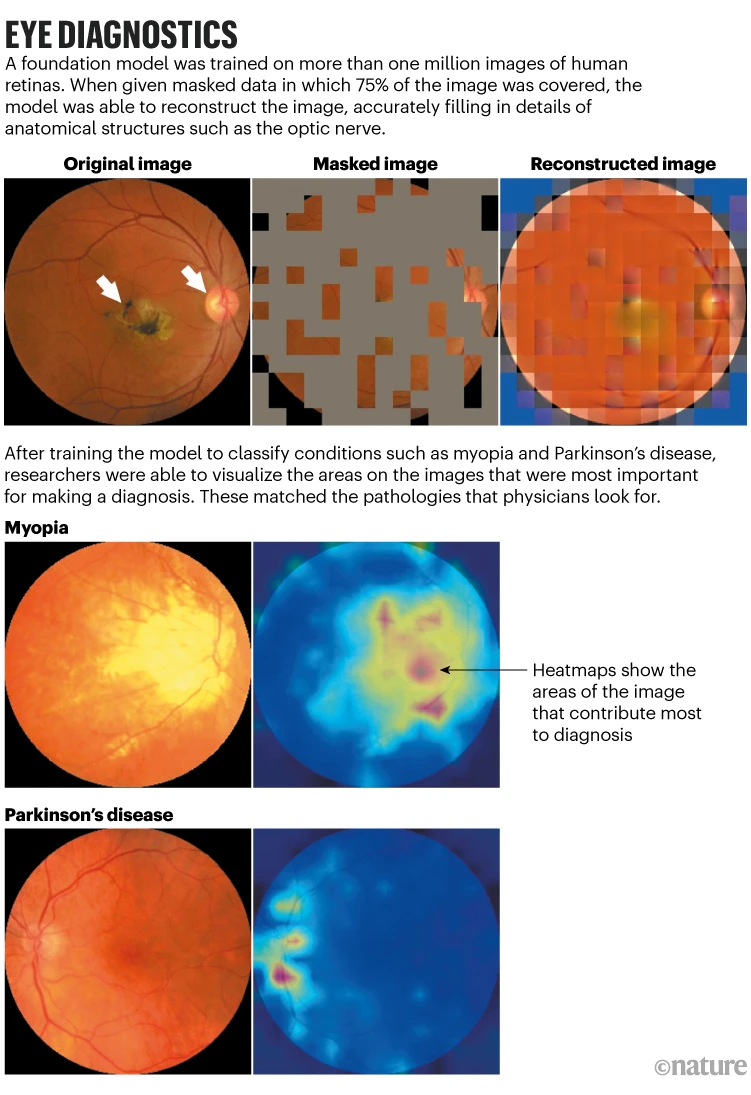

Foundation models do not require the costly and time-consuming annotation of large numbers of images. ChatGPT, for instance, is built on a language model that was trained on enormous collections of text by learning to predict the next word in a sentence. Similarly, Pearse Keane, an ophthalmologist at Moorfields Eye Hospital in London, and his colleagues created a medical foundation model that was trained on 1.6 million retinal images and scans to predict what missing portions of the images should look like (see ‘Eye diagnostics’).
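The idea of learning by predicting missing portions of an image can be sketched as a training objective. This is an illustration in the general style of masked-image modelling, not the Moorfields team’s code: the tiny synthetic ‘image’, the patch size, the mask ratio and the trivial mean-value ‘predictor’ are all assumptions. The point is that the loss is computed from the image itself, so no human labels are involved.

```python
import random


def patchify(image, patch):
    """Split a square 2D image (list of rows) into non-overlapping patch x patch
    blocks, returned as a dict keyed by (block_row, block_col)."""
    n = len(image)
    if n % patch:
        raise ValueError("image size must be divisible by patch size")
    blocks = {}
    for r in range(0, n, patch):
        for c in range(0, n, patch):
            blocks[(r // patch, c // patch)] = [row[c:c + patch] for row in image[r:r + patch]]
    return blocks


def mask_patches(blocks, mask_ratio, rng):
    """Hide a random subset of patches; a model would be shown only the visible ones."""
    keys = sorted(blocks)
    masked = set(rng.sample(keys, int(len(keys) * mask_ratio)))
    visible = {k: v for k, v in blocks.items() if k not in masked}
    return visible, masked


def reconstruction_loss(blocks, predictions, masked):
    """Mean squared error over the masked patches only: the training signal
    comes from the hidden pixels of the image itself, not from labels."""
    total, count = 0.0, 0
    for k in masked:
        for true_row, pred_row in zip(blocks[k], predictions[k]):
            for t, p in zip(true_row, pred_row):
                total += (t - p) ** 2
                count += 1
    return total / count


rng = random.Random(0)
image = [[(r + c) / 14 for c in range(8)] for r in range(8)]  # synthetic 8x8 "scan"
blocks = patchify(image, patch=4)
visible, masked = mask_patches(blocks, mask_ratio=0.5, rng=rng)

# Trivial stand-in "model" (hypothetical): fill each hidden pixel with the mean visible pixel.
visible_pixels = [p for blk in visible.values() for row in blk for p in row]
mean = sum(visible_pixels) / len(visible_pixels)
naive = {k: [[mean] * 4 for _ in range(4)] for k in masked}
print(reconstruction_loss(blocks, naive, masked))   # positive: room for a model to learn
print(reconstruction_loss(blocks, blocks, masked))  # 0.0 for a perfect reconstruction
```

During pre-training, a real model would be adjusted to drive this kind of loss down across many images, and in doing so it would have to learn what retinas typically look like.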

Through this pre-training, the model learned the features of a retina. The researchers then added a few hundred labelled images, which enabled it to learn about specific sight-related conditions, such as glaucoma and diabetic retinopathy. The system was better than earlier models at detecting these eye conditions, and at predicting systemic diseases, such as Parkinson’s and heart disease, that can be detected through tiny changes in the blood vessels of the eye. The model has not yet been tested in a clinical setting.
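The second step, learning conditions from only a handful of labelled examples on top of pre-trained features, can be sketched with a nearest-centroid classifier. This is a deliberately simplified stand-in: the study’s actual adaptation procedure is not described here, and this sketch does not reproduce it. `embed` is a hypothetical placeholder for a frozen pre-trained encoder, and the feature vectors and labels are invented for illustration.

```python
import math


def embed(image_features):
    """Hypothetical stand-in for a frozen, pre-trained encoder. Here it only
    normalises the input vector; a real foundation model would map a retinal
    image to a learned feature vector."""
    norm = math.sqrt(sum(v * v for v in image_features)) or 1.0
    return [v / norm for v in image_features]


def fit_centroids(labelled):
    """Average the embeddings of the few labelled examples for each condition."""
    sums, counts = {}, {}
    for features, label in labelled:
        e = embed(features)
        if label not in sums:
            sums[label] = [0.0] * len(e)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], e)]
        counts[label] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}


def classify(centroids, features):
    """Assign the label whose centroid is nearest in squared Euclidean distance."""
    e = embed(features)
    return min(centroids, key=lambda lab: sum((a - b) ** 2 for a, b in zip(e, centroids[lab])))


# Two invented examples per condition: far fewer labels than training from scratch would need.
few_labels = [
    ([1.0, 0.1, 0.0], "healthy"), ([0.9, 0.2, 0.1], "healthy"),
    ([0.1, 1.0, 0.2], "diabetic_retinopathy"), ([0.2, 0.9, 0.1], "diabetic_retinopathy"),
    ([0.0, 0.2, 1.0], "glaucoma"), ([0.1, 0.1, 0.9], "glaucoma"),
]
centroids = fit_centroids(few_labels)
print(classify(centroids, [0.15, 0.95, 0.05]))  # diabetic_retinopathy
```

The design choice being illustrated is the division of labour: the expensive, label-free pre-training produces the features, and the small labelled set is used only to attach condition names to regions of that feature space.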

Keane says that foundation models could be particularly useful in ophthalmology, because almost every part of the eye can be imaged at high resolution. Large data sets of these images are also available for training such models. AI is going to transform medicine, he says, and ophthalmology can serve as an example for other medical fields.

According to Karthikesalingam, foundation models are “a very flexible framework,” and their features appear to be well suited to addressing some of the shortcomings of first-generation medical AI tools.

Large tech companies are already investing in foundation models for medical imaging that use a variety of image types, such as X-rays, pathology slides, skin photos, and retinal scans, along with genomics and electronic health records.

Researchers at Google Research in Mountain View, California, published a paper in June describing an approach they call REMEDIS (‘robust and efficient medical imaging with self-supervision’), which improved diagnostic accuracy by up to 11.5% compared with AI tools trained using supervised learning. The study found that these results could be achieved with relatively few labelled images after a model had been pre-trained on large data sets of unlabelled images. The key finding, says co-author Karthikesalingam, was that REMEDIS could learn, very efficiently and from very few examples, to classify a wide range of things in many different kinds of medical image, including chest X-rays, digital pathology scans, and mammograms.

In a preprint published the following month, Google researchers detailed how they combined that method with Med-PaLM, the company’s medical large language model, which can respond to some open-ended medical questions nearly as well as a doctor. The outcome is Med-PaLM Multimodal, a single AI system that showed it could perform tasks like interpreting chest X-ray images and writing a natural language medical report.

Microsoft is also trying to combine vision and language in a single medical AI tool. In June, the company’s scientists unveiled LLaVA-Med (Large Language and Vision Assistant for Biomedicine), which was trained on images paired with text extracted from PubMed Central, a publicly available database of biomedical articles. Once you do that, you can basically start having conversations with images just like you are talking with ChatGPT, says Hoifung Poon, a computer scientist who oversees biomedical AI research at Microsoft Health Futures in Redmond, Washington. One challenge of this approach is that it requires a huge number of text-image pairs. So far, Poon and his colleagues have collected more than 46 million pairs from PubMed Central.

As these models are trained on ever-larger amounts of data, some scientists think they might be able to spot patterns that people cannot. Keane points to a 2018 Google study describing AI models that could identify characteristics of a person, such as age and gender, from retinal images. Even highly skilled ophthalmologists cannot do that, Keane says. So it is quite possible that these high-resolution images contain a wealth of scientific information.

Poon gives one example of where AI tools could surpass human abilities: using digital pathology to predict how tumours will respond to immunotherapy. The tumour microenvironment, the mix of cancerous, non-cancerous, and immune cells that can be sampled with a biopsy, is thought to influence how well a person responds to different anti-cancer drugs. If you have data from millions of patients who have already received immunotherapy or a checkpoint inhibitor, and you compare the exceptional responders with the non-responders, Poon says, you might be able to pick out many patterns that an expert would miss.

He cautions that although the diagnostic potential of AI devices is generating great excitement, these tools also face a high bar for success. Other medical applications of AI, such as matching participants to clinical trials, are likely to have an impact sooner.

Karthikesalingam notes that even the best results achieved by Google’s medical imaging AI still fall short of human performance. An X-ray report written by a human radiologist is still far better than one produced by a state-of-the-art multimodal generalist medical system, he says. Although foundation models seem especially well placed to expand the uses of medical AI tools, more work is needed to show that they can be used safely in clinical settings. We value boldness, he says, but we also value responsibility greatly.

Perchik believes that AI will play an increasingly important part in radiology. But rather than AI replacing radiologists, he says, what will be needed is to train radiologists to use it.