With the insights gained from the use of AI, the focus is shifting from brilliant new models to perhaps more mundane but practical aspects such as data quality and data pipeline management.

A few days ago it was Matt Turck’s report on machine learning, artificial intelligence and data that ZDNet Big on Data colleague Tony Baer reported on. This week it’s the State of AI 2021 report by Nathan Benaich and Ian Hogarth.

Air Street Capital and RAAIS founder Nathan Benaich and AI angel investor and UCL IIPP visiting professor Ian Hogarth published what was arguably the most comprehensive report on the state of AI in 2020. Now they are back with the 2021 edition.

In a cherished annual tradition, we met with Benaich and Hogarth to discuss the issues highlighted in the report.

MLOps, machine learning in production

First of all, there is an overlap with the topics that Turck and Baer reported on, and for good reason. As Baer pointed out, the wave of IPOs and the proliferation of unicorns is making this market an industry of its own, and that cannot be overlooked. For an overview of market trends, we encourage readers to take a look at Baer’s coverage.

With this in mind, we believe that the State of AI 2021 report covers more ground: the latest developments in AI research, industry, talent and politics, as well as predictions. Benaich and Hogarth make predictions, and they do pretty well. In 2020, for example, they correctly predicted the hurdles in Nvidia’s acquisition of Arm and IPOs related to AI and biotechnology.

As Benaich pointed out, because of their involvement in various machine learning companies, mostly in the early stages, they have access to leading artificial intelligence laboratories, academic groups, startups, larger corporations, as well as individuals working in government. So they are trying to synthesize all these different angles into one public good that is open source and aims to fully inform all stakeholders.

We picked a few general topics that caught our eye in the report, as we had also identified them during the year. The first is MLOps, the art and science of bringing machine learning to production, where the focus shifts from shiny new models to perhaps more mundane but practical aspects.

As machine learning models become more powerful and available, the gains from model improvements have become marginal. In this context, the machine learning community is increasingly aware of the importance of data best practices and better MLOps in general to develop reliable machine learning products.

“Much of the academic world has focused on competing on static benchmarks, showing model performance offline on those benchmarks, and then moving to industry. Therefore, Generation One was very focused on getting a model.

A lot of money, interest and development time has been invested in MLOps. This is motivated by the idea that machine learning is not a static software product that you can write once and forget, but one that must be constantly updated. And it’s not just about updating the model: you have to see how your classes can change over time, or whether you’re still using the right benchmarks to tell if a new model you’ve trained works in production or not. Problems can arise, such as choosing different random seeds for your model and then seeing it display completely different behavior on real-world data, or finding that even the data you used is rubbish.”

That sounds intuitively correct and is likely to resonate with anyone who has worked with machine learning models and data pipelines. People now refer to these phenomena as distribution shifts (mismatches between versions of a data set) and data cascades (problems with the data affecting downstream operations). As naming something is the first step in analyzing it and taking it more seriously, that’s a good thing.

Data-centric AI: good data, bad data, distribution shifts and data cascades

A distribution shift occurs when the data at test or deployment time differs from the training data. In production, this often takes the form of concept drift, where the test data changes gradually over time.
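To make the idea of gradual drift concrete, here is a minimal sketch, not something taken from the report. It assumes a single numeric feature, synthetic Gaussian data, and a two-sample Kolmogorov-Smirnov statistic as the drift signal. The function names `ks_statistic` and `simulate_drift`, and the alert threshold of 0.1, are hypothetical choices made for illustration.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, live):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest gap between the two empirical CDFs:
    0.0 when the samples are identical, 1.0 when they do not overlap.
    """
    ref = sorted(reference)
    cur = sorted(live)
    gap = 0.0
    # The empirical CDFs only change at observed values, so it is
    # enough to compare them at every sample point.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def simulate_drift(weeks=5, step=0.25, n=2000, seed=0):
    """Compare a fixed training sample against weekly 'production' samples.

    Assumption for illustration: the production mean moves by `step`
    each week while the training sample stays frozen.
    """
    rng = random.Random(seed)
    train = [rng.gauss(0.0, 1.0) for _ in range(n)]
    scores = []
    for week in range(weeks):
        live = [rng.gauss(step * week, 1.0) for _ in range(n)]
        scores.append(ks_statistic(train, live))
    return scores


if __name__ == "__main__":
    ALERT_THRESHOLD = 0.1  # hypothetical value; tune it per feature
    for week, score in enumerate(simulate_drift()):
        flag = "DRIFT" if score > ALERT_THRESHOLD else "ok"
        print(f"week {week}: KS={score:.3f} {flag}")
```

In this toy setup the statistic stays close to zero while production data still looks like the training data, and grows as the production mean moves away. A real monitoring job would track many features and would likely rely on an established statistics library rather than a hand-rolled function.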

As machine learning is increasingly deployed in real-world applications, the need for a solid understanding of distribution shifts becomes paramount, starting with the development of sophisticated benchmarks, say Benaich and Hogarth in the report.

Benaich believes it is difficult to pinpoint examples of specific real-world distribution shifts because organizations likely do not want the world to know that such problems are affecting them. One example is prices on web pages.

There is often a dynamic pricing engine powered by machine learning in the backend, and the output depends on how much information they have about you, Benaich noted. Depending on what data is used, the price of the product you are looking at can vary. Interestingly, it is precisely this practice that the Chinese market authority has cited.

Benaich pointed out that at least two important new datasets have been released to deal with distribution shifts: WILDS, developed by various American and Japanese universities and companies, and Shifts, developed by Yandex.

A more industry-minded use of data in academia ultimately means that academic projects will be more successful in production environments, because fewer distribution shifts occur when moving from industry to academia and back, Benaich noted.

Google researchers define data cascades as “compounding events causing negative, downstream effects from data issues”. Supported by a survey of 53 experts from the United States, India and East and West African countries, they warn that current practices undervalue data quality and lead to data cascades.

The domino effect is a pretty intuitive idea. If you have a problem at the beginning, it will likely have grown by the time you hit the last domino. It is noteworthy that the vast majority of data scientists report having experienced one of these problems.

When attempting to attribute the causes of these problems, the main reasons given were a failure to recognize the importance of data in the context of their AI work, a lack of technical training, or a lack of access to sufficiently specialized data for the specific problem they were solving.

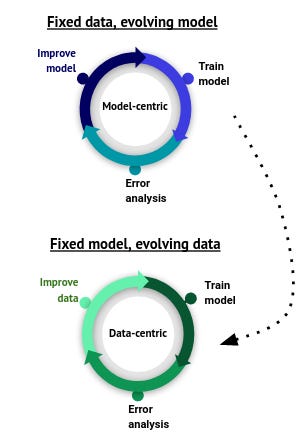

This suggests that there is more nuance in the world of machine learning than “good data” and “bad data”. Since data sets are multi-layered, different subsets are used in different contexts, and different versions evolve, context is key to defining data quality. Findings from machine learning in production are leading to a shift in focus from model-centric AI to data-centric AI.

Data-centric AI is a concept developed at Hazy Research, Chris Ré’s research group at Stanford. As mentioned earlier, the importance of data is not new; there are well-established mathematical, algorithmic, and systems techniques for working with data that span decades.

What is new is how these techniques can be combined and revisited in the light of modern AI models and methods. Just a few years ago, we had neither long-lived AI systems nor the current generation of powerful deep models.