Machine learning is now a powerful tool for solving computer vision problems such as image classification, segmentation and processing. Most of these applications train a neural network in a supervised fashion, which requires labels. Labels are available by construction for synthetically generated data; for real data they are typically determined by human interpretation. The greatest challenge is that a neural network trained on such synthetic data does not always generalize to real data, i.e. the target data.

A model trained on synthetic data can be made to work better on real data, but this requires careful construction of the training set, including realistic noise and some features taken from the real dataset. In practice, synthetic and real data are drawn from different distributions (synthetic data can, for example, be generated by a GAN as a function of a latent space), whereas the success of a neural network depends on both datasets coming from the same distribution. Training the network directly on real data would give a better model overall, but such models are only as good as the accuracy of the labels, which are assigned manually by humans or by automated, unsupervised algorithms. Hence the problem persists.

Domain Adaptation

To bridge the gap between the source data (training data) and the target data (test data), a concept called domain adaptation is used. It is the ability to apply an algorithm trained on one or more source domains to a different target domain. It is a subcategory of transfer learning. In domain adaptation, the source and target data share the same feature space but have different distributions, whereas transfer learning also includes cases in which the target feature space differs from the source feature space.

The change in distribution is commonly referred to as domain shift or distribution shift, and it can be as simple as a change in the data. Suppose we trained a model to diagnose the diseases known at the time and then apply it to new, unlabeled data to detect COVID-19. This change in the data is a domain shift.
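As a hedged illustration of domain shift, the toy sketch below trains a nearest-centroid classifier on a two-class source domain and evaluates it on a target domain that shares the same 2-D feature space but is shifted by a constant offset. The Gaussian data, the offset and the helper names (`make_domain`, `fit_centroids`, `predict`) are assumptions made for this example only; they are unrelated to the disease or MNIST data discussed in this article.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_domain(n, offset):
    """Two Gaussian classes in the same 2-D feature space, shifted by `offset`."""
    x0 = rng.normal(loc=[-2.0, 0.0], scale=1.0, size=(n, 2))  # class 0
    x1 = rng.normal(loc=[2.0, 0.0], scale=1.0, size=(n, 2))   # class 1
    x = np.vstack([x0, x1]) + np.asarray(offset)
    y = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])
    return x, y

def fit_centroids(x, y):
    """Nearest-centroid 'training': one mean vector per class."""
    return np.stack([x[y == c].mean(axis=0) for c in (0, 1)])

def predict(centroids, x):
    """Assign each sample to the class of its closest centroid."""
    dist = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    return dist.argmin(axis=1)

# Source and target share the feature space but not the distribution.
x_src, y_src = make_domain(500, offset=[0.0, 0.0])
x_tgt, y_tgt = make_domain(500, offset=[3.0, 0.0])

centroids = fit_centroids(x_src, y_src)  # trained on labeled source data only
acc_src = (predict(centroids, x_src) == y_src).mean()
acc_tgt = (predict(centroids, x_tgt) == y_tgt).mean()
print(f"source accuracy: {acc_src:.3f}, target accuracy: {acc_tgt:.3f}")
```

With this setup the classifier separates the source classes almost perfectly but misclassifies most of one class in the shifted target domain, which is the kind of gap domain adaptation tries to close.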

Types of Domain Adaptation

There are several domain adaptation settings, which differ in the information available for the target task. In unsupervised domain adaptation, the training data includes a set of labeled source samples, a set of unlabeled source samples, and a set of unlabeled target samples. In semi-supervised domain adaptation, a small number of labeled target examples is available in addition to the unlabeled target examples. In supervised domain adaptation, all examples are assumed to be labeled.

A trained neural network generalizes well when the target data is well represented by the source data. To achieve this, researchers from King Abdullah University of Science and Technology, Saudi Arabia, proposed an approach called Direct Domain Adaptation (DDA). The method injects the properties of the real dataset into the synthetic training data through a combination of linear operations, namely cross-correlation, autocorrelation and convolution, between the source and target input features.

These operations bring the distributions of the source and target input features closer to each other, which helps the trained model generalize in the inference stage. The process is fully explicit and leaves the training procedure and the network architecture untouched: the method operates purely in the data domain.
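To make the idea of combining cross-correlation, autocorrelation and convolution more concrete, here is a minimal NumPy sketch of one way such a data-domain transform could look. It is not the authors' implementation. It assumes that each image is cross-correlated with a reference from its own domain and then convolved with the mean autocorrelation of the other domain, with everything done circularly in the Fourier domain. The function names, the choice of the domain mean image as reference, the per-image rescaling and the random stand-in data are all assumptions for illustration.

```python
import numpy as np

def mean_autocorrelation_spectrum(images):
    """Mean power spectrum |FFT|^2 over a stack of images, i.e. the
    Fourier transform of their mean (circular) autocorrelation."""
    spectra = np.fft.fft2(images, axes=(-2, -1))
    return (np.abs(spectra) ** 2).mean(axis=0)

def dda_like_transform(images, own_reference, other_domain_images):
    """Hypothetical DDA-style transform for a stack of shape (n, h, w)."""
    x = np.fft.fft2(images, axes=(-2, -1))
    ref = np.fft.fft2(own_reference)
    # Circular cross-correlation with the same-domain reference
    # (correlation theorem: multiply by the conjugate spectrum).
    cross = x * np.conj(ref)
    # Circular convolution with the other domain's mean autocorrelation
    # (convolution theorem: multiply the spectra).
    out = cross * mean_autocorrelation_spectrum(other_domain_images)
    out = np.fft.ifft2(out, axes=(-2, -1)).real
    # Assumed normalization: rescale each image by its largest magnitude.
    scale = np.abs(out).max(axis=(-2, -1), keepdims=True)
    return out / (scale + 1e-12)

# Random arrays standing in for single-channel source and target images.
rng = np.random.default_rng(0)
source = rng.random((8, 28, 28))
target = 0.5 * rng.random((8, 28, 28)) + 0.25

# Training stage: source inputs receive target-domain statistics.
train_inputs = dda_like_transform(source, source.mean(axis=0), target)
# Inference stage: target inputs receive source-domain statistics.
test_inputs = dda_like_transform(target, target.mean(axis=0), source)
print(train_inputs.shape, test_inputs.shape)
```

Because the transform only touches the input arrays, any classifier can be trained on `train_inputs` and applied to `test_inputs` without changing its architecture, which matches the data-domain character of the method described above.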

Architecture of DDA

With this method, a simple CNN trained on MNIST and validated on the MNIST-M dataset reaches close to 70% accuracy. The transformations Ts and Tt reduce the difference between the data distributions of the source and target datasets, using the methodology described below, and provide the neural network (NN) with new input features. The network is trained on these features to reduce the loss L and is then applied to the real dataset. P denotes the probability distribution, shown schematically for the source and target data using samples from the MNIST and MNIST-M datasets.

Methodology of DDA

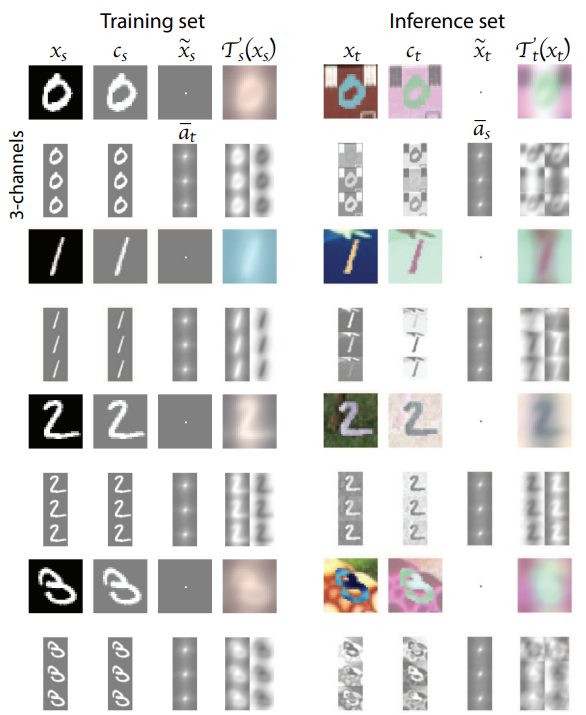

The methodology is best explained with the help of the picture above.

Adaptation Process

When DDA is applied to the MNIST and MNIST-M datasets, the picture shows its effect on example images of the digits 0 to 3. For each digit, the left column shows the transformation of the source sample and the right column shows the target sample as used in the inference stage. The color images are in the upper row, and the corresponding individual RGB channels, which are the actual input to the classification network, are in the lower row. On close inspection, the final transformed input features of the source and target data look similar, and this similarity is even more apparent in the three individual channels, which are the real inputs.

Evaluation of DDA

Notebooks in the official DDA repository examine the performance of various configurations when transferring features from MNIST to the MNIST-M dataset. The results of these experiments are given below and show the accuracy on both datasets for the standard CNN and for the CNN with DDA.

| | CNN | CNN+DDA |
| --- | --- | --- |
| MNIST | 0.996 | 0.990 |
| MNIST-M | 0.344 | 0.734 |
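The trade-off in these numbers can be made explicit with a little arithmetic. The short sketch below only restates the reported accuracies and computes the change that DDA brings on each dataset; the dictionary layout and variable names are chosen for this example.

```python
# Accuracies as reported in the table above.
accuracy = {
    "MNIST": {"CNN": 0.996, "CNN+DDA": 0.990},
    "MNIST-M": {"CNN": 0.344, "CNN+DDA": 0.734},
}

# Change in accuracy when DDA is added, per dataset.
change = {
    dataset: scores["CNN+DDA"] - scores["CNN"]
    for dataset, scores in accuracy.items()
}

for dataset, delta in change.items():
    print(f"{dataset}: {delta:+.3f}")
# MNIST: -0.006
# MNIST-M: +0.390
```

In other words, DDA costs about 0.6 percentage points on the source dataset and gains about 39 percentage points on the target dataset.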

Conclusion

DDA is a direct and explicit method for preconditioning the input features for supervised neural network training, so that the trained model works as well as possible on the available label-free test data. It incorporates features of the test data into training without changing the source input features that are most important for prediction. For a practical implementation of DDA, you can consult the notebooks in the official repository.